官方SDK https://github.com/slightech/MYNT-EYE-SDK

WINDOS 下配置http://blog.csdn.net/u013749068/article/details/79463736

ORB-SLAM2 https://github.com/slightech/MYNT-EYE-ORB-SLAM2-Sample

每个版本SDK下载: https://pan.baidu.com/s/1i5REqVz#list/path=%2F&parentPath=%2F

查看

ls /dev/video*

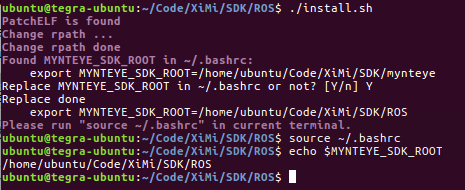

1 TX1安装记录

1 安装基本依赖

2 下载SDK

3 安装教程 https://slightech.github.io/MYNT-EYE-SDK/getting_started_tegra.html

从 Install SDK 开始 ,第一个kuda自带

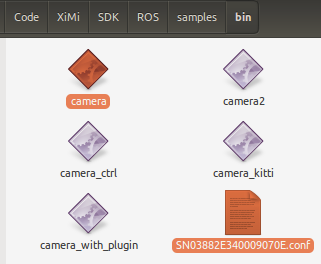

4 运行示例

环境 配置普通tx1版的 opencv3.2

在路径ubuntu@tegra-ubuntu:~/Code/XiMi/SDK/mynteye/samples$

./samples/bin/camera [name]

[name] 为空 自动选择摄像头

4.1 测试左右视图

- 自动选择摄像头

- 使用HUB,因为USB问题,可能读不出來,换一个

- 查看USB ls /dev/video*

4.2 测试深度图

./samples/bin/camera2

2ORB-SLAM2

我们创建了一个stereo_mynteye在ORB-SLAM2中命名的样本,以展示如何使用我们的MYNT EYE相机。

在运行stereo_mynteyeORB-SLAM2之前,请按照以下步骤操作:

-

在这里下载MYNT EYE SDK 并按照教程进行安装。

-

按照正常程序安装ORB-SLAM2。

- $cd

stereo_mynteyeORB-SLAM2

$chmod +x build.sh

$./build.sh -

校准MYNT EYE相机并更改参数

cam_stereo.yaml。 -

运行

stereo_mynteye,$ ./Examples/Stereo/mynt-eye-orb-slam2-sample ./Vocabulary/ORBvoc.txt ./Examples/Stereo/cam_stereo.yaml 1./Vocabulary/ORBvoc.txt是词汇文件./Examples/Stereo/cam_stereo.yaml的路径,是相机配置文件的路径,1代表video1。 -

你可以通过这里的视频了解运行效果。

在文件夹

ubuntu@tegra-ubuntu:~/Code/XiMi/MYNT-EYE-ORB-SLAM2-Sample-mynteye/Examples/Stereo$

./stereo_mynteye ./ORBvoc.txt ./cam_stereo.yaml 0

3ROS

1必须安装ROS 版的SDK

下载地址https://pan.baidu.com/s/1i5REqVz#list/path=%2FMYNT-EYE-SDK%2F1.x%2F1.8&parentPath=%2F

2Build SDK Samples

3 运行实例

./samples/bin/camera

需要一个相机标定文件,在博文最后自己标定

SN03882E340009070E.conf

4在ROS中打开 发布左右图 深度图 IMU

4.1 下载ROS测试包

地址 https://github.com/slightech/MYNT-EYE-ROS-Wrapper

新建一个ROS包,下载

git clone https://github.com/slightech/MYNT-EYE-ROS-Wrapper.git

4.2 编译

catkin_make

source ./devel/setup.bash

4.3 根据相机USB口修改打开0还是1

修改launchu 文件 0 usb

<param name="device_name" type="int" value="0" />

4.4 开始运行实例

# Run MYNT EYE camera node, and open Rviz to display

$ roslaunch mynteye_ros_wrapper mynt_camera_display.launch

# Run MYNT EYE camera node

$ roslaunch mynteye_ros_wrapper mynt_camera.launch

# Or,

$ rosrun mynteye_ros_wrapper mynteye_wrapper_node

ROS 标定参数

%YAML:1.0

M1: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 7.0670498518636600e+002, 0., 3.7297901043077900e+002, 0.,

7.0666416610920203e+002, 2.1240374979570200e+002, 0., 0., 1. ]

D1: !!opencv-matrix

rows: 1

cols: 14

dt: d

data: [ -4.6388704908098000e-001, 2.3425087249454701e-001, 0., 0., 0.,

0., 0., 0., 0., 0., 0., 0., 0., 0. ]

M2: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 7.0503556956133104e+002, 0., 4.0450936863506502e+002, 0.,

7.0491472237897597e+002, 2.2503054543868200e+002, 0., 0., 1. ]

D2: !!opencv-matrix

rows: 1

cols: 14

dt: d

data: [ -4.6174421404117999e-001, 2.1536606632286900e-001, 0., 0., 0.,

0., 0., 0., 0., 0., 0., 0., 0., 0. ]

R: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 9.9997459395392996e-001, -3.3977699515345002e-003,

-6.2663072083021200e-003, -3.3890405418889199e-003,

9.9999327274544803e-001, 1.4031635874333700e-003,

-6.2710326803329004e-003, -1.3818911694177701e-003,

9.9997938205040904e-001 ]

T: !!opencv-matrix

rows: 3

cols: 1

dt: d

data: [ -1.1963887403661900e+002, -1.5522537096063099e-001,

-9.4210539328372297e-003 ]

SGM-双目深度图 ROS

#include <iostream>

#include <numeric>

#include <sys/time.h>

#include <vector>

#include <stdlib.h>

#include <typeinfo>

#include <opencv2/opencv.hpp>

//ROS

#include <ros/ros.h>

#include <image_transport/image_transport.h>

#include <opencv2/highgui/highgui.hpp>

#include <cv_bridge/cv_bridge.h>

//CUDA sgm

#include <numeric>

#include <stdlib.h>

#include <ctime>

#include <sys/types.h>

#include <stdint.h>

#include <linux/limits.h>

#include <dirent.h>

#include <iostream>

#include <fstream>

#include "disparity_method.h"

//MY-EYE

#include "camera.h"

#include "utility.h"

char command;

cv::Mat left,right;

void imageCallbackLeft(const sensor_msgs::ImageConstPtr &msg)

{

try

{

left = cv_bridge::toCvShare(msg,"bgr8")->image;

cv::imshow("left",left );

command=cv::waitKey(1);

if(command == ' ')

{

cv::imwrite("left.jpg",left);

cv::imwrite("right.jpg",right);

}

}

catch(cv_bridge::Exception &e)

{

}

}

void imageCallbackRight(const sensor_msgs::ImageConstPtr &msg)

{

try

{

right = cv_bridge::toCvShare(msg,"bgr8")->image;

cv::imshow("right",right);

//cv::waitKey(1);

}

catch(cv_bridge::Exception &e)

{

}

}

int main(int argc, char *argv[]) {

ros::init(argc, argv, "sgm_ros");

ros::NodeHandle nh("~");

image_transport::ImageTransport it(nh);

image_transport::Subscriber sub_left = it.subscribe("/left/image_rect_color", 1,imageCallbackLeft);

image_transport::Subscriber sub_right = it.subscribe("/right/image_rect_color", 1,imageCallbackRight);

//open camera

std::string name;

if (argc >= 2) {

name = argv[1];

} else {

bool found = false;

name = FindDeviceName(&found);

}

cout << "Open Camera: " << name << endl;

CalibrationParameters *calib_params = nullptr;

if (argc >= 3) {

stringstream ss;

ss << argv[2];

calib_params = new CalibrationParameters;

calib_params->Load(ss.str());

}

InitParameters init_params(name, calib_params);

Camera cam;

//cam.SetMode(Mode::MODE_CPU);

cam.Open(init_params);

if (calib_params)

delete calib_params;

if (!cam.IsOpened()) {

std::cerr << "Error: Open camera failed" << std::endl;

return 1;

}

cout << "�33[1;32mPress ESC/Q on Windows to terminate�33[0m

";

uint8_t p1 = 7;

uint8_t p2 = 84;

init_disparity_method(p1, p2);

ros::Rate loop_rate(50);

while(ros::ok())

{

ros::spinOnce();

loop_rate.sleep();

cv::Mat h_im0 = left.clone();

if(!h_im0.data) {

std::cerr << "Couldn't read the file " << std::endl;

continue;

}

cv::Mat h_im1 = right.clone();

if(!h_im1.data) {

std::cerr << "Couldn't read the file " << std::endl;

continue;

}

// Convert images to grayscale

if (h_im0.channels()>1) {

cv::cvtColor(h_im0, h_im0, CV_RGB2GRAY);

}

if (h_im1.channels()>1) {

cv::cvtColor(h_im1, h_im1, CV_RGB2GRAY);

}

if(h_im0.rows != h_im1.rows || h_im0.cols != h_im1.cols) {

std::cerr << "Both images must have the same dimensions" << std::endl;

return EXIT_FAILURE;

}

if(h_im0.rows % 4 != 0 || h_im0.cols % 4 != 0) {

std::cerr << "Due to implementation limitations image width and height must be a divisible by 4" << std::endl;

return EXIT_FAILURE;

}

// Compute

float elapsed_time_ms;

cv::Mat disparity_im = compute_disparity(h_im0, h_im1, &elapsed_time_ms);

std::cout << "matching time: " << elapsed_time_ms << std::endl;

const int type = disparity_im.type();

const uchar depth = type & CV_MAT_DEPTH_MASK;

if(depth == CV_8U) {

cv::imshow("disp",disparity_im);

//cv::imwrite("disp.jpg", disparity_im);

} else {

cv::Mat disparity16(disparity_im.rows, disparity_im.cols, CV_16UC1);

for(int i = 0; i < disparity_im.rows; i++) {

for(int j = 0; j < disparity_im.cols; j++) {

const float d = disparity_im.at<float>(i, j)*256.0f;

disparity16.at<uint16_t>(i, j) = (uint16_t) d;

}

}

cv::imshow("disp",disparity_im);

//cv::imwrite("disp.jpg", disparity16);

}

}

return 0;

}