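I have been looking for an apartment lately, mostly in the Douban group "上海租房" (Shanghai rentals), and found the group hard to search. So I decided to scrape the listings with a crawler, and to get more familiar with Python along the way.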

Here is a Scrapy getting-started tutorial that I followed: http://www.cnblogs.com/txw1958/archive/2012/07/16/scrapy-tutorial.html

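My project is almost the same as the one in the tutorial. The main technical difficulty is character encoding for Chinese text, which the tutorial does not cover in detail. I am posting my code anyway, for reference.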

    E:\tutorial>tree /f
    Folder PATH listing for volume 文档
    Volume serial number is 0003-BBB3
    E:.
    │  scrapy.cfg
    │
    └─tutorial
        │  items.py
        │  items.pyc
        │  pipelines.py
        │  pipelines.pyc
        │  settings.py
        │  settings.pyc
        │  __init__.py
        │  __init__.pyc
        │
        └─spiders
                douban_spider.py
                douban_spider.pyc
                __init__.py
                __init__.pyc

items.py: there is a good article introducing Scrapy's Item here (http://blog.csdn.net/iloveyin/article/details/41309609)

    from scrapy.item import Item, Field

    class DoubanItem(Item):
        title = Field()
        link = Field()
        #resp = Field()
        #dateT = Field()

pipelines.py: define your own pipeline here. This is also where the Chinese encoding problem can be handled.

    # -*- coding: utf-8 -*-

    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html
    import json
    import codecs

    class TutorialPipeline(object):

        def __init__(self):
            # Open the output file with an explicit encoding (GBK here).
            self.file = codecs.open('items.json', 'wb', encoding='gbk')

        def process_item(self, item, spider):
            # json.dumps escapes non-ASCII text as \uXXXX by default.
            line = json.dumps(dict(item)) + '\n'
            print line
            # Turn the \uXXXX escapes back into readable Chinese before writing.
            self.file.write(line.decode("unicode_escape"))
            return item
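
The encoding step in the pipeline above can be illustrated on its own. The following is only a minimal sketch, assuming Python 3 (the code in this post is Python 2) and using a made-up item with a hypothetical link. It shows that `json.dumps` escapes Chinese text by default, and that passing `ensure_ascii=False` keeps it readable without the `unicode_escape` round trip. The helper name `to_json_line` is my own, not part of Scrapy.

```python
import json


def to_json_line(item, ensure_ascii=False):
    """Serialize one item dict to a single line of JSON text."""
    return json.dumps(item, ensure_ascii=ensure_ascii) + "\n"


# Hypothetical sample item; the link is a placeholder, not a real topic.
item = {"title": ["上海租房"], "link": ["http://www.douban.com/group/topic/1/"]}

escaped = to_json_line(item, ensure_ascii=True)   # Chinese becomes \uXXXX escapes
readable = to_json_line(item)                     # Chinese is kept as-is

print(escaped)
print(readable)
```

Both lines decode back to the same item, so the choice only affects how readable the file is. When writing `readable` to disk, the file would still need to be opened with an explicit encoding such as UTF-8 or GBK.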

In settings.py, add the corresponding ITEM_PIPELINES setting (the ITEM_PIPELINES entry is the newly added part):

    # -*- coding: utf-8 -*-

    # Scrapy settings for tutorial project
    #
    # For simplicity, this file contains only the most important settings by
    # default. All the other settings are documented here:
    #
    #     http://doc.scrapy.org/en/latest/topics/settings.html
    #

    BOT_NAME = 'tutorial'

    SPIDER_MODULES = ['tutorial.spiders']
    NEWSPIDER_MODULE = 'tutorial.spiders'

    ITEM_PIPELINES = {
        'tutorial.pipelines.TutorialPipeline': 300
    }

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    #USER_AGENT = 'tutorial (+http://www.yourdomain.com)'

Next is the spider, douban_spider.py:

    from scrapy.spider import BaseSpider
    from scrapy.selector import HtmlXPathSelector
    from scrapy.http import Request
    from tutorial.items import DoubanItem

    class DoubanSpider(BaseSpider):
        name = "douban"
        allowed_domains = ["douban.com"]
        start_urls = [
            "http://www.douban.com/group/shanghaizufang/discussion?start=0",
            "http://www.douban.com/group/shanghaizufang/discussion?start=25",
            "http://www.douban.com/group/shanghaizufang/discussion?start=50",
            "http://www.douban.com/group/shanghaizufang/discussion?start=75",
            "http://www.douban.com/group/shanghaizufang/discussion?start=100",
            "http://www.douban.com/group/shanghaizufang/discussion?start=125",
            "http://www.douban.com/group/shanghaizufang/discussion?start=150",
            "http://www.douban.com/group/shanghaizufang/discussion?start=175",
            "http://www.douban.com/group/shanghaizufang/discussion?start=200"
        ]

        def parse(self, response):
            hxs = HtmlXPathSelector(response)
            sites = hxs.xpath('//tr/td')
            items = []
            for site in sites:
                item = DoubanItem()
                item['title'] = site.xpath('a/@title').extract()
                item['link'] = site.xpath('a/@href').extract()
                # item['resp'] = site.xpath('text()').extract()
                # item['dateT'] = site.xpath('text()').extract()
                items.append(item)
            return items
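
The nine entries in `start_urls` differ only in the `start` offset, which grows by 25 per page. As a small sketch (not what I actually ran), the list could be generated instead of typed out. The names `BASE_URL` and `build_start_urls` are my own, and the default page count simply mirrors the nine pages listed above.

```python
# URL template taken from the spider; only the start offset changes per page.
BASE_URL = "http://www.douban.com/group/shanghaizufang/discussion?start={}"


def build_start_urls(pages=9, page_size=25):
    """Return one listing URL per page, stepping the offset by page_size."""
    return [BASE_URL.format(page * page_size) for page in range(pages)]


start_urls = build_start_urls()
for url in start_urls:
    print(url)
```

Inside the spider class this would replace the literal list, for example `start_urls = build_start_urls()`, and crawling more pages becomes a matter of changing `pages`.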

Export the data as JSON:

scrapy crawl douban -o items.json -t json

There is a website with a JSON-to-CSV conversion tool that can help with the conversion:

https://json-csv.com/

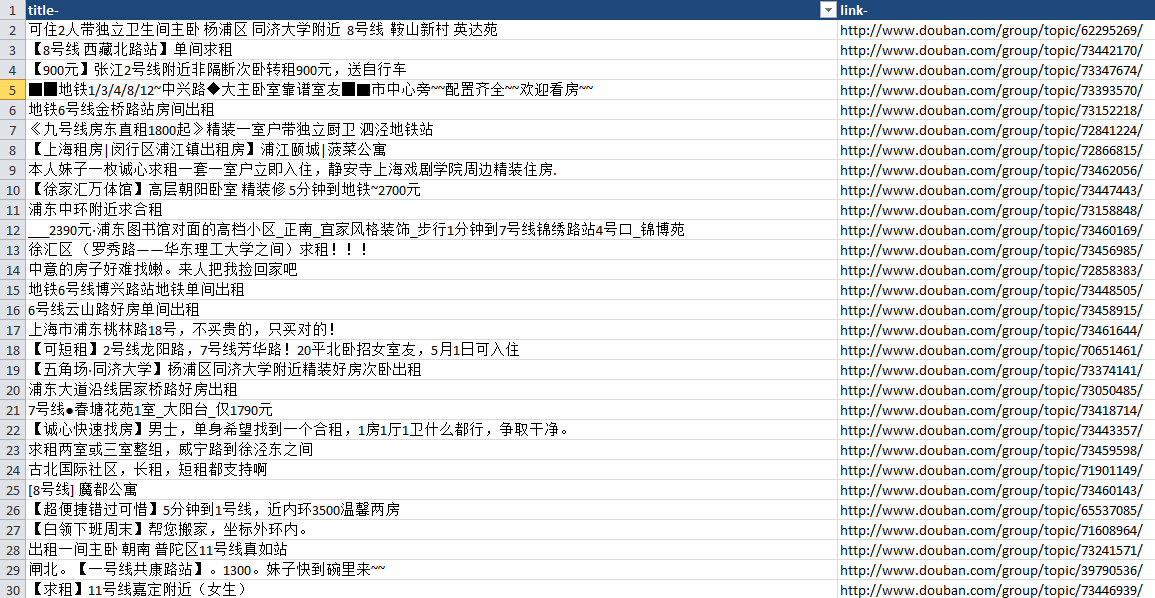

The screenshot above shows the result. In this form the listings are easy to search and filter.
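
The JSON-to-CSV step could also be done locally instead of through the website. The following is a rough sketch, assuming Python 3 and assuming the export is a JSON array in which `title` and `link` are lists (which is what `extract()` returns in the spider). Cells without a link produce empty items, so those are skipped. The function name `items_to_csv`, the `keyword` parameter, and the sample data with example.com links are all made up for illustration.

```python
import csv
import io
import json


def items_to_csv(json_text, keyword=None):
    """Convert a JSON array of scraped items to CSV text.

    Items with an empty title or link are dropped. If keyword is given,
    only titles containing it are kept.
    """
    rows = []
    for item in json.loads(json_text):
        title = "".join(item.get("title", []))
        link = "".join(item.get("link", []))
        if not title or not link:
            continue  # table cell that contained no topic link
        if keyword and keyword not in title:
            continue
        rows.append((title, link))

    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["title", "link"])
    writer.writerows(rows)
    return out.getvalue()


# Hypothetical sample data in the assumed export shape.
sample = json.dumps([
    {"title": ["浦东 一室户"], "link": ["http://example.com/1"]},
    {"title": [], "link": []},
    {"title": ["徐汇 合租"], "link": ["http://example.com/2"]},
])

print(items_to_csv(sample))
print(items_to_csv(sample, keyword="徐汇"))
```

The `keyword` filter is only a convenience for the kind of searching mentioned above; the CSV can just as well be opened in a spreadsheet and filtered there.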