使用eclipse开发MapReduce项目更加方便(使用hadoop插件)

插件和window编译程序下载地址:链接:https://pan.baidu.com/s/1iXp3MeiE8pXS3QevDJ24kw 提取码:mzye

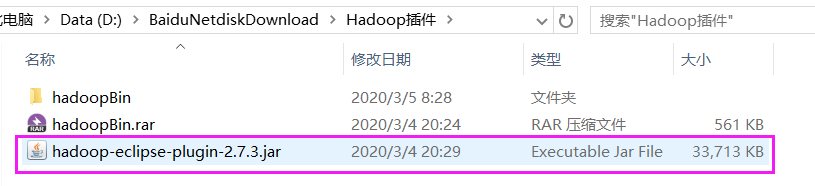

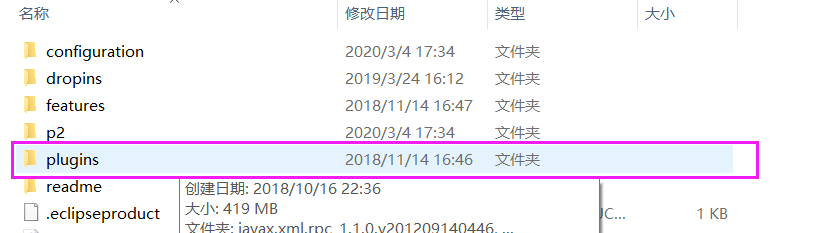

1.把插件jar包放到eclipse目录的plugins下面

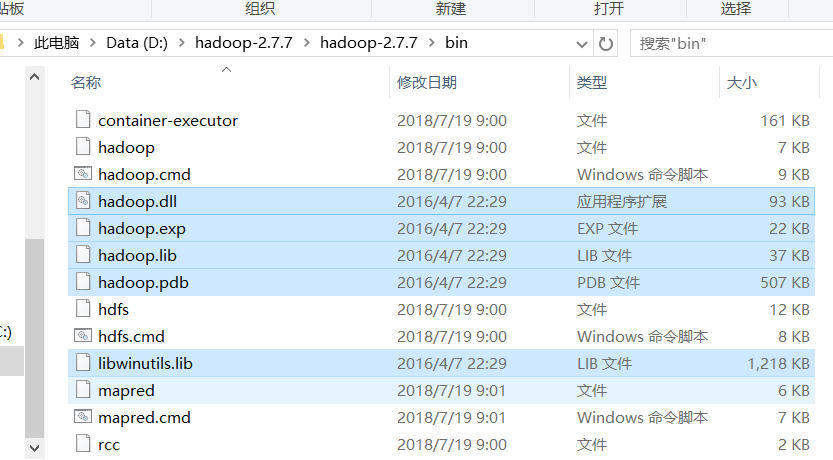

2.将Window编译后的hadoop文件放到hadoop的bin目录下

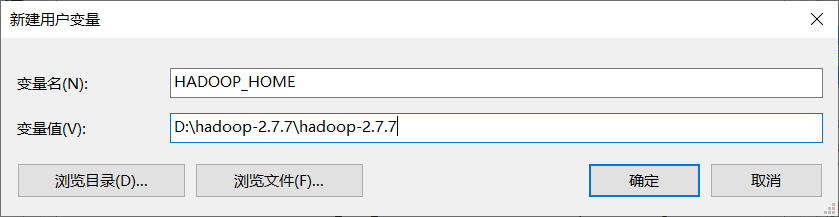

3.添加环境变量支持

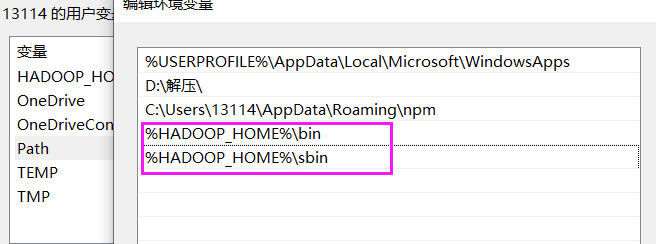

4.修改hdfs-site.xml的配置

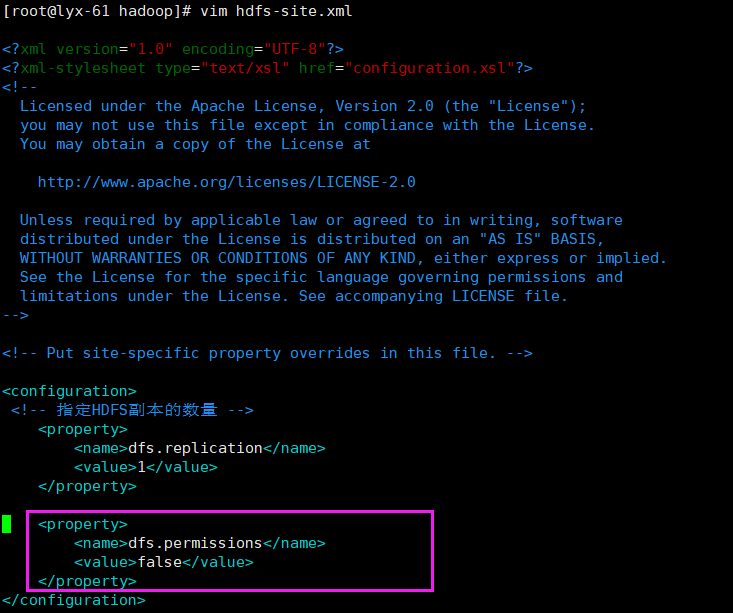

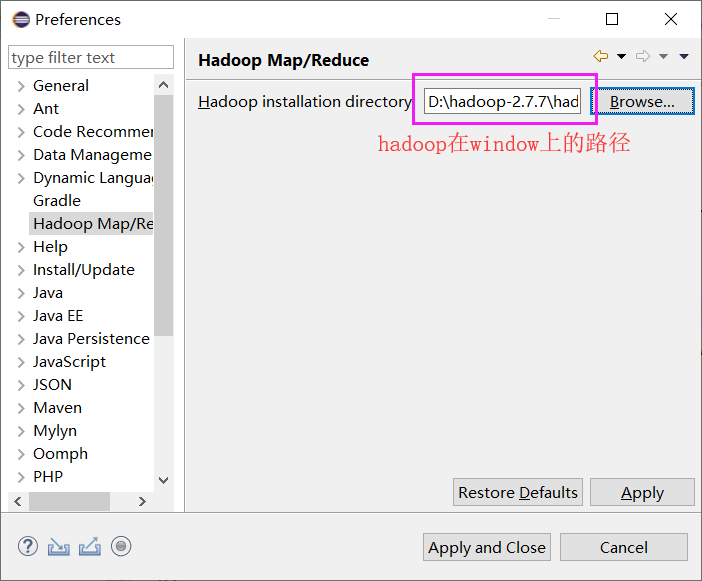

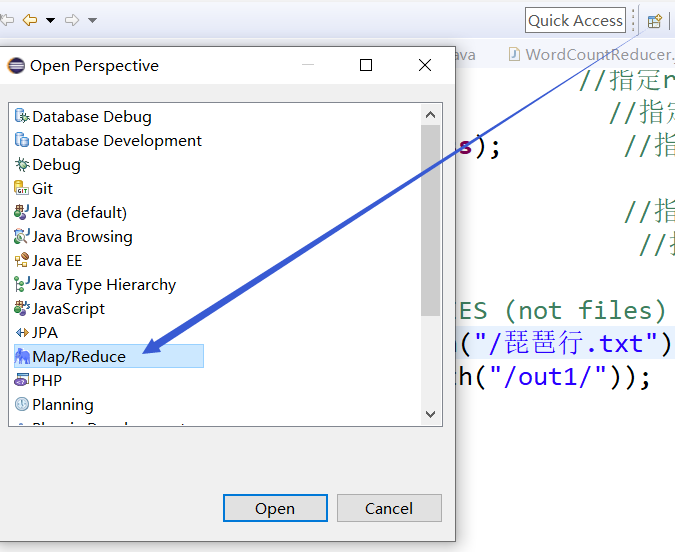

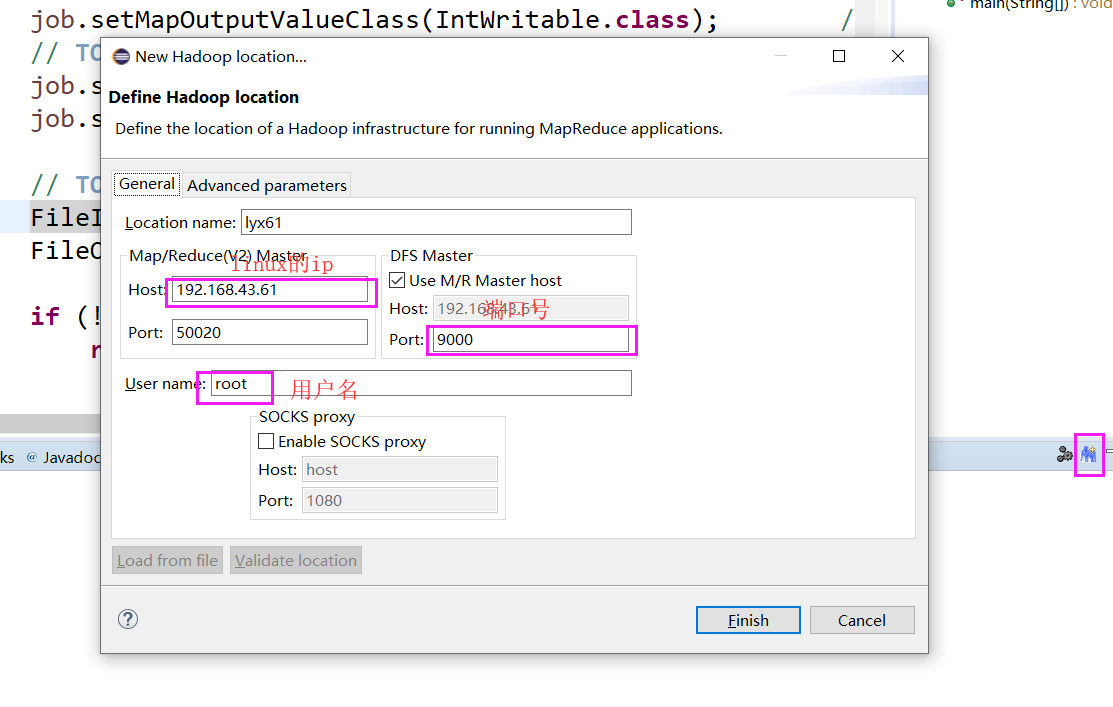

5.eclipse上配置

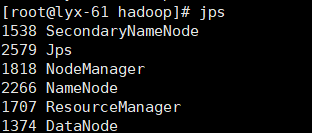

需要先打开虚拟机上的hadoop服务

然后才能连上去

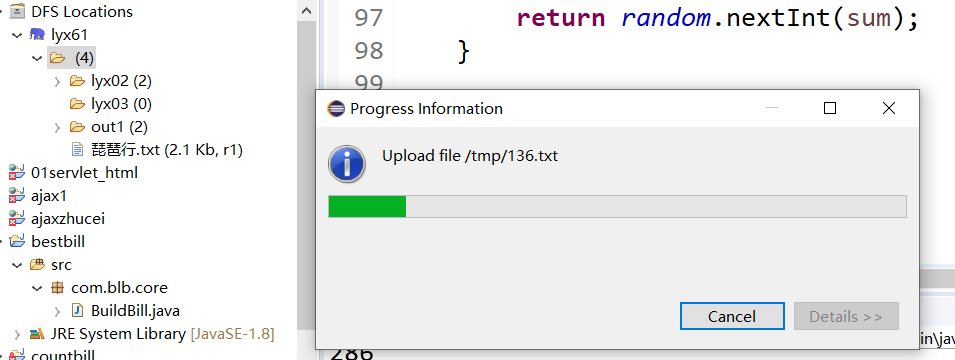

6.准备要分析的数据并且上传到hdfs 会在D盘的tmp文件下生成1-300.txt 里面就是要分析的数据

package com.blb.core;

import java.io.BufferedWriter;

import java.io.File;

import java.io.FileNotFoundException;

import java.io.FileOutputStream;

import java.io.IOException;

import java.io.OutputStreamWriter;

import java.util.ArrayList;

import java.util.List;

import java.util.Random;

/**

* 300户 每户都会有一个清单文件

* 商品是随机 数量也是随机

* 洗漱用品 脸盆、杯子、牙刷和牙膏、毛巾、肥皂(洗衣服的)以及皂盒、洗发水和护发素、沐浴液 [1-5之间]

* 床上用品 比如枕头、枕套、枕巾、被子、被套、棉被、毯子、床垫、凉席 [0 1之间]

* 家用电器 比如电磁炉、电饭煲、吹风机、电水壶、豆浆机、台灯等 [1-3之间]

* 厨房用品 比如锅、碗、瓢、盆、灶 [1-2 之间]

* 柴、米、油、盐、酱、醋 [1-6之间]

* 要生成300个文件 命名规则 1-300来表示

* @author Administrator

*

*/

public class BuildBill {

private static Random random=new Random(); //要还是不要

private static List<String> washList=new ArrayList<>();

private static List<String> bedList=new ArrayList<>();

private static List<String> homeList=new ArrayList<>();

private static List<String> kitchenList=new ArrayList<>();

private static List<String> useList=new ArrayList<>();

static{

washList.add("脸盆");

washList.add("杯子");

washList.add("牙刷");

washList.add("牙膏");

washList.add("毛巾");

washList.add("肥皂");

washList.add("皂盒");

washList.add("洗发水");

washList.add("护发素");

washList.add("沐浴液");

///////////////////////////////

bedList.add("枕头");

bedList.add("枕套");

bedList.add("枕巾");

bedList.add("被子");

bedList.add("被套");

bedList.add("棉被");

bedList.add("毯子");

bedList.add("床垫");

bedList.add("凉席");

//////////////////////////////

homeList.add("电磁炉");

homeList.add("电饭煲");

homeList.add("吹风机");

homeList.add("电水壶");

homeList.add("豆浆机");

homeList.add("电磁炉");

homeList.add("台灯");

//////////////////////////

kitchenList.add("锅");

kitchenList.add("碗");

kitchenList.add("瓢");

kitchenList.add("盆");

kitchenList.add("灶 ");

////////////////////////

useList.add("米");

useList.add("油");

useList.add("盐");

useList.add("酱");

useList.add("醋");

}

//确定要还是不要 1/2

private static boolean iswant()

{

int num=random.nextInt(1000);

if(num%2==0)

{

return true;

}

else

{

return false;

}

}

/**

* 表示我要几个

* @param sum

* @return

*/

private static int wantNum(int sum)

{

return random.nextInt(sum);

}

//生成300个清单文件 格式如下

//输出的文件的格式 一定要是UTF-8

//油 2

public static void main(String[] args) {

for(int i=1;i<=300;i++)

{

System.out.println(i);

try {

//字节流

FileOutputStream out=new FileOutputStream(new File("D:\tmp\"+i+".txt"));

//转换流 可以将字节流转换字符流 设定编码格式

//字符流

BufferedWriter writer=new BufferedWriter(new OutputStreamWriter(out,"UTF-8"));

//随机一下 我要不要 随机一下 要几个 再从我们的清单里面 随机拿出几个来 数量

boolean iswant1=iswant();

if(iswant1)

{

//我要几个 不能超过该类商品的总数目

int wantNum = wantNum(washList.size()+1);

//3

for(int j=0;j<wantNum;j++)

{

String product=washList.get(random.nextInt(washList.size()));

writer.write(product+" "+(random.nextInt(5)+1));

writer.newLine();

}

}

boolean iswant2=iswant();

if(iswant2)

{

//我要几个 不能超过该类商品的总数目

int wantNum = wantNum(bedList.size()+1);

//3

for(int j=0;j<wantNum;j++)

{

String product=bedList.get(random.nextInt(bedList.size()));

writer.write(product+" "+(random.nextInt(1)+1));

writer.newLine();

}

}

boolean iswant3=iswant();

if(iswant3)

{

//我要几个 不能超过该类商品的总数目

int wantNum = wantNum(homeList.size()+1);

//3

for(int j=0;j<wantNum;j++)

{

String product=homeList.get(random.nextInt(homeList.size()));

writer.write(product+" "+(random.nextInt(3)+1));

writer.newLine();

}

}

boolean iswant4=iswant();

if(iswant4)

{

//我要几个 不能超过该类商品的总数目

int wantNum = wantNum(kitchenList.size()+1);

//3

for(int j=0;j<wantNum;j++)

{

String product=kitchenList.get(random.nextInt(kitchenList.size()));

writer.write(product+" "+(random.nextInt(2)+1));

writer.newLine();

}

}

boolean iswant5=iswant();

if(iswant5)

{

//我要几个 不能超过该类商品的总数目

int wantNum = wantNum(useList.size()+1);

//3

for(int j=0;j<wantNum;j++)

{

String product=useList.get(random.nextInt(useList.size()));

writer.write(product+" "+(random.nextInt(6)+1));

writer.newLine();

}

}

writer.flush();

writer.close();

} catch (FileNotFoundException e) {

// TODO Auto-generated catch block

e.printStackTrace();

} catch (IOException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

}

}

}

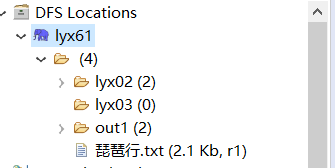

生成的文件上传到hdfs

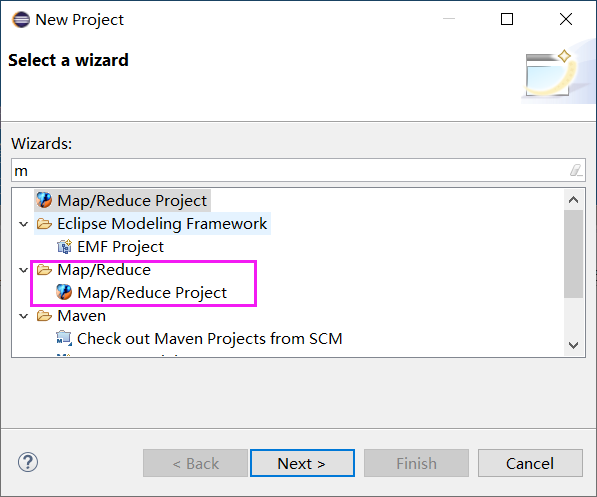

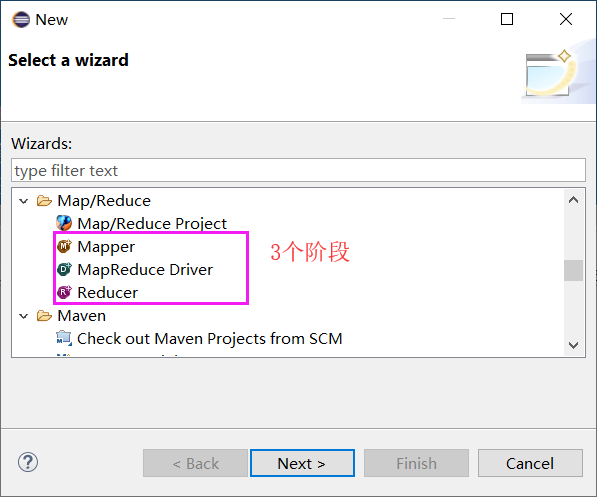

7.开始写MapReduce程序

创建一个MapReduce项目

map阶段

package com.blb.lyx;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

public class GoodCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

public void map(LongWritable ikey, Text ivalue, Context context) throws IOException, InterruptedException {

//读取一行的文件

String line = ivalue.toString();

//进行字符串的切分

String[] split = line.split(" ");

//写入

context.write(new Text(split[0]), new IntWritable(Integer.parseInt(split[1])));

}

}

reduce阶段

package com.blb.lyx;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

public class GoodCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

public void reduce(Text _key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {

int sum = 0;

for (IntWritable val : values) {

//将IntWritable转换为Int类型

int i = val.get();

sum += i;

}

context.write(_key, new IntWritable(sum));

}

}

job阶段

package com.blb.lyx;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class GoodCountDriver {

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

//配置服务器的端口和地址

conf.set("fs.defaultFS", "hdfs://192.168.43.61:9000");

Job job = Job.getInstance(conf, "CountDriver");

job.setJarByClass(GoodCountDriver.class);

// TODO: specify a mapper

job.setMapperClass(GoodCountMapper.class);

// TODO: specify a reducer

job.setReducerClass(GoodCountReducer.class);

//如果reducer的key类型和map的key类型一样,可以不写map的key类型

//如果reduce的value类型和map的value类型一样,可以不写map的value类型

// TODO: specify output types

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

// TODO: specify input and output DIRECTORIES (not files)

FileInputFormat.setInputPaths(job, new Path("/tmp/"));

FileOutputFormat.setOutputPath(job, new Path("/out2/"));

if (!job.waitForCompletion(true))

return;

}

}

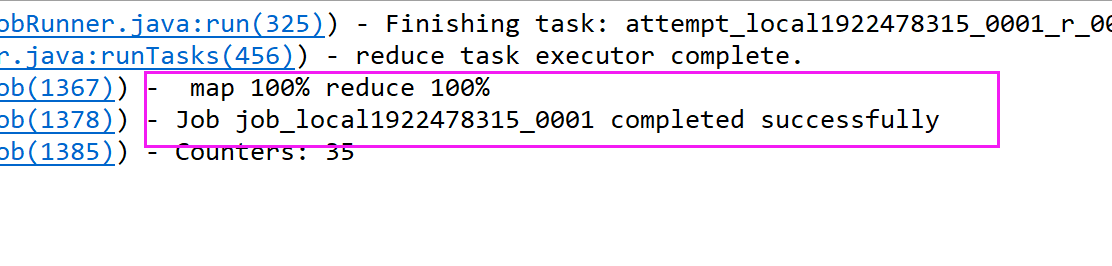

8.运行项目 主要运行在hadoop上 Run on Hadoop

运行成功

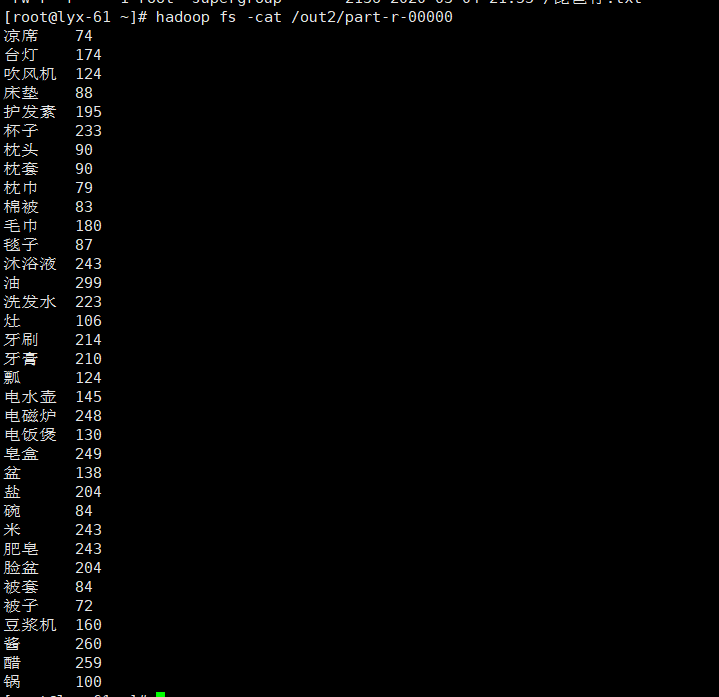

查看结果