problems_hive

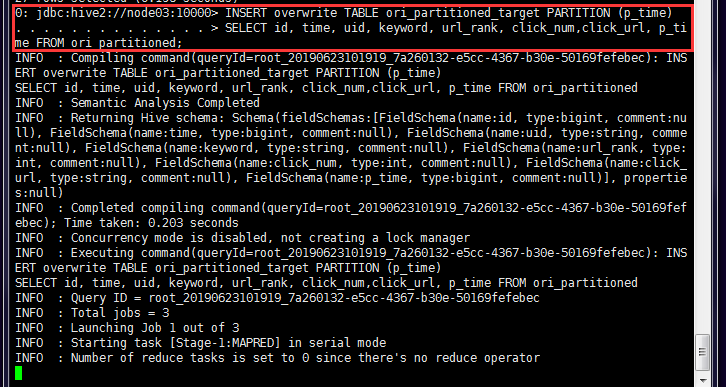

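1 Hive hangs when executing the statement shown in the screenshot above

Cause: YARN was not running. Hive has to submit a MapReduce job to YARN to compute the result.

RCA: after starting YARN earlier, I did not run jps to confirm that the daemons were actually up. The startup had in fact failed; the exact reason is unknown: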

[root@node01 ~]# start-yarn.sh

starting yarn daemons

resourcemanager running as process 3220. Stop it first.

solution: restart DFS, YARN, and the JobHistory server, then confirm with jps that the daemons are running.
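The missed jps check can be turned into a small script. The following is a minimal sketch, not a definitive health check: `missing_daemons` is a hypothetical helper, and the list of expected daemon names is an assumption that depends on which roles the node actually hosts.

```shell
#!/bin/sh
# Sketch: report which expected Hadoop daemons are absent from jps output.
# missing_daemons is a hypothetical helper. It reads jps-style lines
# ("<pid> <ClassName>") on stdin and takes the expected names as arguments.
missing_daemons() {
    jps_output=$(cat)
    for daemon in "$@"; do
        # Require the daemon name to be the whole second field of some line.
        if ! printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            printf '%s\n' "$daemon"
        fi
    done
}

# On a real node (assumed daemon list):
#   jps | missing_daemons NameNode DataNode ResourceManager NodeManager
# Sample data standing in for jps output on a node where YARN did not start:
printf '2101 NameNode\n2250 DataNode\n4012 Jps\n' |
    missing_daemons NameNode DataNode ResourceManager NodeManager
```

An empty result means every expected daemon was found. Any printed name is a daemon to investigate before submitting Hive queries.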

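2 Running the jar fails: no main manifest attribute, in udfFull.jar

Run in a Windows cmd window: java -jar udfFull.jar {"movie":"1287","rate":"5","timeStamp":"978302039","uid":"1"}

Error 1: no main manifest attribute, in udfFull.jar

Cause: the MANIFEST.MF file under the jar's META-INF folder does not declare the jar's entry-point class.

In other words, no main class is specified. MANIFEST.MF is a manifest file; loosely speaking, it plays the role of an ini configuration file on Windows and holds metadata about the program.

Fix: add an entry class to MANIFEST.MF by adding this line: Main-Class: TransferJsonUDF (TransferJsonUDF is the entry class that contains the main method)

Running it again produces a different error: Could not find or load main class TransferJsonUDF

Cause: the entry class is configured incorrectly. Main-Class must be the fully qualified class name, not the bare class name.

Fix: change the entry to: Main-Class: cn.itcast.hive.udf.TransferJsonUDF (that is, prefix the class name with its package, cn.itcast.hive.udf.)

Run it again; this time it succeeds: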

D:> java -jar udfFull.jar {"movie":"1287","rate":"5","timeStamp":"978302039","uid":"1"}

1287 5 978302039 1
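The manifest fix can also be scripted instead of editing MANIFEST.MF by hand. This is a minimal sketch under assumptions: `manifest_fragment` is a hypothetical helper, and the commented `jar` and `java` commands assume a JDK on the PATH and were not part of the original steps.

```shell
#!/bin/sh
# Sketch: print a manifest fragment that names the entry class.
# manifest_fragment is a hypothetical helper; the class name is the one
# used in the notes above.
manifest_fragment() {
    # The value must be the fully qualified class name, and the line must
    # end with a newline, otherwise the last manifest line may not be parsed.
    printf 'Main-Class: %s\n' "$1"
}

manifest_fragment cn.itcast.hive.udf.TransferJsonUDF

# Possible follow-up on a machine with a JDK (assumption, not run here):
#   manifest_fragment cn.itcast.hive.udf.TransferJsonUDF > manifest-addition.txt
#   jar ufm udfFull.jar manifest-addition.txt
# Alternatively, bypass the manifest and name the class explicitly:
#   java -cp udfFull.jar cn.itcast.hive.udf.TransferJsonUDF '<json argument>'
```

The `java -cp` form is handy for a quick check, because it shows whether the class itself loads independently of what the manifest says.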

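Also, when running this command on Linux with an argument, wrap the argument in single quotes ''. Otherwise the shell may alter the argument before Java receives it. It can be written like this: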

java -jar udfFull.jar '{"movie":"914","rate":"3","timeStamp":"978301968","uid":"1"}'
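To see why the single quotes matter, the following minimal sketch prints the arguments a program would receive. `show_args` is a hypothetical stand-in for `java -jar udfFull.jar`, and the JSON is shortened for readability.

```shell
#!/bin/sh
# Sketch: show what a program receives with and without single quotes.
# show_args is a hypothetical stand-in for `java -jar udfFull.jar`;
# it prints each argument it receives on its own line.
show_args() {
    for arg in "$@"; do
        printf '%s\n' "$arg"
    done
}

# Single-quoted: the JSON arrives as one argument with its double quotes intact.
show_args '{"movie":"914","rate":"3"}'

# Unquoted: the shell removes the double quotes, and bash additionally applies
# brace expansion, so the program no longer receives the original JSON text.
show_args {"movie":"914","rate":"3"}
```

The exact result of the unquoted call differs between shells, which is one more reason to quote the argument rather than rely on a particular shell's behavior.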

3 beeline fails to connect to the Hive database

beeline> !connect jdbc:hive2://cdh03:10000 root root org.apache.hive.jdbc.HiveDriver

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/develop/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/zookeeper/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Connecting to jdbc:hive2://cdh03:10000

19/12/16 20:53:02 [main]: WARN jdbc.HiveConnection: Failed to connect to cdh03:10000

Error: Could not open client transport with JDBC Uri: jdbc:hive2://cdh03:10000: Failed to open new session: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: root is not allowed to impersonate root (state=08S01,code=0)

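RCA: no proxy user (superuser) is configured in Hadoop.

Hadoop has an impersonation security mechanism: an upper-layer system is not allowed to pass the real user straight through to the Hadoop layer. Instead, the real user is handed to a proxy superuser, which performs the operations on Hadoop on that user's behalf. This prevents arbitrary clients from operating on Hadoop at will.

solution:

Add the following properties to Hadoop's core-site.xml configuration file: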

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

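That is, set the xxx in hadoop.proxyuser.xxx.hosts and hadoop.proxyuser.xxx.groups to root (use whatever name appears as User: xxx in your own error log).

The value "*" places no restriction: the proxy user "xxx" may connect from any host (hosts) and may impersonate users in any group (groups). Restart HDFS afterwards.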
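The rule "use the user from the error log" can be expressed as a short script. This is a minimal sketch only: `proxyuser_properties` is a hypothetical helper, not part of Hadoop, and it assumes the error text has the shape shown in these notes.

```shell
#!/bin/sh
# Sketch: derive the core-site.xml property names from the error text.
# proxyuser_properties is a hypothetical helper, not a Hadoop tool.
proxyuser_properties() {
    # Extract the name that follows "User" (with or without a colon).
    user=$(printf '%s\n' "$1" |
        sed -n 's/.*User:\{0,1\} *\([^ ]*\) is not allowed to impersonate.*/\1/p')
    [ -n "$user" ] || return 1
    printf 'hadoop.proxyuser.%s.hosts\n' "$user"
    printf 'hadoop.proxyuser.%s.groups\n' "$user"
}

proxyuser_properties 'User: root is not allowed to impersonate root (state=08S01,code=0)'
```

The user that matters is the first name in the message (the one doing the impersonating), which is typically the account that runs hiveserver2, not the name passed to beeline.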

Reference: https://blog.csdn.net/qq_16633405/article/details/82190440

Note: the syntax for connecting to Hive with beeline is:

!connect jdbc:hive2://cdh01:10000 username password org.apache.hive.jdbc.HiveDriver

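Using beeline has two prerequisites:

- The Hive metastore database has already been created in MySQL

- The hiveserver2 service has already been started:

  - CDH start command: service hive-server2 start

  - Apache start command: nohup hive --service hiveserver2 2>&1 &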

4 Running the hive command fails

[root@cdh01 conf]# hive

Cannot find hadoop installation: $HADOOP_HOME or $HADOOP_PREFIX must be set or hadoop must be in the path

Cause: the HADOOP_HOME environment variable is not set.

Fix:

1. vim /etc/hive/conf/hive-env.sh

Add this line to the file: export HADOOP_HOME=/usr/lib/hadoop

Note: in the CDH distribution, Hadoop is installed under /usr/lib/hadoop
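The manual vim edit can be replaced by a script that is safe to run more than once. This is a minimal sketch: `ensure_hadoop_home` is a hypothetical helper, and it is demonstrated on a scratch file rather than the real hive-env.sh.

```shell
#!/bin/sh
# Sketch: add the HADOOP_HOME export to an env file only if it is missing.
# ensure_hadoop_home is a hypothetical helper; its arguments are the env
# file to edit and the Hadoop directory to export.
ensure_hadoop_home() {
    env_file=$1
    hadoop_dir=$2
    if grep -q '^export HADOOP_HOME=' "$env_file" 2>/dev/null; then
        echo "HADOOP_HOME already set in $env_file"
    else
        printf 'export HADOOP_HOME=%s\n' "$hadoop_dir" >> "$env_file"
        echo "added HADOOP_HOME to $env_file"
    fi
}

# Demonstrated on a scratch file. On the CDH node in these notes the call
# would be: ensure_hadoop_home /etc/hive/conf/hive-env.sh /usr/lib/hadoop
demo_file=$(mktemp)
ensure_hadoop_home "$demo_file" /usr/lib/hadoop
ensure_hadoop_home "$demo_file" /usr/lib/hadoop
cat "$demo_file"
rm -f "$demo_file"
```

The second call leaves the file unchanged, so the export line appears only once no matter how often the script runs.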

5 Creating a Hive table integrated with HBase fails:

Statement executed:

create table course.hbase_score(id int,cname string,score int) stored by 'org.apache.hadoop.hive.hbase.HBaseStorageHandler' with serdeproperties("hbase.columns.mapping" = "cf:name,cf:score") tblproperties("hbase.table.name" = "hbase_score");

Error message:

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. org.apache.hadoop.hbase.client.HBaseAdmin.

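Troubleshooting steps:

1. Run hive -hiveconf hive.root.logger=DEBUG,console to get detailed error logs on the console. Then execute the CREATE TABLE statement again; it reports the following detailed error: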

ERROR exec.DDLTask: java.lang.NoSuchMethodError: org.apache.hadoop.hbase.client.HBaseAdmin.<init>(Lorg/apache/hadoop/conf/Configuration;)V

at org.apache.hadoop.hive.hbase.HBaseStorageHandler.getHBaseAdmin(HBaseStorageHandler.java:115)

at org.apache.hadoop.hive.hbase.HBaseStorageHandler.preCreateTable(HBaseStorageHandler.java:195)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createTable(HiveMetaStoreClient.java:727)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createTable(HiveMetaStoreClient.java:720)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:105)

at com.sun.proxy.$Proxy18.createTable(Unknown Source)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient$SynchronizedHandler.invoke(HiveMetaStoreClient.java:2126)

at com.sun.proxy.$Proxy18.createTable(Unknown Source)

at org.apache.hadoop.hive.ql.metadata.Hive.createTable(Hive.java:784)

at org.apache.hadoop.hive.ql.exec.DDLTask.createTable(DDLTask.java:4202)

at org.apache.hadoop.hive.ql.exec.DDLTask.execute(DDLTask.java:311)

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:214)

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:99)

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2054)

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1750)

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1503)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1287)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1277)

at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:226)

at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:175)

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:389)

at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:781)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:699)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:634)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:226)

at org.apache.hadoop.util.RunJar.main(RunJar.java:141)

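The NoSuchMethodError means that the HBaseAdmin(Configuration) constructor called by Hive's HBaseStorageHandler is not present in the HBase client classes loaded at runtime. I suspected a version incompatibility between Hive and HBase: Hive was the CDH build and HBase was the open-source Apache build. I therefore installed an open-source Apache build of Hive on cdh03, which resolved the problem.

Summary: cdh01 runs the CDH build of Hive; cdh03 runs the open-source Apache build of Hive.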

6 Hive fails when executing SQL

desc: a SQL statement that joins two subqueries fails

errorlog:

FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask

Caused by: java.lang.IllegalStateException: Invalid input path hdfs://node1:8020/user/hive/warehouse/weblog.db/ods_click_stream_visit/datestr=20181101/visit

action&solution:

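The log says the input path is invalid, but on inspection the path does exist.

Hive's local mode turned out to be enabled, so I tried turning it off: set hive.exec.mode.local.auto=false;

Ran the query again and it succeeded.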

7 Creating a Hive user-defined function fails

desc:

The following statements fail: add jar /develop/weblog/0210udf-1.0.jar;

create temporary function ua_parse as 'com.udf.UAParseUDF';

errorlog:

FAILED: Execution Error, return code -101 from org.apache.hadoop.hive.ql.exec.FunctionTask. Invalid signature file digest for Manifest main attributes

solution:

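Method 1. Delete the META-INF/*.RSA, META-INF/*.DSA, and META-INF/*.SF files from the jar (the patterns are quoted so that zip, not the shell, expands them):

zip -d 0210udf-1.0.jar 'META-INF/*.RSA' 'META-INF/*.DSA' 'META-INF/*.SF'

Method 2. Exclude those files when building the package: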

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>2.4.3</version>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<minimizeJar>true</minimizeJar>

<filters>

<filter>

<artifact>*:*</artifact>

<excludes>

<exclude>META-INF/*.SF</exclude>

<exclude>META-INF/*.DSA</exclude>

<exclude>META-INF/*.RSA</exclude>

</excludes>

</filter>

</filters>

</configuration>

</execution>

</executions>

</plugin>
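Whichever method is used, it may help to confirm that no signature files remain in the jar before running add jar again. This is a minimal sketch: `signature_entries` is a hypothetical helper, and the sample entry names are made up for illustration.

```shell
#!/bin/sh
# Sketch: pick out signature files from a list of jar entry names.
# signature_entries is a hypothetical helper; it reads one entry name
# per line on stdin and prints those that are signature files.
signature_entries() {
    grep -E '^META-INF/[^/]+\.(SF|DSA|RSA)$'
}

# On a real jar the entry names could come from:
#   jar tf 0210udf-1.0.jar | signature_entries
# Made-up sample entry names standing in for a jar listing:
printf '%s\n' META-INF/MANIFEST.MF META-INF/SAMPLE.SF META-INF/SAMPLE.DSA \
    com/udf/UAParseUDF.class |
    signature_entries
```

If the command prints nothing for the rebuilt or cleaned jar, the signature files that trigger the digest error are gone.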

8 beeline fails to connect to Hive

errorlog:

java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException):

User root is not allowed to impersonate anonymous

solution: this is the same proxy-user issue as in problem 3. Edit the Hadoop configuration file .../etc/hadoop/core-site.xml and add the following properties:

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>