https://blog.csdn.net/u010026901/article/details/26587251

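Eclipse shortcuts: Alt+Shift+M extracts the selected code into a new method; Alt+Shift+L extracts the selected expression into a local variable of the matching type, so its return value is captured.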
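As a rough illustration, here is the kind of change the two refactorings make. The class and method names (`RefactorDemo`, `sumOf`, `before`, `after`) are hypothetical, and the code Eclipse actually generates may differ in naming and layout. Alt+Shift+M moved the loop into `sumOf`, and Alt+Shift+L captured the call's result in a typed local:

```java
public class RefactorDemo {

    // Before refactoring: the summing loop and the final calculation are inline.
    static int before(int[] values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum * values.length;
    }

    // After Alt+Shift+M (Extract Method): the loop lives in its own method.
    static int sumOf(int[] values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    // After Alt+Shift+L (Extract Local Variable): the return value of sumOf
    // is stored in a local whose type (int) matches the expression.
    static int after(int[] values) {
        int sum = sumOf(values);
        return sum * values.length;
    }

    public static void main(String[] args) {
        int[] data = {1, 2, 3};
        // Both versions compute the same result.
        System.out.println(before(data) + " " + after(data));
    }
}
```

Behavior is unchanged. Only the structure of the code differs, which is the point of both refactorings.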

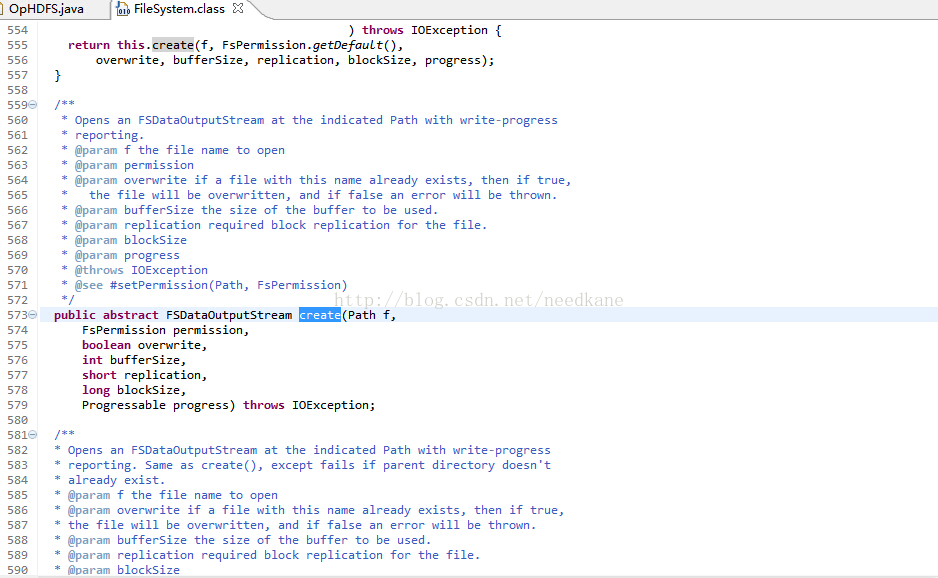

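When you call an unfamiliar method, keep drilling into its source code layer by layer. Once you reach a method that no longer just returns a call to another, similar method, you are looking at the original, underlying implementation.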

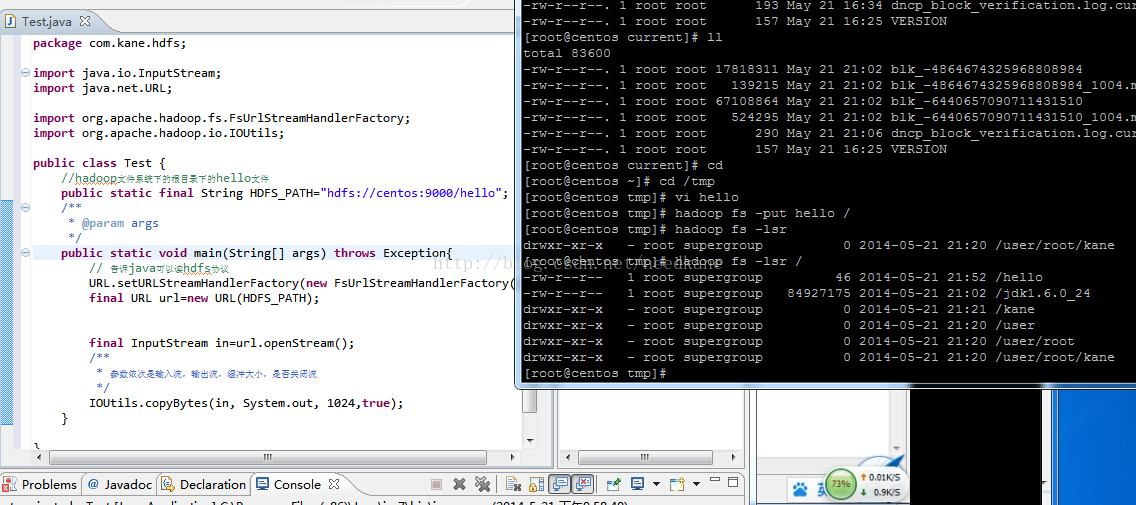

```java
package com.kane.hdfs;

import java.io.InputStream;
import java.net.URL;

import org.apache.hadoop.fs.FsUrlStreamHandlerFactory;
import org.apache.hadoop.io.IOUtils;

public class Test {

    // The file "hello" in the root directory of the Hadoop file system
    public static final String HDFS_PATH = "hdfs://centos:9000/hello";

    /**
     * @param args command-line arguments (unused)
     */
    public static void main(String[] args) throws Exception {
        // Tell Java how to read the hdfs:// protocol
        // (a URL stream handler factory can be set only once per JVM)
        URL.setURLStreamHandlerFactory(new FsUrlStreamHandlerFactory());

        final URL url = new URL(HDFS_PATH);
        final InputStream in = url.openStream();

        /**
         * Arguments, in order: input stream, output stream, buffer size,
         * whether to close the streams afterwards
         */
        IOUtils.copyBytes(in, System.out, 1024, true);
    }
}
```
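The `URL.setURLStreamHandlerFactory(...)` line works because `java.net.URL` asks the installed factory for a handler whenever it meets a protocol. Below is a JDK-only sketch of that mechanism with a made-up `mem:` protocol. The protocol name, `MemHandler`, `MemFactory` and the in-memory contents are assumptions for illustration, not part of Hadoop. The JVM accepts a factory only once (a second call throws `java.lang.Error`), so the sketch guards the call.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.net.URL;
import java.net.URLConnection;
import java.net.URLStreamHandler;
import java.net.URLStreamHandlerFactory;
import java.nio.charset.StandardCharsets;
import java.util.Map;

public class MemUrlDemo {

    // Hypothetical in-memory "file system": path -> contents.
    static final Map<String, String> FILES = Map.of("/hello", "hello world");

    // Handler that knows how to open "mem:" URLs.
    static class MemHandler extends URLStreamHandler {
        @Override
        protected URLConnection openConnection(URL u) {
            return new URLConnection(u) {
                @Override
                public void connect() {
                    // Nothing to set up for in-memory data.
                }

                @Override
                public InputStream getInputStream() throws IOException {
                    String body = FILES.get(getURL().getPath());
                    if (body == null) {
                        throw new IOException("not found: " + getURL());
                    }
                    return new ByteArrayInputStream(body.getBytes(StandardCharsets.UTF_8));
                }
            };
        }
    }

    // Consulted by java.net.URL for each protocol; null means "use the JDK default".
    static class MemFactory implements URLStreamHandlerFactory {
        @Override
        public URLStreamHandler createURLStreamHandler(String protocol) {
            return "mem".equals(protocol) ? new MemHandler() : null;
        }
    }

    private static boolean installed = false;

    // The factory may be set only once per JVM, so remember whether we did it.
    static synchronized void install() {
        if (!installed) {
            URL.setURLStreamHandlerFactory(new MemFactory());
            installed = true;
        }
    }

    // Open the URL and read its whole body as UTF-8 text.
    static String read(String spec) throws IOException {
        try (InputStream in = new URL(spec).openStream()) {
            return new String(in.readAllBytes(), StandardCharsets.UTF_8);
        }
    }

    public static void main(String[] args) throws IOException {
        install();
        System.out.println(read("mem:/hello"));
    }
}
```

`FsUrlStreamHandlerFactory` plays the role of `MemFactory` for `hdfs:`. Because only one factory is allowed per JVM, this approach can clash with other code that also installs one. That is one reason the `FileSystem` API used next is often preferred.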

package com.kane.hdfs;

import java.io.FileInputStream;

import java.io.FileNotFoundException;

import java.io.IOException;

import java.net.URI;

import java.net.URISyntaxException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataInputStream;

import org.apache.hadoop.fs.FSDataOutputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IOUtils;

public class OpHDFS {

public static final String PATH="hdfs://centos:9000";

public static final String DIR_PATH="/kane";

public static final String FILE_PATH="/kane/needkane";

/**

* @param args

* @throws URISyntaxException

* @throws IOException

*/

public static void main(String[] args) throws Exception {

final FileSystem fs=FileSystem.get(new URI(PATH),new Configuration());

//创建文件夹

//makeDir(fs);

//上传文件

//uploadFile(fs);

//下载文件

//downLoad(fs);

//删除文件

deleteFile(fs);

}

private static void deleteFile(final FileSystem fs) throws IOException {

//第二个參数是否递归,假设是文件夹就递归删除,当然文件也没关系

fs.delete(new Path(FILE_PATH), true);

}

public static void makeDir(FileSystem fs) throws IOException {

fs.mkdirs(new Path(DIR_PATH));

}

public static void uploadFile(FileSystem fs) throws Exception {

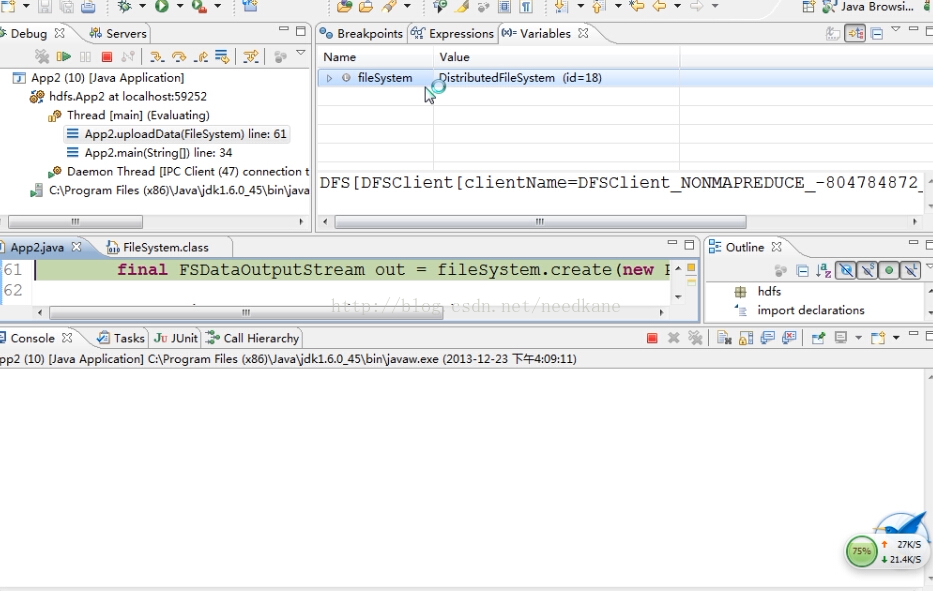

//我们查看源代码的时候,后来直到filesystem,又由于是抽象类,仅仅好打断点获取哪个类实现了

final FSDataOutputStream out=fs.create(new Path(FILE_PATH));//这里的in是本地文件的输入流

final FileInputStream in=new FileInputStream("D:/wordorid");

//參数输入流,输出流,缓冲大小。是否关闭流

IOUtils.copyBytes(in, out,1024,true );

}

public static void downLoad(FileSystem fs) throws Exception {

//从hdfs读取文件

final FSDataInputStream in=fs.open(new Path(FILE_PATH));

IOUtils.copyBytes(in, System.out, 1024,true);

}

}
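Both classes rely on `IOUtils.copyBytes(in, out, bufferSize, close)`. Conceptually it is a buffered read/write loop. The sketch below is a JDK-only stand-in: `copyBytesSketch` is a hypothetical helper, not Hadoop's source. It shows what the four arguments mean. With `close = true` both streams are closed at the end. In `Test` and `downLoad` that includes `System.out`, so later console output will not appear.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

public class CopyBytesSketch {

    // Hypothetical stand-in for copyBytes(in, out, buffSize, close):
    // read up to buffSize bytes at a time and write them out until end of stream.
    static long copyBytesSketch(InputStream in, OutputStream out, int buffSize, boolean close)
            throws IOException {
        byte[] buf = new byte[buffSize];
        long total = 0;
        try {
            int n;
            while ((n = in.read(buf)) != -1) {
                out.write(buf, 0, n);
                total += n;
            }
            out.flush();
        } finally {
            if (close) {
                // Nested try so the input stream is closed even if out.close() throws.
                try {
                    out.close();
                } finally {
                    in.close();
                }
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "hello hdfs".getBytes(StandardCharsets.UTF_8);
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        // A 4-byte buffer forces several loop iterations (4 + 4 + 2 bytes).
        long copied = copyBytesSketch(new ByteArrayInputStream(data), sink, 4, true);
        System.out.println(copied + " bytes: " + sink.toString(StandardCharsets.UTF_8));
    }
}
```

The buffer size only affects how many bytes move per iteration, not the result. 1024 in the original is a reasonable small value.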

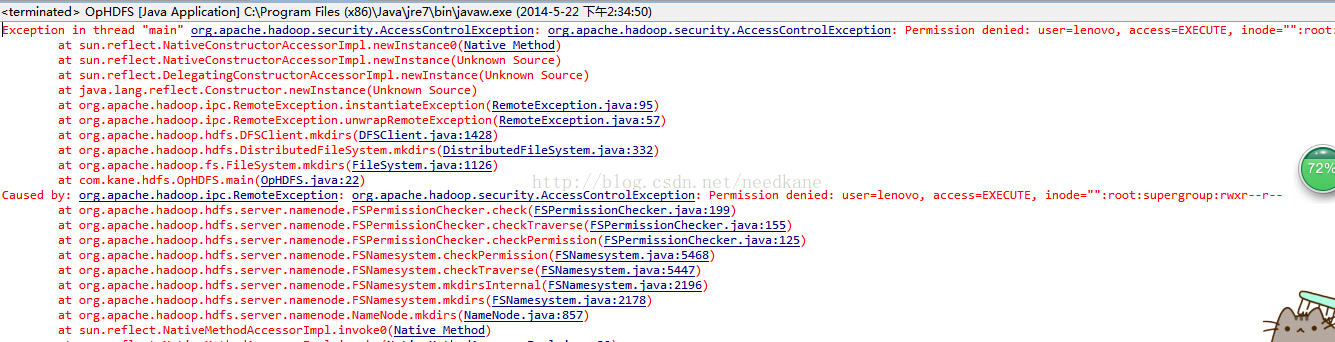

The fix is to change the permissions on the Hadoop root directory: `hadoop fs -chmod 777 /`
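`777` is an octal mode. Each digit is a 3-bit read/write/execute mask for owner, group and others, so `777` gives everyone full access to `/`. The small JDK-only sketch below decodes such a mode into the familiar `rwx` form. The helper name `toRwx` is hypothetical and for illustration only.

```java
public class OctalMode {

    // Convert an octal permission string such as "777" or "755" into rwx notation.
    static String toRwx(String octal) {
        int mode = Integer.parseInt(octal, 8);
        String flags = "rwx";
        StringBuilder sb = new StringBuilder();
        // 9 bits: owner (bits 8..6), group (5..3), others (2..0)
        for (int bit = 8; bit >= 0; bit--) {
            boolean set = (mode & (1 << bit)) != 0;
            sb.append(set ? flags.charAt(2 - bit % 3) : '-');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println("777 -> " + toRwx("777"));
        System.out.println("755 -> " + toRwx("755"));
    }
}
```

For example, `777` decodes to `rwxrwxrwx` and `755` to `rwxr-xr-x`. This is the same notation `hadoop fs -ls` shows in its first column.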

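Ctrl+clicking through the source of fs.create(new Path(FILE_PATH)) all the way down, you end up at an abstract class.

For an abstract class, use the debugger to find the source of the class that actually implements it.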
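Besides setting a breakpoint, printing the runtime class also shows which concrete subclass you actually received. For the `fs` in `OpHDFS`, `System.out.println(fs.getClass().getName())` would name it. With an `hdfs://` URI this is typically `org.apache.hadoop.hdfs.DistributedFileSystem`. So that it runs without a cluster, the sketch below uses the JDK's own abstract `java.nio.file.FileSystem` as an analogue, not Hadoop's class. It walks the superclass chain and marks the abstract classes. The helper `typeChain` is hypothetical.

```java
import java.lang.reflect.Modifier;
import java.nio.file.FileSystem;
import java.nio.file.FileSystems;
import java.util.ArrayList;
import java.util.List;

public class FindImpl {

    // List the runtime class of obj followed by its superclasses,
    // tagging each class that is abstract.
    static List<String> typeChain(Object obj) {
        List<String> chain = new ArrayList<>();
        for (Class<?> c = obj.getClass(); c != null; c = c.getSuperclass()) {
            String tag = Modifier.isAbstract(c.getModifiers()) ? " (abstract)" : "";
            chain.add(c.getName() + tag);
        }
        return chain;
    }

    public static void main(String[] args) {
        // The declared type is the abstract java.nio.file.FileSystem...
        FileSystem fs = FileSystems.getDefault();
        // ...but the runtime object is a platform-specific concrete subclass.
        for (String name : typeChain(fs)) {
            System.out.println(name);
        }
    }
}
```

The first line printed is the class whose source you want to open. The exact name depends on the operating system and JDK.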