Result:

>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

version_0

Analysis:

The endpoints return JSON.

Top-level categories: https://lapi.jd.com/pool?body={%22pid%22:%220101947%22}&source=book. Each poolId corresponds to one top-level title.

```
title: "文学综合馆",
operate_title: "",
sort_num: 2,
fid: 0,
id: 7369,
ext_info: {
    poolId: "0101945"
}
```

This is only part of the data. Each title and its poolId sit in the same dictionary, so one entry gives both.
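As a minimal sketch of reading that mapping, the snippet below pulls each title and its poolId out of a payload. The `SAMPLE` string is a hand-written stand-in shaped like the excerpt above, the `"data"` wrapper key is assumed from how the spider reads the response, and `extract_pool_ids` is a hypothetical helper name.

```python
import json

# Hand-written sample shaped like the excerpt above (not a captured response).
# The top-level "data" key is an assumption taken from the spider code.
SAMPLE = """
{"data": [
  {"title": "文学综合馆", "operate_title": "", "sort_num": 2, "fid": 0,
   "id": 7369, "ext_info": {"poolId": "0101945"}}
]}
"""


def extract_pool_ids(payload: str) -> dict:
    """Map each top-level title to its poolId, skipping entries without one."""
    result = {}
    for entry in json.loads(payload).get("data", []):
        pool_id = (entry.get("ext_info") or {}).get("poolId")
        if pool_id:
            result[entry["title"]] = pool_id
    return result


print(extract_pool_ids(SAMPLE))
```

Skipping entries that lack `ext_info.poolId` is a defensive choice; the spider itself slices the list instead (`[1:-4]`).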

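With a top-level category's poolId in hand, build the next request from it: https://lapi.jd.com/pool?body={%22pid%22:%220101945%22}&source=book. The response contains the mid-level titles, the sub-level titles, and the URL for each sub-level title.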
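One way to build that URL, as a sketch: serialize the body with `json.dumps` and percent-encode only the quotes, so the result has the same shape as the URL above. `build_pool_url` and `POOL_ENDPOINT` are hypothetical names used for illustration.

```python
import json
from urllib.parse import quote

POOL_ENDPOINT = "https://lapi.jd.com/pool"


def build_pool_url(pool_id: str) -> str:
    """Build the pool request URL for one poolId."""
    # Compact separators give {"pid":"0101945"} with no spaces.
    body = json.dumps({"pid": pool_id}, separators=(",", ":"))
    # Leave braces and the colon unescaped; the quotes become %22.
    encoded = quote(body, safe="{}:")
    return POOL_ENDPOINT + "?body=" + encoded + "&source=book"


print(build_pool_url("0101945"))
```

Going through `json.dumps` rather than string concatenation keeps the body valid even if a poolId ever contains a character that needs escaping.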

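Requesting a sub-level title's URL returns the book list. The book data lives at a URL such as https://list.jd.com/listNew.php?cat=1713%2C3260%2C3345&page=150, and the page source also carries the paging information: SEARCH.adv_param={page:"150",page_count:"200",psort:"0",cid1: 0,

Here page_count is the total number of pages and page is the current page. The price is not fetched by a separate request; it is included in this same page. That completes the page analysis.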
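The paging step can be sketched as follows: read `page` and `page_count` from the page source with regular expressions, then rewrite the `page` query parameter to address any other page. `SAMPLE_HTML` is the fragment quoted above, and `read_paging` and `page_url` are hypothetical helper names.

```python
import re
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Fragment of the page source quoted in the analysis above.
SAMPLE_HTML = 'SEARCH.adv_param={page:"150",page_count:"200",psort:"0",cid1: 0,'


def read_paging(html: str) -> tuple:
    """Return (current_page, total_pages) parsed from SEARCH.adv_param."""
    page = re.search(r'\bpage:"(\d+)"', html)
    count = re.search(r'page_count:"(\d+)"', html)
    if page is None or count is None:
        raise ValueError("paging information not found")
    return int(page.group(1)), int(count.group(1))


def page_url(list_url: str, page: int) -> str:
    """Return list_url with its page query parameter set to the given page."""
    parts = urlsplit(list_url)
    # Drop any existing page parameter, then append the new one.
    query = [(k, v) for k, v in parse_qsl(parts.query) if k != "page"]
    query.append(("page", str(page)))
    return urlunsplit(parts._replace(query=urlencode(query)))


current, total = read_paging(SAMPLE_HTML)
print(current, total)
print(page_url("https://list.jd.com/listNew.php?cat=1713%2C3260%2C3345&page=150", 2))
```

Rewriting the query string through `urllib.parse` avoids the edge cases of splitting the URL on `"&page"` by hand.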

<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

Source code

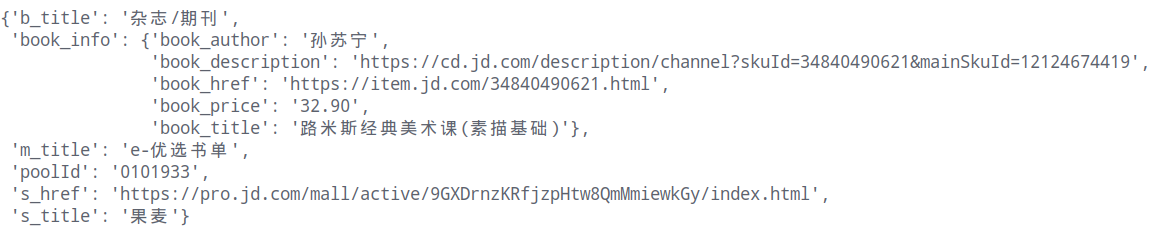

```python
# -*- coding: utf-8 -*-
import json
import re
from copy import deepcopy
from pprint import pprint
from urllib import parse

import scrapy


class JdtsSpider(scrapy.Spider):
    name = 'jdts'
    # allowed_domains = ['book.jd.com', 'lapi.jd.com', 'coll.jd.com', 'list.jd.com', 'search.jd.com']
    allowed_domains = ["jd.com"]
    start_urls = ['https://lapi.jd.com/pool?body={%22pid%22:%220101947%22}&source=book']

    def parse(self, response):
        json_obj = json.loads(response.text)
        data_list = json_obj["data"][1:-4]
        for data in data_list:
            # Top-level category title and its poolId.
            # A fresh dict per category avoids sharing state between requests.
            item = dict()
            item["b_title"] = data["title"]
            item["poolId"] = data["ext_info"]["poolId"]
            # Use the poolId to request the mid-level and sub-level categories
            temporary_var = '"pid":"{}"'.format(item["poolId"])
            next_url = 'https://lapi.jd.com/pool?body={' + temporary_var + '}&source=book'
            yield scrapy.Request(
                url=next_url,
                callback=self.parse_poolid,
                meta={"item": item}
            )
            break  # only the first top-level category is crawled; remove to crawl all

    def parse_poolid(self, response):
        item = response.meta["item"]
        json_obj = json.loads(response.text)
        data_list = json_obj["data"][2:]
        # Mid-level categories
        for data in data_list:
            item["m_title"] = data["title"]
            s_item_list = data["children"]
            # Sub-level categories
            for s_item in s_item_list:
                item["s_title"] = s_item["title"]
                item["s_href"] = s_item["link_value"]
                yield scrapy.Request(
                    url=item["s_href"],
                    callback=self.parse_s_href,
                    # Copy the item: callbacks run later, after this loop has
                    # already overwritten the shared dict.
                    meta={"item": deepcopy(item)}
                )

    def parse_s_href(self, response):
        item = deepcopy(response.meta["item"])
        html = response.text
        print("-" * 20)
        print("response url:", response.url)
        # with open("test.html", 'w', encoding='utf8') as f:
        #     f.write(html)
        # Total number of pages
        count_page = int(re.findall(r'page_count:"(.*?)"', html, re.S)[0])
        print("count_page:", count_page)
        # Book list
        content_list = re.findall(r'class="p-img">(.*?)<div class="p-commit">', html, re.S)
        for content in content_list:
            # Book details; a new dict per book
            item["book_info"] = dict()
            item["book_info"]["book_title"] = re.findall(r'<em>(.*?)</em>', content, re.S)[1]
            item["book_info"]["book_href"] = re.findall(r'href="(.*?)"', content, re.S)[0]
            item["book_info"]["book_href"] = parse.urljoin(response.url, item["book_info"]["book_href"])
            item["book_info"]["book_price"] = re.findall(r'<i>(.*?)</i>', content, re.S)[0]
            yield scrapy.Request(
                url=item["book_info"]["book_href"],
                callback=self.parse_detail,
                meta={"item": deepcopy(item)}
            )
        # Schedule the remaining pages. Every page schedules them again, so
        # this relies on Scrapy's duplicate filter to drop the repeats.
        num = 2  # page counter
        while num <= count_page:  # <= so the last page is included
            if "&page=" in response.url:
                next_page_href = response.url.split("&page", 1)[0] + "&page={}".format(num)
            else:
                next_page_href = response.url + "&page={}".format(num)
            # print("next_page_url:", next_page_href)
            yield scrapy.Request(
                url=next_page_href,
                callback=self.parse_s_href,
                meta={"item": response.meta["item"]}
            )
            num += 1

    def parse_detail(self, response):
        item = response.meta["item"]
        item["book_info"]["book_author"] = response.xpath("//div[@class='p-author']/a/text()").extract_first()
        # url_1 = "https://dx.3.cn/desc/12234231"  (skuid)
        # url_2 = "https://cd.jd.com/description/channel?skuId=69612641897&mainSkuId=14541400416"  (skuid, mainskuid)
        skuid = re.findall(r'com/(.*?)\.html', response.url, re.S)[0]
        mainskuid = re.findall(r"mainSkuId:'(.*?)'", response.text, re.S)
        if mainskuid:
            mainskuid = mainskuid[0]
            url = "https://cd.jd.com/description/channel?skuId={}&mainSkuId={}"
            next_url = url.format(skuid, mainskuid)
        else:
            url = "https://dx.3.cn/desc/{}"
            next_url = url.format(skuid)
        item["book_info"]["book_description"] = next_url
        print("*" * 20)
        print("description URL:", next_url)
        print("*" * 20)
        pprint(item)
        # Hand the item to Scrapy so pipelines and feed exports can use it
        yield item
```