CentOS: Linux localhost.localdomain 3.10.0-862.el7.x86_64 #1 SMP Fri Apr 20 16:44:24 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

JDK: Oracle jdk1.8.0_241, https://www.oracle.com/java/technologies/javase-jdk8-downloads.html

Hadoop: hadoop-3.2.1.tar.gz

Official installation guide: https://hadoop.apache.org/docs/r3.2.1/hadoop-project-dist/hadoop-common/SingleCluster.html

1. Set up passwordless SSH login to the local machine. Enter the following commands (the lines starting with #; everything else is output):

# ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
1b:e4:ff:13:55:69:6a:2f:46:10:b0:ec:42:fe:5b:80 root@localhost.localdomain
The key's randomart image is:
+--[ RSA 2048]----+
| .... .|
| . .. o.|
| ..o . o. |
| ooo +. |
| ESo o.. |
| o+. .o . |
| ....... |
| o.. |
| . .. |
+-----------------+

# cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

# chmod 600 ~/.ssh/authorized_keys

# ssh root@localhost
Last login: Sun Mar 29 15:00:23 2020
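Running the append step more than once leaves duplicate keys in authorized_keys. The following is a minimal sketch of an idempotent variant. The function name ensure_authorized_key is made up for illustration, and both paths are parameters so it can be tried on scratch files before touching ~/.ssh.

```shell
# Append a public key to an authorized_keys file only if it is not
# already present, then restrict the file to its owner.
# Assumption: the public key file contains exactly one line.
ensure_authorized_key() {
    local pub_key_file="$1"
    local auth_file="$2"
    mkdir -p "$(dirname "$auth_file")"
    touch "$auth_file"
    # -F fixed strings, -x whole-line match, -f read the pattern from a file
    if ! grep -qxF -f "$pub_key_file" "$auth_file"; then
        cat "$pub_key_file" >> "$auth_file"
    fi
    chmod 600 "$auth_file"
}

# Example call (not executed here):
#   ensure_authorized_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
```

Calling the function a second time with the same key leaves the file unchanged.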

2. Disable SELinux and the firewall

a. Permanently

In /etc/selinux/config, change SELINUX=enforcing to SELINUX=disabled, then reboot.

b. Temporarily (until the next reboot)

# setenforce 0

# systemctl stop firewalld.service

# systemctl disable firewalld.service
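The edit to /etc/selinux/config can also be scripted. This is a sketch under assumptions: set_selinux_mode is a hypothetical helper, it relies on GNU sed (as shipped with CentOS), and the config path is a parameter so the change can be rehearsed on a copy first.

```shell
# Rewrite the SELINUX= line of an SELinux config file.
# The SELINUXTYPE= line is left alone because the pattern is anchored
# on "SELINUX=" at the start of the line.
set_selinux_mode() {
    local mode="$1"
    local config="${2:-/etc/selinux/config}"
    case "$mode" in
        enforcing|permissive|disabled) ;;
        *) echo "unknown SELinux mode: $mode" >&2; return 1 ;;
    esac
    sed -i "s/^SELINUX=.*/SELINUX=${mode}/" "$config"
}

# Example call (not executed here; needs root and a reboot to take effect):
#   set_selinux_mode disabled
```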

3. Download the Java JDK from https://www.oracle.com/java/technologies/javase-jdk8-downloads.html

# mkdir -p /data/server/hadoop/

# cd /data/server/hadoop/

# rz    # select the file you downloaded to upload it into the current directory

# tar zxvf jdk-8u241-linux-x64.tar.gz

4. Download Hadoop from https://downloads.apache.org/hadoop/common/ and pick the version you want. This guide uses 3.2.1, the latest release at the time of writing.

# cd /data/server/hadoop

# wget https://downloads.apache.org/hadoop/common/hadoop-3.2.1/hadoop-3.2.1.tar.gz

# tar zxvf hadoop-3.2.1.tar.gz

# mv hadoop-3.2.1/ 3.2.1
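Apache publishes a .sha512 file next to each release tarball, so the download can be checked before it is unpacked. The sketch below is one way to do that, not part of the original procedure: verify_sha512 is a hypothetical helper, and the expected digest is assumed to have been copied by hand from the published .sha512 file.

```shell
# Compare the SHA-512 digest of a file with an expected hex digest.
# Returns 0 on a match, non-zero otherwise. Case is ignored because
# published digests are sometimes written in upper case.
verify_sha512() {
    local file="$1"
    local expected="$2"
    local actual
    actual=$(sha512sum "$file" | awk '{print $1}')
    expected=$(printf '%s' "$expected" | tr 'A-F' 'a-f' | tr -d ' \n')
    [ "$actual" = "$expected" ]
}

# Example call (not executed here; the digest placeholder must be filled in):
#   verify_sha512 hadoop-3.2.1.tar.gz "<digest from the .sha512 file>" \
#       && echo "checksum OK" || echo "checksum MISMATCH"
```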

5. Set the Hadoop environment variables. Open /etc/profile with vi and append the following at the end:

#hadoop
export HADOOP_HOME=/data/server/hadoop/3.2.1
export HADOOP_HDFS_HOME=$HADOOP_HOME
export HADOOP_YARN_HOME=$HADOOP_HOME
export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
export HDFS_DATANODE_USER=root
export HDFS_DATANODE_SECURE_USER=root
export HDFS_NAMENODE_USER=root
export HDFS_SECONDARYNAMENODE_USER=root
export YARN_RESOURCEMANAGER_USER=root
export YARN_NODEMANAGER_USER=root

Then run the following so the variables take effect in the current shell:

# source /etc/profile
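A quick way to confirm that the variables were picked up is to test each one for a non-empty value. The helper below is only a sketch; check_env_vars is a name invented here, and the list of variables to check is passed in by the caller.

```shell
# Report every named variable that is unset or empty.
# Returns 0 if all are set, 1 otherwise.
check_env_vars() {
    local name
    local value
    local missing=0
    for name in "$@"; do
        # Indirect lookup of the variable whose name is stored in $name
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "not set: $name" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example call (not executed here):
#   check_env_vars HADOOP_HOME HADOOP_CONF_DIR HDFS_NAMENODE_USER
```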

6. Set JAVA_HOME. Open 3.2.1/etc/hadoop/hadoop-env.sh with vi and append the following at the end:

export JAVA_HOME=/data/server/hadoop/jdk1.8.0_241
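A wrong JAVA_HOME only shows up later, when the daemons fail to start. As a hedged sketch, the helper below (set_java_home, a hypothetical name) refuses to write the line unless bin/java exists under the given directory, and it avoids appending the same line twice.

```shell
# Append "export JAVA_HOME=..." to an env file after a sanity check.
set_java_home() {
    local java_home="$1"
    local env_file="$2"
    local line
    if [ ! -x "$java_home/bin/java" ]; then
        echo "no executable bin/java under $java_home" >&2
        return 1
    fi
    line="export JAVA_HOME=$java_home"
    # Append only when the exact line is not in the file yet
    if ! grep -qxF -- "$line" "$env_file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$env_file"
    fi
}

# Example call (not executed here):
#   set_java_home /data/server/hadoop/jdk1.8.0_241 3.2.1/etc/hadoop/hadoop-env.sh
```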

7. Check that the installation works

# hadoop version
Hadoop 3.2.1
Source code repository https://gitbox.apache.org/repos/asf/hadoop.git -r b3cbbb467e22ea829b3808f4b7b01d07e0bf3842
Compiled by rohithsharmaks on 2019-09-10T15:56Z
Compiled with protoc 2.5.0
From source with checksum 776eaf9eee9c0ffc370bcbc1888737
This command was run using /home/data/server/hadoop/3.2.1/share/hadoop/common/hadoop-common-3.2.1.jar

8. Rename the start/stop scripts so they do not clash with Spark's service scripts of the same name (deleting the .cmd scripts is optional).

# rm -rf ./3.2.1/sbin/*.cmd

# rm -rf ./3.2.1/bin/*.cmd

# mv ./3.2.1/sbin/start-all.sh ./3.2.1/sbin/start-hadoop-all.sh

# mv ./3.2.1/sbin/stop-all.sh ./3.2.1/sbin/stop-hadoop-all.sh

Hadoop configuration

Edit 3.2.1/etc/hadoop/core-site.xml:

<configuration>
<property>
<name>fs.defaultFS</name>
<value>hdfs://172.16.1.122:9000</value>
<description>Address and port of the HDFS master (NameNode)</description>
</property>
<property>
<name>hadoop.tmp.dir</name>
<value>/data/server/hadoop/3.2.1/tmp</value>
<description>Directory where Hadoop stores files generated at runtime</description>
</property>
<property>
<name>hadoop.native.lib</name>
<value>false</value>
<description>Whether to use the native Hadoop libraries, if present</description>
</property>
</configuration>
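The IP address and the temp directory are the only machine-specific values in this file, so it can be generated from a template. The following is a sketch only: write_core_site is a hypothetical helper, port 9000 is taken from the configuration above, and the output path is a parameter so nothing is overwritten by accident.

```shell
# Generate a core-site.xml with the three properties used in this guide.
write_core_site() {
    local namenode_host="$1"
    local tmp_dir="$2"
    local out_file="$3"
    cat > "$out_file" <<EOF
<configuration>
<property>
<name>fs.defaultFS</name>
<value>hdfs://${namenode_host}:9000</value>
</property>
<property>
<name>hadoop.tmp.dir</name>
<value>${tmp_dir}</value>
</property>
<property>
<name>hadoop.native.lib</name>
<value>false</value>
</property>
</configuration>
EOF
}

# Example call (not executed here):
#   write_core_site 172.16.1.122 /data/server/hadoop/3.2.1/tmp \
#       3.2.1/etc/hadoop/core-site.xml
```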

Edit 3.2.1/etc/hadoop/hdfs-site.xml (reference: https://www.cnblogs.com/duanxz/p/3799467.html):

<configuration>
<property>
<name>dfs.replication</name>
<value>1</value>
<description>Number of replicas to keep for each data block</description>
</property>
<property>
<name>dfs.safemode.threshold.pct</name>
<value>0</value>
<description>A value less than or equal to 0 means never enter safe mode; a value greater than 1 means stay in safe mode permanently</description>
</property>
<property>
<name>dfs.permissions</name>
<value>false</value>
<description>Permission checking for file operations; disabled here</description>
</property>
</configuration>

Edit 3.2.1/etc/hadoop/yarn-site.xml (reference: https://www.cnblogs.com/yinchengzhe/p/5142659.html):

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

<description>Auxiliary service run by the NodeManager. It must be set to mapreduce_shuffle for MapReduce programs to run.</description>

</property>

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>

</property>

</configuration>

Edit 3.2.1/etc/hadoop/mapred-site.xml:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

<description>Run MapReduce jobs on YARN</description>

</property>

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>

</property>

</configuration>
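After editing several files by hand it helps to read a value back and confirm it is what was intended. The helper below is a rough sketch, not a real XML parser: get_property is a name invented here, and it assumes each name and value tag sits on its own line (or the name comes before the value on the same line), as in the files above.

```shell
# Print the <value> that follows <name>NAME</name> in a Hadoop config file.
get_property() {
    local name="$1"
    local file="$2"
    awk -v name="$name" '
        # Remember that the wanted <name> element has been seen
        index($0, "<name>" name "</name>") { found = 1 }
        # The first <value> element after it is the answer
        found && match($0, /<value>.*<\/value>/) {
            # 7 = length of "<value>", 15 = both tags together
            print substr($0, RSTART + 7, RLENGTH - 15)
            exit
        }
    ' "$file"
}

# Example call (not executed here):
#   get_property mapreduce.framework.name 3.2.1/etc/hadoop/mapred-site.xml
```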

Format HDFS

# hdfs namenode -format

Start Hadoop

# start-hadoop-all.sh

Check which daemons are running (the line starting with # is the command; the rest is output)

# /data/server/hadoop/jdk1.8.0_241/bin/jps
20400 NodeManager
32566 QuorumPeerMain
20054 SecondaryNameNode
19687 NameNode
20567 Jps
19817 DataNode
18108 ResourceManager
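Scanning the jps listing by eye is easy to get wrong, for example by reading SecondaryNameNode as NameNode. A small sketch that checks for the five Hadoop daemons listed above; check_daemons is a hypothetical name, and it reads the jps output from standard input so it can be tried on saved output.

```shell
# Read "PID Name" lines (jps output) from stdin and verify that all
# HDFS and YARN daemons of a pseudo-distributed setup are present.
check_daemons() {
    local names
    local daemon
    local status=0
    names=$(awk '{print $2}')
    for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # -x forces a whole-line match, so NameNode does not match
        # SecondaryNameNode
        if ! printf '%s\n' "$names" | grep -qx "$daemon"; then
            echo "missing: $daemon" >&2
            status=1
        fi
    done
    return "$status"
}

# Example call (not executed here):
#   /data/server/hadoop/jdk1.8.0_241/bin/jps | check_daemons && echo "all daemons up"
```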

At this point Hadoop has started successfully.

Verify Hadoop

Run the classic WordCount program to check that Hadoop works correctly.

Create the input directory:

# hdfs dfs -mkdir /input

Upload a file to the /input directory in HDFS as test.txt (the Hadoop LICENSE.txt is used here):

# hdfs dfs -put ./3.2.1/LICENSE.txt /input/test.txt

Next, run the wordcount program bundled with the Hadoop distribution:

# hadoop jar ./3.2.1/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar wordcount /input/test.txt /output/

Console output:

2020-03-29 18:38:52,220 INFO client.RMProxy: Connecting to ResourceManager at /172.16.1.122:8032
2020-03-29 18:38:52,840 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/root/.staging/job_1585465225552_0004
2020-03-29 18:38:52,948 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false
2020-03-29 18:38:53,059 INFO input.FileInputFormat: Total input files to process : 1
2020-03-29 18:38:53,094 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false
2020-03-29 18:38:53,523 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false
2020-03-29 18:38:53,934 INFO mapreduce.JobSubmitter: number of splits:1
2020-03-29 18:38:54,075 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false
2020-03-29 18:38:54,500 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1585465225552_0004
2020-03-29 18:38:54,500 INFO mapreduce.JobSubmitter: Executing with tokens: []
2020-03-29 18:38:54,684 INFO conf.Configuration: resource-types.xml not found
2020-03-29 18:38:54,684 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.
2020-03-29 18:38:54,745 INFO impl.YarnClientImpl: Submitted application application_1585465225552_0004
2020-03-29 18:38:54,784 INFO mapreduce.Job: The url to track the job: http://172.16.1.122:8088/proxy/application_1585465225552_0004/
2020-03-29 18:38:54,784 INFO mapreduce.Job: Running job: job_1585465225552_0004
2020-03-29 18:39:01,959 INFO mapreduce.Job: Job job_1585465225552_0004 running in uber mode : false
2020-03-29 18:39:01,959 INFO mapreduce.Job: map 0% reduce 0%
2020-03-29 18:39:07,051 INFO mapreduce.Job: map 100% reduce 0%
2020-03-29 18:39:11,109 INFO mapreduce.Job: map 100% reduce 100%
2020-03-29 18:39:12,121 INFO mapreduce.Job: Job job_1585465225552_0004 completed successfully
2020-03-29 18:39:12,208 INFO mapreduce.Job: Counters: 54
    File System Counters
        FILE: Number of bytes read=46852
        FILE: Number of bytes written=546085
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=150673
        HDFS: Number of bytes written=35324
        HDFS: Number of read operations=8
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=2
        HDFS: Number of bytes read erasure-coded=0
    Job Counters
        Launched map tasks=1
        Launched reduce tasks=1
        Data-local map tasks=1
        Total time spent by all maps in occupied slots (ms)=2802
        Total time spent by all reduces in occupied slots (ms)=2705
        Total time spent by all map tasks (ms)=2802
        Total time spent by all reduce tasks (ms)=2705
        Total vcore-milliseconds taken by all map tasks=2802
        Total vcore-milliseconds taken by all reduce tasks=2705
        Total megabyte-milliseconds taken by all map tasks=2869248
        Total megabyte-milliseconds taken by all reduce tasks=2769920
    Map-Reduce Framework
        Map input records=2814
        Map output records=21904
        Map output bytes=234035
        Map output materialized bytes=46852
        Input split bytes=104
        Combine input records=21904
        Combine output records=2981
        Reduce input groups=2981
        Reduce shuffle bytes=46852
        Reduce input records=2981
        Reduce output records=2981
        Spilled Records=5962
        Shuffled Maps =1
        Failed Shuffles=0
        Merged Map outputs=1
        GC time elapsed (ms)=167
        CPU time spent (ms)=1940
        Physical memory (bytes) snapshot=516464640
        Virtual memory (bytes) snapshot=5573906432
        Total committed heap usage (bytes)=393216000
        Peak Map Physical memory (bytes)=310145024
        Peak Map Virtual memory (bytes)=2784337920
        Peak Reduce Physical memory (bytes)=206319616
        Peak Reduce Virtual memory (bytes)=2789568512
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=150569
    File Output Format Counters
        Bytes Written=35324

List the output:

# hdfs dfs -ls /output

The /output directory in HDFS contains two files:

Found 2 items
-rw-r--r-- 1 root supergroup 0 2020-03-29 18:39 /output/_SUCCESS
-rw-r--r-- 1 root supergroup 35324 2020-03-29 18:39 /output/part-r-00000

Look at the contents of part-r-00000:

# hdfs dfs -cat /output/part-r-00000
hadoop 3
hbase 1
hive 2
mapreduce 1
spark 2
sqoop 1
storm 1

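The WordCount job completed successfully and the result is as expected. Note that the short listing above appears to come from a small hand-written test file: with LICENSE.txt as input, the job counters report 2981 reduce output records and a 35324-byte part-r-00000, so the real file is much longer.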
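For intuition about what the job computes, roughly the same counts can be produced locally with standard tools. This is a sketch, not the Hadoop implementation: it assumes words are separated by whitespace only, and local_wordcount is a name chosen here for illustration.

```shell
# Count whitespace-separated words read from stdin and print
# "word<TAB>count" lines sorted by word in byte order.
local_wordcount() {
    tr -s '[:space:]' '\n' |
        sed '/^$/d' |
        LC_ALL=C sort |
        uniq -c |
        awk '{print $2 "\t" $1}'
}

# Example call (not executed here):
#   local_wordcount < ./3.2.1/LICENSE.txt | head
```

Comparing a few lines of this output with hdfs dfs -cat /output/part-r-00000 is a cheap sanity check on the cluster result.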

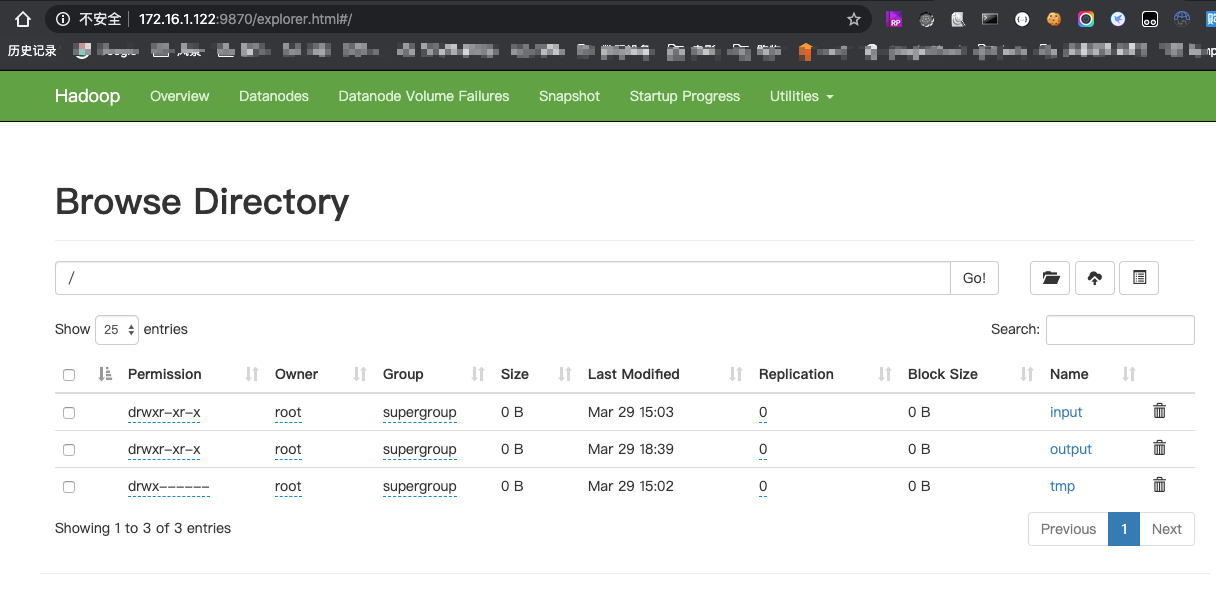

7. hdfs网页如下图,可以看到文件信息,地址:http://172.16.1.122:9870/

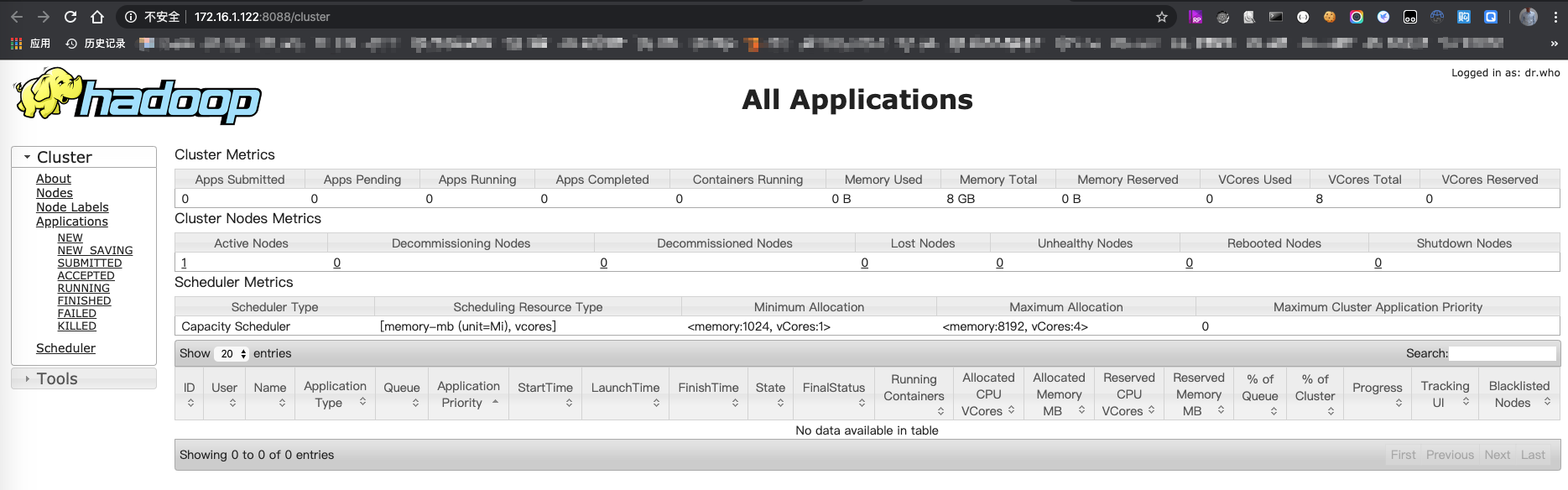

8. yarn的网页如下图,可以看到任务信息,地址:http://172.16.1.122:8088/cluster

This completes the setup and verification of Hadoop 3.2.1 in pseudo-distributed mode.
