Hadoop installation and configuration

The four V's of big data

-----------------

1.volume //large data volume

2.variety //many data types and formats

3.velocity //data is generated and processed quickly

4.value //low value density

The four Hadoop modules

-------------------

1.common

2.hdfs

3.hadoop yarn

4.hadoop mapreduce(mr)

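Install the JDK (prerequisite for Hadoop)

-------------------

a)Download jdk-8u65-linux-x64.tar.gz to the ~/downloads directory

b)Unpack the archive

$>cd ~/downloads

$>tar -xzvf jdk-8u65-linux-x64.tar.gz

c)Create the /soft directory (it sits directly under /, so run this as root or through sudo)

$>mkdir /soft

d)Move the unpacked directory into /soft (this archive unpacks to jdk1.8.0_65)

$>mv ~/downloads/jdk1.8.0_65 /soft/

e)Create a symbolic link

$>ln -s /soft/jdk1.8.0_65 /soft/jdk

f)Verify that the JDK was installed correctly

$>cd /soft/jdk/bin

$>./java -version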
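The unpack, move and link steps can be folded into one small shell function. This is only a sketch: the name install_tarball and its argument order are made up for illustration and are not part of any JDK or Hadoop tooling. It extracts straight into the target directory with tar -C, so no separate mv is needed, and then refreshes the version-independent link.

```shell
#!/bin/sh
# Hypothetical helper: unpack a tarball into <dest_dir> and point a stable
# symlink at the unpacked directory.
# usage: install_tarball <tarball> <dest_dir> <unpacked_name> <link_name>
install_tarball() {
    tarball=$1; dest=$2; unpacked=$3; link=$4
    mkdir -p "$dest" || return 1
    # -C extracts directly into $dest, replacing the separate mv step
    tar -xzf "$tarball" -C "$dest" || return 1
    if [ ! -d "$dest/$unpacked" ]; then
        echo "expected $dest/$unpacked after extraction" >&2
        return 1
    fi
    # -n replaces an existing link instead of creating a link inside it
    ln -sfn "$dest/$unpacked" "$dest/$link"
}
```

For the JDK this might be called as install_tarball ~/downloads/jdk-8u65-linux-x64.tar.gz /soft <unpacked-dir> jdk, where <unpacked-dir> is the top-level directory that tar -tzf reports for the archive. Keeping /soft/jdk as a link means paths that refer to it do not change when the JDK version does.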

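Configure environment variables on CentOS

------------------------

1.Edit /etc/profile

$>vi /etc/profile

...

export JAVA_HOME=/soft/jdk

export PATH=$PATH:$JAVA_HOME/bin

2.Make the environment variables take effect immediately

$>source /etc/profile

3.Change to any directory and check that java is found

$>cd ~

$>java -version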
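Editing /etc/profile by hand is easy to repeat by accident, which leaves duplicate export lines behind. A minimal sketch of an idempotent alternative follows; add_line is a hypothetical helper name, and the function only appends a line when that exact line is not already in the file.

```shell
#!/bin/sh
# Hypothetical helper: append <line> to <file> unless an identical line exists.
# usage: add_line <file> <line>
add_line() {
    # -q quiet, -x whole-line match, -F fixed string (no regex), -e guards
    # against lines that start with a dash
    grep -qxF -e "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Example calls (not executed here; writing /etc/profile needs root):
#   add_line /etc/profile 'export JAVA_HOME=/soft/jdk'
#   add_line /etc/profile 'export PATH=$PATH:$JAVA_HOME/bin'
```

The single quotes in the example calls matter: they keep $PATH and $JAVA_HOME unexpanded so the literal text is written to the file and evaluated only when the profile is sourced.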

Install Hadoop

-------------------------

1.Install Hadoop

a)Download hadoop-2.7.3.tar.gz to the ~/downloads directory

b)Unpack the archive

$>cd ~/downloads

$>tar -xzvf hadoop-2.7.3.tar.gz

c)(nothing to do: /soft already exists)

d)Move the unpacked directory into /soft

$>mv ~/downloads/hadoop-2.7.3 /soft/

e)Create a symbolic link

$>ln -s /soft/hadoop-2.7.3 /soft/hadoop

f)Verify that Hadoop was installed correctly

$>cd /soft/hadoop/bin

$>./hadoop version

2.Configure the Hadoop environment variables

$>vi /etc/profile

...

export JAVA_HOME=/soft/jdk

export PATH=$PATH:$JAVA_HOME/bin

export HADOOP_HOME=/soft/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

3.Make the changes take effect

$>source /etc/profile
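Before moving on, it can help to confirm that both home variables really point at usable installations. The following is a small sketch under the assumption that each home contains the binary named in the notes (bin/java, bin/hadoop); check_home is a hypothetical helper, not a Hadoop command.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if <home_dir>/<relative_binary> exists
# and is executable (symlinked homes such as /soft/jdk are followed).
# usage: check_home <home_dir> <relative_binary>
check_home() {
    if [ -x "$1/$2" ]; then
        echo "ok: $1/$2"
    else
        echo "missing or not executable: $1/$2" >&2
        return 1
    fi
}

# Example (not executed here):
#   check_home "$JAVA_HOME" bin/java && check_home "$HADOOP_HOME" bin/hadoop
```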

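Configure Hadoop

--------------------

1.Standalone (local) mode

Nothing to configure.

No separate Hadoop daemons need to be started.

2.Pseudo-distributed mode

All Hadoop daemons run on a single host.

a)Change to the ${HADOOP_HOME}/etc/hadoop directory

b)Edit core-site.xml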

<?xml version="1.0"?>

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost/</value>

</property>

</configuration>

c)Edit hdfs-site.xml

<?xml version="1.0"?>

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

d)Edit mapred-site.xml

Note: only a template is shipped, so create the file from it first

$>cp mapred-site.xml.template mapred-site.xml

<?xml version="1.0"?>

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

e)Edit yarn-site.xml

<?xml version="1.0"?>

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>localhost</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

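f)Configure SSH

1)Check that the ssh packages are installed (openssh-server + openssh-clients + openssh)

$>yum list installed | grep ssh

2)Check that the sshd process is running

$>ps -Af | grep sshd

3)Generate a public/private key pair on the client side.

$>ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

4)This creates the ~/.ssh directory, containing id_rsa (private key) + id_rsa.pub (public key)

List the contents of ~/.ssh

$>ls -al ~/.ssh

5)Append the public key to ~/.ssh/authorized_keys (the file name and location are fixed)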

$>cd ~/.ssh

$>cat id_rsa.pub >> authorized_keys

6)Set the permissions of authorized_keys to 644 (sshd ignores the file if group or others can write to it).

$>chmod 644 authorized_keys

7)Test

$>ssh localhost
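The four site files from steps b) to e) all share the same shape, so they can also be generated instead of typed. The sketch below is one way to do that; write_site_xml and write_pseudo_conf are hypothetical helper names, the property names and values are the ones listed in those steps, and the values are written as-is without XML escaping, which is an assumption that holds for these simple strings.

```shell
#!/bin/sh
# Hypothetical helper: write a Hadoop-style site file from name/value pairs.
# usage: write_site_xml <file> <name> <value> [<name> <value> ...]
write_site_xml() {
    out=$1; shift
    {
        echo '<?xml version="1.0"?>'
        echo '<configuration>'
        while [ "$#" -ge 2 ]; do
            echo '  <property>'
            echo "    <name>$1</name>"
            echo "    <value>$2</value>"
            echo '  </property>'
            shift 2
        done
        echo '</configuration>'
    } > "$out"
}

# Write the pseudo-distributed settions into <conf_dir>.
# usage: write_pseudo_conf <conf_dir>
write_pseudo_conf() {
    write_site_xml "$1/core-site.xml"   fs.defaultFS hdfs://localhost/
    write_site_xml "$1/hdfs-site.xml"   dfs.replication 1
    write_site_xml "$1/mapred-site.xml" mapreduce.framework.name yarn
    write_site_xml "$1/yarn-site.xml" \
        yarn.resourcemanager.hostname localhost \
        yarn.nodemanager.aux-services mapreduce_shuffle
}
```

Calling write_pseudo_conf with the ${HADOOP_HOME}/etc/hadoop directory overwrites the four files there, so it is worth trying it on a scratch directory first and comparing the output with the listings.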

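Configure SSH

-------------

Generate the key pair

$>ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

Add the public key to the authorized keys file (>> appends, so keys that are already authorized are kept)

$>cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Set the permissions: neither the directory nor the file may be writable by anyone other than the owner.

$>chmod 700 ~/.ssh

$>chmod 644 ~/.ssh/authorized_keys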
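The authorize-and-chmod part of this procedure can be wrapped so that running it twice does not add the same key twice. This is a sketch only: authorize_key is a hypothetical name, the key pair is assumed to exist already (generated with ssh-keygen as shown), and the permission values are the ones used in these notes.

```shell
#!/bin/sh
# Hypothetical helper: add a public key to <ssh_dir>/authorized_keys once
# and apply the permissions used in the notes (700 directory, 644 file).
# usage: authorize_key <public_key_file> <ssh_dir>
authorize_key() {
    pub=$1; dir=$2; auth=$dir/authorized_keys
    mkdir -p "$dir" || return 1
    chmod 700 "$dir" || return 1
    touch "$auth" || return 1
    # -f reads the pattern from the key file; -x -F require an exact,
    # literal whole-line match, so the key is appended only when absent
    grep -qxF -f "$pub" "$auth" || cat "$pub" >> "$auth"
    chmod 644 "$auth"
}

# Example (not executed here):
#   authorize_key ~/.ssh/id_rsa.pub ~/.ssh
```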

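Configure Hadoop with a symbolic link so that the three configuration modes coexist

----------------------------------------------------

1.Create three configuration directories under ${HADOOP_HOME}/etc, each a copy of the hadoop directory

$>cd ${HADOOP_HOME}/etc

$>cp -r hadoop local

$>cp -r hadoop pseudo

$>cp -r hadoop full

${HADOOP_HOME}/etc/local

${HADOOP_HOME}/etc/pseudo

${HADOOP_HOME}/etc/full

2.Remove the original hadoop directory (its contents now live in the copies) and create the symbolic link in its place; ln -s does not replace an existing directory

$>rm -rf hadoop

$>ln -s pseudo hadoop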
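Switching between the configuration directories later is just a matter of re-pointing the link. A minimal sketch follows; use_conf is a hypothetical helper, it takes the etc directory and the name of one of the mode directories as arguments, and it refuses to touch hadoop if that is still a real directory rather than a link.

```shell
#!/bin/sh
# Hypothetical helper: point <etc_dir>/hadoop at <etc_dir>/<mode_dir>.
# usage: use_conf <etc_dir> <mode_dir>
use_conf() {
    etc=$1; mode=$2
    if [ ! -d "$etc/$mode" ]; then
        echo "no such configuration directory: $etc/$mode" >&2
        return 1
    fi
    # never clobber a real directory named hadoop
    if [ -e "$etc/hadoop" ] && [ ! -L "$etc/hadoop" ]; then
        echo "$etc/hadoop is not a symlink; move it aside first" >&2
        return 1
    fi
    # relative target keeps the link valid if the tree is copied elsewhere;
    # -n replaces the old link instead of creating a link inside its target
    ln -sfn "$mode" "$etc/hadoop"
}

# Example (not executed here):
#   use_conf /soft/hadoop/etc full
```

Stop the running daemons before switching, since they were started with the configuration the link pointed to at that time.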

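Start Hadoop

-------------------

1.Format HDFS

$>hadoop namenode -format

2.Edit the Hadoop environment script and set JAVA_HOME explicitly

[${HADOOP_HOME}/etc/hadoop/hadoop-env.sh]

...

export JAVA_HOME=/soft/jdk

...

3.Start all Hadoop daemons

$>start-all.sh

If startup stops at the following message, SSH is asking you to confirm the host key on the first connection; answer yes. If the daemons still cannot reach each other, stop the firewall.

The authenticity of host '0.0.0.0 (0.0.0.0)' can't be established.

4.After startup the following processes are running

$>jps

33702 NameNode

33792 DataNode

33954 SecondaryNameNode

29041 ResourceManager

34191 NodeManager

5.List the root of the HDFS file system

$>hdfs dfs -ls /

6.Create a directory

$>hdfs dfs -mkdir -p /user/centos/hadoop

7.Browse the Hadoop file system through the web UI

http://localhost:50070/

8.Stop all Hadoop daemons

$>stop-all.sh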
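Reading the jps listing by eye works, but a short filter makes a missing daemon obvious. The sketch below assumes the two-column "pid name" output shown in these notes; missing_daemons is a hypothetical helper that reads such a listing on standard input and prints every expected daemon that does not appear.

```shell
#!/bin/sh
# Hypothetical helper: print the expected Hadoop daemons that are absent
# from a jps listing read on stdin; exit status is 1 if any is missing.
# usage: jps | missing_daemons
missing_daemons() {
    running=$(awk '{print $2}')   # second column of jps output = process name
    status=0
    for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # -x matches the whole line, so NameNode does not match SecondaryNameNode
        if ! printf '%s\n' "$running" | grep -qx "$d"; then
            echo "$d"
            status=1
        fi
    done
    return $status
}
```

An empty result with exit status 0 means all five daemons from the listing are up; any name printed points at the log file to look at first.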

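CentOS firewall operations

-------------------

[CentOS 6.5 and earlier]

$>sudo service firewalld stop //stop the service

$>sudo service firewalld start //start the service

$>sudo service firewalld status //show the status

Note: firewalld was introduced with CentOS 7; on CentOS 6 the firewall service is iptables, so use that name in the service commands there.

[CentOS 7]

$>sudo systemctl enable firewalld.service //enable start at boot

$>sudo systemctl disable firewalld.service //disable start at boot

$>sudo systemctl start firewalld.service //start the firewall

$>sudo systemctl stop firewalld.service //stop the firewall

$>sudo systemctl status firewalld.service //show the firewall status

[Start at boot, chkconfig form]

$>sudo chkconfig firewalld on //enable start at boot

$>sudo chkconfig firewalld off //disable start at boot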

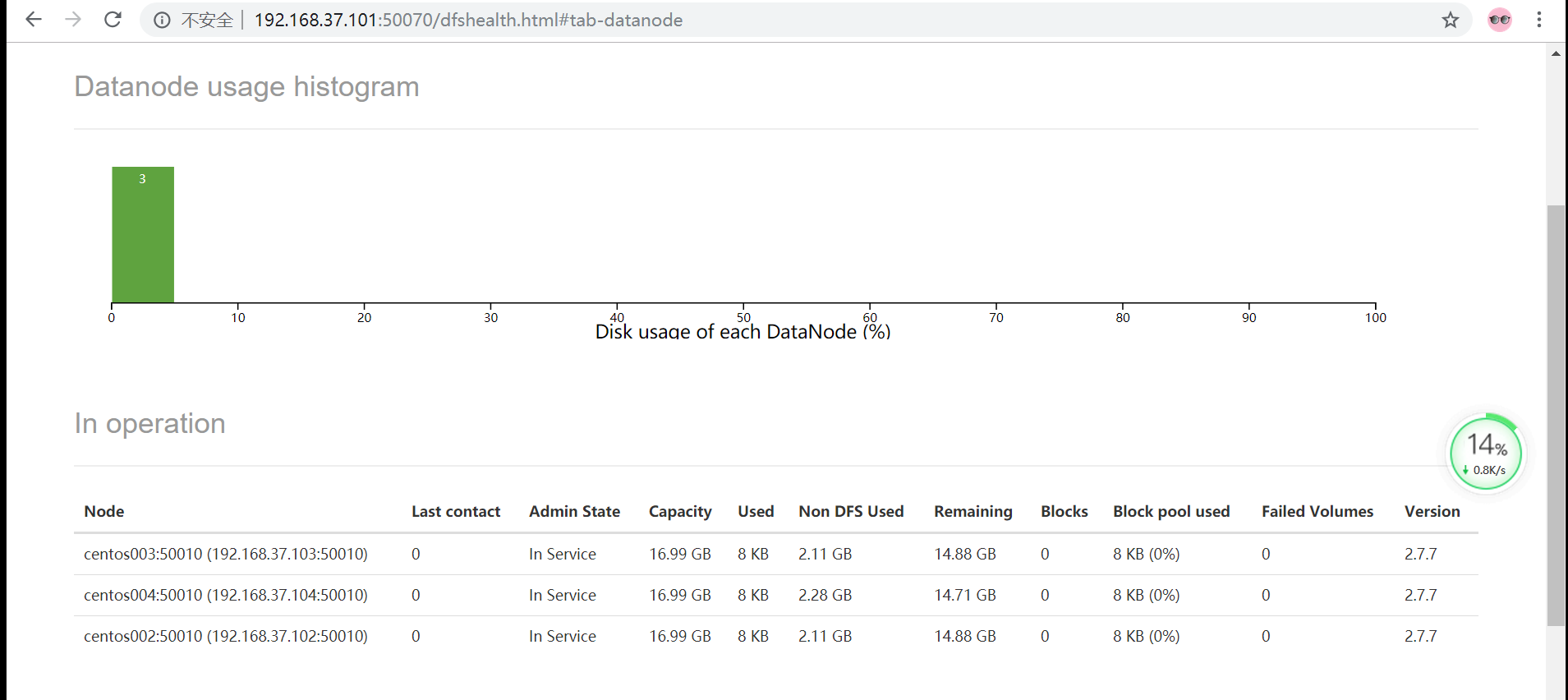

Hadoop ports (2.x defaults)

-----------------

50070 //namenode http port

50075 //datanode http port

50090 //secondarynamenode http port

8020 //namenode rpc port

50010 //datanode data transfer port
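A quick way to see which of these ports are actually listening is to try a TCP connection to each. The sketch below relies on the /dev/tcp pseudo-path, which is a bash feature (under a plain POSIX sh every port would simply be reported as closed); port_open and report_ports are hypothetical helper names, and the labels in the list are just short tags for the ports listed here.

```shell
#!/bin/bash
# Hypothetical helper: succeed if a TCP connection to <host>:<port> opens.
# The subshell closes the descriptor again as soon as it exits.
port_open() {
    (exec 3<>"/dev/tcp/$1/$2") 2>/dev/null
}

# Print one line per Hadoop port: label, port number, open/closed.
# usage: report_ports <host>
report_ports() {
    host=$1
    for entry in namenode-http:50070 datanode-http:50075 \
                 secondarynamenode-http:50090 namenode-rpc:8020 \
                 datanode-data:50010; do
        name=${entry%%:*}; port=${entry#*:}
        if port_open "$host" "$port"; then state=open; else state=closed; fi
        printf '%-24s %5s %s\n' "$name" "$port" "$state"
    done
}

# Example (not executed here):
#   report_ports localhost
```

A port reported as closed on a remote host can mean either that the daemon is down or that a firewall is blocking the connection, so check jps on that host before changing firewall settings.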

The four Hadoop modules and their daemons

-------------------

common

hdfs //namenode + datanode + secondarynamenode

mapreduce

yarn //resourcemanager + nodemanager

3.Fully distributed mode

Change the hostname

-------------------

1./etc/hostname

s201

2./etc/hosts

127.0.0.1 localhost

192.168.231.201 s201

192.168.231.202 s202

192.168.231.203 s203

192.168.231.204 s204

Fully distributed setup

--------------------

1.Clone three client VMs (CentOS 7)

Right-click centos-7 --> Manage -> Clone -> ... -> Full clone

2.Start the clients

3.Enable shared folders for the guest.

4.Edit the hostname and IP address files

[/etc/hostname]

s202

[/etc/sysconfig/network-scripts/ifcfg-ethxxxx]

...

IPADDR=..

5.Restart the network service

$>sudo service network restart

6.Edit /etc/resolv.conf

nameserver 192.168.231.2

7.Repeat steps 3 to 6 on each of the other clones.

Prepare SSH on the fully distributed hosts

-------------------------

1.Delete /home/centos/.ssh/* on every host

2.Generate a key pair on s201

$>ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

3.Copy s201's public key file id_rsa.pub to s201 itself and to s202 through s204, storing it as /home/centos/.ssh/authorized_keys

$>cd ~/.ssh

$>scp id_rsa.pub centos@s201:/home/centos/.ssh/authorized_keys

$>scp id_rsa.pub centos@s202:/home/centos/.ssh/authorized_keys

$>scp id_rsa.pub centos@s203:/home/centos/.ssh/authorized_keys

$>scp id_rsa.pub centos@s204:/home/centos/.ssh/authorized_keys

4.Configure fully distributed mode (${HADOOP_HOME}/etc/hadoop/)

[core-site.xml]

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://s201/</value>

</property>

</configuration>

[hdfs-site.xml]

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

</configuration>

[mapred-site.xml]

Unchanged from the pseudo-distributed setup.

[yarn-site.xml]

<?xml version="1.0"?>

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>s201</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

[slaves]

s202

s203

s204

[hadoop-env.sh]

...

export JAVA_HOME=/soft/jdk

...
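5.Distribute the configuration (this assumes the files were edited in the full directory and that the hadoop symbolic link on every host points to full)

$>cd /soft/hadoop/etc/

$>scp -r full centos@s202:/soft/hadoop/etc/

$>scp -r full centos@s203:/soft/hadoop/etc/

$>scp -r full centos@s204:/soft/hadoop/etc/

6.Delete the temporary directory

$>cd /tmp

$>rm -rf hadoop-centos

$>ssh s202 rm -rf /tmp/hadoop-centos

$>ssh s203 rm -rf /tmp/hadoop-centos

$>ssh s204 rm -rf /tmp/hadoop-centos

7.Delete the Hadoop logs (the remote pattern is quoted so that the * is expanded on the remote host, not locally)

$>cd /soft/hadoop/logs

$>rm -rf *

$>ssh s202 'rm -rf /soft/hadoop/logs/*'

$>ssh s203 'rm -rf /soft/hadoop/logs/*'

$>ssh s204 'rm -rf /soft/hadoop/logs/*'

8.Format the file system

$>hadoop namenode -format

9.Start the Hadoop daemons

$>start-all.sh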
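Steps 5 to 7 repeat the same command for each worker, which is a natural fit for a loop. The sketch below is one possible shape, not a Hadoop tool: on_workers and push_conf are hypothetical helpers, the host list and the centos user are taken from these notes, and setting DRY_RUN=1 prints the commands instead of running them so the loop can be checked first.

```shell
#!/bin/sh
# Worker hosts as listed in the slaves file of these notes.
WORKERS="s202 s203 s204"

# Hypothetical helper: run a command on every worker over ssh.
# usage: on_workers <command...>     (DRY_RUN=1 prints instead of running)
on_workers() {
    for h in $WORKERS; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "ssh $h $*"
        else
            ssh "$h" "$@" || echo "failed on $h" >&2
        fi
    done
}

# Hypothetical helper: copy a local directory to every worker.
# usage: push_conf <local_dir> <remote_parent_dir>
push_conf() {
    for h in $WORKERS; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "scp -r $1 centos@$h:$2"
        else
            scp -r "$1" "centos@$h:$2" || echo "failed on $h" >&2
        fi
    done
}

# Examples (not executed here):
#   push_conf full /soft/hadoop/etc/
#   on_workers rm -rf /tmp/hadoop-centos
#   on_workers 'rm -rf /soft/hadoop/logs/*'
```

Quoting the last example keeps the * from being expanded on the local machine; ssh passes the string to the remote shell, which expands it there.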

Check the result in the web UI (the namenode runs on s201, so for example http://s201:50070/).