简单demo的代码路径在tensorflow ensorflowg3doc utorialsword2vecword2vec_basic.py

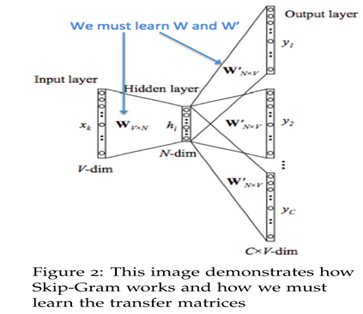

Sikp gram方式的model思路

http://tensorflow.org/tutorials/word2vec/index.md

另外可以参考cs224d课程的课件。

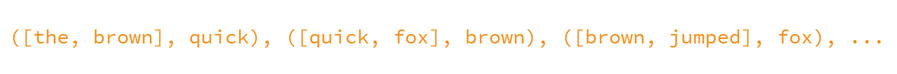

窗口设置为左右1个词

对应skip gram模型 就是一个单词预测其周围单词(cbow模型是 输入一系列context词,预测一个中心词)

Quick -> the quick -> brown

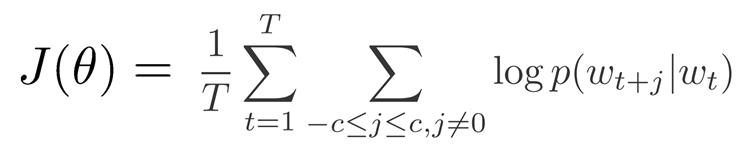

Skip gram的训练目标cost function是

对应

但是这样太耗时了 每一步训练时间代价都是O(VocabularySize)

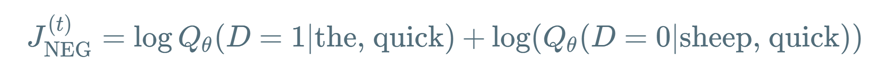

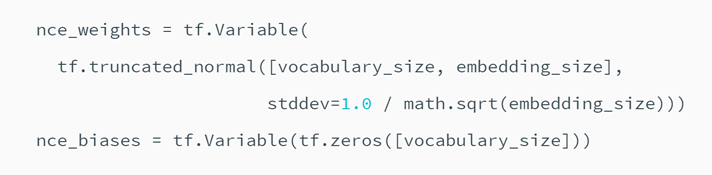

于是我们采用了 nce(noise-contrastive estimation)的方式,也就是负样本采样,采用某种方式随机生成词作为负样本,比如 quick -> sheep ,sheep作为负样本,假设我们就取一个负样本

- 输入数据 这里是 分隔好的单词

- 读入单词存储到list中

- 统计词频 0号位置给 unknown, 其余按照频次由高到低排列,unknown的获取按照预设词典大小 比如50000,则频次排序靠后于50000的都视为unknown

建立好 key->id id->key的双向索引map

4. 产生一组training batch

batch_size = 128

embedding_size = 128 # Dimension of the embedding vector.

skip_window = 1 # How many words to consider left and right.

num_skips = 2 # How many times to reuse an input to generate a label.

Batch_size每次sgd训练时候扫描的数据大小, embedding_size 词向量的大小,skip_window 窗口大小,

Num_skips = 2 表示input用了产生label的次数限制

demo中默认是2, 可以设置为1 对比下

默认2的时候

batch, labels = generate_batch(batch_size=8, num_skips=2, skip_window=1)

for i in range(8):

print(batch[i], '->', labels[i, 0])

print(reverse_dictionary[batch[i]], '->', reverse_dictionary[labels[i, 0]])

Sample data [5239, 3084, 12, 6, 195, 2, 3137, 46, 59, 156]

3084 -> 5239

originated -> anarchism

3084 -> 12

originated -> as

12 -> 6

as -> a

12 -> 3084

as -> originated

6 -> 195

a -> term

6 -> 12

a -> as

195 -> 2

term -> of

195 -> 6

term -> a

3084左侧出现2次,对应窗口左右各1

设置1的时候

batch, labels = generate_batch(batch_size=8, num_skips=1, skip_window=1)

for i in range(8):

print(batch[i], '->', labels[i, 0])

print(reverse_dictionary[batch[i]], '->', reverse_dictionary[labels[i, 0]])

Sample data [5239, 3084, 12, 6, 195, 2, 3137, 46, 59, 156]

3084 -> 12

originated -> as

12 -> 3084

as -> originated

6 -> 12

a -> as

195 -> 2

term -> of

2 -> 3137

of -> abuse

3137 -> 46

abuse -> first

46 -> 59

first -> used

59 -> 156

3084左侧只出现1次

# Step 4: Function to generate a training batch for the skip-gram model.

def generate_batch(batch_size, num_skips, skip_window):

global data_index

assert batch_size % num_skips == 0

assert num_skips <= 2 * skip_window

batch = np.ndarray(shape=(batch_size), dtype=np.int32)

labels = np.ndarray(shape=(batch_size, 1), dtype=np.int32)

span = 2 * skip_window + 1 # [ skip_window target skip_window ]

buffer = collections.deque(maxlen=span)

for _ in range(span):

buffer.append(data[data_index])

data_index = (data_index + 1) % len(data)

for i in range(batch_size // num_skips):

target = skip_window # target label at the center of the buffer

targets_to_avoid = [ skip_window ]

for j in range(num_skips):

while target in targets_to_avoid:

target = random.randint(0, span - 1)

targets_to_avoid.append(target)

batch[i * num_skips + j] = buffer[skip_window]

labels[i * num_skips + j, 0] = buffer[target]

buffer.append(data[data_index])

data_index = (data_index + 1) % len(data)

return batch, labels

batch, labels = generate_batch(batch_size=8, num_skips=2, skip_window=1)

for i in range(8):

print(batch[i], '->', labels[i, 0])

print(reverse_dictionary[batch[i]], '->', reverse_dictionary[labels[i, 0]])

就是对于一个中心词 在window范围 随机选取 num_skips个词,产生一系列的

(input_id, output_id) 作为(batch_instance, label)

这些都是正样本

训练准备,

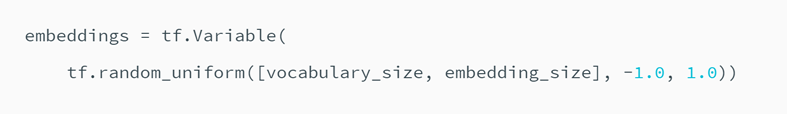

Input embedding W

Output embedding W^

后面code都比较容易理解,tf定义了nce_loss来自动处理,每次会自动添加随机负样本

num_sampled = 64 # Number of negative examples to sample.

graph = tf.Graph()

with graph.as_default():

# Input data.

train_inputs = tf.placeholder(tf.int32, shape=[batch_size])

train_labels = tf.placeholder(tf.int32, shape=[batch_size, 1])

valid_dataset = tf.constant(valid_examples, dtype=tf.int32)

# Construct the variables.

embeddings = tf.Variable(

tf.random_uniform([vocabulary_size, embedding_size], -1.0, 1.0))

nce_weights = tf.Variable(

tf.truncated_normal([vocabulary_size, embedding_size],

stddev=1.0 / math.sqrt(embedding_size)))

nce_biases = tf.Variable(tf.zeros([vocabulary_size]))

# Look up embeddings for inputs.

embed = tf.nn.embedding_lookup(embeddings, train_inputs)

# Compute the average NCE loss for the batch.

# tf.nce_loss automatically draws a new sample of the negative labels each

# time we evaluate the loss.

loss = tf.reduce_mean(

tf.nn.nce_loss(nce_weights, nce_biases, embed, train_labels,

num_sampled, vocabulary_size))

# Construct the SGD optimizer using a learning rate of 1.0.

optimizer = tf.train.GradientDescentOptimizer(1.0).minimize(loss)

训练过程利用embedding矩阵的乘法计算了不同词向量的欧式距离 并计算了高频几个词对应的距离最近的词展示

最后调用 skitlearn的TSNE模块 进行降维到2元,绘图展示。