【An accelerator for softmax classifiers】

https://www.tensorflow.org/api_docs/python/tf/nn/sampled_softmax_loss

This is a faster way to train a softmax classifier over a huge number of classes.

【The set of output classes is too large, so sample a subset】

https://www.tensorflow.org/api_guides/python/nn#Candidate_Sampling

Do you want to train a multiclass or multilabel model with thousands or millions of output classes (for example, a language model with a large vocabulary)? Training with a full Softmax is slow in this case, since all of the classes are evaluated for every training example. Candidate Sampling training algorithms can speed up your step times by only considering a small randomly-chosen subset of contrastive classes (called candidates) for each batch of training examples.
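The idea can be sketched in plain Python for a single training example. This is a minimal illustration, not the TensorFlow implementation. The names `sampled_softmax_loss`, `logit_fn`, and `toy_logit` are hypothetical, and the negatives are assumed to be drawn uniformly (real samplers are often frequency-based and then need a log Q(y) correction on each logit).

```python
import math
import random

def sampled_softmax_loss(logit_fn, target, num_classes, num_sampled, rng):
    """Cross-entropy over the target class plus `num_sampled` random negatives.

    logit_fn(y) returns the score F(x, y) for a single class y, so only
    num_sampled + 1 classes are scored instead of all num_classes.
    """
    negatives = []
    while len(negatives) < num_sampled:
        c = rng.randrange(num_classes)
        if c != target:  # keep the true class out of the negatives
            negatives.append(c)
    logits = [logit_fn(y) for y in [target] + negatives]
    # Assumption: uniform sampling. The log Q(y) correction is then the same
    # constant for every candidate and cancels inside the softmax.
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_z - logits[0]

# Toy usage: 100,000 classes, but only 65 scores are computed.
calls = []
def toy_logit(y):
    calls.append(y)
    return math.sin(y)  # stand-in for a real model's F(x, y)

loss = sampled_softmax_loss(toy_logit, target=42, num_classes=100_000,
                            num_sampled=64, rng=random.Random(0))
print(len(calls), loss > 0)
```

The per-example cost depends on `num_sampled`, not on `num_classes`, which is where the step-time speedup comes from.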

https://www.tensorflow.org/extras/candidate_sampling.pdf

【Computing F(x, y) for every class y ∈ L for every training example: this is the time-consuming step, and the problem to be solved】

What is Candidate Sampling? Say we have a multiclass or multilabel problem where each training example (x_i, T_i) consists of a context x_i and a small (multi)set of target classes T_i out of a large universe L of possible classes. For example, the problem might be to predict the next word (or the set of future words) in a sentence given the previous words.

We wish to learn a compatibility function F(x, y) which says something about the compatibility of a class y with a context x. For example, F(x, y) could be the probability of the class given the context.

“Exhaustive” training methods such as softmax and logistic regression require us to compute F(x, y) for every class y ∈ L for every training example. When |L| is very large, this can be prohibitively expensive.
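As a rough illustration of where the cost comes from, assume F(x, y) is used as a softmax logit (an assumption for this sketch; the excerpt leaves the exact form of F open). The normalizer then sums over every class in L, while a sampled variant sums only over a candidate set C_i made of the targets T_i plus a sampled set S_i (the symbols S_i and C_i are introduced here for illustration):

```latex
% Full softmax: the denominator touches all |L| classes for each example.
P(y \mid x_i) = \frac{\exp F(x_i, y)}{\sum_{y' \in L} \exp F(x_i, y')}
\qquad \text{cost per example: } O(|L|)

% Candidate sampling: normalize over C_i = T_i \cup S_i only.
\tilde{P}(y \mid x_i, C_i) = \frac{\exp F(x_i, y)}{\sum_{y' \in C_i} \exp F(x_i, y')}
\qquad \text{cost per example: } O(|C_i|), \quad |C_i| \ll |L|
```

With a non-uniform sampler, each logit is usually corrected by subtracting the log of its sampling probability before normalizing.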

【Decoding with a very large target vocabulary by selecting only a small subset of the whole target vocabulary: the subset】

https://arxiv.org/pdf/1412.2007.pdf

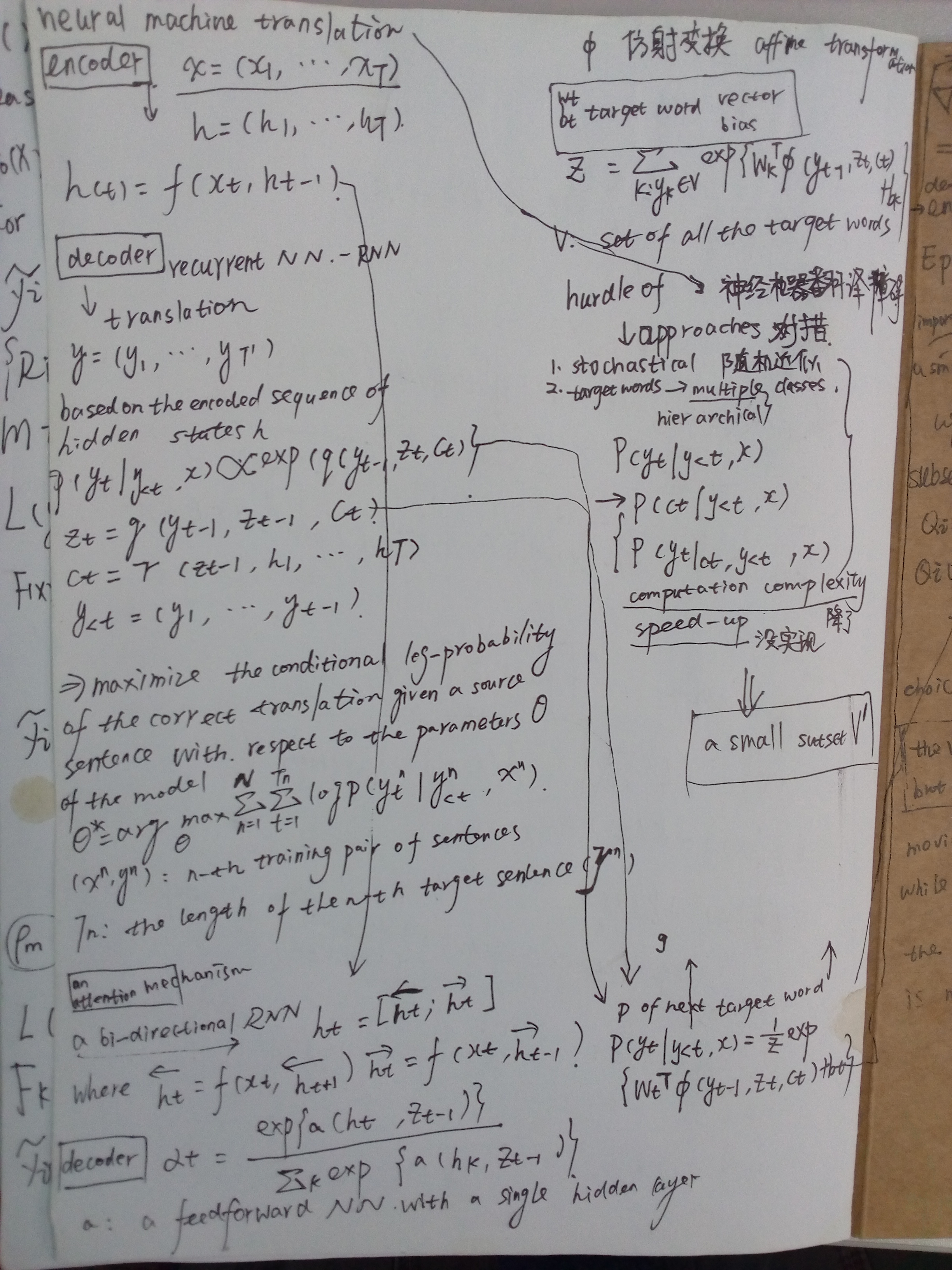

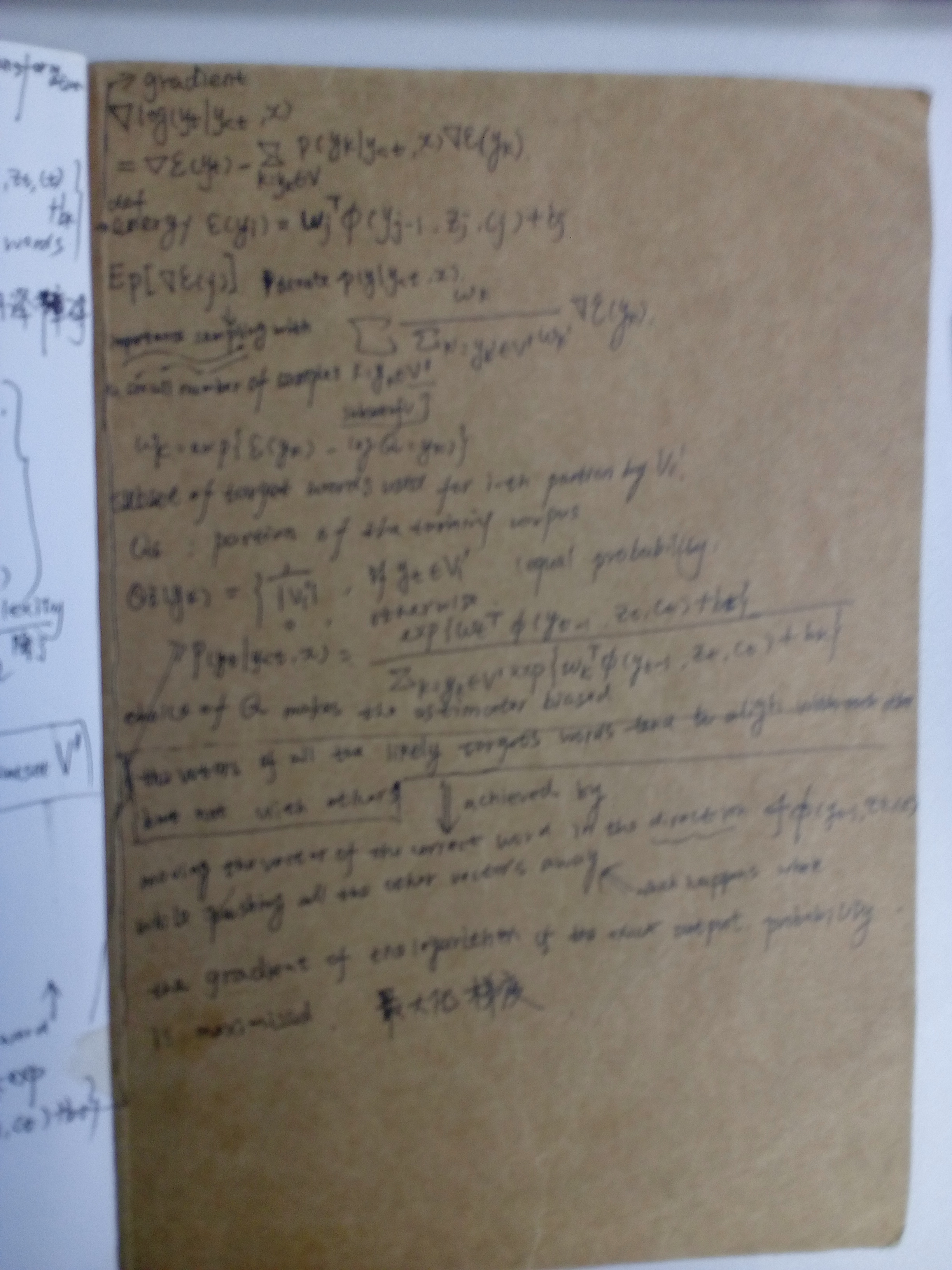

Neural machine translation, a recently proposed approach to machine translation based purely on neural networks, has shown promising results compared to the existing approaches such as phrase-based statistical machine translation. Despite its recent success, neural machine translation has its limitation in handling a larger vocabulary, as training complexity as well as decoding complexity increase proportionally to the number of target words. In this paper, we propose a method that allows us to use a very large target vocabulary without increasing training complexity, based on importance sampling. We show that decoding can be efficiently done even with the model having a very large target vocabulary by selecting only a small subset of the whole target vocabulary. The models trained by the proposed approach are empirically found to outperform the baseline models with a small vocabulary as well as the LSTM-based neural machine translation models. Furthermore, when we use the ensemble of a few models with very large target vocabularies, we achieve the state-of-the-art translation performance (measured by BLEU) on the English->German translation and almost as high performance as state-of-the-art English->French translation system.
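The decoding-side idea in the abstract, scoring only a small subset of the target vocabulary, can be sketched as follows. This is a toy illustration under stated assumptions and not the paper's implementation: the helper names `build_candidates` and `decode_step`, the toy dictionary, and the scores are all hypothetical, and the candidate set is assumed to be a shortlist of frequent target words plus a few dictionary translations of the source words.

```python
def build_candidates(source_words, frequent_targets, dictionary, per_word=2):
    """Union of a frequent-word shortlist and a few dictionary translations
    per source word (all inputs here are hypothetical toy data)."""
    candidates = set(frequent_targets)
    for w in source_words:
        candidates.update(dictionary.get(w, [])[:per_word])
    return candidates

def decode_step(score_fn, candidates):
    """Pick the next word by scoring only the candidate subset."""
    # sorted() makes tie-breaking deterministic.
    return max(sorted(candidates), key=score_fn)

# Toy usage: "katze" has the highest score overall but is never evaluated,
# because it is not in the candidate subset for this source sentence.
toy_scores = {"das": 0.1, "ist": 0.5, "haus": 2.0, "katze": 3.0}
toy_dictionary = {"house": ["haus", "heim"]}
candidates = build_candidates(["house"], ["das", "ist"], toy_dictionary)
best = decode_step(lambda w: toy_scores.get(w, 0.0), candidates)
print(sorted(candidates), best)
```

The trade-off is that a word outside the candidate set can never be produced, so the quality of the subset bounds the quality of the output.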