A collection of good articles on image processing, machine learning, and deep learning, to study, review, and admire from time to time...

Images (traditional CV):

1. SIFT features

https://www.cnblogs.com/wangguchangqing/p/4853263.html

http://shartoo.github.io/SIFT-feature/?FbmNv=5d9f3d0c8ca5090a

https://blog.csdn.net/u010440456/article/details/81483145
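The first stage of SIFT builds a Difference-of-Gaussians (DoG) scale space and keeps points that are extrema among their 26 neighbours in space and scale. Below is a minimal numpy sketch of that stage only. It assumes a grayscale float image in [0, 1] and simplifies Lowe's method: every level is blurred directly from the input, and there is no sub-pixel refinement, edge rejection, orientation assignment, or descriptor. The function names are hypothetical. `sigma0=1.6`, `s=3` and the 0.03 contrast threshold follow the values in Lowe's paper.

```python
import numpy as np

def gaussian_kernel(sigma):
    # 1-D Gaussian truncated at about 3 sigma, normalised to sum to 1
    radius = max(1, int(3 * sigma + 0.5))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()

def blur(img, sigma):
    # Separable Gaussian blur with edge padding; output has the input's shape
    k = gaussian_kernel(sigma)
    pad = len(k) // 2
    p = np.pad(img, pad, mode="edge")
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, p)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)

def dog_octave(img, sigma0=1.6, s=3):
    # s + 3 Gaussian levels give s + 2 DoG levels, so extrema can be searched in s scales
    k = 2 ** (1.0 / s)
    gaussians = [blur(img, sigma0 * k ** i) for i in range(s + 3)]
    return np.stack([g2 - g1 for g1, g2 in zip(gaussians, gaussians[1:])])

def dog_extrema(dog, threshold=0.03):
    # Candidate keypoint: max or min of its 3x3x3 neighbourhood, with enough contrast
    points = []
    for sc in range(1, dog.shape[0] - 1):
        for y in range(1, dog.shape[1] - 1):
            for x in range(1, dog.shape[2] - 1):
                v = dog[sc, y, x]
                if abs(v) < threshold:
                    continue
                cube = dog[sc - 1:sc + 2, y - 1:y + 2, x - 1:x + 2]
                if v == cube.max() or v == cube.min():
                    points.append((sc, y, x))
    return points
```

A full octave pyramid would repeat `dog_octave` on the image downsampled by 2.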

2. HOG features

http://shartoo.github.io/HOG-feature/?FbmNv=5d9f3d48e0647071

https://senitco.github.io/2017/06/10/image-feature-hog/

https://www.cnblogs.com/aoru45/p/9748481.html

https://zhuanlan.zhihu.com/p/40960756
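HOG computes gradients, accumulates magnitude-weighted orientation histograms per cell, and then L2-normalises overlapping blocks of cells. Here is a minimal numpy sketch assuming a grayscale image and the common Dalal-Triggs settings (8x8-pixel cells, 9 unsigned bins over 0-180°, 2x2-cell blocks). It simplifies the original by using hard binning (no bilinear vote interpolation) and no Gaussian block weighting; the function names are hypothetical.

```python
import numpy as np

def hog_cells(img, cell=8, bins=9):
    img = img.astype(float)
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    gx[:, 1:-1] = img[:, 2:] - img[:, :-2]      # [-1, 0, 1] filter along x
    gy[1:-1, :] = img[2:, :] - img[:-2, :]      # [-1, 0, 1]^T filter along y
    mag = np.hypot(gx, gy)
    ang = np.rad2deg(np.arctan2(gy, gx)) % 180  # unsigned orientation in [0, 180)
    bin_idx = (ang // (180 / bins)).astype(int) % bins
    ch, cw = img.shape[0] // cell, img.shape[1] // cell
    hist = np.zeros((ch, cw, bins))
    for i in range(ch):
        for j in range(cw):
            sl = (slice(i * cell, (i + 1) * cell), slice(j * cell, (j + 1) * cell))
            hist[i, j] = np.bincount(bin_idx[sl].ravel(), weights=mag[sl].ravel(),
                                     minlength=bins)
    return hist

def hog_descriptor(hist, block=2, eps=1e-6):
    # Slide a block x block window over the cells (stride 1 cell) and L2-normalise each block
    feats = []
    for i in range(hist.shape[0] - block + 1):
        for j in range(hist.shape[1] - block + 1):
            v = hist[i:i + block, j:j + block].ravel()
            feats.append(v / np.sqrt(np.sum(v ** 2) + eps ** 2))
    return np.concatenate(feats)
```

For the classic 64x128 pedestrian window this gives 15 x 7 blocks x 36 values = 3780 features.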

3. Image pyramids

http://shartoo.github.io/image-pramid/?FbmNv=5d9f3d6e990e41bb

https://zhuanlan.zhihu.com/p/32815143
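A Gaussian pyramid repeatedly blurs and halves the image. A Laplacian pyramid stores the detail lost at each step, `L_i = G_i - up(G_{i+1})`, so the original can be rebuilt exactly. This minimal numpy sketch uses the 5-tap binomial kernel [1, 4, 6, 4, 1]/16 that is commonly used for this. As a simplifying assumption, it upsamples by pixel repetition followed by blurring rather than zero insertion. The function names are hypothetical.

```python
import numpy as np

K = np.array([1, 4, 6, 4, 1], dtype=float) / 16   # binomial approximation of a Gaussian

def blur(img):
    p = np.pad(img, 2, mode="reflect")
    rows = np.apply_along_axis(lambda r: np.convolve(r, K, mode="valid"), 1, p)
    return np.apply_along_axis(lambda c: np.convolve(c, K, mode="valid"), 0, rows)

def down(img):
    return blur(img)[::2, ::2]                    # blur, then keep every other pixel

def up(img, shape):
    big = np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)[:shape[0], :shape[1]]
    return blur(big)

def pyramids(img, levels=3):
    gauss = [img.astype(float)]
    for _ in range(levels - 1):
        gauss.append(down(gauss[-1]))
    lap = [g - up(g_next, g.shape) for g, g_next in zip(gauss, gauss[1:])]
    lap.append(gauss[-1])                         # coarsest level is stored as-is
    return gauss, lap

def reconstruct(lap):
    img = lap[-1]
    for detail in reversed(lap[:-1]):
        img = detail + up(img, detail.shape)
    return img
```

Reconstruction is exact because the same `up` is used when building and when collapsing the pyramid.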

4. Haar features

http://shartoo.github.io/img-haar-feature/

https://senitco.github.io/2017/06/15/image-feature-haar/

https://juejin.im/post/5b0e6f83f265da0910791a38

https://blog.csdn.net/zouxy09/article/details/7929570
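Haar-like features are differences of rectangle sums, and the integral image (as used by Viola-Jones) makes every rectangle sum cost four look-ups. Below is a minimal numpy sketch of the integral image and a two-rectangle edge feature; the function names and the "left minus right" layout are illustrative choices.

```python
import numpy as np

def integral_image(img):
    # ii[y, x] = sum of img[:y, :x]; the extra zero row/column removes edge cases
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1))
    ii[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    return ii

def rect_sum(ii, y, x, h, w):
    # Sum of img[y:y+h, x:x+w] with four look-ups, independent of rectangle size
    return ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x]

def haar_two_rect(ii, y, x, h, w):
    # Two-rectangle (edge) feature: left half minus right half; w assumed even
    half = w // 2
    return rect_sum(ii, y, x, h, half) - rect_sum(ii, y, x + half, h, half)
```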

5. Harris corners

https://www.cnblogs.com/ronny/p/4009425.html

https://senitco.github.io/2017/06/18/image-feature-harris/

https://zhuanlan.zhihu.com/p/42490675

https://zhuanlan.zhihu.com/p/36382429
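The Harris detector sums products of image gradients over a window into the structure tensor M and scores each pixel with `R = det(M) - k * trace(M)^2`: R is large and positive at corners, negative along edges, and near zero in flat areas. This minimal numpy sketch assumes a 3x3 box window instead of the usual Gaussian window and uses `k = 0.04`, a common choice; the function names are hypothetical.

```python
import numpy as np

def box_sum(a, r=1):
    # Sum over a (2r+1) x (2r+1) window with zero padding at the borders
    p = np.pad(a, r)
    h, w = a.shape
    out = np.zeros_like(a, dtype=float)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += p[dy:dy + h, dx:dx + w]
    return out

def harris_response(img, k=0.04, r=1):
    iy, ix = np.gradient(img.astype(float))   # derivatives along rows (y) and columns (x)
    sxx = box_sum(ix * ix, r)                 # entries of the structure tensor M
    syy = box_sum(iy * iy, r)
    sxy = box_sum(ix * iy, r)
    det = sxx * syy - sxy ** 2
    trace = sxx + syy
    return det - k * trace ** 2
```

On a white square in a black image, `R` peaks at the square's corner and is negative along its sides.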

Machine learning:

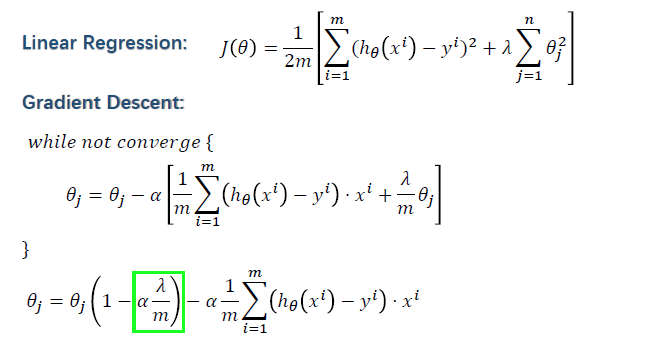

1. Linear Regression

https://zhuanlan.zhihu.com/p/45023349
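Linear regression minimises the mean squared error, either iteratively with gradient descent or in closed form (normal equations / least squares). This minimal numpy sketch shows both on the cost `(1/2m)||X·θ - y||²` with a prepended bias column. The learning rate and epoch count are illustrative, and the function names are hypothetical.

```python
import numpy as np

def add_bias(X):
    return np.hstack([np.ones((X.shape[0], 1)), X])   # column of ones for the intercept

def fit_linear_gd(X, y, lr=0.1, epochs=2000):
    Xb = add_bias(X)
    theta = np.zeros(Xb.shape[1])
    m = len(y)
    for _ in range(epochs):
        grad = Xb.T @ (Xb @ theta - y) / m   # gradient of (1/2m)||X theta - y||^2
        theta -= lr * grad
    return theta

def fit_linear_normal(X, y):
    # Least-squares solution, equivalent to the normal equations when X^T X is invertible
    return np.linalg.lstsq(add_bias(X), y, rcond=None)[0]
```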

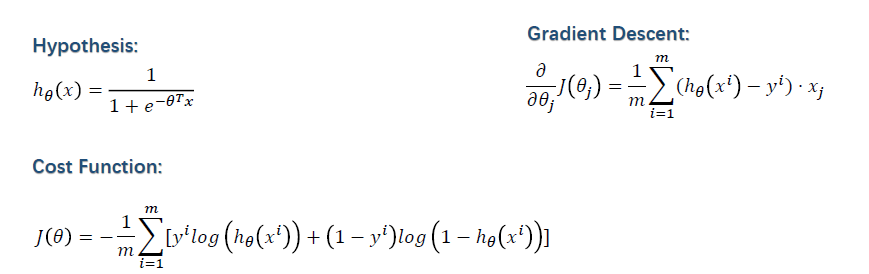

2. Logistic Regression

https://chenrudan.github.io/blog/2016/01/09/logisticregression.html

https://www.jiqizhixin.com/articles/2018-05-13-3

https://zhuanlan.zhihu.com/p/28408516
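Logistic regression passes a linear score through the sigmoid and minimises the mean cross-entropy. The gradient has the same form as in linear regression, `Xᵀ(p - y)/m`. Below is a minimal numpy sketch with plain batch gradient descent. The hyperparameters are illustrative and the function names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def add_bias(X):
    return np.hstack([np.ones((X.shape[0], 1)), X])

def fit_logistic(X, y, lr=0.5, epochs=3000):
    Xb = add_bias(X)
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        p = sigmoid(Xb @ w)
        w -= lr * Xb.T @ (p - y) / len(y)   # gradient of the mean cross-entropy
    return w

def predict_logistic(w, X):
    return (sigmoid(add_bias(X) @ w) >= 0.5).astype(int)
```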

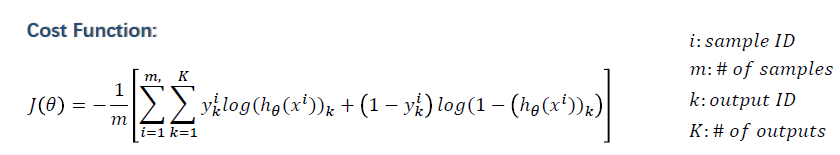

3. Neural Network

https://clyyuanzi.gitbooks.io/julymlnotes/content/dl_nn.html

https://www.cnblogs.com/subconscious/p/5058741.html

Neural network loss function:

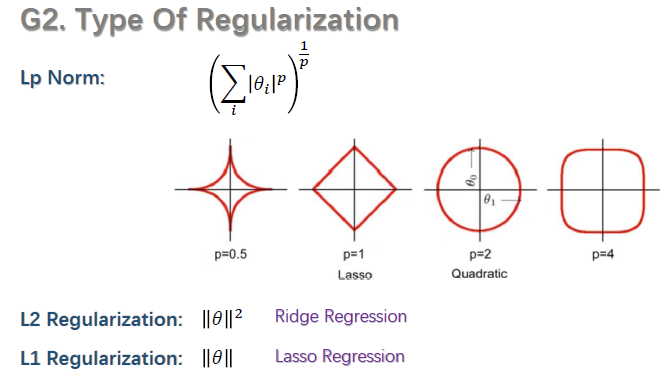

4. Regression and Regularization

https://www.zhihu.com/question/20924039

https://zhuanlan.zhihu.com/p/29957294

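Regularized loss functions for linear regression, logistic regression, and neural networks:

The regularization term slows down changes in the gradient: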

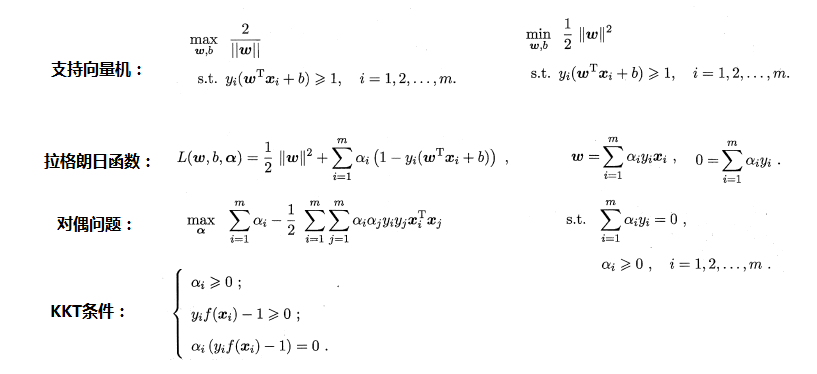
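With an L2 penalty, each gradient-descent step first shrinks the weights by a constant factor ("weight decay") before applying the data gradient. Equivalently, the optimum is pulled towards zero. This minimal numpy sketch shows both views on ridge regression. It assumes centred data with no bias term, so every weight is penalised, and the function names are hypothetical.

```python
import numpy as np

def l2_gd_step(w, grad_data, lr, lam):
    # One step on data_loss + (lam/2)||w||^2: shrink by (1 - lr*lam), then follow the data gradient
    return (1 - lr * lam) * w - lr * grad_data

def ridge(X, y, lam):
    # Closed-form minimiser of ||Xw - y||^2 + lam * ||w||^2 (X and y assumed centred)
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)
```

As `lam` grows, the norm of the ridge solution shrinks monotonically. `lam = 0` recovers ordinary least squares.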

5. SVM(support vector machine)

Lagrange multiplier method

Dual problem:

KKT conditions:

How SVM works:

https://www.jiqizhixin.com/articles/2018-10-17-20

https://www.cnblogs.com/leftnoteasy/archive/2011/05/02/basic-of-svm.html

https://wizardforcel.gitbooks.io/dm-algo-top10/content/svm-1.html

https://blog.csdn.net/v_JULY_v/article/details/7624837

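The SVM formulation, Lagrangian, dual problem, and KKT conditions:

The soft-margin SVM formulation, Lagrangian, dual problem, and KKT conditions:

Kernel functions for nonlinear SVM:

Using SVM in code (sklearn): a linear SVM and a nonlinear SVM with a kernel function

A Python implementation of SVM: https://blog.csdn.net/laobai1015/article/details/82763033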
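The soft-margin SVM can also be trained directly in its primal form, `(λ/2)||w||² + mean(max(0, 1 - yᵢ(w·xᵢ + b)))`, by subgradient descent on the hinge loss. The minimal numpy sketch below takes that route. It is a swapped-in technique: it does not use the dual/SMO solver discussed in the links above, and it handles only the linear (no-kernel) case. It assumes labels in {-1, +1}. The hyperparameters and function names are illustrative.

```python
import numpy as np

def fit_linear_svm(X, y, lam=0.01, lr=0.1, epochs=1000):
    """Subgradient descent on the primal soft-margin objective; y must be in {-1, +1}."""
    m, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1                 # samples inside the margin or misclassified
        grad_w = lam * w - (y[active][:, None] * X[active]).sum(axis=0) / m
        grad_b = -y[active].sum() / m
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def predict_svm(w, b, X):
    return np.where(X @ w + b >= 0, 1, -1)
```

Only samples with margin below 1 contribute to the update, which mirrors the fact that only support vectors have nonzero multipliers in the dual.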

6. k-means algorithm

https://www.csuldw.com/2015/06/03/2015-06-03-ml-algorithm-K-means/

https://www.cnblogs.com/pinard/p/6164214.html

k-Means++

https://zhuanlan.zhihu.com/p/32375430

Python implementations of k-means and k-means++:

https://github.com/silence-cho/cv-learning/blob/master/week4/assignment.py

https://github.com/ViperBling/CV_Course/blob/master/Week5/K-Means%2B%2B/K-Means.py
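k-means++ changes only the initialisation. The first centre is picked uniformly at random, and each further centre is picked with probability proportional to its squared distance from the nearest centre chosen so far. Lloyd's iterations (assign points, recompute means) then run as usual. This is a minimal numpy sketch, not the code from the repositories above. It assumes at least k distinct points and keeps the old centre if a cluster empties; the function names are hypothetical.

```python
import numpy as np

def kmeans_pp_init(X, k, rng):
    centers = [X[rng.integers(len(X))]]
    for _ in range(k - 1):
        # squared distance from every point to its nearest already-chosen centre
        d2 = ((X[:, None, :] - np.array(centers)[None]) ** 2).sum(-1).min(axis=1)
        centers.append(X[rng.choice(len(X), p=d2 / d2.sum())])
    return np.array(centers)

def kmeans(X, k, iters=100, seed=0):
    rng = np.random.default_rng(seed)
    centers = kmeans_pp_init(X, k, rng)
    for _ in range(iters):
        labels = ((X[:, None, :] - centers[None]) ** 2).sum(-1).argmin(axis=1)
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):       # converged: assignments no longer move the centres
            break
        centers = new
    return centers, labels
```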

7. KNN (k-nearest neighbors) algorithm

https://coolshell.cn/articles/8052.html

https://www.cnblogs.com/ybjourney/p/4702562.html
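KNN has no training step. To classify a point, it finds the k closest training samples and takes a majority vote. Below is a minimal sketch with Euclidean distance and brute-force search. It assumes the labels are stored in a numpy array, and the function name is hypothetical.

```python
from collections import Counter

import numpy as np

def knn_predict(X_train, y_train, X_test, k=3):
    preds = []
    for x in X_test:
        d = np.sqrt(((X_train - x) ** 2).sum(axis=1))   # Euclidean distance to every sample
        nearest = np.argsort(d)[:k]                     # indices of the k closest samples
        preds.append(Counter(y_train[nearest]).most_common(1)[0][0])
    return np.array(preds)
```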

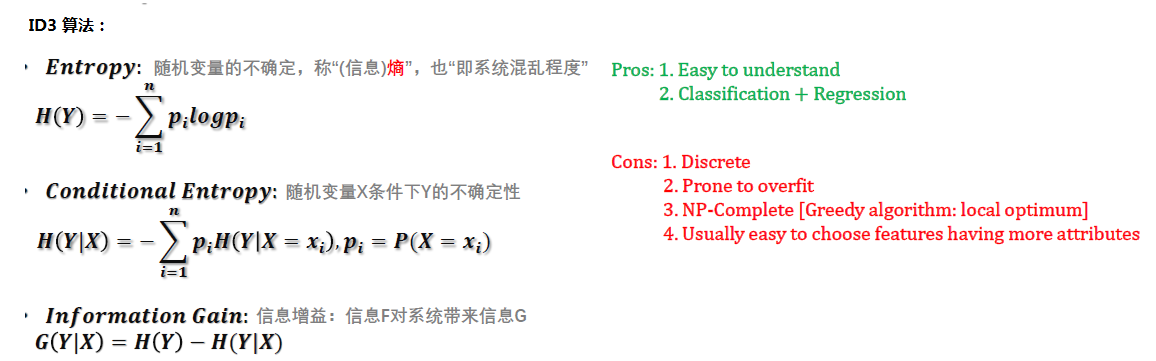

8. Decision tree

https://www.csuldw.com/2015/05/08/2015-05-08-decision%20tree/

https://lotabout.me/2018/decision-tree/

https://blog.csdn.net/xbinworld/article/details/44660339

Information gain:

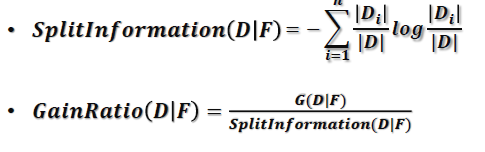

Information gain ratio:

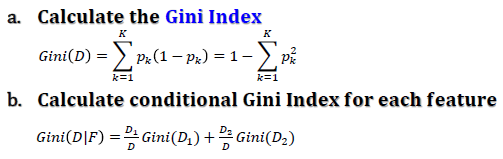

Gini index:

ID3 (information gain) and C4.5 (information gain ratio): https://zhuanlan.zhihu.com/p/26760551?utm_source=wechat_session&utm_medium=social&utm_oi=71873182302208

Decision trees with sklearn: https://www.v2ex.com/amp/t/544062
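The three split criteria can be written in a few lines. They are entropy `Ent(D) = -Σ p_k log2 p_k`, information gain `Ent(D) - Σ_v |D_v|/|D| · Ent(D_v)` (ID3), gain ratio `Gain / IV(a)`, where `IV(a)` is the entropy of the attribute's own values (C4.5), and Gini index `1 - Σ p_k²` (CART). This is a minimal pure-Python sketch for categorical attributes; the function names are hypothetical.

```python
import math
from collections import Counter

def entropy(labels):
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())

def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def info_gain(labels, feature):
    # Entropy before the split minus the size-weighted entropy of each branch
    n = len(labels)
    cond = 0.0
    for v in set(feature):
        subset = [y for y, f in zip(labels, feature) if f == v]
        cond += len(subset) / n * entropy(subset)
    return entropy(labels) - cond

def gain_ratio(labels, feature):
    iv = entropy(feature)          # intrinsic value IV(a): penalises many-valued attributes
    return info_gain(labels, feature) / iv if iv > 0 else 0.0
```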

9. Other algorithms

AdaBoost:

https://www.cnblogs.com/pinard/p/6133937.html

https://blog.csdn.net/guyuealian/article/details/70995333
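Discrete AdaBoost repeatedly fits a weak learner to weighted data. It gives the learner a vote `α = ½·ln((1 - err)/err)` and multiplies each sample weight by `exp(-α·y·h(x))`, which raises the weight of misclassified samples. Below is a minimal numpy sketch with decision stumps as weak learners and labels in {-1, +1}. The error clamp and the function names are illustrative assumptions.

```python
import numpy as np

def stump_predict(X, j, thr, pol):
    return np.where(pol * (X[:, j] - thr) >= 0, 1, -1)

def fit_stump(X, y, w):
    # Exhaustive search over feature, threshold and polarity for the lowest weighted error
    best = (np.inf, 0, 0.0, 1)
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j]):
            for pol in (1, -1):
                err = w[stump_predict(X, j, thr, pol) != y].sum()
                if err < best[0]:
                    best = (err, j, thr, pol)
    return best

def adaboost(X, y, rounds=10):
    w = np.full(len(y), 1.0 / len(y))
    model = []
    for _ in range(rounds):
        err, j, thr, pol = fit_stump(X, y, w)
        err = max(err, 1e-10)                   # avoid division by zero for a perfect stump
        alpha = 0.5 * np.log((1 - err) / err)
        w *= np.exp(-alpha * y * stump_predict(X, j, thr, pol))   # up-weight mistakes
        w /= w.sum()
        model.append((alpha, j, thr, pol))
    return model

def adaboost_predict(model, X):
    return np.sign(sum(a * stump_predict(X, j, thr, pol) for a, j, thr, pol in model))
```

No single stump can separate the labels `[1, 1, -1, -1, 1, 1]` on x = 1..6, but three boosted stumps can.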

LDA (Latent Dirichlet Allocation): https://github.com/endymecy/spark-ml-source-analysis/blob/master/%E8%81%9A%E7%B1%BB/LDA/lda.md

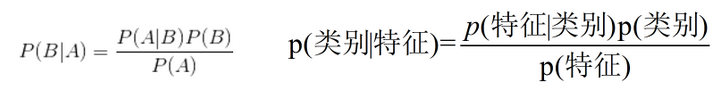

Naive Bayes: https://www.cnblogs.com/leoo2sk/archive/2010/09/17/naive-bayesian-classifier.html

https://zhuanlan.zhihu.com/p/26262151
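Naive Bayes assumes features are conditionally independent given the class. It therefore picks the class maximising `log P(c) + Σ_j log P(x_j | c)`. Laplace smoothing keeps unseen values from zeroing out the product. This minimal pure-Python sketch handles categorical features. The model tuple layout, `alpha=1.0` and the function names are illustrative.

```python
import math
from collections import Counter, defaultdict

def fit_nb(X, y, alpha=1.0):
    classes = Counter(y)
    counts = defaultdict(Counter)    # (class, feature index) -> counts of each value
    values = defaultdict(set)        # feature index -> set of values seen in training
    for xi, yi in zip(X, y):
        for j, v in enumerate(xi):
            counts[(yi, j)][v] += 1
            values[j].add(v)
    return classes, counts, values, alpha, len(y)

def predict_nb(model, x):
    classes, counts, values, alpha, n = model
    best, best_lp = None, -math.inf
    for c, nc in classes.items():
        lp = math.log(nc / n)        # log prior
        for j, v in enumerate(x):
            # Laplace-smoothed log P(x_j = v | c)
            lp += math.log((counts[(c, j)][v] + alpha) / (nc + alpha * len(values[j])))
        if lp > best_lp:
            best, best_lp = c, lp
    return best
```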

Deep learning:

1. Overfitting and underfitting

https://zh.d2l.ai/chapter_deep-learning-basics/underfit-overfit.html

https://zhuanlan.zhihu.com/p/29707029
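Polynomial fitting is the classic way to see under- and overfitting. A low degree cannot follow the curve, while a degree high enough to pass through every training point also fits the noise. The minimal numpy sketch below returns train and test MSE for one degree. The ground-truth function `sin(πx)`, the noise level and the sample sizes are all illustrative assumptions.

```python
import numpy as np

def poly_errors(degree, n_train=10, n_test=100, noise=0.1, seed=0):
    """Train/test MSE of a least-squares polynomial fit to noisy samples of sin(pi x)."""
    rng = np.random.default_rng(seed)   # same seed, so every degree sees the same data

    def f(x):
        return np.sin(np.pi * x)

    x_tr = np.linspace(-1, 1, n_train)
    y_tr = f(x_tr) + noise * rng.standard_normal(n_train)
    x_te = rng.uniform(-1, 1, n_test)
    y_te = f(x_te) + noise * rng.standard_normal(n_test)
    coef = np.polyfit(x_tr, y_tr, degree)

    def mse(x, y):
        return np.mean((np.polyval(coef, x) - y) ** 2)

    return mse(x_tr, y_tr), mse(x_te, y_te)

for deg in (1, 3, 9):
    print(deg, poly_errors(deg))
```

Training error can only go down as the degree rises (degree 9 interpolates the 10 points). Comparing the second number shows whether the model generalises.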

2. Bias and variance (high bias and high variance)

https://www.jianshu.com/p/a585d5506b1e

https://www.cnblogs.com/hutao722/p/9921788.html

http://nanshu.wang/post/2015-05-17/

http://www.voidcn.com/article/p-tqoebcaa-dq.html

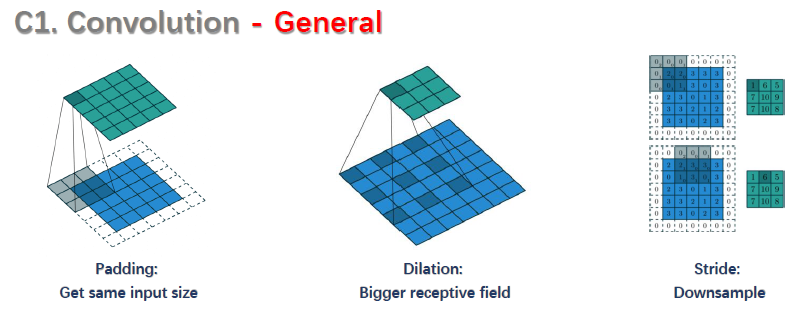

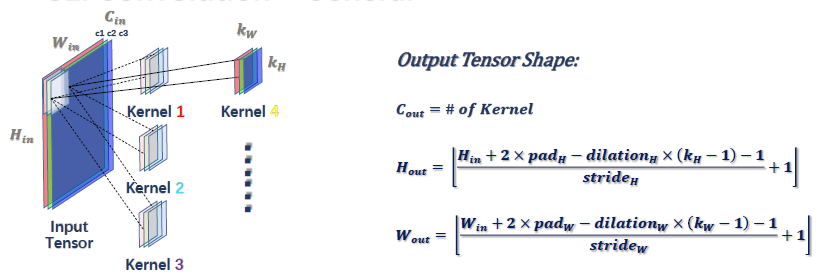

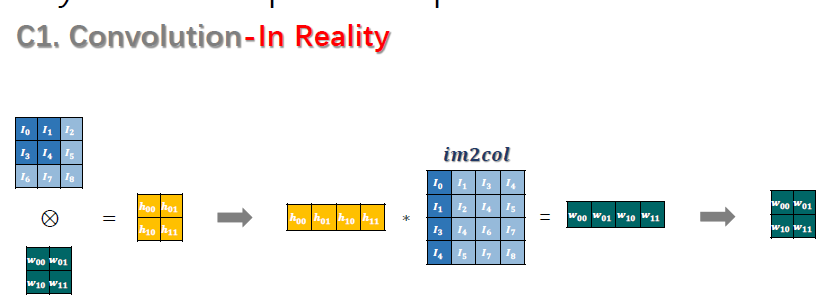

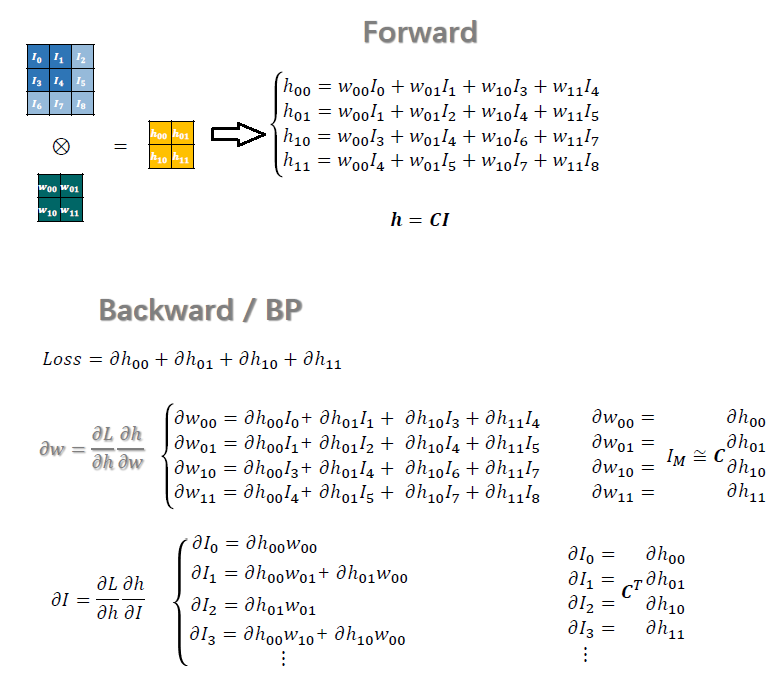
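For squared error, the expected test error at a point splits into bias, variance and irreducible noise. This assumes `y = f(x) + ε` with `E[ε] = 0`, `Var(ε) = σ²`, and ε independent of the training set `D` on which the model `\hat f_D` is fitted:

```latex
\mathbb{E}_{D,\varepsilon}\!\left[\big(y - \hat f_D(x)\big)^2\right]
= \underbrace{\big(\mathbb{E}_D[\hat f_D(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}_D\!\left[\big(\hat f_D(x) - \mathbb{E}_D[\hat f_D(x)]\big)^2\right]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{noise}}
```

High bias corresponds to underfitting (the average model is far from `f`), and high variance to overfitting (the model changes a lot with the training set).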

3. Convolution

Deconvolution (Deconv / Transposed Convolution / Fractionally strided conv):

https://www.zhihu.com/question/48279880?sort=created

https://www.zhihu.com/question/48279880/answer/838063090
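A transposed convolution scatters each input value, multiplied by the kernel, into the output at stride s, so its output size is `(H - 1)·s + k` (no padding). It is the adjoint of the strided convolution: `<conv(x), y> = <x, conv_transpose(y)>`. That relation is why it is also called the "backward" of convolution. The minimal single-channel numpy sketch below shows this. It assumes no padding and uses "convolution" in the deep-learning sense (cross-correlation); the function names are hypothetical.

```python
import numpy as np

def conv2d(x, k, stride=1):
    # 'valid' cross-correlation, which is what deep-learning frameworks call convolution
    kh, kw = k.shape
    oh = (x.shape[0] - kh) // stride + 1
    ow = (x.shape[1] - kw) // stride + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(x[i * stride:i * stride + kh, j * stride:j * stride + kw] * k)
    return out

def conv_transpose2d(y, k, stride=1):
    # Each input value adds a scaled copy of the kernel to the output; overlaps are summed
    kh, kw = k.shape
    out = np.zeros(((y.shape[0] - 1) * stride + kh, (y.shape[1] - 1) * stride + kw))
    for i in range(y.shape[0]):
        for j in range(y.shape[1]):
            out[i * stride:i * stride + kh, j * stride:j * stride + kw] += y[i, j] * k
    return out
```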

4. Vanishing and exploding gradients

https://blog.csdn.net/qq_25737169/article/details/78847691

https://codertw.com/%E7%A8%8B%E5%BC%8F%E8%AA%9E%E8%A8%80/583004/

https://zhuanlan.zhihu.com/p/51490163
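By the chain rule, the gradient reaching the input is a product of one factor `diag(f'(z))·W` per layer. With sigmoid (`f' ≤ 0.25`) the product usually shrinks exponentially with depth. With large weights and no squashing, it grows exponentially. The minimal numpy sketch below measures the input-output Jacobian norm of a random fully connected stack. The width, weight scale and Gaussian initialisation are illustrative assumptions, and the function name is hypothetical.

```python
import numpy as np

def input_jacobian_norm(depth, act="sigmoid", width=64, weight_scale=1.0, seed=0):
    """Frobenius norm of d(output)/d(input) for a random fully connected network."""
    rng = np.random.default_rng(seed)
    h = rng.standard_normal(width)
    jac = np.eye(width)
    for _ in range(depth):
        W = rng.standard_normal((width, width)) * weight_scale / np.sqrt(width)
        z = W @ h
        if act == "sigmoid":
            h = 1.0 / (1.0 + np.exp(-z))
            d = h * (1.0 - h)              # sigmoid'(z) is at most 0.25
        else:                              # identity activation
            h = z
            d = np.ones_like(z)
        jac = (d[:, None] * W) @ jac       # chain rule: diag(f'(z)) @ W @ previous Jacobian
    return np.linalg.norm(jac)
```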

5. Backward pass (backpropagation)

https://juejin.im/entry/5ac056dc6fb9a028de44d620

https://tigerneil.gitbooks.io/neural-networks-and-deep-learning-zh/content/chapter2.html

https://github.com/INTERMT/BP-Algorithm

https://jdhao.github.io/2016/01/19/back-propagation-in-mlp-explained/
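Backpropagation runs the forward pass, then applies the chain rule layer by layer from the loss back to the inputs, reusing the cached activations. Below is a minimal numpy sketch for a one-hidden-layer network (tanh hidden layer, sigmoid output, binary cross-entropy), trained on XOR with gradient descent. The architecture, learning rate and initialisation are illustrative assumptions. A finite-difference gradient check is the usual way to validate such code.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward_backward(params, X, y):
    """Loss and gradients for tanh hidden layer + sigmoid output + binary cross-entropy."""
    W1, b1, W2, b2 = params
    a1 = np.tanh(X @ W1 + b1)                       # forward pass
    p = sigmoid(a1 @ W2 + b2)
    loss = -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))
    dz2 = (p - y) / X.shape[0]                      # dL/dz2 for sigmoid + cross-entropy
    dW2, db2 = a1.T @ dz2, dz2.sum(axis=0)
    dz1 = (dz2 @ W2.T) * (1 - a1 ** 2)              # tanh'(z) = 1 - tanh(z)^2
    dW1, db1 = X.T @ dz1, dz1.sum(axis=0)
    return loss, [dW1, db1, dW2, db2]

def train_xor(hidden=4, lr=0.5, epochs=2000, seed=0):
    rng = np.random.default_rng(seed)
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)
    params = [rng.standard_normal((2, hidden)), np.zeros(hidden),
              rng.standard_normal((hidden, 1)), np.zeros(1)]
    losses = []
    for _ in range(epochs):
        loss, grads = forward_backward(params, X, y)
        losses.append(loss)
        for p, g in zip(params, grads):
            p -= lr * g                              # in-place gradient-descent update
    return params, losses
```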

Image segmentation models:

1. FCN

https://zhuanlan.zhihu.com/p/62839949

https://zh.gluon.ai/chapter_computer-vision/fcn.html

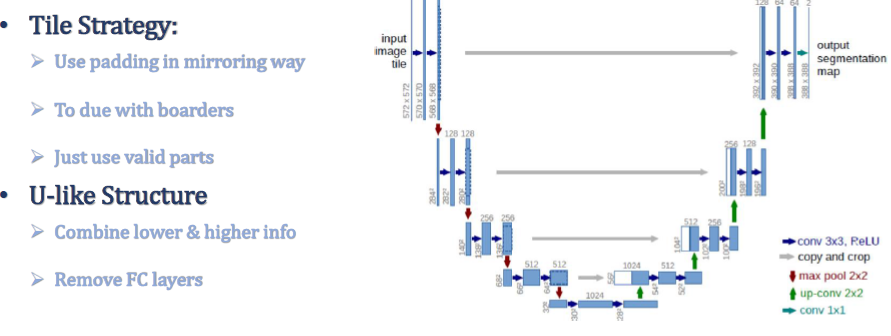
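FCN recovers full resolution with transposed convolutions whose weights are initialised to bilinear interpolation. Below is a minimal single-channel numpy sketch of such a kernel and a 2x upsample (kernel 4, stride 2, padding 1). The formula is the standard bilinear-filter construction; the function names are hypothetical, and a real layer would apply it per channel.

```python
import numpy as np

def bilinear_kernel(size):
    # size x size weights that make a transposed convolution perform bilinear interpolation
    factor = (size + 1) // 2
    center = factor - 1 if size % 2 == 1 else factor - 0.5
    og = np.ogrid[:size, :size]
    return (1 - np.abs(og[0] - center) / factor) * (1 - np.abs(og[1] - center) / factor)

def upsample2x(x):
    # Transposed convolution, kernel 4, stride 2, padding 1: output is exactly 2x the input
    k = bilinear_kernel(4)
    h, w = x.shape
    full = np.zeros(((h - 1) * 2 + 4, (w - 1) * 2 + 4))
    for i in range(h):
        for j in range(w):
            full[2 * i:2 * i + 4, 2 * j:2 * j + 4] += x[i, j] * k
    return full[1:-1, 1:-1]               # drop padding=1 on every side
```

Away from the border, a constant input stays constant after upsampling, as bilinear interpolation should.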

2. U-Net (E-Net)

https://blog.csdn.net/u012931582/article/details/70215756

https://juejin.im/post/5d63eb7bf265da03e05b2065

https://zhuanlan.zhihu.com/p/31428783

https://zhuanlan.zhihu.com/p/57530767

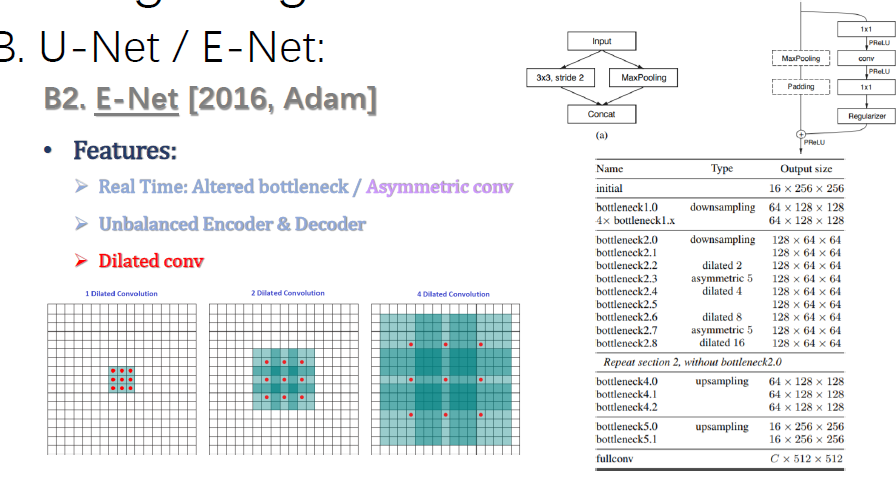
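The original U-Net uses unpadded 3x3 convolutions, so each encoder feature map is larger than the decoder map it joins and must be centre-cropped before concatenation. This is why a 572x572 input tile gives a 388x388 output. The minimal sketch below reproduces that size arithmetic and the crop. It assumes the paper's 4 pooling levels, and the function names are hypothetical.

```python
import numpy as np

def unet_sizes(size, depth=4):
    """Spatial sizes in the unpadded U-Net; returns output size and (skip, cropped) pairs."""
    skips = []
    for _ in range(depth):
        size -= 4                      # two 3x3 convolutions without padding
        skips.append(size)
        assert size % 2 == 0, "input tile must give even sizes before every 2x2 max-pool"
        size //= 2                     # 2x2 max-pool, stride 2
    size -= 4                          # two convolutions at the bottom of the "U"
    crops = []
    for skip in reversed(skips):
        size *= 2                      # 2x2 up-convolution doubles the size
        crops.append((skip, size))     # encoder map is centre-cropped from skip to size
        size -= 4                      # two more 3x3 convolutions after concatenation
    return size, crops

def center_crop(feat, size):
    # Crop the last two (spatial) axes of a (..., H, W) array to size x size around the centre
    h, w = feat.shape[-2:]
    top, left = (h - size) // 2, (w - size) // 2
    return feat[..., top:top + size, left:left + size]
```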

3. E-Net

https://zhuanlan.zhihu.com/p/39430439

http://hellodfan.com/2018/01/02/%E8%AF%AD%E4%B9%89%E5%88%86%E5%89%B2%E8%AE%BA%E6%96%87-ENet/

https://zhuanlan.zhihu.com/p/31379024

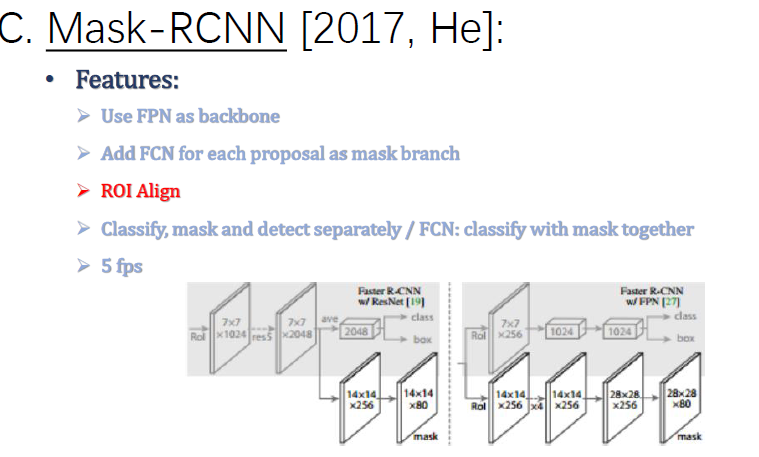

4. Mask R-CNN

https://zhuanlan.zhihu.com/p/37998710

https://zhuanlan.zhihu.com/p/40538057
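A key change in Mask R-CNN is RoIAlign. Instead of rounding the RoI and its bins to integer cells (as RoIPool does), it samples a few regularly spaced points per bin with bilinear interpolation and averages them. This minimal single-channel numpy sketch assumes feature values sit at integer coordinates and uses 2x2 samples per bin. It is not Detectron's implementation (which also handles the half-pixel offset and sampling ratio options), and the function names are hypothetical.

```python
import numpy as np

def bilinear(feat, y, x):
    # Bilinear interpolation of a 2-D map at a real-valued (y, x), clamped to the map
    h, w = feat.shape
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    return (feat[y0, x0] * (1 - dy) * (1 - dx) + feat[y0, x1] * (1 - dy) * dx
            + feat[y1, x0] * dy * (1 - dx) + feat[y1, x1] * dy * dx)

def roi_align(feat, box, out_size=2, samples=2):
    # box = (y1, x1, y2, x2) in feature-map coordinates; nothing is rounded to integers
    y1, x1, y2, x2 = box
    bin_h, bin_w = (y2 - y1) / out_size, (x2 - x1) / out_size
    out = np.zeros((out_size, out_size))
    for i in range(out_size):
        for j in range(out_size):
            # average samples x samples regularly spaced points inside bin (i, j)
            vals = [bilinear(feat,
                             y1 + (i + (a + 0.5) / samples) * bin_h,
                             x1 + (j + (b + 0.5) / samples) * bin_w)
                    for a in range(samples) for b in range(samples)]
            out[i, j] = np.mean(vals)
    return out
```

On a linear ramp, bilinear interpolation is exact, so each output bin equals the ramp's value at the bin centre.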

Image Style Transfer:

Perceptual Loss: Perceptual Losses for Real-Time Style Transfer and Super-Resolution

Feature mimicking: Mimicking Very Efficient Network for Object Detection

Model distillation: Distilling the Knowledge in a Neural Network
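Two of the losses named above are short enough to sketch in numpy. The first is the Gram-matrix style loss used with perceptual losses, `G = F·Fᵀ / (C·H·W)` on one layer's activations. The second is Hinton-style distillation: KL divergence between temperature-softened teacher and student outputs, scaled by T², mixed with ordinary cross-entropy on the hard labels. In a real setup the features would come from a pretrained network. `T = 4` and `alpha = 0.5` are illustrative defaults, not values from the papers, and the function names are hypothetical.

```python
import numpy as np

def softmax(z, T=1.0):
    z = z / T
    z = z - z.max(axis=-1, keepdims=True)   # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def gram_matrix(features):
    # features: (C, H, W) activations of one layer -> (C, C) normalised Gram matrix
    c, h, w = features.shape
    f = features.reshape(c, h * w)
    return f @ f.T / (c * h * w)

def style_loss(feat_generated, feat_style):
    # Squared Frobenius distance between the two Gram matrices
    return np.sum((gram_matrix(feat_generated) - gram_matrix(feat_style)) ** 2)

def distillation_loss(student_logits, teacher_logits, labels, T=4.0, alpha=0.5):
    p_t = softmax(teacher_logits, T)
    log_p_s = np.log(softmax(student_logits, T))
    # KL(teacher || student) on softened outputs, scaled by T^2 to keep gradient magnitudes
    soft = np.mean(np.sum(p_t * (np.log(p_t) - log_p_s), axis=-1)) * T ** 2
    # ordinary cross-entropy with the hard labels at T = 1
    p_s = softmax(student_logits)
    hard = -np.mean(np.log(p_s[np.arange(len(labels)), labels]))
    return alpha * soft + (1 - alpha) * hard
```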

Image Enhancement:

Learning a Deep Single Image Contrast Enhancer from Multi-Exposure Images

A Generic Deep Architecture for Single Image Reflection Removal and Image Smoothing (reflection removal)

Deep learning frameworks

Caffe tutorials:

https://blog.csdn.net/m0_38116269/article/details/88119001