    # -*- coding: utf-8 -*-
    import json
    import os
    import random
    import re
    import time
    import urllib.request

    import requests

    id = '5644764907'  # Weibo user id to crawl. Yang Chaoyue's Weibo: https://m.weibo.cn/u/5644764907

    # Proxy IP pool
    proxy = [
        {'http': '106.14.47.5:80'},
        {'http': '61.135.217.7:80'},
        {'http': '58.53.128.83:3128'},
        {'http': '58.118.228.7:1080'},
        {'http': '221.212.117.10:808'},
        {'http': '115.159.116.98:8118'},
        {'http': '121.33.220.158:808'},
        {'http': '124.243.226.18:8888'},
        {'http': '124.235.135.87:80'},
        {'http': '14.118.135.10:808'},
        {'http': '119.176.51.135:53281'},
        {'http': '114.94.10.232:43376'},
        {'http': '218.79.86.236:54166'},
        {'http': '221.224.136.211:35101'},
        {'http': '58.56.149.198:53281'}]


    # Open a page through the given proxy and return the decoded body
    def use_proxy(url, proxy_addr):
        req = urllib.request.Request(url)
        req.add_header("User-Agent", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.221 Safari/537.36 SE 2.X MetaSr 1.0")
        handler = urllib.request.ProxyHandler(proxy_addr)
        opener = urllib.request.build_opener(handler, urllib.request.HTTPHandler)
        # call the opener directly instead of installing it globally
        return opener.open(req).read().decode('utf-8', 'ignore')


    # Fetch the user's basic information: screen name, profile URL, avatar,
    # following count, follower count, gender, rank, etc.
    def get_userInfo(id):
        url = 'https://m.weibo.cn/api/container/getIndex?type=uid&value=' + id  # profile API
        proxy_addr = random.choice(proxy)
        data = use_proxy(url, proxy_addr)
        user = json.loads(data).get('data').get('userInfo')
        profile_image_url = user.get('profile_image_url')
        description = user.get('description')
        profile_url = user.get('profile_url')
        verified = user.get('verified')
        guanzhu = user.get('follow_count')
        name = user.get('screen_name')
        fensi = user.get('followers_count')
        gender = user.get('gender')
        urank = user.get('urank')
        print("Screen name: " + name + "\n" + "Profile URL: " + profile_url + "\n"
              + "Avatar URL: " + profile_image_url + "\n" + "Verified: " + str(verified) + "\n"
              + "Description: " + description + "\n" + "Following: " + str(guanzhu) + "\n"
              + "Followers: " + str(fensi) + "\n" + "Gender: " + gender + "\n"
              + "Rank: " + str(urank) + "\n")


    # Download every picture of one post. m is the index of the next file;
    # the updated index is returned so later posts do not overwrite earlier files.
    def save_pics(pics_info, m):
        print("pic_save start")
        for pic_info in pics_info:
            pic_url = pic_info['large']['url']  # original-size image
            # pic_url = pic_info['url']  # low-resolution image
            pic_path = os.path.join(pics_dir, '%d.jpg' % m)
            try:
                # download the image
                with open(pic_path, 'wb') as f:
                    for chunk in requests.get(pic_url, stream=True).iter_content(chunk_size=8192):
                        f.write(chunk)
            except Exception:
                print(pic_path + ' save failed')
            else:
                print(pic_path + ' saved')
            m += 1
        return m


    # Get the containerid of the user's Weibo tab; it is required to crawl the posts
    def get_containerid(url, proxy_addr):
        data = use_proxy(url, proxy_addr)
        content = json.loads(data).get('data')
        for tab in content.get('tabsInfo').get('tabs'):
            if tab.get('tab_type') == 'weibo':
                return tab.get('containerid')
        return None


    # Fetch the posts and save them to text files: post text, detail page URL,
    # number of likes, comments, reposts, etc.
    def get_weibo(id, file, file_content):
        i = 1  # page number
        m = 0  # index of the next picture file
        failures = 0  # consecutive failed requests
        url = 'https://m.weibo.cn/api/container/getIndex?type=uid&value=' + id
        while failures < 10:  # stop after 10 consecutive failures instead of retrying forever
            proxy_addr = random.choice(proxy)
            try:
                # the lookup sits inside try so a dead proxy triggers a retry, not a crash
                weibo_url = url + '&containerid=' + get_containerid(url, proxy_addr) + '&page=' + str(i)
                print(url)
                print(weibo_url)
                data = use_proxy(weibo_url, proxy_addr)
                cards = json.loads(data).get('data').get('cards')
                if not cards:
                    break
                for j in range(len(cards)):
                    print("Page " + str(i) + ", post " + str(j))
                    if cards[j].get('card_type') != 9:
                        continue
                    mblog = cards[j].get('mblog')
                    attitudes_count = mblog.get('attitudes_count')
                    comments_count = mblog.get('comments_count')
                    created_at = mblog.get('created_at')
                    reposts_count = mblog.get('reposts_count')
                    scheme = cards[j].get('scheme')
                    # post text with HTML tags stripped
                    text = re.sub(r"<.*?>", "", mblog.get('text') or "")
                    with open(file_content, 'a+', encoding='utf-8') as f1:
                        f1.write(text + "\n")
                    # download pictures
                    pics_info = mblog.get('pics')
                    if pics_info:
                        print("have pics")
                        m = save_pics(pics_info, m)
                    with open(file, 'a+', encoding='utf-8') as fh:
                        fh.write("Page " + str(i) + ", post " + str(j) + "\n")
                        fh.write("Post URL: " + str(scheme) + "\n" + "Posted at: " + str(created_at) + "\n"
                                 + "Text: " + text + "\n" + "Likes: " + str(attitudes_count) + "\n"
                                 + "Comments: " + str(comments_count) + "\n"
                                 + "Reposts: " + str(reposts_count) + "\n")
                i += 1
                failures = 0
                time.sleep(random.randint(1, 3))
            except Exception as e:
                print(e)
                failures += 1


    if __name__ == "__main__":
        print('Start---')
        pics_dir = r"D:software_studymy_jupyter_notebookscrawlpics_origin"  # adjust to your local directory
        os.makedirs(pics_dir, exist_ok=True)
        file_all = "ycy_all.txt"
        file_content = "ycy_content.txt"
        get_userInfo(id)
        get_weibo(id, file_all, file_content)
        print('Done---')
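
The two parsing steps in the script above (finding the `containerid` of the `weibo` tab, and stripping HTML tags from a post) can be tried without touching the network. The sketch below is only an illustration: `SAMPLE_INDEX` is a hand-written, hypothetical payload containing just the keys the crawler reads, the `containerid` values are made up, and `find_containerid` and `strip_tags` are helper names introduced here, not part of the original script.

```python
import json
import re

# Hypothetical payload: only the keys the crawler reads are present,
# and the containerid values are invented for illustration.
SAMPLE_INDEX = json.dumps({
    "data": {
        "tabsInfo": {
            "tabs": [
                {"tab_type": "profile", "containerid": "230283_demo"},
                {"tab_type": "weibo", "containerid": "107603_demo"},
            ]
        }
    }
})


def find_containerid(raw_json):
    """Return the containerid of the 'weibo' tab, or None if it is absent."""
    data = json.loads(raw_json).get("data") or {}
    tabs = (data.get("tabsInfo") or {}).get("tabs") or []
    for tab in tabs:
        if tab.get("tab_type") == "weibo":
            return tab.get("containerid")
    return None


def strip_tags(html_text):
    """Remove HTML tags with the same regex the crawler uses, tolerating None."""
    return re.sub(r"<.*?>", "", html_text or "")


print(find_containerid(SAMPLE_INDEX))
print(strip_tags('Hello <a href="/n/demo">@demo</a><br />world'))
```

Returning `None` for a missing tab, rather than raising, lets the caller decide whether to retry with another proxy or stop.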

Fetching proxies:

    import random
    import re
    import threading
    import time
    import urllib.request

    # Scrape proxy IPs
    ip_totle = []  # matched table cells from all pages
    for page in range(1, 11):
        url = 'https://www.xicidaili.com/nn/' + str(page)
        headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64)"}
        request = urllib.request.Request(url=url, headers=headers)
        response = urllib.request.urlopen(request)
        content = response.read().decode('utf-8')
        print('get page', page)
        pattern = re.compile(r'<td>(\d.*?)</td>')  # text between <td> and </td> whose first character is a digit
        ip_page = re.findall(pattern, str(content))
        ip_totle.extend(ip_page)
        time.sleep(random.choice(range(1, 10)))

    # number of cells scraped
    print(len(ip_totle))

    # Save the scraped IPs (four cells per table row)
    with open(r"D:software_studymy_jupyter_notebookscrawlproxy.txt", 'a+') as f:
        for i in range(0, len(ip_totle) - 3, 4):
            f.write(str(ip_totle[i]) + ":" + str(ip_totle[i+1]) + " " + str(ip_totle[i+2]) + " " + str(ip_totle[i+3]) + "\n")

    # Convert to the proxy dict format
    proxys = []
    for i in range(0, len(ip_totle) - 3, 4):
        proxy_host = ip_totle[i] + ':' + ip_totle[i+1]
        proxy_temp = {"http": proxy_host}
        proxys.append(proxy_temp)


    def test(i):
        """Test whether proxy i can fetch the target page."""
        url = "http://quote.stockstar.com/stock"  # page we intend to crawl
        try:
            proxy_support = urllib.request.ProxyHandler(proxys[i])
            opener = urllib.request.build_opener(proxy_support)
            opener.addheaders = [("User-Agent", "Mozilla/5.0 (Windows NT 10.0; WOW64)")]
            # use this thread's own opener with a 5-second timeout;
            # install_opener() is global and would race between threads
            opener.open(url, timeout=5).read()
            with lock:  # acquire the lock, release it on exit
                print(proxys[i], 'is OK')
                f.write('%s\n' % str(proxys[i]))  # record the working proxy
        except Exception as e:
            with lock:
                print(proxys[i], e)


    with open('proxy_ip_11_night.txt', 'a+') as f:
        # a lock keeps printing and file writes thread-safe
        lock = threading.Lock()
        print("test ip start")

        # single-threaded check
        # for i in range(len(proxys)):
        #     test(i)

        # multi-threaded check
        threads = []
        for i in range(len(proxys)):
            thread = threading.Thread(target=test, args=[i])
            threads.append(thread)
            thread.start()
        # block the main thread until all worker threads finish
        for thread in threads:
            thread.join()
        print("test ip finished")
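
The proxy script relies on each table row contributing exactly four cells that start with a digit (IP, port, and two time columns), which are then grouped in fours. A minimal offline sketch of that grouping follows. The rows in `SAMPLE_HTML` are hypothetical and use reserved documentation addresses, the real page layout may differ, and `parse_proxies` is a helper name introduced here for illustration.

```python
import re

# Hypothetical rows shaped like the cells the script expects;
# the addresses come from the reserved documentation ranges.
SAMPLE_HTML = """
<tr><td>192.0.2.10</td><td>8080</td><td>HTTP</td><td>12 days</td><td>19-01-11 20:00</td></tr>
<tr><td>198.51.100.7</td><td>3128</td><td>HTTP</td><td>3 hours</td><td>19-01-11 21:30</td></tr>
"""

# Same idea as the script: keep only cells whose first character is a digit
CELL = re.compile(r"<td>(\d.*?)</td>")


def parse_proxies(html):
    """Group digit-leading cells in fours and build {'http': 'ip:port'} dicts."""
    cells = CELL.findall(html)
    proxies = []
    # stop 3 short of the end so an incomplete trailing row is skipped
    for i in range(0, len(cells) - 3, 4):
        host, port = cells[i], cells[i + 1]
        proxies.append({"http": host + ":" + port})
    return proxies


for entry in parse_proxies(SAMPLE_HTML):
    print(entry)
```

Stopping the loop three cells before the end avoids an `IndexError` when a page is cut off mid-row.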

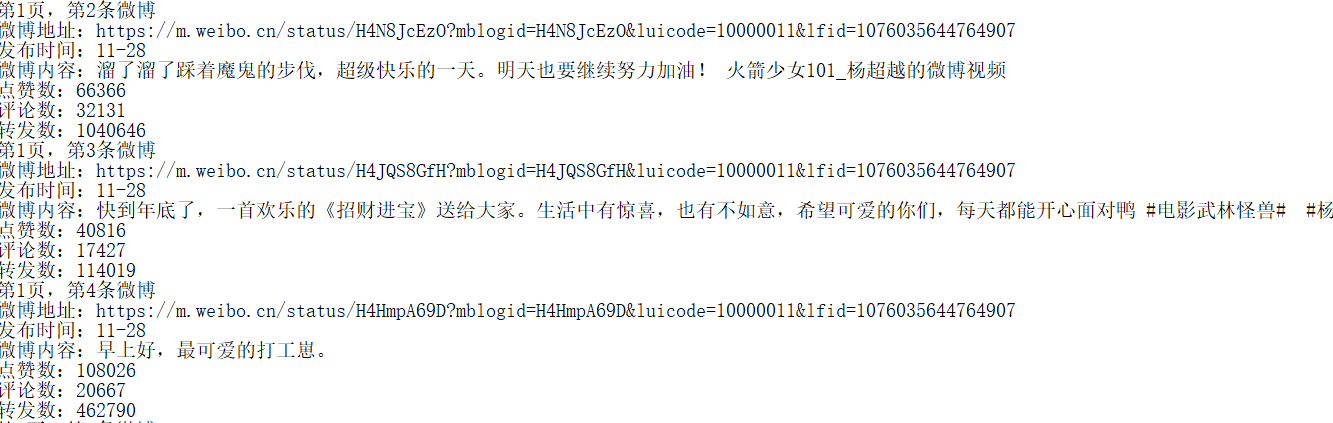

Results:

Weibo posts:

Weibo images:

GO! Charge ahead!!! Surpass everything