1. Base environment plan:

| Hostname | IP address | Role |
|---|---|---|
| k8s-node01 | 192.168.1.154 | node1 |
| k8s-node02 | 192.168.1.155 | node2 |
| k8s-master01 | 192.168.1.151 | master1 |
| k8s-master02 | 192.168.1.152 | master2 |
| k8s-master03 | 192.168.1.153 | master3 |
| k8s-master-lb (VIP) | 192.168.1.160 | virtual IP |

Configuration details:

| Item | Value |
|---|---|
| OS version | CentOS Linux release 7.6.1810 |
| Docker version | 20.10 |
| Pod subnet | 172.16.0.0/12 |
| Service subnet | 10.96.0.0/12 |

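Note: on a public cloud, the VIP is the address of the provider's load balancer, for example an Alibaba Cloud internal SLB address or a Tencent Cloud internal ELB address.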

# Optional cosmetic shell tweaks:

# Prompt customization:

echo "export PS1='\[\033[01;31m\]\u\[\033[00m\]@\[\033[01;32m\]\h\[\033[00m\][\[\033[01;33m\]\t\[\033[00m\]]:\[\033[01;34m\]\w\[\033[00m\]$ '" >>/etc/profile

source /etc/profile

# Add timestamps to shell history:

export HISTTIMEFORMAT='%F %T '

echo "export HISTTIMEFORMAT='%F %T '" >>/etc/profile

source /etc/profile
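Appending with `>>` is not idempotent: running the lines above twice (for example when the setup is re-run) adds duplicate entries to /etc/profile. A minimal sketch of an idempotent append, using a hypothetical helper named `append_once` that is not part of the original guide:

```shell
#!/usr/bin/env bash
# append_once FILE LINE: append LINE to FILE only if an identical line is not already present.
# Hypothetical helper; grep -qxF matches the whole line literally (no regex interpretation).
append_once() {
  local file=$1 line=$2
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Example (as root):
# append_once /etc/profile "export HISTTIMEFORMAT='%F %T '"
```

The same helper works for any single-line entry, such as the limits lines later in this guide.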

2. Preparation

2.1 Configure hosts on all nodes

# Set the hostname on each node:

hostnamectl set-hostname [hostname]

For example:

hostnamectl set-hostname k8s-master01

# Configure hosts on all nodes; /etc/hosts should contain:

[root@k8s-master01 ~]# cat /etc/hosts

192.168.1.151 k8s-master01

192.168.1.152 k8s-master02

192.168.1.153 k8s-master03

192.168.1.160 k8s-master-lb # if this is not an HA cluster, use master01's IP here

192.168.1.154 k8s-node01

192.168.1.155 k8s-node02

# Or write the whole file in one step:

cat <<EOF > /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.151 k8s-master01

192.168.1.152 k8s-master02

192.168.1.153 k8s-master03

192.168.1.160 k8s-master-lb

192.168.1.154 k8s-node01

192.168.1.155 k8s-node02

EOF
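Before continuing, it can help to confirm that every name used later in this guide is present in /etc/hosts. The sketch below uses a hypothetical function `missing_hosts`; it only parses the given file (comments are ignored) and does not query DNS:

```shell
#!/usr/bin/env bash
# missing_hosts FILE NAME...: print each NAME that does not appear as a hostname in FILE.
# Field 1 of each line is the IP; the remaining fields are names. Comments are stripped first.
missing_hosts() {
  local file=$1; shift
  local name
  for name in "$@"; do
    awk -v n="$name" '
      { sub(/#.*/, "") }                                   # drop comments
      { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }                                  # exit 0 only if the name was seen
    ' "$file" || echo "$name"
  done
}

# Example:
# missing_hosts /etc/hosts k8s-master01 k8s-master02 k8s-master03 k8s-master-lb k8s-node01 k8s-node02
```

Empty output means all names are present.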


2.2 Configure package repositories on all nodes [CentOS 7]

mv /etc/yum.repos.d/* /root

cd /etc/yum.repos.d/

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

yum clean all

yum install -y epel-release

# Install the required tools:

yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git -y

2.3 System tuning on all nodes

# Disable firewalld, dnsmasq, NetworkManager, SELinux and swap on all nodes:

systemctl disable --now firewalld

systemctl disable --now dnsmasq

systemctl disable --now NetworkManager

setenforce 0

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/sysconfig/selinux

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

# Turn off swap

swapoff -a && sysctl -w vm.swappiness=0

sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab

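# Install ntpdate

rpm -ivh http://mirrors.wlnmp.com/centos/wlnmp-release-centos.noarch.rpm

yum install -y ntpdate

# Add a cron job that syncs the time every 5 minutes (cron's default PATH does not include /usr/sbin, so use the full path)

echo '*/5 * * * * /usr/sbin/ntpdate cn.pool.ntp.org' >>/var/spool/cron/root

systemctl restart crond

ntpdate time2.aliyun.com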

# Set the time zone and sync the time on all nodes:

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' >/etc/timezone

ntpdate time2.aliyun.com

# Crontab entry (add it with crontab -e):

*/5 * * * * /usr/sbin/ntpdate time2.aliyun.com

# Raise resource limits

ulimit -SHn 65535

cat <<EOF >> /etc/security/limits.conf

* soft nofile 655360

* hard nofile 655360

* soft nproc 655350

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited

EOF
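A soft limit is not allowed to be higher than the matching hard limit, so it is worth checking limits.conf after editing it. A minimal awk-based sketch with the hypothetical function name `check_limits`; it compares soft and hard entries that share the same domain and item, and treats `unlimited` as larger than any number:

```shell
#!/usr/bin/env bash
# check_limits FILE: report limits.conf items whose soft value exceeds the hard value
# for the same domain and item. "unlimited"/"infinity" are stored as -1 internally.
check_limits() {
  awk '
    /^[ \t]*#/ || NF < 4 { next }                     # skip comments and short lines
    {
      key = $1 " " $3
      val = ($4 == "unlimited" || $4 == "infinity") ? -1 : $4 + 0
      if ($2 == "soft") soft[key] = val
      else if ($2 == "hard") hard[key] = val
    }
    END {
      for (k in soft) {
        if (!(k in hard)) continue
        s = soft[k]; h = hard[k]
        # soft unlimited with a finite hard limit, or a larger finite soft value, is invalid
        if ((s == -1 && h != -1) || (s != -1 && h != -1 && s > h))
          print k ": soft " (s == -1 ? "unlimited" : s) " > hard " h
      }
    }
  ' "$1"
}

# Example:
# check_limits /etc/security/limits.conf
```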

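2.4 Configure passwordless SSH [run on the control machine]

# Master01 logs in to the other nodes without a password. During installation, configuration files and certificates are generated on master01, and the cluster is also managed from master01; on Alibaba Cloud or AWS a separate kubectl server is required. Set up the keys as follows: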

cd /root

ssh-keygen -t rsa

for i in k8s-master01 k8s-master02 k8s-master03 k8s-node01 k8s-node02;do ssh-copy-id -i .ssh/id_rsa.pub $i;done

2.5 Upgrade the kernel [run on the control machine]

# Download all the installation files

cd /root/

git clone https://github.com/dotbalo/k8s-ha-install.git

# If the download fails, retry with this mirror:

git clone https://gitee.com/dukuan/k8s-ha-install.git

# Download the kernel on master01 and cache it [upgrade all machines]

# CentOS 7 requires a system update; on CentOS 8 it is optional

yum update -y --exclude=kernel* && reboot

# Kernel setup

# CentOS 7 needs a 4.18+ kernel; 4.19 is used here

cd /root

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm

# Copy the packages from master01 to the other nodes:

for i in k8s-master02 k8s-master03 k8s-node01 k8s-node02;do scp kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm $i:/root/ ; done

# Install the kernel on all nodes

cd /root && yum localinstall -y kernel-ml*

# Change the default boot kernel on all nodes

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

# Check that the default kernel is 4.19

root@k8s-master01[17:05:33]:~$ grubby --default-kernel

/boot/vmlinuz-4.19.12-1.el7.elrepo.x86_64

# Reboot all nodes, then check again that the kernel is 4.19 (uname -r shows the kernel actually running)

root@k8s-master01[17:09:20]:~$ grubby --default-kernel

/boot/vmlinuz-4.19.12-1.el7.elrepo.x86_64

# If so, the kernel is configured correctly
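The 4.18+ requirement can also be checked from a script. This sketch assumes GNU `sort -V` (available on CentOS 7) for version ordering; `kernel_at_least` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# kernel_at_least MIN [RELEASE]: succeed if RELEASE (default: uname -r) is >= MIN.
# The distribution suffix ("-1.el7.elrepo.x86_64") is stripped before comparing.
kernel_at_least() {
  local min=$1 rel=${2:-$(uname -r)}
  rel=${rel%%-*}   # 4.19.12-1.el7.elrepo.x86_64 -> 4.19.12
  # sort -V puts the lower version first; MIN must be that lower (or equal) one
  [ "$(printf '%s\n%s\n' "$min" "$rel" | sort -V | head -n1)" = "$min" ]
}

# Example:
# kernel_at_least 4.18 && echo "kernel OK" || echo "kernel too old"
```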

2.6 Install ipvsadm on all nodes

yum install ipvsadm ipset sysstat conntrack libseccomp -y

# Load the ipvs modules on all nodes. From kernel 4.19 on, nf_conntrack_ipv4 has been merged into nf_conntrack; on older kernels load nf_conntrack_ipv4 instead:

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

# Create /etc/modules-load.d/ipvs.conf with the following content:

cat >/etc/modules-load.d/ipvs.conf <<EOF

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

EOF

# Load the modules at boot

systemctl enable --now systemd-modules-load.service

2.7 Kernel parameters for Kubernetes

# Enable the kernel parameters a Kubernetes cluster needs; configure them on all nodes:

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

sysctl --system
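`sysctl --system` applies settings in order, and a key that appears twice is simply set twice with the later value winning, so a duplicated key is easy to miss. A sketch that lists keys set more than once in a file; `dup_sysctl_keys` is a hypothetical name:

```shell
#!/usr/bin/env bash
# dup_sysctl_keys FILE: print each sysctl key that is assigned more than once in FILE.
# Spaces around "=" are ignored, so "a=1" and "a = 1" count as the same key.
dup_sysctl_keys() {
  awk -F= '
    /^[ \t]*$/ || /^[ \t]*[#;]/ { next }     # skip blank and comment lines
    {
      key = $1
      gsub(/[ \t]/, "", key)
      if (++seen[key] == 2) print key        # report each key once, on its second use
    }
  ' "$1"
}

# Example:
# dup_sysctl_keys /etc/sysctl.d/k8s.conf
```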

# After configuring the kernel on all nodes, reboot the servers and confirm the modules are still loaded after the reboot

reboot

lsmod | grep --color=auto -e ip_vs -e nf_conntrack

# After the reboot, output like the following means everything is fine:

root@k8s-master01[17:19:15]:~$ lsmod | grep --color=auto -e ip_vs -e nf_conntrack

ip_vs_ftp 16384 0

nf_nat 32768 1 ip_vs_ftp

ip_vs_sed 16384 0

ip_vs_nq 16384 0

ip_vs_fo 16384 0

ip_vs_sh 16384 0

ip_vs_dh 16384 0

ip_vs_lblcr 16384 0

ip_vs_lblc 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs_wlc 16384 0

ip_vs_lc 16384 0

ip_vs 151552 24 ip_vs_wlc,ip_vs_rr,ip_vs_dh,ip_vs_lblcr,ip_vs_sh,ip_vs_fo,ip_vs_nq,ip_vs_lblc,ip_vs_wrr,ip_vs_lc,ip_vs_sed,ip_vs_ftp

nf_conntrack 143360 2 nf_nat,ip_vs

nf_defrag_ipv6 20480 1 nf_conntrack

nf_defrag_ipv4 16384 1 nf_conntrack

libcrc32c 16384 4 nf_conntrack,nf_nat,xfs,ip_vs
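Instead of reading the lsmod output by eye, the loaded modules can be compared against /etc/modules-load.d/ipvs.conf. A sketch with the hypothetical name `missing_modules`; note that a module compiled into the kernel (rather than loaded as a module) never appears in lsmod, so it would be reported here even though the feature is available:

```shell
#!/usr/bin/env bash
# missing_modules CONF [LSMOD_FILE]: print modules listed in CONF (one per line, as in
# /etc/modules-load.d/ipvs.conf) that are absent from lsmod output.
# LSMOD_FILE is saved lsmod output (header line included); without it, lsmod is run.
missing_modules() {
  local conf=$1 loaded
  if [ -n "${2:-}" ]; then
    loaded=$(awk 'NR > 1 {print $1}' "$2")
  else
    loaded=$(lsmod | awk 'NR > 1 {print $1}')
  fi
  # skip comments/blank lines, de-duplicate, then test each module name exactly
  grep -v -e '^[[:space:]]*#' -e '^[[:space:]]*$' "$conf" | sort -u |
    while read -r mod; do
      printf '%s\n' "$loaded" | grep -qxF -- "$mod" || echo "$mod"
    done
}

# Example:
# missing_modules /etc/modules-load.d/ipvs.conf
```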

3. Installing the basic components

This section installs the components the cluster uses, such as Docker CE and the Kubernetes components.

3.1 Install docker-ce 20.10 on all nodes

yum install -y docker-ce-20.10.6-* docker-ce-cli-20.10.6-*.x86_64

rm -f /etc/docker/*

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://ajvcw8qn.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

systemctl enable --now docker.service

3.2 Install kubeadm and the Kubernetes components on all machines

yum list kubeadm.x86_64 --showduplicates | sort -r

# Install kubeadm, kubelet and kubectl 1.21 on all nodes:

yum install kubeadm-1.21* kubelet-1.21* kubectl-1.21* -y

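# The default pause image comes from the gcr.io registry, which may be unreachable from mainland China, so configure kubelet to use the pause image from Alibaba Cloud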

cat >/etc/sysconfig/kubelet<<EOF

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.2"

EOF

# Enable kubelet at boot

systemctl daemon-reload

systemctl enable --now kubelet

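3.3 Install the high-availability components [run on the master servers]

Note: if this is not an HA cluster, haproxy and keepalived do not need to be installed.

On a public cloud, use the provider's own load balancer (for example Alibaba Cloud SLB or Tencent Cloud ELB) instead of haproxy and keepalived, because most public clouds do not support keepalived. Also, on Alibaba Cloud the kubectl client cannot run on a master node. Tencent Cloud is recommended here because Alibaba Cloud's SLB has a loopback problem: a server behind the SLB cannot reach the SLB address itself, whereas Tencent Cloud has fixed this.

# Install HAProxy and KeepAlived with yum on all master nodes: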

root@k8s-master01[17:44:47]:~$ yum install keepalived haproxy -y

# Configure HAProxy:

# Configure HAProxy on all master nodes (see the HAProxy documentation for details; the configuration is identical on every master):

mkdir -p /etc/haproxy

cat >/etc/haproxy/haproxy.cfg<<"EOF"

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

frontend k8s-master

bind 0.0.0.0:16443

bind 127.0.0.1:16443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-master01 192.168.1.151:6443 check

server k8s-master02 192.168.1.152:6443 check

server k8s-master03 192.168.1.153:6443 check

EOF

# Note: in the backend section of haproxy.cfg above, the server lines must use your own master IPs.

# All master nodes run Keepalived, but the configuration differs per node: adjust each node's IP (mcast_src_ip) and NIC name (interface parameter) in /etc/keepalived/keepalived.conf

# Configure Keepalived

# Give each server a different priority, e.g. 101 on master01 and lower values such as 100 and 99 on the others

# master01 configuration:

[root@k8s-master01 pki]# mkdir -p /etc/keepalived

[root@k8s-master01 pki]# cat >/etc/keepalived/keepalived.conf<<"EOF"

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

interface eth0

mcast_src_ip 192.168.1.151

virtual_router_id 51

priority 101

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.1.160

}

track_script {

chk_apiserver

}

}

EOF

# master02 configuration:

[root@k8s-master02 pki]# cat >/etc/keepalived/keepalived.conf<<"EOF"

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

mcast_src_ip 192.168.1.152

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.1.160

}

track_script {

chk_apiserver

}

}

EOF

# master03 configuration:

[root@k8s-master03 pki]# cat >/etc/keepalived/keepalived.conf<<"EOF"

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

mcast_src_ip 192.168.1.153

virtual_router_id 51

priority 99

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.1.160

}

track_script {

chk_apiserver

}

}

EOF

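# Shortcut (take the local IP from a variable). This template uses state MASTER and priority 101, which is only right on master01; on master02 and master03 change state to BACKUP and lower the priority: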

host=$(hostname -i)

cat >/etc/keepalived/keepalived.conf<<EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

interface eth0

mcast_src_ip ${host}

virtual_router_id 51

priority 101

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.1.160

}

track_script {

chk_apiserver

}

}

EOF
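Because the shortcut template hard-codes state MASTER and priority 101, it needs per-node values on master02 and master03. A sketch of a hypothetical helper that returns the state and priority for each hostname; the 100/99 values for master02/master03 are an assumption chosen so that priorities differ:

```shell
#!/usr/bin/env bash
# keepalived_role HOSTNAME: print "STATE PRIORITY" for that master's vrrp_instance.
# Mirrors the per-node configs above for master01/master02; master03=99 is an assumption.
keepalived_role() {
  case $1 in
    k8s-master01) echo "MASTER 101" ;;
    k8s-master02) echo "BACKUP 100" ;;
    k8s-master03) echo "BACKUP 99" ;;
    *) echo "unknown master: $1" >&2; return 1 ;;
  esac
}

# Example: compute per-node values, then use "state ${state}" and "priority ${prio}"
# in the shortcut heredoc instead of the hard-coded MASTER/101
# read -r state prio <<< "$(keepalived_role "$(hostname)")"
```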

3.4 Health check configuration [add the health check script on all master servers]

cat > /etc/keepalived/check_apiserver.sh <<"EOF"

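#!/bin/bash

# Check haproxy up to 3 times, 1 s apart; if it is never running,

# stop keepalived so the VIP fails over to another master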

err=0

for k in $(seq 1 3)

do

check_code=$(pgrep haproxy)

if [[ $check_code == "" ]]; then

err=$((err + 1))

sleep 1

continue

else

err=0

break

fi

done

if [[ $err != "0" ]]; then

echo "systemctl stop keepalived"

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi

EOF

chmod +x /etc/keepalived/check_apiserver.sh

3.5 Start haproxy and keepalived [on every master]

systemctl daemon-reload

systemctl enable --now haproxy

systemctl enable --now keepalived

3.6 Verify haproxy and keepalived

Important: if keepalived and haproxy are installed, check that keepalived works correctly,

which here means checking that the VIP is reachable:

ping 192.168.1.160 -c 4

root@k8s-master01[18:17:06]:~$ ping 192.168.1.160 -c 4

PING 192.168.1.160 (192.168.1.160) 56(84) bytes of data.

64 bytes from 192.168.1.160: icmp_seq=1 ttl=64 time=0.045 ms

root@k8s-master02[18:17:10]:~$ ping 192.168.1.160 -c 4

PING 192.168.1.160 (192.168.1.160) 56(84) bytes of data.

64 bytes from 192.168.1.160: icmp_seq=1 ttl=64 time=1.00 ms

root@k8s-master03[18:17:13]:~$ ping 192.168.1.160 -c 4

PING 192.168.1.160 (192.168.1.160) 56(84) bytes of data.

64 bytes from 192.168.1.160: icmp_seq=1 ttl=64 time=2.48 ms

telnet test:

root@k8s-master03[18:19:51]:~$ telnet 192.168.1.160 16443

Trying 192.168.1.160...

Connected to 192.168.1.160.

Escape character is '^]'.

Connection closed by foreign host.

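Note: if ping fails and telnet does not show the `]` escape-character prompt, the VIP is not usable. Do not continue; troubleshoot keepalived first, checking the firewall and SELinux, the status of haproxy and keepalived, the listening ports, and so on:

On all nodes, firewalld must be disabled and inactive: systemctl status firewalld

On all nodes, SELinux must be disabled: getenforce

On the masters, check the haproxy and keepalived status: systemctl status keepalived haproxy

On the masters, check the listening ports: netstat -lntp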


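4.0 Kubernetes cluster initialization

Official documentation:

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/high-availability/

Create the kubeadm-config.yaml configuration file on master01 as follows:

Master01: (Note: if this is not an HA cluster, change 192.168.1.160:16443 to master01's address and 16443 to the apiserver port, which defaults to 6443. Also set kubernetesVersion to the kubeadm version installed on your servers; check it with kubeadm version.)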

# To check the version:

kubectl version

root@k8s-master01[18:25:48]:~$ kubectl version

Client Version: version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.0", GitCommit:"cb303e613a121a29364f75cc67d3d580833a7479", GitTreeState:"clean", BuildDate:"2021-04-08T16:31:21Z", GoVersion:"go1.16.1", Compiler:"gc", Platform:"linux/amd64"}

Since the installed version is GitVersion:"v1.21.0",

set the version in the YAML file below to v1.21.0.

cat kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: 7t2weq.bjbawausm0jaxury
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.1.151
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master01
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  certSANs:
  - 192.168.1.160
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 192.168.1.160:16443
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.21.0
networking:
  dnsDomain: cluster.local
  podSubnet: 172.16.0.0/12
  serviceSubnet: 10.96.0.0/12
scheduler: {}

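Migrate the kubeadm file [master01 only]. Note: the fields to review are the subnets and the version. The subnets can stay as they are, but the version should match the installed version. podSubnet must be the Pod subnet you planned (172.16.0.0/12 in this file); check it with grep 'podSubnet:' /root/kubeadm-config.yaml

# Migrate kubeadm-config.yaml to the current kubeadm config format (written to new.yaml):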

kubeadm config migrate --old-config kubeadm-config.yaml --new-config new.yaml
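The Pod subnet, the Service subnet and the node network (assumed here to be 192.168.1.0/24, based on the addresses in this guide) must not overlap, since overlapping ranges cause routing conflicts. A minimal pure-bash sketch for checking this before running kubeadm init; the function names are hypothetical and only IPv4 is handled:

```shell
#!/usr/bin/env bash
# Hypothetical helpers: check whether two IPv4 CIDR blocks overlap (pure bash arithmetic).

# ip_to_int A.B.C.D: print the address as a 32-bit integer
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# cidr_range NET/LEN: print the first and last address of the block as integers
cidr_range() {
  local ip=${1%/*} len=${1#*/} n mask first last
  n=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  first=$(( n & mask ))
  last=$(( first | (~mask & 0xFFFFFFFF) ))
  echo "$first $last"
}

# cidr_overlap A B: succeed (exit 0) if the two blocks share any address
cidr_overlap() {
  local f1 l1 f2 l2
  read -r f1 l1 <<< "$(cidr_range "$1")"
  read -r f2 l2 <<< "$(cidr_range "$2")"
  (( f1 <= l2 && f2 <= l1 ))
}

# Example:
# cidr_overlap 172.16.0.0/12 10.96.0.0/12 && echo "Pod and Service subnets overlap"
# cidr_overlap 10.96.0.0/12 192.168.1.0/24 && echo "Service subnet overlaps the node network"
```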

# Copy new.yaml to the other master nodes, then pre-pull the images on all master nodes to shorten initialization:

for i in k8s-master02 k8s-master03; do scp new.yaml $i:/root/; done

# Pre-pull the images on the master nodes to save time during initialization

kubeadm config images pull --config /root/new.yaml

systemctl enable --now kubelet

# If kubeadm config images pull --config /root/new.yaml fails with an error like this:

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.4.1

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0

failed to pull image "registry.cn-hangzhou.aliyuncs.com/google_containers/coredns/coredns:v1.8.0": output: Error response from daemon: manifest for registry.cn-hangzhou.aliyuncs.com/google_containers/coredns/coredns:v1.8.0 not found: manifest unknown: manifest unknown

, error: exit status 1

To see the stack trace of this error execute with --v=5 or higher

# If coredns fails to start, its Pods show ImagePullBackOff:

coredns-57d4cbf879-gnh6j 0/1 ImagePullBackOff 0 6m

coredns-57d4cbf879-z79bt 0/1 ImagePullBackOff 0 6m

# Run the following on all nodes to pull the image from another registry and retag it with the expected name:

docker pull registry.cn-beijing.aliyuncs.com/dotbalo/coredns:1.8.0

docker tag registry.cn-beijing.aliyuncs.com/dotbalo/coredns:1.8.0 registry.cn-hangzhou.aliyuncs.com/google_containers/coredns/coredns:v1.8.0

# Check again; the Pods start automatically:

coredns-57d4cbf879-gnh6j 1/1 Running 0 16h

coredns-57d4cbf879-z79bt 1/1 Running 0 16h

Enable kubelet at boot on all nodes:

systemctl daemon-reload

systemctl enable --now kubelet

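4.2 Initialize master01

Initialize master01. Initialization generates the certificates and configuration files under /etc/kubernetes; the other masters then only need to join master01:

# Run the initialization on master01: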

kubeadm init --config /root/new.yaml --upload-certs

# If initialization fails, reset and run it again:

kubeadm reset -f ; ipvsadm --clear ; rm -rf ~/.kube

Key output:

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

[join other masters to the cluster]

kubeadm join 192.168.1.160:16443 --token 7t2weq.bjbawausm0jaxury \
    --discovery-token-ca-cert-hash sha256:aeb438bb077768d6626fbc5f2ff61a903bfea24c2eaaa3fde49bace433176384 \
    --control-plane --certificate-key 530a5f56ce32e4ab69b384f41e6165327e23577f4924558b36efb6bb08a883e5

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

[join worker nodes to the cluster]

kubeadm join 192.168.1.160:16443 --token 7t2weq.bjbawausm0jaxury \
    --discovery-token-ca-cert-hash sha256:aeb438bb077768d6626fbc5f2ff61a903bfea24c2eaaa3fde49bace433176384

# Configure kubectl access for the current user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

cat <<EOF >> /root/.bashrc

export KUBECONFIG=/etc/kubernetes/admin.conf

EOF

source /root/.bashrc

4.3 Join master02 and master03 as control-plane nodes

# If the token has expired, generate a new one:

kubeadm token create --print-join-command

# Control-plane nodes also need a --certificate-key:

root@k8s-master01[15:36:45]:~$ kubeadm init phase upload-certs --upload-certs

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

9b5153fe13fe5a9286eb68fae35311f7357b854a2f8ad925bc7e45b16d2b886e
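The control-plane join command is the worker join command printed by `kubeadm token create --print-join-command` plus `--control-plane --certificate-key` with the key printed above. A sketch of a hypothetical helper that assembles it:

```shell
#!/usr/bin/env bash
# control_plane_join JOIN_CMD CERT_KEY: turn a worker join command into a control-plane one.
control_plane_join() {
  local cmd=$1 key=$2
  # strip trailing whitespace so the appended flags follow directly
  cmd=${cmd%"${cmd##*[![:space:]]}"}
  printf '%s --control-plane --certificate-key %s\n' "$cmd" "$key"
}

# Example (on master01; assumes, as in the output above, that the key is the last line):
# join=$(kubeadm token create --print-join-command)
# key=$(kubeadm init phase upload-certs --upload-certs | tail -n1)
# control_plane_join "$join" "$key"
```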

# Join the other masters:

kubeadm join 192.168.1.160:16443 --token fgtxr1.bz6dw1tci1kbj977 --discovery-token-ca-cert-hash sha256:06ebf46458a41922ff1f5b3bc49365cf3dd938f1a7e3e4a8c8049b5ec5a3aaa5 \
    --control-plane --certificate-key 9b5153fe13fe5a9286eb68fae35311f7357b854a2f8ad925bc7e45b16d2b886e

4.4 Join node01 and node02 as worker nodes

kubeadm join 192.168.1.160:16443 --token 7t2weq.bjbawausm0jaxury --discovery-token-ca-cert-hash sha256:aeb438bb077768d6626fbc5f2ff61a903bfea24c2eaaa3fde49bace433176384

# Sample run:

root@k8s-node01[15:29:45]:~$ kubeadm join 192.168.1.160:16443 --token 7t2weq.bjbawausm0jaxury \
> --discovery-token-ca-cert-hash sha256:aeb438bb077768d6626fbc5f2ff61a903bfea24c2eaaa3fde49bace433176384

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

4.5 Overview of the joined nodes

root@k8s-master01[16:06:10]:~$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane,master 6m15s v1.21.0

k8s-master02 NotReady control-plane,master 5m23s v1.21.0

k8s-master03 NotReady control-plane,master 4m21s v1.21.0

k8s-node01 NotReady <none> 11s v1.21.0

k8s-node02 NotReady <none> 0s v1.21.0
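NotReady is expected at this stage because no network plugin has been installed yet; the nodes become Ready after Calico is deployed in section 4.7. A sketch of a hypothetical helper that lists nodes whose status is not Ready, which can be used to wait for that:

```shell
#!/usr/bin/env bash
# not_ready_nodes [FILE]: print names of nodes whose STATUS column is not exactly "Ready"
# in "kubectl get nodes" output, read from FILE or from kubectl when FILE is omitted.
not_ready_nodes() {
  if [ -n "${1:-}" ]; then cat "$1"; else kubectl get nodes; fi |
    awk 'NR > 1 && $2 != "Ready" { print $1 }'   # NR > 1 skips the header row
}

# Example: wait until every node is Ready
# while [ -n "$(not_ready_nodes)" ]; do sleep 10; done
```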

With a kubeadm installation, all system components run as containers in the kube-system namespace. Check the Pod status:

root@k8s-master01[20:18:57]:~$ kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-57d4cbf879-8vs6c 0/1 Pending 0 75m

coredns-57d4cbf879-p99nc 0/1 Pending 0 75m

etcd-k8s-master01 1/1 Running 0 75m

etcd-k8s-master02 1/1 Running 0 4m45s

etcd-k8s-master03 1/1 Running 0 4m23s

kube-apiserver-k8s-master01 1/1 Running 0 75m

kube-apiserver-k8s-master02 1/1 Running 0 4m45s

kube-apiserver-k8s-master03 1/1 Running 0 4m10s

kube-controller-manager-k8s-master01 1/1 Running 1 75m

kube-controller-manager-k8s-master02 1/1 Running 0 4m45s

kube-controller-manager-k8s-master03 1/1 Running 0 4m21s

kube-proxy-2zc6p 1/1 Running 0 4m46s

kube-proxy-djtbn 1/1 Running 0 3m30s

kube-proxy-g2ddr 1/1 Running 0 75m

kube-proxy-gf7w8 1/1 Running 0 3m34s

kube-proxy-mfsbz 1/1 Running 0 3m59s

kube-scheduler-k8s-master01 1/1 Running 1 75m

kube-scheduler-k8s-master02 1/1 Running 0 4m45s

kube-scheduler-k8s-master03 1/1 Running 0 4m19s

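# coredns stays Pending until a network plugin is installed (Calico, section 4.7). If its image could not be pulled, pull and retag it again, then check again: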

docker pull registry.cn-beijing.aliyuncs.com/dotbalo/coredns:1.8.0

docker tag registry.cn-beijing.aliyuncs.com/dotbalo/coredns:1.8.0 registry.cn-hangzhou.aliyuncs.com/google_containers/coredns/coredns:v1.8.0

# coredns is now running (this output was taken after Calico was installed):

root@k8s-master01[20:44:59]:~$ kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-cdd5755b9-tfhp8 1/1 Running 0 7m31s

calico-node-4rsfw 1/1 Running 0 7m31s

calico-node-cgjjw 1/1 Running 0 7m31s

calico-node-gw2zz 1/1 Running 0 7m31s

calico-node-hzjn7 1/1 Running 0 7m31s

calico-node-sbjx4 1/1 Running 0 7m31s

coredns-57d4cbf879-8vs6c 1/1 Running 0 100m

coredns-57d4cbf879-p99nc 1/1 Running 0 100m

etcd-k8s-master01 1/1 Running 0 100m

etcd-k8s-master02 1/1 Running 0 29m

etcd-k8s-master03 1/1 Running 0 29m

kube-apiserver-k8s-master01 1/1 Running 0 100m

kube-apiserver-k8s-master02 1/1 Running 0 29m

kube-apiserver-k8s-master03 1/1 Running 0 29m

kube-controller-manager-k8s-master01 1/1 Running 1 100m

kube-controller-manager-k8s-master02 1/1 Running 0 29m

kube-controller-manager-k8s-master03 1/1 Running 0 29m

kube-proxy-2zc6p 1/1 Running 0 29m

kube-proxy-djtbn 1/1 Running 0 28m

kube-proxy-g2ddr 1/1 Running 0 100m

kube-proxy-gf7w8 1/1 Running 0 28m

kube-proxy-mfsbz 1/1 Running 0 28m

kube-scheduler-k8s-master01 1/1 Running 1 100m

kube-scheduler-k8s-master02 1/1 Running 0 29m

kube-scheduler-k8s-master03 1/1 Running 0 29m

4.6 Set the environment variable on master01 for accessing the Kubernetes cluster:

cat <<EOF >> /root/.bashrc

export KUBECONFIG=/etc/kubernetes/admin.conf

EOF

source /root/.bashrc

4.7 Install the Calico network plugin [on master01]

/** The following steps run on master01 only **/

cd /root/k8s-ha-install && git checkout manual-installation-v1.21.x && cd calico/

Modify the following settings in calico-etcd.yaml:

sed -i 's#etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"#etcd_endpoints: "https://192.168.1.151:2379,https://192.168.1.152:2379,https://192.168.1.153:2379"#g' calico-etcd.yaml

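ETCD_CA=`cat /etc/kubernetes/pki/etcd/ca.crt | base64 | tr -d '\n'`

ETCD_CERT=`cat /etc/kubernetes/pki/etcd/server.crt | base64 | tr -d '\n'`

ETCD_KEY=`cat /etc/kubernetes/pki/etcd/server.key | base64 | tr -d '\n'`

# Write the base64-encoded etcd certificates into the Secret and point the ConfigMap at the mounted certificate files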

sed -i "s@# etcd-key: null@etcd-key: ${ETCD_KEY}@g; s@# etcd-cert: null@etcd-cert: ${ETCD_CERT}@g; s@# etcd-ca: null@etcd-ca: ${ETCD_CA}@g" calico-etcd.yaml

sed -i 's#etcd_ca: ""#etcd_ca: "/calico-secrets/etcd-ca"#g; s#etcd_cert: ""#etcd_cert: "/calico-secrets/etcd-cert"#g; s#etcd_key: "" #etcd_key: "/calico-secrets/etcd-key" #g' calico-etcd.yaml

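POD_SUBNET=`cat /etc/kubernetes/manifests/kube-controller-manager.yaml | grep cluster-cidr= | awk -F= '{print $NF}'`

Note: the next step changes the subnet under CALICO_IPV4POOL_CIDR in calico-etcd.yaml to your own Pod subnet (that is, replaces 192.168.x.x/16 with the cluster's Pod subnet) and uncomments it.

So make sure this value has not been changed by an earlier bulk replacement; if it has, restore it first: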

cd /root/k8s-ha-install/calico

sed -i 's@# - name: CALICO_IPV4POOL_CIDR@- name: CALICO_IPV4POOL_CIDR@g; s@# value: "192.168.0.0/16"@ value: '"${POD_SUBNET}"'@g' calico-etcd.yaml
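If `--cluster-cidr` is not found, POD_SUBNET ends up empty and the sed above writes an empty value into calico-etcd.yaml. A sketch of a hypothetical helper that extracts the value with sed so the result can be checked before applying:

```shell
#!/usr/bin/env bash
# cluster_cidr [MANIFEST]: print the value of --cluster-cidr from the kube-controller-manager
# static Pod manifest (defaults to the path kubeadm uses).
cluster_cidr() {
  local manifest=${1:-/etc/kubernetes/manifests/kube-controller-manager.yaml}
  sed -n 's/.*--cluster-cidr=\([^[:space:]"]*\).*/\1/p' "$manifest" | head -n1
}

# Example: stop instead of writing an empty CIDR into calico-etcd.yaml
# POD_SUBNET=$(cluster_cidr); [ -n "$POD_SUBNET" ] || echo "cluster-cidr not found" >&2
```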

After the changes, apply the manifest:

kubectl apply -f calico-etcd.yaml

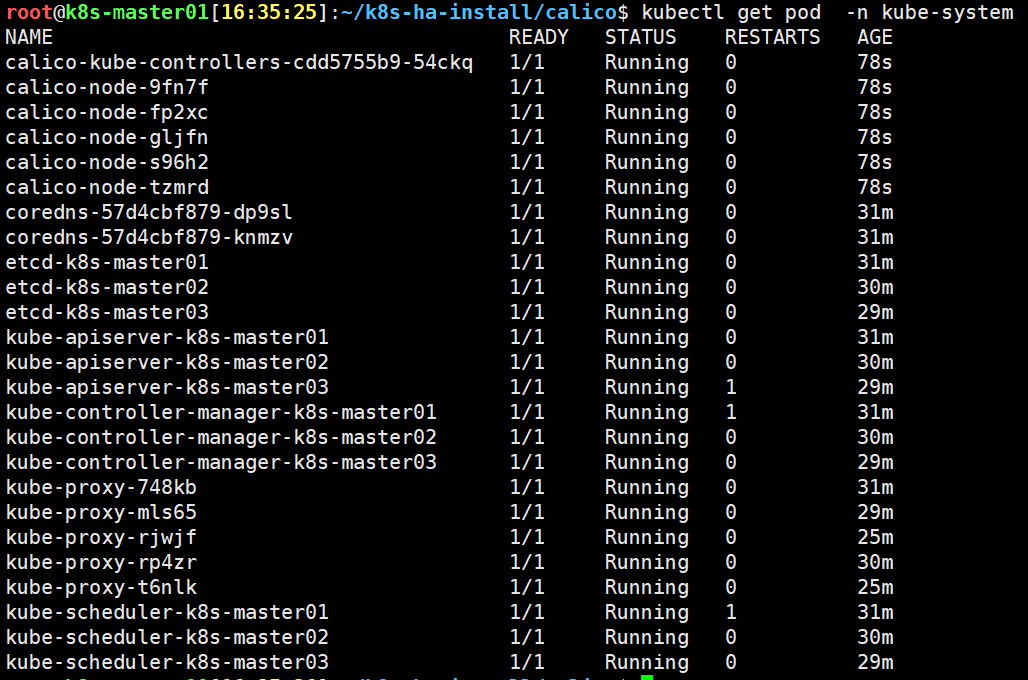

Check the Pod and node status:

kubectl get pod -n kube-system

5. Deploying Metrics Server

In recent Kubernetes versions, resource metrics are collected by Metrics Server, which reports the CPU and memory usage of nodes and Pods.

# Copy front-proxy-ca.crt from master01 to the other nodes

scp -r /etc/kubernetes/pki/front-proxy-ca.crt k8s-node01:/etc/kubernetes/pki/front-proxy-ca.crt

scp -r /etc/kubernetes/pki/front-proxy-ca.crt k8s-node02:/etc/kubernetes/pki/front-proxy-ca.crt

scp -r /etc/kubernetes/pki/front-proxy-ca.crt k8s-master02:/etc/kubernetes/pki/front-proxy-ca.crt

scp -r /etc/kubernetes/pki/front-proxy-ca.crt k8s-master03:/etc/kubernetes/pki/front-proxy-ca.crt

# Install Metrics Server

cd /root/k8s-ha-install/metrics-server-0.4.x-kubeadm/

kubectl create -f comp.yaml

# Output:

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

# Check the result:

Command: kubectl get po -n kube-system -l k8s-app=metrics-server

Result:

NAME READY STATUS RESTARTS AGE

metrics-server-d6c46b546-4sxb8 1/1 Running 0 114s

Command: kubectl top po --use-protocol-buffers -A

NAMESPACE NAME CPU(cores) MEMORY(bytes)

kube-system calico-kube-controllers 2m 20Mi

kube-system calico-node-4rsfw 22m 78Mi

kube-system calico-node-cgjjw 26m 75Mi

kube-system calico-node-gw2zz 22m 72Mi

kube-system calico-node-hzjn7 24m 75Mi

kube-system calico-node-sbjx4 23m 70Mi

kube-system coredns-57d4cbf879-8vs6c 2m 17Mi

kube-system coredns-57d4cbf879-p99nc 2m 18Mi

kube-system etcd-k8s-master01 29m 80Mi

kube-system etcd-k8s-master02 25m 79Mi

kube-system etcd-k8s-master03 25m 80Mi

kube-system kube-apiserver-k8s-master01 45m 360Mi

kube-system kube-apiserver-k8s-master02 31m 352Mi

kube-system kube-apiserver-k8s-master03 28m 344Mi

kube-system kube-controller-manager-k8s-master01 2m 27Mi

kube-system kube-controller-manager-k8s-master02 9m 63Mi

kube-system kube-controller-manager-k8s-master03 1m 29Mi

kube-system kube-proxy-2zc6p 1m 26Mi

kube-system kube-proxy-djtbn 1m 25Mi

kube-system kube-proxy-g2ddr 1m 24Mi

kube-system kube-proxy-gf7w8 1m 26Mi

kube-system kube-proxy-mfsbz 1m 23Mi

kube-system kube-scheduler-k8s-master01 2m 26Mi

kube-system kube-scheduler-k8s-master02 2m 30Mi

kube-system kube-scheduler-k8s-master03 2m 27Mi

kube-system metrics-server-d6c46b546-4sxb8 4m 23Mi

5.1 Install the dashboard

# 1. Install the older version:

cd /root/k8s-ha-install/dashboard/

kubectl create -f .

# 2. Or install the latest version (v2.2.0 here):

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.2.0/aio/deploy/recommended.yaml

# Grant access:

vim admin.yaml

#--------------------------admin.yaml--------------------------#
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system
#--------------------------admin.yaml--------------------------#

# Apply it

kubectl apply -f admin.yaml -n kube-system

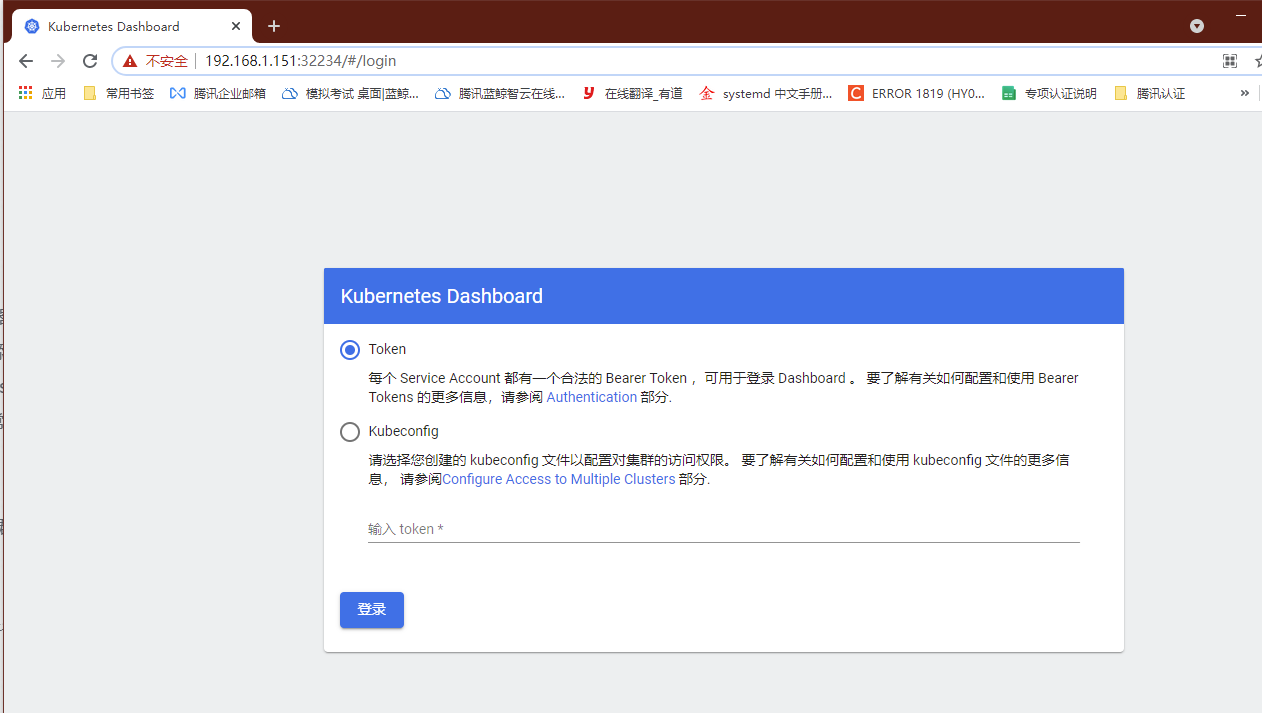

5.2 Log in to the dashboard

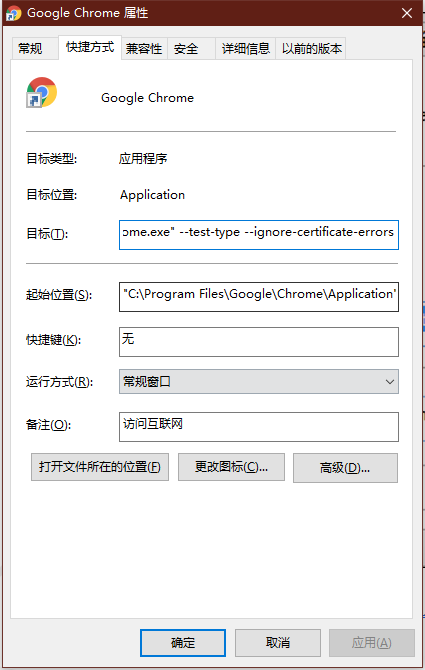

1. Add startup flags to the Google Chrome launcher to work around the dashboard being inaccessible (see Figure 1-1):

Chrome flags to add:

--test-type --ignore-certificate-errors

2. Change the dashboard Service type to NodePort:

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

spec:
  clusterIP: 10.108.157.21
  clusterIPs:
  - 10.108.157.21
  externalTrafficPolicy: Cluster
  ports:
  - nodePort: 30195
    port: 443
    protocol: TCP
    targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  sessionAffinity: None
  type: ClusterIP  # change to NodePort

# After the change:

spec:
  clusterIP: 10.108.157.21
  clusterIPs:
  - 10.108.157.21
  externalTrafficPolicy: Cluster
  ports:
  - nodePort: 30195
    port: 443
    protocol: TCP
    targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  sessionAffinity: None
  type: NodePort  # now NodePort

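Then access the dashboard:

# Find the NodePort:

kubectl get svc kubernetes-dashboard -n kubernetes-dashboard

Access it over HTTPS on any node IP and that NodePort (30195 in the Service spec above), for example:

https://192.168.1.151:30195/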

# Get the token:

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

# Resulting token:

eyJhbGciOiJSUzI1NiIsImtpZCI6IlFkM3BUd0xxVEZ6a0t4Njl2QnVaMWhLNUl4NFlzUkVrQngzbmlQeG4zczgifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXRidjRkIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI0MzI2NDM1My1iNzY4LTRlNTEtYjljZS0wY2FlMzJlNThmOTgiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.jKtz39e-9EBlLhIW571Ms63ywad2z0s2hEa0ZalBRcEDXDKLN7jDejTLrrcyeNY5pRa8AUtbS1ckiYWI7OOlR3PBjD5Tgaz2HEKFw0FEoNMQnU8uLzR5WbUX4obOpzAyB4WYmCS9vK-ud98mmMHOT15Ee2BeaxIWTBL715m-NJcIxxByvsBtogVj7zWJayAVLOspMLps8hWk8XJDXpWEx0J8uU9KUPOey3YMiO5gNlk5TRHcZJOGg_7HV8_55MqKTQ8K9Jhsu5uVieB3kuJdwJdcGCGrMi1UVGx-RgJwGbZqMkXgy55QAp2he_sNFZmThhuxvz7FIclUyyoUZ43V9Q
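The token is a JWT whose middle segment is base64url-encoded JSON, so decoding it locally shows which ServiceAccount it belongs to before you paste it. A sketch with the hypothetical function `jwt_payload`; it only decodes and does not verify the signature, and it assumes GNU `base64 -d`:

```shell
#!/usr/bin/env bash
# jwt_payload TOKEN: print the decoded payload (second dot-separated segment) of a JWT.
# base64url uses "-" and "_" and drops "=" padding, so both are restored before decoding.
# Decoding only: the signature is NOT verified.
jwt_payload() {
  local p
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  case $(( ${#p} % 4 )) in
    2) p="$p==" ;;
    3) p="$p=" ;;
  esac
  printf '%s' "$p" | base64 -d
}

# Example: the "sub" field should name system:serviceaccount:kube-system:admin-user
# jwt_payload "$TOKEN"; echo
```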

Paste the token into the token field on the web login page.

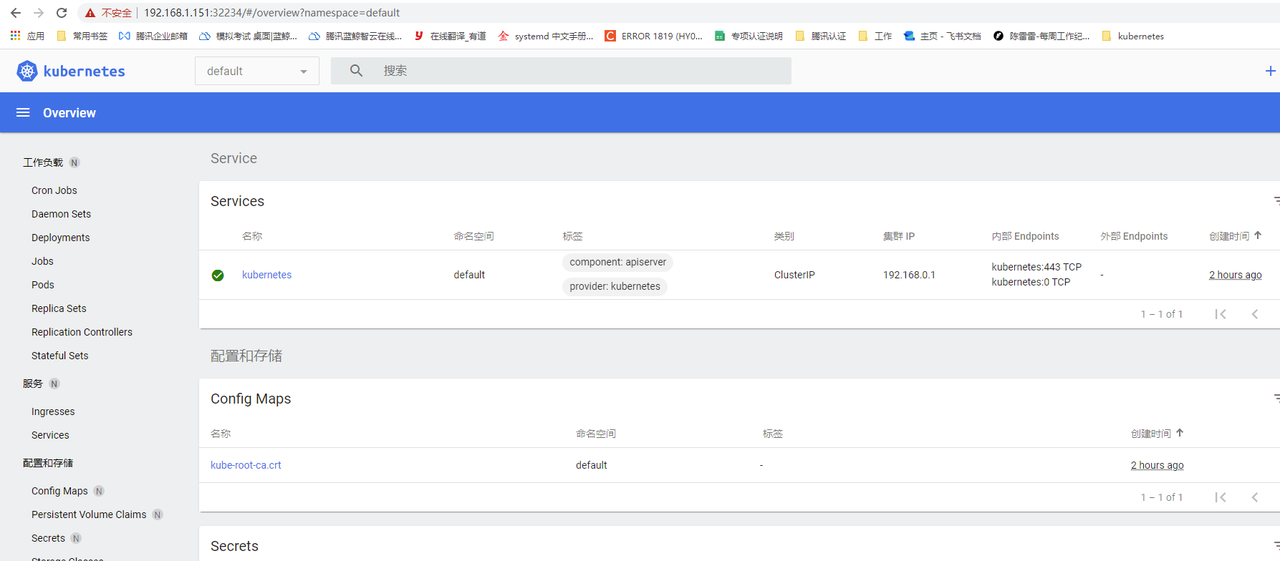

Login succeeded:

6. Configuration changes

Switch kube-proxy to ipvs mode. The ipvs setting was left commented out when the cluster was initialized, so change it manually:

Run on master01:

kubectl edit cm kube-proxy -n kube-system

Set the mode: field to mode: ipvs

Roll out the kube-proxy Pods again:

kubectl patch daemonset kube-proxy -p "{\"spec\":{\"template\":{\"metadata\":{\"annotations\":{\"date\":\"`date +'%s'`\"}}}}}" -n kube-system
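The escaped quotes in the patch above are easy to lose when copying. An alternative sketch builds the same patch with printf, where the single-quoted format keeps the JSON double quotes intact; `restart_patch` is a hypothetical name:

```shell
#!/usr/bin/env bash
# restart_patch [TIMESTAMP]: print a patch that sets a "date" annotation on the Pod template;
# changing the template makes the DaemonSet roll out new Pods.
restart_patch() {
  local ts=${1:-$(date +%s)}
  printf '{"spec":{"template":{"metadata":{"annotations":{"date":"%s"}}}}}\n' "$ts"
}

# Example:
# kubectl patch daemonset kube-proxy -n kube-system -p "$(restart_patch)"
```

On kubectl 1.15 and later, `kubectl -n kube-system rollout restart daemonset kube-proxy` has the same effect, restarting the Pods by updating a Pod-template annotation.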

Verify the kube-proxy mode:


[root@k8s-master01 1.1.1]# curl 127.0.0.1:10249/proxyMode

ipvs

Cluster status after deployment:

root@k8s-master01[21:29:32]:~$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready control-plane,master 145m v1.21.0

k8s-master02 Ready control-plane,master 73m v1.21.0

k8s-master03 Ready control-plane,master 73m v1.21.0

k8s-node01 Ready <none> 72m v1.21.0

k8s-node02 Ready <none> 72m v1.21.0

root@k8s-master01[21:29:33]:~$ cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.151 k8s-master01

192.168.1.152 k8s-master02

192.168.1.153 k8s-master03

192.168.1.160 k8s-master-lb

192.168.1.154 k8s-node01

192.168.1.155 k8s-node02

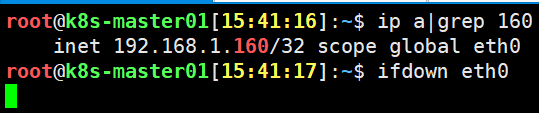

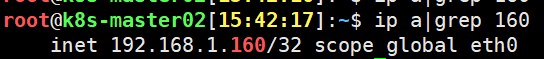

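7. High-availability test

k8s-master01 holds the VIP; after eth0 is stopped on it, the VIP disappears from that node.

After that, the VIP successfully fails over to master02.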
