After three days of work, I finally got an HBase cluster running on Kubernetes. The steps are described below.

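Building the HBase image

The configuration files are core-site.xml, hbase-site.xml, hdfs-site.xml, and yarn-site.xml. This setup builds on the ZooKeeper and Hadoop environments I set up earlier, so many of the settings are specific to those two environments. The current image's configuration does not support a highly available HBase cluster; that would require a separate image.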

core-site.xml

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hadoop-hdfs-master:9000/</value>
  </property>
  <property>
    <name>io.compression.codecs</name>
    <value>
      org.apache.hadoop.io.compress.GzipCodec,
      org.apache.hadoop.io.compress.DefaultCodec,
      com.hadoop.compression.lzo.LzoCodec,
      com.hadoop.compression.lzo.LzopCodec,
      org.apache.hadoop.io.compress.BZip2Codec
    </value>
  </property>
  <property>
    <name>io.compression.codec.lzo.class</name>
    <value>com.hadoop.compression.lzo.LzoCodec</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-bind-host</name>
    <value>0.0.0.0</value>
  </property>
  <property>
    <name>hadoop.security.token.service.use_ip</name>
    <value>false</value>
  </property>
</configuration>

hbase-site.xml

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://@HDFS_PATH@/hbase/</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>@ZOOKEEPER_IP_LIST@</value>
  </property>
  <property>
    <name>hbase.zookeeper.property.clientPort</name>
    <value>@ZOOKEEPER_PORT@</value>
  </property>
  <property>
    <name>hbase.regionserver.restart.on.zk.expire</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.client.pause</name>
    <value>50</value>
  </property>
  <property>
    <name>hbase.client.retries.number</name>
    <value>3</value>
  </property>
  <property>
    <name>hbase.rpc.timeout</name>
    <value>2000</value>
  </property>
  <property>
    <name>hbase.client.operation.timeout</name>
    <value>3000</value>
  </property>
  <property>
    <name>hbase.client.scanner.timeout.period</name>
    <value>10000</value>
  </property>
  <property>
    <name>zookeeper.session.timeout</name>
    <value>300000</value>
  </property>
  <property>
    <name>hbase.hregion.max.filesize</name>
    <value>1073741824</value>
  </property>
  <property>
    <name>fs.hdfs.impl</name>
    <value>org.apache.hadoop.hdfs.DistributedFileSystem</value>
  </property>
  <property>
    <name>hbase.client.keyvalue.maxsize</name>
    <value>1048576000</value>
  </property>
</configuration>
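
The `@HDFS_PATH@`, `@ZOOKEEPER_IP_LIST@` and `@ZOOKEEPER_PORT@` tokens in hbase-site.xml are placeholders that start-kubernetes-hbase.sh (shown later in this post) replaces with environment variables when the container starts. Here is a minimal sketch of that substitution, run against a throwaway file so it can be tried outside the image. The `render_conf` helper and the temporary file are illustrative assumptions, not part of the image, and the sample values are the ones used in hbase.yaml.

```shell
#!/bin/bash
# Sketch: fill @NAME@ placeholders the way start-kubernetes-hbase.sh does.
# render_conf is a hypothetical helper, not part of the original image.
render_conf() {
  file="$1"
  # '|' is the sed delimiter so that values containing '/' do not break the expression.
  sed \
    -e "s|@HDFS_PATH@|${HDFS_PATH}|g" \
    -e "s|@ZOOKEEPER_IP_LIST@|${ZOOKEEPER_SERVICE_LIST}|g" \
    -e "s|@ZOOKEEPER_PORT@|${ZOOKEEPER_PORT}|g" \
    "$file" > "$file.rendered" && mv "$file.rendered" "$file"
}

# Sample values, matching the env section of hbase.yaml.
HDFS_PATH="hadoop-hdfs-master:9000"
ZOOKEEPER_SERVICE_LIST="zk-cs"
ZOOKEEPER_PORT="2181"

# A three-line stand-in for the placeholder lines of hbase-site.xml.
tmp_conf="$(mktemp)"
printf '%s\n' \
  '<value>hdfs://@HDFS_PATH@/hbase/</value>' \
  '<value>@ZOOKEEPER_IP_LIST@</value>' \
  '<value>@ZOOKEEPER_PORT@</value>' > "$tmp_conf"

render_conf "$tmp_conf"
cat "$tmp_conf"
```

The original script uses `/` as the sed delimiter, which works for the values in this post because none of them contains a slash.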

hdfs-site.xml

<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:///root/hdfs/namenode</value>
    <description>NameNode directory for namespace and transaction logs storage.</description>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:///root/hdfs/datanode</value>
    <description>DataNode directory</description>
  </property>
  <property>
    <name>dfs.namenode.datanode.registration.ip-hostname-check</name>
    <value>false</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
</configuration>

yarn-site.xml

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>hadoop-hdfs-master</value>
  </property>
  <property>
    <name>yarn.resourcemanager.bind-host</name>
    <value>0.0.0.0</value>
  </property>
</configuration>

start-kubernetes-hbase.sh

#!/bin/bash

export HBASE_CONF_FILE=/opt/hbase/conf/hbase-site.xml
export HADOOP_USER_NAME=root
export HBASE_MANAGES_ZK=false

# Fill the @...@ placeholders in hbase-site.xml from environment variables.
sed -i "s/@HDFS_PATH@/$HDFS_PATH/g" "$HBASE_CONF_FILE"
sed -i "s/@ZOOKEEPER_IP_LIST@/$ZOOKEEPER_SERVICE_LIST/g" "$HBASE_CONF_FILE"
sed -i "s/@ZOOKEEPER_PORT@/$ZOOKEEPER_PORT/g" "$HBASE_CONF_FILE"
sed -i "s/@ZNODE_PARENT@/$ZNODE_PARENT/g" "$HBASE_CONF_FILE"

# set fqdn
for i in $(seq 1 10)
do
    # Skip the domain check when CLUSTER_DOMAIN is unset (hbase.yaml does not set it).
    if [ -n "$CLUSTER_DOMAIN" ] && grep --quiet "$CLUSTER_DOMAIN" /etc/hosts; then
        break
    elif grep --quiet "$POD_NAME" /etc/hosts; then
        # Write a copy and cat it back, so /etc/hosts is overwritten in place rather than replaced.
        sed "s/$POD_NAME/${POD_NAME}.${POD_NAMESPACE}.svc.${CLUSTER_DOMAIN} $POD_NAME/g" /etc/hosts > /etc/hosts.bak
        cat /etc/hosts.bak > /etc/hosts
        break
    else
        echo "waiting for /etc/hosts ready"
        sleep 1
    fi
done

# Start the HBase role selected by HBASE_SERVER_TYPE.
if [ "$HBASE_SERVER_TYPE" = "master" ]; then
    /opt/hbase/bin/hbase master start
elif [ "$HBASE_SERVER_TYPE" = "regionserver" ]; then
    /opt/hbase/bin/hbase regionserver start
fi
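
The `# set fqdn` loop in the script rewrites the Pod's own `/etc/hosts` entry so that a fully qualified name comes before the short Pod name (the first name on a hosts line is treated as the canonical one). The sketch below applies the same rewrite to a temporary file instead of the real `/etc/hosts`. The IP address and the `cluster.local` domain are made-up sample values, and the namespace `default` is an assumption.

```shell
#!/bin/bash
# Sketch of the hosts rewrite done by the "set fqdn" loop, applied to a
# temporary file. The IP address, namespace and cluster domain are sample values.
POD_NAME="hbase-master"
POD_NAMESPACE="default"
CLUSTER_DOMAIN="cluster.local"

hosts_file="$(mktemp)"
printf '127.0.0.1\tlocalhost\n10.244.1.15\thbase-master\n' > "$hosts_file"

fqdn="${POD_NAME}.${POD_NAMESPACE}.svc.${CLUSTER_DOMAIN}"

# Put the FQDN in front of the short name on the Pod's own line.
sed "s/$POD_NAME/$fqdn $POD_NAME/g" "$hosts_file" > "$hosts_file.bak"

# Copy the contents back instead of renaming the file over the original,
# mirroring the cat-based approach of the start script.
cat "$hosts_file.bak" > "$hosts_file"

cat "$hosts_file"
```

Inside a container `/etc/hosts` is normally a bind mount, which is presumably why the script writes `/etc/hosts.bak` and copies it back rather than using `sed -i`, since `sed -i` replaces the file by renaming.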

Dockerfile

FROM java:8

MAINTAINER leo.lee(lis85@163.com)

ENV HBASE_VERSION 1.2.6.1
ENV HBASE_INSTALL_DIR /opt/hbase
ENV JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

# download and unpack HBase
RUN mkdir -p ${HBASE_INSTALL_DIR} && \
    curl -L http://mirrors.hust.edu.cn/apache/hbase/stable/hbase-${HBASE_VERSION}-bin.tar.gz | tar -xz --strip=1 -C ${HBASE_INSTALL_DIR}

RUN sed -i "s/httpredir.debian.org/mirrors.163.com/g" /etc/apt/sources.list

# build LZO
WORKDIR /tmp
RUN apt-get update && \
    apt-get install -y build-essential maven lzop liblzo2-2 && \
    wget http://www.oberhumer.com/opensource/lzo/download/lzo-2.10.tar.gz && \
    tar zxvf lzo-2.10.tar.gz && \
    cd lzo-2.10 && \
    ./configure --enable-shared --prefix /usr/local/lzo-2.10 && \
    make && make install && \
    cd .. && git clone https://github.com/twitter/hadoop-lzo.git && cd hadoop-lzo && \
    git checkout release-0.4.20 && \
    C_INCLUDE_PATH=/usr/local/lzo-2.10/include LIBRARY_PATH=/usr/local/lzo-2.10/lib mvn clean package && \
    apt-get remove -y build-essential maven && \
    apt-get clean autoclean && \
    apt-get autoremove --yes && \
    rm -rf /var/lib/{apt,dpkg,cache.log}/ && \
    cd target/native/Linux-amd64-64 && \
    tar -cBf - -C lib . | tar -xBvf - -C /tmp && \
    mkdir -p ${HBASE_INSTALL_DIR}/lib/native && \
    cp /tmp/libgplcompression* ${HBASE_INSTALL_DIR}/lib/native/ && \
    cd /tmp/hadoop-lzo && cp target/hadoop-lzo-0.4.20.jar ${HBASE_INSTALL_DIR}/lib/ && \
    echo "export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/lzo-2.10/lib" >> ${HBASE_INSTALL_DIR}/conf/hbase-env.sh && \
    rm -rf /tmp/lzo-2.10* hadoop-lzo lib libgplcompression*

# add the configuration files and the start script
ADD hbase-site.xml /opt/hbase/conf/hbase-site.xml
ADD core-site.xml /opt/hbase/conf/core-site.xml
ADD hdfs-site.xml /opt/hbase/conf/hdfs-site.xml
ADD start-kubernetes-hbase.sh /opt/hbase/bin/start-kubernetes-hbase.sh
RUN chmod +x /opt/hbase/bin/start-kubernetes-hbase.sh

WORKDIR ${HBASE_INSTALL_DIR}

# JMX settings; \" and \$ are escaped so they are written literally into hbase-env.sh
RUN echo "export HBASE_JMX_BASE=\"-Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.authenticate=false\"" >> conf/hbase-env.sh && \
    echo "export HBASE_MASTER_OPTS=\"\$HBASE_MASTER_OPTS \$HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10101\"" >> conf/hbase-env.sh && \
    echo "export HBASE_REGIONSERVER_OPTS=\"\$HBASE_REGIONSERVER_OPTS \$HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10102\"" >> conf/hbase-env.sh && \
    echo "export HBASE_THRIFT_OPTS=\"\$HBASE_THRIFT_OPTS \$HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10103\"" >> conf/hbase-env.sh && \
    echo "export HBASE_ZOOKEEPER_OPTS=\"\$HBASE_ZOOKEEPER_OPTS \$HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10104\"" >> conf/hbase-env.sh && \
    echo "export HBASE_REST_OPTS=\"\$HBASE_REST_OPTS \$HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10105\"" >> conf/hbase-env.sh

ENV PATH=$PATH:/opt/hbase/bin

CMD /opt/hbase/bin/start-kubernetes-hbase.sh

Put all of these files in the same directory, then build the image with:

docker build -t leo/hbase:1.2.6.1 .

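Once the build succeeds, run `docker images` to see the new image.

Note: there is a pitfall with the file format of `start-kubernetes-hbase.sh`. If the file was created on a Windows machine, its format defaults to dos (CRLF line endings). Unless it is converted to unix format, the script cannot run on Linux and fails with `/bin/bash^M: bad interpreter: No such file or directory`. The fix is simple: open the file in vim, press ESC, type `:set ff=unix`, press Enter, then save and quit with `:wq`.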
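
The same conversion can be done without opening an editor. The sketch below strips carriage returns with `tr` from a sample file created only for the demonstration; for the real script, the input would be `start-kubernetes-hbase.sh`.

```shell
#!/bin/bash
# Sketch: convert Windows (CRLF) line endings to Unix (LF) without vim.
# The sample file exists only for this demonstration.
script="$(mktemp)"
printf '#!/bin/bash\r\necho hello\r\n' > "$script"

# tr deletes every carriage return; write to a new file, then move it into place.
tr -d '\r' < "$script" > "$script.unix" && mv "$script.unix" "$script"

# Count the lines that still contain a carriage return.
cr="$(printf '\r')"
remaining="$(grep -c "$cr" "$script" || true)"
echo "lines with CR: $remaining"
```

Running this check before `docker build` catches the problem earlier than the `bad interpreter` error at container start.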

Writing the YAML file

hbase.yaml

apiVersion: v1
kind: Service
metadata:
  name: hbase-master
spec:
  clusterIP: None
  selector:
    app: hbase-master
  ports:
    - name: rpc
      port: 16000
    - name: http
      port: 16010

---

apiVersion: v1
kind: Pod
metadata:
  name: hbase-master
  labels:
    app: hbase-master
spec:
  containers:
    - env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: HBASE_SERVER_TYPE
          value: master
        - name: HDFS_PATH
          value: hadoop-hdfs-master:9000
        - name: ZOOKEEPER_SERVICE_LIST
          value: zk-cs
        - name: ZOOKEEPER_PORT
          value: "2181"
      image: registry.docker.uih/library/leo-hbase:1.2.6.1
      imagePullPolicy: IfNotPresent
      name: hbase-master
      ports:
        - containerPort: 16000
          protocol: TCP
        - containerPort: 16010
          protocol: TCP

---

apiVersion: v1
kind: Service
metadata:
  name: hbase-region-1
spec:
  clusterIP: None
  selector:
    app: hbase-region-1
  ports:
    - name: rpc
      port: 16020
    - name: http
      port: 16030

---

apiVersion: v1
kind: Service
metadata:
  name: hbase-region-2
spec:
  clusterIP: None
  selector:
    app: hbase-region-2
  ports:
    - name: rpc
      port: 16020
    - name: http
      port: 16030

---

apiVersion: v1
kind: Service
metadata:
  name: hbase-region-3
spec:
  clusterIP: None
  selector:
    app: hbase-region-3
  ports:
    - name: rpc
      port: 16020
    - name: http
      port: 16030

---

apiVersion: v1
kind: Pod
metadata:
  labels:
    app: hbase-region-1
  name: hbase-region-1
spec:
  containers:
    - env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: HBASE_SERVER_TYPE
          value: regionserver
        - name: HDFS_PATH
          value: hadoop-hdfs-master:9000
        - name: ZOOKEEPER_SERVICE_LIST
          value: zk-cs
        - name: ZOOKEEPER_PORT
          value: "2181"
      image: registry.docker.uih/library/leo-hbase:1.2.6.1
      imagePullPolicy: IfNotPresent
      name: hbase-region-1
      ports:
        - containerPort: 16020
          protocol: TCP
        - containerPort: 16030
          protocol: TCP

---

apiVersion: v1
kind: Pod
metadata:
  labels:
    app: hbase-region-2
  name: hbase-region-2
spec:
  containers:
    - env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: HBASE_SERVER_TYPE
          value: regionserver
        - name: HDFS_PATH
          value: hadoop-hdfs-master:9000
        - name: ZOOKEEPER_SERVICE_LIST
          value: zk-cs
        - name: ZOOKEEPER_PORT
          value: "2181"
      image: registry.docker.uih/library/leo-hbase:1.2.6.1
      imagePullPolicy: IfNotPresent
      name: hbase-region-2
      ports:
        - containerPort: 16020
          protocol: TCP
        - containerPort: 16030
          protocol: TCP

---

apiVersion: v1
kind: Pod
metadata:
  labels:
    app: hbase-region-3
  name: hbase-region-3
spec:
  containers:
    - env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: HBASE_SERVER_TYPE
          value: regionserver
        - name: HDFS_PATH
          value: hadoop-hdfs-master:9000
        - name: ZOOKEEPER_SERVICE_LIST
          value: zk-cs
        - name: ZOOKEEPER_PORT
          value: "2181"
      image: registry.docker.uih/library/leo-hbase:1.2.6.1
      imagePullPolicy: IfNotPresent
      name: hbase-region-3
      ports:
        - containerPort: 16020
          protocol: TCP
        - containerPort: 16030
          protocol: TCP
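
The three region server Service and Pod pairs in hbase.yaml differ only in their index. As a sketch of an alternative to writing them by hand, the same manifests could be generated in a loop. `region_manifest` is a hypothetical helper, not something the setup in this post used; the names, ports, image and env values are copied from hbase.yaml.

```shell
#!/bin/bash
# Sketch: print the headless Service and the Pod for region server number $1.
# region_manifest is a hypothetical helper; all values come from hbase.yaml.
region_manifest() {
  n="$1"
  cat <<EOF
---
apiVersion: v1
kind: Service
metadata:
  name: hbase-region-$n
spec:
  clusterIP: None
  selector:
    app: hbase-region-$n
  ports:
    - name: rpc
      port: 16020
    - name: http
      port: 16030
---
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: hbase-region-$n
  name: hbase-region-$n
spec:
  containers:
    - env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: HBASE_SERVER_TYPE
          value: regionserver
        - name: HDFS_PATH
          value: hadoop-hdfs-master:9000
        - name: ZOOKEEPER_SERVICE_LIST
          value: zk-cs
        - name: ZOOKEEPER_PORT
          value: "2181"
      image: registry.docker.uih/library/leo-hbase:1.2.6.1
      imagePullPolicy: IfNotPresent
      name: hbase-region-$n
      ports:
        - containerPort: 16020
          protocol: TCP
        - containerPort: 16030
          protocol: TCP
EOF
}

# Render the manifests for region servers 1 to 3.
manifests="$(for i in 1 2 3; do region_manifest "$i"; done)"
printf '%s\n' "$manifests"
```

The printed output can be redirected to a file or piped to `kubectl create -f -`, which reads manifests from standard input.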

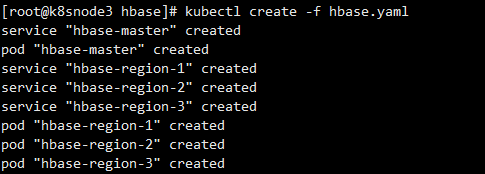

Creating the Services and Pods

kubectl create -f hbase.yaml

create pods

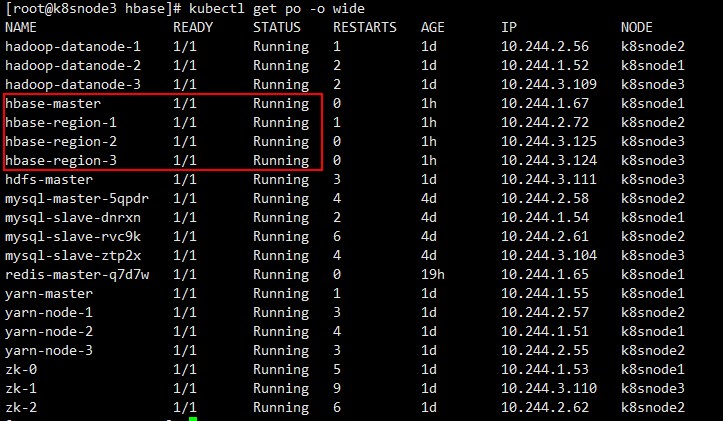

Then check the Pods and the Services:

kubectl get po -o wide

pods

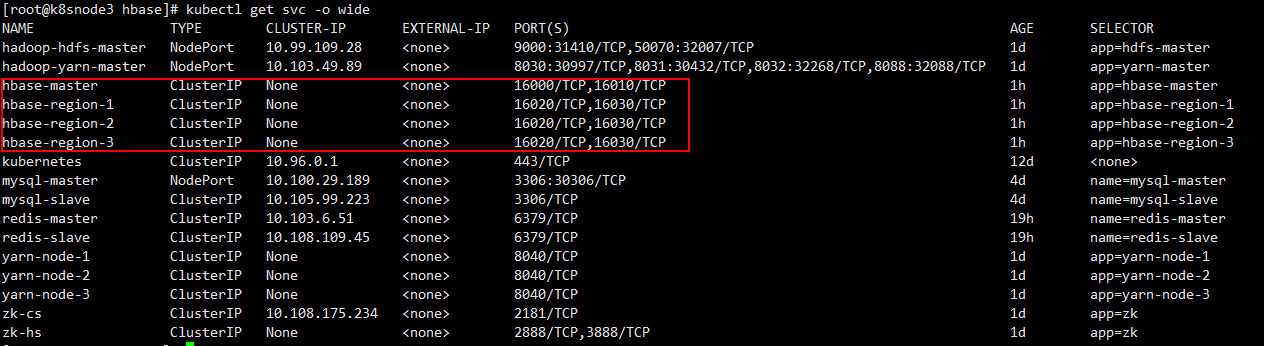

kubectl get svc -o wide

service
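
Instead of reading the Pod table by eye, the STATUS column can be filtered. This is a minimal sketch: `not_running` is a hypothetical helper, and it assumes the default column order of `kubectl get po --no-headers` (NAME, READY, STATUS, ...).

```shell
#!/bin/bash
# Sketch: print the names of Pods whose STATUS column is not "Running".
# not_running is a hypothetical helper; it reads lines in the format of
# `kubectl get po --no-headers` on stdin and assumes STATUS is column 3.
not_running() {
  awk '$3 != "Running" { print $1 }'
}
```

Usage would be `kubectl get po --no-headers | not_running`; empty output means every listed Pod reports Running.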

The cluster is up and running!