Foreword

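I wanted to write a simple player that receives a video stream over RTP and plays it in real time. At first I used ffplay or VLC with an SDP file to receive and play the stream. That works, but if the stream is interrupted and then restarted, the packets can no longer be depacketized correctly, and the following error often appears.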

[sdp @ 0x7ffb35034e00] RTP: dropping old packet received too late

Last message repeated 15 times

Playing the UDP video stream with ffplay:

    ➜ view-show ffplay -protocol_whitelist "file,http,https,rtp,udp,tcp,tls" test.sdp
    ➜ view-show cat test.sdp
    m=video 6665 RTP/AVP 96
    a=rtpmap:96 H264/90000
    c=IN IP4 0.0.0.0

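Reading the FFmpeg source shows where the error comes from. In rtpdec.c, when ffplay receives an RTP stream and the sequence number of the previously emitted packet is greater than that of the incoming packet, the incoming RTP packet is dropped.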

    static int rtp_parse_one_packet(RTPDemuxContext *s, AVPacket *pkt, uint8_t **bufptr, int len)
    {
        ...
        if ((s->seq == 0 && !s->queue) || s->queue_size <= 1) {
            /* First packet, or no reordering */
            return rtp_parse_packet_internal(s, pkt, buf, len);
        } else {
            uint16_t seq = AV_RB16(buf + 2);
            int16_t diff = seq - s->seq;
            if (diff < 0) {
                /* Note this branch: Packet older than the previously emitted one, drop */
                av_log(s->ic, AV_LOG_WARNING,
                       "RTP: dropping old packet received too late\n");
                return -1;
            } else if (diff <= 1) {
                /* Correct packet */
                rv = rtp_parse_packet_internal(s, pkt, buf, len);
                return rv;
            } else {
                /* Still missing some packet, enqueue this one. */
                rv = enqueue_packet(s, buf, len);
                if (rv < 0)
                    return rv;
                *bufptr = NULL;
                /* Return the first enqueued packet if the queue is full,
                 * even if we're missing something */
                if (s->queue_len >= s->queue_size) {
                    av_log(s->ic, AV_LOG_WARNING, "jitter buffer full\n");
                    return rtp_parse_queued_packet(s, pkt);
                }
                return -1;
            }
        }
    }
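To see which packets end up in the `diff < 0` branch, the signed 16-bit difference can be tried in isolation. The following is a minimal sketch, not FFmpeg code: the helper names `rtp_seq_diff` and `rtp_would_drop` are made up for illustration, and the usual two's-complement conversion to `int16_t` is assumed.

```c
#include <stdint.h>

/* Hypothetical helper mirroring `int16_t diff = seq - s->seq;` from the
 * excerpt above: the difference is reduced modulo 65536 and then read as a
 * signed 16-bit value (assumes two's-complement conversion). */
static int16_t rtp_seq_diff(uint16_t incoming, uint16_t last_emitted)
{
    return (int16_t)(uint16_t)(incoming - last_emitted);
}

/* Returns 1 if the check above would drop the packet as "too late". */
static int rtp_would_drop(uint16_t incoming, uint16_t last_emitted)
{
    return rtp_seq_diff(incoming, last_emitted) < 0;
}
```

With this arithmetic, stepping from 65535 to 0 gives a difference of 1 and the packet is accepted. An incoming sequence number far below the last emitted one, for example 0 after 30000, gives a negative difference and the packet is dropped.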

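In real use, however, a large video is split according to the MTU (1500 bytes on Ethernet) into many RTP packets of about 1400 bytes each, and the number of packets produced across many frames far exceeds the 65536 values available to the 16-bit RTP sequence number.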
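As a rough check of that arithmetic, here is a small sketch. The helper name `rtp_packet_count` is hypothetical, 1400 bytes is the payload size quoted above, and header overhead is ignored.

```c
#include <stddef.h>

/* Number of RTP packets needed to carry total_bytes of payload when each
 * packet carries at most max_payload bytes (rounded up).
 * Returns 0 if max_payload is 0. */
static size_t rtp_packet_count(size_t total_bytes, size_t max_payload)
{
    if (max_payload == 0)
        return 0;
    return (total_bytes + max_payload - 1) / max_payload;
}
```

For example, `rtp_packet_count(100u * 1024u * 1024u, 1400)` evaluates to 74899, so 100 MiB of encoded video already needs more packets than there are distinct 16-bit sequence numbers.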

To work around this problem, I decided to write a simple video player of my own that, like ffplay, receives UDP packets and plays the video.

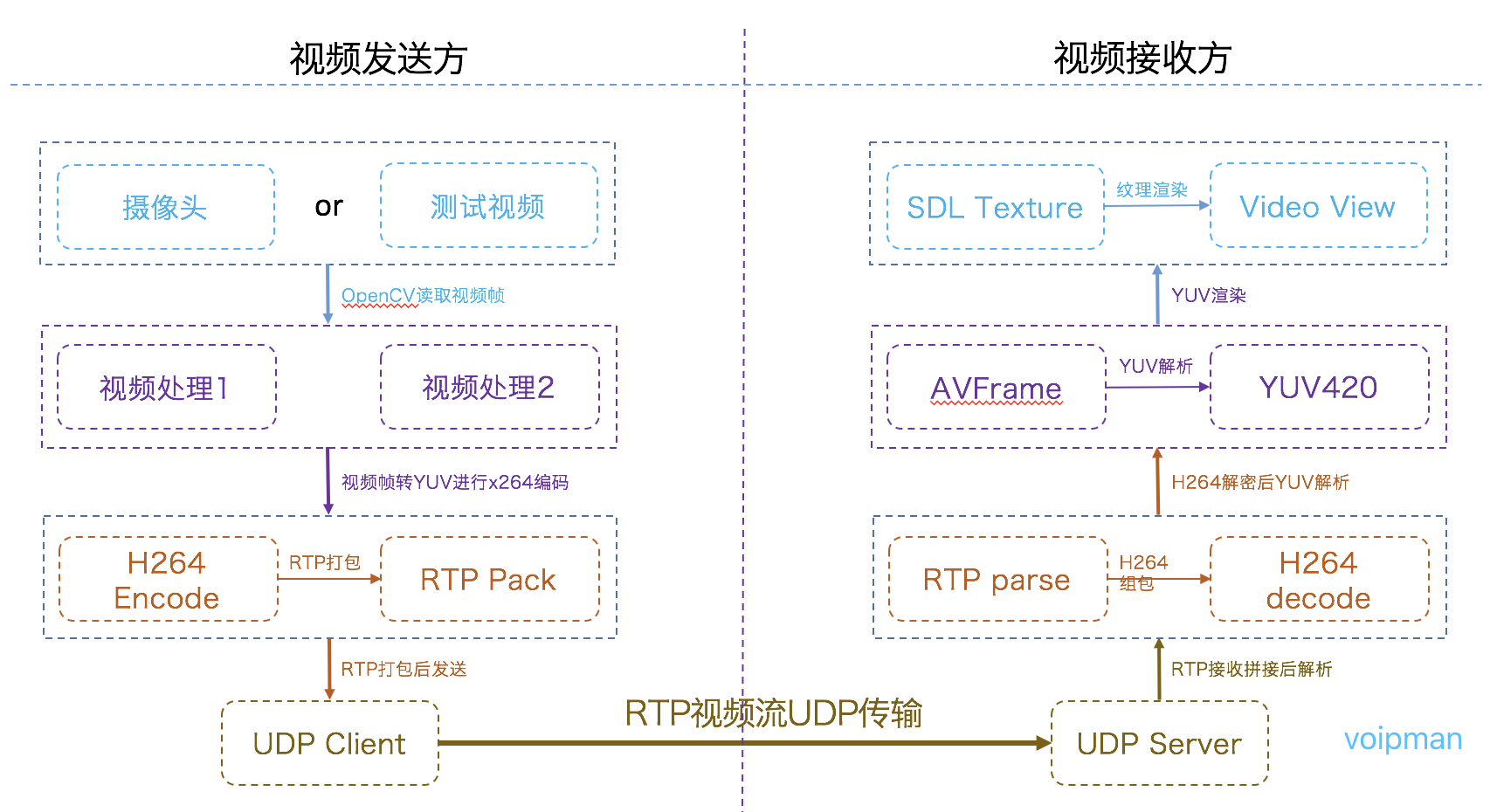

Overall video transmission flow

Libraries used

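| Library | Purpose |
| --- | --- |
| opencv | Reading from a camera or video file, image processing |
| x264 | H.264 video encoding |
| ffmpeg | H.264 video decoding |
| SDL2 | Video playback |
| RTP | Packetizing the video stream as RTP |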

Detailed analysis

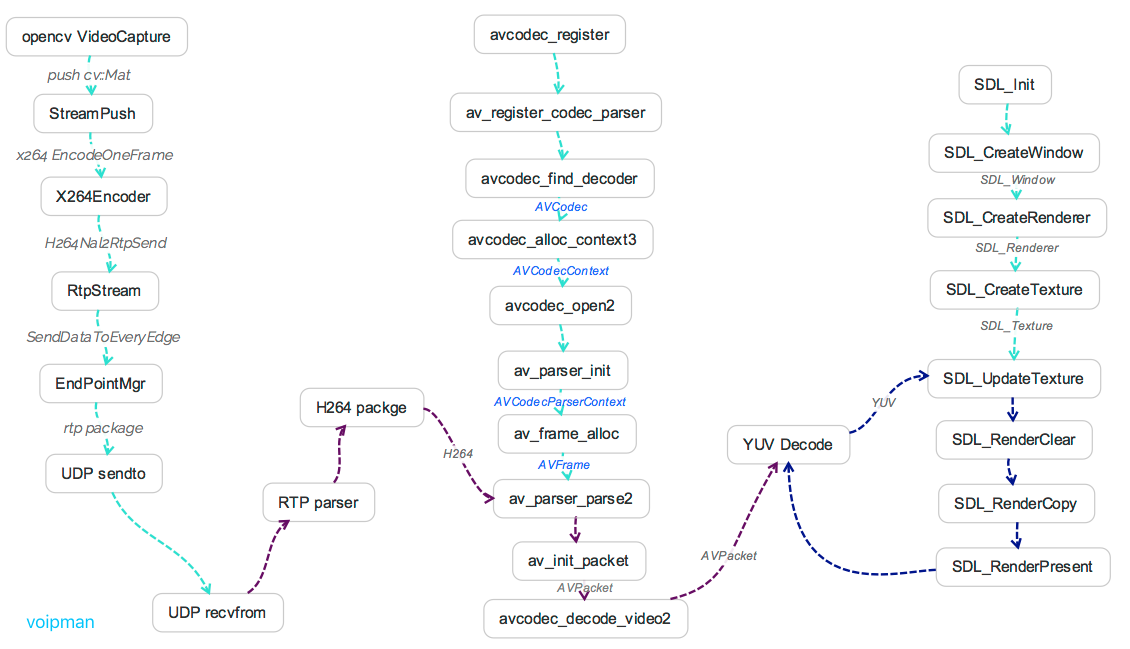

OpenCV-x264-RTP video encoding flow

Reading a video file with OpenCV:

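    void handleVideo(const char* pFileName) {
        cv::Mat frame;
        cv::VideoCapture capture(pFileName);
        if (!capture.isOpened()) {
            return;  // the file could not be opened
        }
        while (true) {
            capture >> frame;  // read the next frame
            if (frame.empty()) {
                break;  // end of the video
            }
            STREAM_PUSH_INS->push(&frame);  // hand the frame to the stream pusher
        }
    }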

Encoding a video frame with x264:

    bool X264Encoder::EncodeOneBuf(cv::Mat *yuvMat, Str *resStr) {
        TimeMeasurer tm;
        memset(yuv_buffer_, 0, m_width * m_height * 3);

        // Copy the I420 planes (Y, then U, then V) into the x264 input picture.
        uint8_t *yuv_buffer = (uint8_t *)yuvMat->data;
        memcpy(picture_in_.img.plane[0], yuv_buffer, m_width * m_height);
        yuv_buffer += m_width * m_height;
        memcpy(picture_in_.img.plane[1], yuv_buffer, m_width * m_height / 4);
        yuv_buffer += m_width * m_height / 4;
        memcpy(picture_in_.img.plane[2], yuv_buffer, m_width * m_height / 4);

        // Every frame is forced to be an IDR frame.
        picture_in_.i_type = X264_TYPE_IDR;
        // static, so the pts increases across calls (a plain local would always be 0)
        static int64_t i_pts = 0;
        picture_in_.i_pts = i_pts++;

        x264_nal_t *nals;
        int nnal;
        int h264size = 0;
        x264_picture_t pic_out;
        x264_picture_init(&pic_out);
        if (x264_encoder_encode(x264_encoder_, &nals, &nnal, &picture_in_, &pic_out) < 0) {
            return false;  // encoding failed
        }

        // Concatenate all NAL units of this frame into the output buffer.
        for (x264_nal_t *nal = nals; nal < nals + nnal; nal++) {
            memcpy((char *)resStr->data + h264size, nal->p_payload, nal->i_payload);
            h264size += nal->i_payload;
        }
        resStr->size = h264size;
        LOG_INFO("x264.encode.cost: %lu", tm.Elapsed());
        return true;
    }

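YUV format

The encoder input is IYUV, also known as YUV420p or I420. Its data layout is as follows.

YUV420p has three planes: Y, U, and V.

The U plane immediately follows the Y plane, and the V plane follows the U plane (that is, the order is Y, U, V).

For a 4x2 image, for example, the byte layout is: YYYY YYYY UU VV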
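The plane sizes and offsets follow directly from this layout. The sketch below computes them for a contiguous I420 buffer. The type and function names are hypothetical, and even width and height are assumed.

```c
#include <stddef.h>

/* Byte layout of one I420 (YUV420p) frame stored contiguously as Y, U, V.
 * Assumes even width and height. */
typedef struct i420_layout {
    size_t y_size;    /* bytes in the Y plane: width * height */
    size_t uv_size;   /* bytes in each of the U and V planes */
    size_t u_offset;  /* start of the U plane */
    size_t v_offset;  /* start of the V plane */
    size_t total;     /* whole frame: width * height * 3 / 2 */
} i420_layout_t;

static i420_layout_t i420_layout(size_t width, size_t height)
{
    i420_layout_t l;
    l.y_size = width * height;
    l.uv_size = (width / 2) * (height / 2);
    l.u_offset = l.y_size;
    l.v_offset = l.y_size + l.uv_size;
    l.total = l.y_size + 2 * l.uv_size;
    return l;
}
```

For a 1280x720 frame this gives a Y plane of 921600 bytes, U and V planes of 230400 bytes each, and 1382400 bytes in total, which matches the `m_width * m_height` and `m_width * m_height / 4` copies in the encoder code.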

RTP header definition

    typedef struct rtp_header {
        /* bit-field order for a little-endian host */
        /* byte 0 */
        uint8_t csrc_len: 4;      /* bit: 0~3 */
        uint8_t extension: 1;     /* bit: 4 */
        uint8_t padding: 1;       /* bit: 5 */
        uint8_t version: 2;       /* bit: 6~7 */
        /* byte 1 */
        uint8_t payload_type: 7;  /* bit: 0~6 */
        uint8_t marker: 1;        /* bit: 7 */
        /* bytes 2, 3 (network byte order on the wire) */
        uint16_t seq_no;
        /* bytes 4-7 (network byte order on the wire) */
        uint32_t timestamp;
        /* bytes 8-11 (network byte order on the wire) */
        uint32_t ssrc;
    } __attribute__ ((packed)) rtp_header_t; /* 12 bytes */
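Bit-field layout and byte order depend on the compiler and the host, so another way to produce the same 12 bytes is to write them with explicit shifts. This is only a sketch under stated assumptions: the function name `rtp_write_header` is hypothetical, and it always writes version 2 with no padding, no extension, and no CSRC entries.

```c
#include <stdint.h>

/* Writes a 12-byte RTP fixed header (version 2, no padding, no extension,
 * no CSRC entries) into out. Explicit shifts keep the result independent
 * of host byte order and bit-field layout. */
static void rtp_write_header(uint8_t out[12], uint8_t payload_type, int marker,
                             uint16_t seq_no, uint32_t timestamp, uint32_t ssrc)
{
    out[0] = (uint8_t)(2u << 6);                     /* V=2, P=0, X=0, CC=0 */
    out[1] = (uint8_t)((marker ? 0x80u : 0u) | (payload_type & 0x7fu));
    out[2] = (uint8_t)(seq_no >> 8);                 /* sequence number, big-endian */
    out[3] = (uint8_t)(seq_no & 0xffu);
    out[4] = (uint8_t)(timestamp >> 24);             /* timestamp, big-endian */
    out[5] = (uint8_t)(timestamp >> 16);
    out[6] = (uint8_t)(timestamp >> 8);
    out[7] = (uint8_t)(timestamp);
    out[8] = (uint8_t)(ssrc >> 24);                  /* SSRC, big-endian */
    out[9] = (uint8_t)(ssrc >> 16);
    out[10] = (uint8_t)(ssrc >> 8);
    out[11] = (uint8_t)(ssrc);
}
```

With payload type 96 (the dynamic type used in test.sdp) and the marker bit set, the first two bytes come out as 0x80 and 0xE0.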

H.264 video decoding with FFmpeg

Decoder initialization:

    AVCodec *gCodec = NULL;
    AVCodecContext *gCodec_ctx = NULL;
    AVCodecParserContext *gParser = NULL;
    AVFrame *gAVFrame = NULL;

    void doAVCodecInit() {
        /* Explicit registration, as used with older FFmpeg releases. */
        avcodec_register(&ff_h264_decoder);
        av_register_codec_parser(&ff_h264_parser);

        gCodec = avcodec_find_decoder(AV_CODEC_ID_H264);
        if (!gCodec) {
            fprintf(stderr, "Codec not found\n");
            exit(1);
        }
        gCodec_ctx = avcodec_alloc_context3(gCodec);
        if (!gCodec_ctx) {
            fprintf(stderr, "Could not allocate video codec context\n");
            exit(1);
        }
        if (avcodec_open2(gCodec_ctx, gCodec, NULL) < 0) {
            fprintf(stderr, "Could not open codec\n");
            exit(1);
        }
        gParser = av_parser_init(AV_CODEC_ID_H264);
        if (!gParser) {
            fprintf(stderr, "Could not create H264 parser\n");
            exit(1);
        }
        gAVFrame = av_frame_alloc();
        if (!gAVFrame) {
            fprintf(stderr, "Could not allocate video frame\n");
            exit(1);
        }
        initPacket();
    }

Decode loop:

    static int doDecodeFrame(AVPacket *pkt, unsigned int frame_index) {
        int got_frame = 0;
        do {
            int len = avcodec_decode_video2(gCodec_ctx, gAVFrame, &got_frame, pkt);
            if (len < 0) {
                fprintf(stderr, "Error while decoding frame %u\n", frame_index);
                return len;
            }
            if (got_frame) {
                //printf("Got frame %u\n", frame_index);
                //fflush(stdout);
                yuv_show(gAVFrame->data, gAVFrame->linesize, gAVFrame->width, gAVFrame->height);
            }
        } while (0);
        return 0;
    }

    int doPackDecode(struct ImagePacket *packetPtr) {
        uint8_t *data = NULL;
        int size = 0;
        int bytes_used = av_parser_parse2(gParser, gCodec_ctx, &data, &size, packetPtr->buf_, packetPtr->len_, 0, 0,
                                          AV_NOPTS_VALUE);
        if (size == 0) {
            // The parser has not assembled a complete frame yet.
            return -1;
        }
        // We have data of one packet, decode it; or decode whatever when ending
        AVPacket packet;
        av_init_packet(&packet);
        packet.data = data;
        packet.size = size;
        int ret = doDecodeFrame(&packet, packetPtr->frame_index_);
        if (ret < 0) {
            return -1;
        }
        return 0;
    }
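The parser's role in `doPackDecode` is to find frame boundaries in the received bytes before they are handed to the decoder. As a rough illustration only (this is not how FFmpeg's parser is implemented, and the helper name `find_start_code` is hypothetical), the sketch below assumes the stream is in Annex B format, where NAL units are separated by the start code 00 00 01, often preceded by one more 00.

```c
#include <stddef.h>
#include <stdint.h>

/* Returns the index of the first 00 00 01 start code at or after `from`,
 * or -1 if none is found. A four-byte 00 00 00 01 start code is reported
 * at the position of its last three bytes. */
static long find_start_code(const uint8_t *buf, size_t len, size_t from)
{
    for (size_t i = from; i + 3 <= len; i++) {
        if (buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 1)
            return (long)i;
    }
    return -1;
}
```

Calling it repeatedly, each time starting just past the previous match, walks through the NAL units in a buffer. A real parser also has to handle start codes that straddle two received buffers, which this sketch does not attempt.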

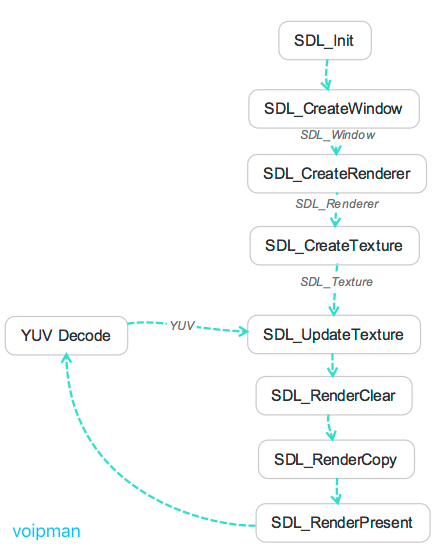

SDL video rendering flow

SDL initialization:

    #define LOAD_YUV420P 0
    #define HAS_BORDER 1

    const int bpp = 12;
    const int screen_w = 1434, screen_h = 806;
    const int pixel_w = 1434, pixel_h = 806;
    //const int screen_w=1920,screen_h=1080;
    //const int pixel_w=1920,pixel_h=1080;

    SDL_Window *gScreen = NULL;
    SDL_Renderer *gSdlRenderer = NULL;
    SDL_Texture *gSdlTexture = NULL;
    SDL_Rect sdlRect;

    //Refresh Event
    #define REFRESH_EVENT (SDL_USEREVENT + 1)

    int thread_exit = 0;

    // Posts a refresh event every 10 ms until thread_exit is set.
    int refresh_video(void *opaque) {
        while (thread_exit == 0) {
            SDL_Event event;
            event.type = REFRESH_EVENT;
            SDL_PushEvent(&event);
            SDL_Delay(10);
        }
        return 0;
    }

    int doSDLInit() {
        if (SDL_Init(SDL_INIT_EVERYTHING)) {
            printf("Could not initialize SDL - %s\n", SDL_GetError());
            return -1;
        }
        //SDL 2.0 Support for multiple windows
        gScreen = SDL_CreateWindow("Video-View", SDL_WINDOWPOS_UNDEFINED, SDL_WINDOWPOS_UNDEFINED,
                                   screen_w, screen_h, SDL_WINDOW_OPENGL | SDL_WINDOW_RESIZABLE);
        if (!gScreen) {
            printf("SDL: could not create window - exiting:%s\n", SDL_GetError());
            return -1;
        }
        gSdlRenderer = SDL_CreateRenderer(gScreen, -1, 0);
        if (!gSdlRenderer) {
            printf("SDL: could not create renderer - exiting:%s\n", SDL_GetError());
            return -1;
        }
        Uint32 pixformat = SDL_PIXELFORMAT_IYUV;
        gSdlTexture = SDL_CreateTexture(gSdlRenderer, pixformat, SDL_TEXTUREACCESS_STREAMING, pixel_w, pixel_h);
        if (!gSdlTexture) {
            printf("SDL: could not create texture - exiting:%s\n", SDL_GetError());
            return -1;
        }
        int border = 0;
        sdlRect.x = 0 + border;
        sdlRect.y = 0 + border;
        sdlRect.w = screen_w - border * 2;
        sdlRect.h = screen_h - border * 2;
        SDL_Thread *refresh_thread = SDL_CreateThread(refresh_video, NULL, NULL);
        if (!refresh_thread) {
            printf("SDL: could not create refresh thread - exiting:%s\n", SDL_GetError());
            return -1;
        }
        return 0;
    }

SDL render loop:

    SDL_Event event;
    SDL_WaitEvent(&event);
    if (event.type == REFRESH_EVENT) {
        // Upload the latest I420 frame; the pitch is the width of the Y plane.
        SDL_UpdateTexture(gSdlTexture, NULL, gImagePacket->buf_, pixel_w);
        SDL_RenderClear(gSdlRenderer);
        SDL_RenderCopy(gSdlRenderer, gSdlTexture, NULL, &sdlRect);
        SDL_RenderPresent(gSdlRenderer);
        //Delay 1ms
        SDL_Delay(1);
    } else if (event.type == SDL_QUIT) {
        _exit(0);
    }
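The render loop uploads one contiguous I420 buffer with a pitch of `pixel_w`, while the decoder hands `yuv_show` separate planes with their own `linesize`, which can be larger than the frame width because of row padding. How `yuv_show` bridges the two is not shown in this post. The sketch below is one possible packing step, with hypothetical function names and assuming even width and height.

```c
#include <stdint.h>
#include <string.h>

/* Copies one plane row by row, dropping any padding at the end of each
 * source row. Returns the number of bytes written to dst. */
static size_t copy_plane(uint8_t *dst, const uint8_t *src,
                         int linesize, int width, int height)
{
    for (int row = 0; row < height; row++)
        memcpy(dst + (size_t)row * width, src + (size_t)row * linesize, (size_t)width);
    return (size_t)width * height;
}

/* Packs three strided planes into one contiguous I420 buffer (Y, U, V).
 * dst must hold width * height * 3 / 2 bytes. */
static size_t pack_i420(uint8_t *dst, uint8_t *const data[3], const int linesize[3],
                        int width, int height)
{
    size_t off = 0;
    off += copy_plane(dst + off, data[0], linesize[0], width, height);
    off += copy_plane(dst + off, data[1], linesize[1], width / 2, height / 2);
    off += copy_plane(dst + off, data[2], linesize[2], width / 2, height / 2);
    return off;
}
```

The packed buffer can then be passed to `SDL_UpdateTexture` with the frame width as the pitch, as in the render loop above.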

Reference: https://blog.csdn.net/leixiaohua1020/article/details/40525591/

The overall sending and receiving flow is shown in the figure below.

For the source code, see my GitHub projects.

Starting the receiver:

./viewer 6665

Starting the sender:

/bin/video-pusher 127.0.0.1 6665 ./test-video/d001.mp4

The test video is a 1280x720 file; any 1280x720 clip can be used to verify the setup.

Video reading, encoding, and sending:

https://github.com/gityf/img-video/tree/master/video/opencv-x264-rtp-pusher

Video receiving, decoding, and playback:

https://github.com/gityf/img-video/tree/master/video/ffmpeg-h264-sdl-view

That's all. Have fun!