Reposted from: https://www.cnblogs.com/wdliu/p/9152347.html

| I. Deploying the master node components |

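This continues the previous article, "Manually deploying a Kubernetes cluster with TLS certificates (Part 1)". We have already deployed the etcd cluster, the flannel network, and Docker on every node. Next we deploy the master node.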

1. Download the packages:

Download page: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.9.md

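2. Extract the package and create directories

# Extract the downloaded package

tar zxvf kubernetes-server-linux-amd64.tar.gz

# Create the directories (ssl already exists from Part 1; mkdir -p leaves it untouched)

mkdir -p /opt/kubernetes/{bin,conf,ssl}

# Copy the binaries (they live in kubernetes/server/bin inside the extracted package)

cd kubernetes/server/bin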

cp kube-controller-manager /opt/kubernetes/bin/

cp kube-apiserver /opt/kubernetes/bin/

cp kube-scheduler /opt/kubernetes/bin/

cp kubectl /opt/kubernetes/bin/

# Make them executable

chmod a+x /opt/kubernetes/bin/*
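The four cp commands and the chmod can also be folded into one loop that stops as soon as a binary is missing, which catches running the copy from the wrong directory. This is a minimal sketch: `install_bins` is a hypothetical helper name, and it assumes the package was extracted into ./kubernetes so the binaries sit in kubernetes/server/bin.

```shell
# Hypothetical helper: copy the named binaries from src_dir to dest_dir and
# make them executable, returning non-zero on the first missing binary.
install_bins() {
    local src_dir="$1" dest_dir="$2" bin
    shift 2
    mkdir -p "$dest_dir" || return 1
    for bin in "$@"; do
        if [ ! -f "$src_dir/$bin" ]; then
            echo "missing binary: $src_dir/$bin" >&2
            return 1
        fi
        cp "$src_dir/$bin" "$dest_dir/" || return 1
        chmod a+x "$dest_dir/$bin" || return 1
    done
}
```

For example: `install_bins kubernetes/server/bin /opt/kubernetes/bin kube-apiserver kube-controller-manager kube-scheduler kubectl`.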

3. Create the TLS Bootstrapping token (the kubelets use it to request their certificates)

# Change to the configuration directory

cd /opt/kubernetes/conf/

# Generate the token

export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

# Save it to a file

cat > token.csv <<EOF
${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF

# View the token

cat token.csv
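The token file has one line per token in the form `token,user,uid,"group"`, and the apiserver reads it through `--token-auth-file`. The sketch below generates a 32-character hex token (a variant of the command above that uses `od -t x1`, one byte per hex pair) and checks the line before the apiserver starts. The function names `gen_token`, `write_token_csv` and `check_token_csv` are hypothetical.

```shell
# Print 32 lowercase hex characters built from 16 random bytes:
# od -t x1 prints each byte as two zero-padded hex digits,
# and tr strips the spaces and the trailing newline.
gen_token() {
    head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

# Write the single token.csv line used in this article.
write_token_csv() {
    local file="$1" token="$2"
    printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token" > "$file"
}

# Succeed only if the file holds a well-formed line for kubelet-bootstrap.
check_token_csv() {
    grep -Eq '^[0-9a-f]{32},kubelet-bootstrap,10001,"system:kubelet-bootstrap"$' "$1"
}
```

Because `BOOTSTRAP_TOKEN` only exists in the current shell, and the node kubeconfig step later depends on it, it can be recovered from the file if the shell was closed: `export BOOTSTRAP_TOKEN=$(cut -d, -f1 /opt/kubernetes/conf/token.csv)`.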

4. Configure the master components

kube-apiserver

# Configuration file

cat > /opt/kubernetes/conf/kube-apiserver <<EOF
KUBE_APISERVER_OPTS="--logtostderr=true \
--v=4 \
--etcd-servers=https://10.1.210.32:2379,https://10.1.210.33:2379,https://10.1.210.34:2379 \
--insecure-bind-address=127.0.0.1 \
--bind-address=10.1.210.33 \
--insecure-port=8080 \
--secure-port=6443 \
--advertise-address=10.1.210.33 \
--allow-privileged=true \
--service-cluster-ip-range=10.10.10.0/24 \
--admission-control=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction \
--authorization-mode=RBAC,Node \
--kubelet-https=true \
--enable-bootstrap-token-auth \
--token-auth-file=/opt/kubernetes/conf/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/opt/kubernetes/ssl/server.pem \
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \
--client-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
--etcd-cafile=/opt/kubernetes/ssl/ca.pem \
--etcd-certfile=/opt/kubernetes/ssl/server.pem \
--etcd-keyfile=/opt/kubernetes/ssl/server-key.pem"
EOF

# systemd unit file ('EOF' is quoted so $KUBE_APISERVER_OPTS is written literally for systemd to expand)

cat > /usr/lib/systemd/system/kube-apiserver.service <<'EOF'
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/conf/kube-apiserver
ExecStart=/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

kube-scheduler

# Configuration file

cat > /opt/kubernetes/conf/kube-scheduler <<EOF
KUBE_SCHEDULER_OPTS="--logtostderr=true \
--v=4 \
--master=127.0.0.1:8080 \
--leader-elect"
EOF

# systemd unit file ('EOF' is quoted so $KUBE_SCHEDULER_OPTS is written literally for systemd to expand)

cat > /usr/lib/systemd/system/kube-scheduler.service <<'EOF'
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/conf/kube-scheduler
ExecStart=/opt/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

kube-controller-manager

# Configuration file

cat > /opt/kubernetes/conf/kube-controller-manager <<EOF
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \
--v=4 \
--master=127.0.0.1:8080 \
--leader-elect=true \
--address=127.0.0.1 \
--service-cluster-ip-range=10.10.10.0/24 \
--cluster-name=kubernetes \
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \
--root-ca-file=/opt/kubernetes/ssl/ca.pem"
EOF

# systemd unit file ('EOF' is quoted so $KUBE_CONTROLLER_MANAGER_OPTS is written literally for systemd to expand)

cat > /usr/lib/systemd/system/kube-controller-manager.service <<'EOF'
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/conf/kube-controller-manager
ExecStart=/opt/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
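The apiserver and the controller-manager must agree on `--service-cluster-ip-range` (10.10.10.0/24 here). A small sketch for reading a flag back out of these EnvironmentFiles and comparing it across two components; `flag_value` and `same_flag` are hypothetical helpers, and they assume one `--flag=value` per whitespace-separated token, as in the files above.

```shell
# Print the value of --FLAG from an options file such as
# /opt/kubernetes/conf/kube-apiserver (last occurrence wins).
# Spaces, double quotes and backslashes are turned into newlines,
# so each --flag=value ends up on its own line.
flag_value() {
    local file="$1" flag="$2"
    tr ' "\\' '\n\n\n' < "$file" | sed -n "s/^--$flag=//p" | tail -n 1
}

# Succeed if FLAG is set and has the same value in both files.
same_flag() {
    local flag="$1" a b
    a=$(flag_value "$2" "$flag")
    b=$(flag_value "$3" "$flag")
    [ -n "$a" ] && [ "$a" = "$b" ]
}
```

For example: `same_flag service-cluster-ip-range /opt/kubernetes/conf/kube-apiserver /opt/kubernetes/conf/kube-controller-manager || echo "service CIDR mismatch"`.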

5. Start all the master components

# Reload systemd so it picks up the new unit files

systemctl daemon-reload

# Start kube-apiserver

systemctl enable kube-apiserver

systemctl restart kube-apiserver

# Start kube-controller-manager

systemctl enable kube-controller-manager

systemctl restart kube-controller-manager

# Start kube-scheduler

systemctl enable kube-scheduler

systemctl restart kube-scheduler
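The same start-up can be written as one function that reloads systemd once and then enables and restarts each unit in the order given, stopping at the first failure. Listing kube-apiserver first is a deliberate choice, since the scheduler and controller-manager connect to it on 127.0.0.1:8080. `start_units` is a hypothetical helper name; this is a sketch, not part of the original procedure.

```shell
# Reload systemd once, then enable and (re)start each unit in order.
start_units() {
    local unit
    systemctl daemon-reload || return 1
    for unit in "$@"; do
        systemctl enable "$unit" || return 1
        systemctl restart "$unit" || return 1
    done
}
```

For example: `start_units kube-apiserver kube-controller-manager kube-scheduler`.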

6. Check the status of each component with kubectl get cs, as shown in the figure:
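To check the `kubectl get cs` result from a script instead of by eye, the output can be piped into a small filter that fails if any component is not Healthy. This sketch assumes the default table layout, with the component name in the first column and STATUS in the second; `all_healthy` is a hypothetical name.

```shell
# Read `kubectl get cs` output on stdin. Print every component whose
# STATUS (column 2) is not "Healthy" and exit non-zero if there is any,
# or if the output contains no component lines at all.
all_healthy() {
    awk 'NR > 1 && $2 != "Healthy" { print "not healthy: " $1; bad = 1 }
         END { if (NR < 2) bad = 1; exit bad }'
}
```

For example: `kubectl get cs | all_healthy && echo "master components OK"`.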

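| II. Deploying the node components |

1. Create the kubeconfig file for the nodes (do this on the master, then distribute it to every node). This step uses the token that was generated into an environment variable earlier, so make sure echo $BOOTSTRAP_TOKEN prints a token value.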

#进入到证书目录

cd /opt/kubernetes/ssl/

# 创建指明api-server地址

export KUBE_APISERVER="https://10.1.210.33:6443"

# Set the cluster parameters

kubectl config set-cluster kubernetes \
  --certificate-authority=./ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=bootstrap.kubeconfig

# Set the client authentication parameters

kubectl config set-credentials kubelet-bootstrap \
  --token=${BOOTSTRAP_TOKEN} \
  --kubeconfig=bootstrap.kubeconfig

# Set the context parameters

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=bootstrap.kubeconfig

# Use the default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig
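The commands above only produce bootstrap.kubeconfig, while the next step also distributes kube-proxy.kubeconfig. Below is a sketch of how that file could be built with the same `kubectl config` subcommands. It ASSUMES a kube-proxy client certificate (kube-proxy.pem / kube-proxy-key.pem, typically issued with CN system:kube-proxy) was generated in Part 1 and sits in /opt/kubernetes/ssl; those file names and the function name `make_proxy_kubeconfig` are assumptions, not taken from this article.

```shell
# Build kube-proxy.kubeconfig in the current directory.
# ASSUMPTION: ./ca.pem, ./kube-proxy.pem and ./kube-proxy-key.pem exist.
make_proxy_kubeconfig() {
    local server="$1" out="${2:-kube-proxy.kubeconfig}"
    kubectl config set-cluster kubernetes \
        --certificate-authority=./ca.pem \
        --embed-certs=true \
        --server="$server" \
        --kubeconfig="$out" || return 1
    kubectl config set-credentials kube-proxy \
        --client-certificate=./kube-proxy.pem \
        --client-key=./kube-proxy-key.pem \
        --embed-certs=true \
        --kubeconfig="$out" || return 1
    kubectl config set-context default \
        --cluster=kubernetes \
        --user=kube-proxy \
        --kubeconfig="$out" || return 1
    kubectl config use-context default --kubeconfig="$out"
}
```

For example, from /opt/kubernetes/ssl: `make_proxy_kubeconfig "$KUBE_APISERVER"`.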

2. Distribute the kubeconfig files (bootstrap.kubeconfig and kube-proxy.kubeconfig)

# Copy the node configuration files to each node

scp *.kubeconfig node1:/opt/kubernetes/conf/

scp *.kubeconfig node2:/opt/kubernetes/conf/
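With more nodes, the copy can be looped, creating the target directory first so scp does not fail on a fresh machine. A sketch assuming passwordless SSH to hosts named as in this article (node1, node2); `distribute_kubeconfigs` is a hypothetical helper name.

```shell
# Copy both kubeconfig files into DEST on every node given as an argument.
distribute_kubeconfigs() {
    local dest="$1" node
    shift
    for node in "$@"; do
        ssh "$node" mkdir -p "$dest" || return 1
        scp bootstrap.kubeconfig kube-proxy.kubeconfig "$node:$dest/" || return 1
    done
}
```

For example: `distribute_kubeconfigs /opt/kubernetes/conf node1 node2`.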

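3. Pick one node and deploy the node components (the server package already contains them: copy kubelet and kube-proxy from kubernetes/server/bin to /opt/kubernetes/bin/ on the node)

For convenience, the configuration files and systemd unit files are generated by the scripts below.

kubelet component

Argument 1: the address kubelet listens on

Argument 2: the cluster DNS address (the IP the cluster DNS will use when it is deployed later)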

sh kubelet.sh 10.1.210.32 10.10.10.3

#!/bin/bash

NODE_ADDRESS=${1:-"10.1.210.32"}
DNS_SERVER_IP=${2:-"10.10.10.3"}

cat <<EOF >/opt/kubernetes/conf/kubelet
KUBELET_OPTS="--logtostderr=true \
--v=4 \
--address=${NODE_ADDRESS} \
--hostname-override=${NODE_ADDRESS} \
--kubeconfig=/opt/kubernetes/conf/kubelet.kubeconfig \
--experimental-bootstrap-kubeconfig=/opt/kubernetes/conf/bootstrap.kubeconfig \
--cert-dir=/opt/kubernetes/ssl \
--allow-privileged=true \
--cluster-dns=${DNS_SERVER_IP} \
--cluster-domain=cluster.local \
--fail-swap-on=false \
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
EOF

# 'EOF' is quoted so $KUBELET_OPTS is written literally for systemd to expand
cat <<'EOF' >/usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=-/opt/kubernetes/conf/kubelet
ExecStart=/opt/kubernetes/bin/kubelet $KUBELET_OPTS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kubelet
systemctl restart kubelet
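The DNS address passed as argument 2 (10.10.10.3) will later be the ClusterIP of the cluster DNS Service, so it has to fall inside the apiserver's `--service-cluster-ip-range` (10.10.10.0/24). A pure-shell sketch of that check; `ip_to_int` and `ip_in_cidr` are hypothetical helpers and handle IPv4 only.

```shell
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
    local IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if IP lies inside CIDR, e.g. ip_in_cidr 10.10.10.3 10.10.10.0/24.
ip_in_cidr() {
    local ip="$1" net="${2%/*}" bits="${2#*/}" mask
    mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}
```

For example, before running kubelet.sh: `ip_in_cidr 10.10.10.3 10.10.10.0/24 || echo "DNS IP outside the service range"`.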

kube-proxy component

Argument 1: the node address (used for --hostname-override)

sh proxy.sh 10.1.210.32

#!/bin/bash

NODE_ADDRESS=${1:-"10.1.210.32"}

cat <<EOF >/opt/kubernetes/conf/kube-proxy
KUBE_PROXY_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=${NODE_ADDRESS} \
--kubeconfig=/opt/kubernetes/conf/kube-proxy.kubeconfig"
EOF

# 'EOF' is quoted so $KUBE_PROXY_OPTS is written literally for systemd to expand
cat <<'EOF' >/usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-/opt/kubernetes/conf/kube-proxy
ExecStart=/opt/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-proxy
systemctl restart kube-proxy

4. Because we use RBAC authorization, the kubelet-bootstrap user must be granted permissions (run the binding on the master):

# Create the binding (kubectl create clusterrolebinding --help shows the options)

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

# Restart kubelet and kube-proxy (on the node)

systemctl restart kubelet

systemctl restart kube-proxy

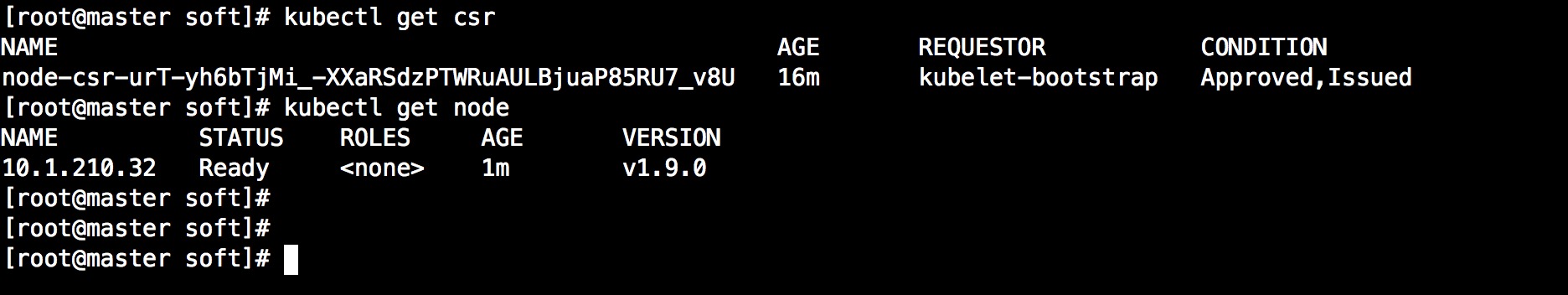

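5. Now run kubectl get csr on the master to see whether the node has requested a cluster certificate, as follows:

6. Approve the node's certificate signing request:

# See kubectl certificate --help for usage; use the CSR name shown by kubectl get csr

kubectl certificate approve node-csr-urT-yh6bTjMi_-XXaRSdzPTWRuAULBjuaP85RU7_v8U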
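When several nodes bootstrap at once, the pending request names can be pulled out of the `kubectl get csr` table instead of copying them by hand. This sketch assumes the default columns, where CONDITION is the last column; `pending_csrs` is a hypothetical name. Approving a request trusts whoever presented the bootstrap token, so it is worth reviewing the list before approving.

```shell
# Read `kubectl get csr` output on stdin and print the NAME of every
# request whose last column (CONDITION) is exactly "Pending".
pending_csrs() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

For example: `for c in $(kubectl get csr | pending_csrs); do kubectl certificate approve "$c"; done`.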

7. Check whether the node has joined with kubectl get nodes. A status of Ready means the node has joined the cluster.
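A scripted version of this check counts the nodes whose STATUS column is exactly Ready, so NotReady nodes are excluded (and so are cordoned nodes, which show Ready,SchedulingDisabled). It assumes the default `kubectl get nodes` table with STATUS in the second column; `count_ready` is a hypothetical name.

```shell
# Read `kubectl get nodes` output on stdin and print how many nodes
# report STATUS (column 2) exactly "Ready".
count_ready() {
    awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}
```

For example, once both nodes are set up: `[ "$(kubectl get nodes | count_ready)" -eq 2 ] && echo "all nodes Ready"`.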

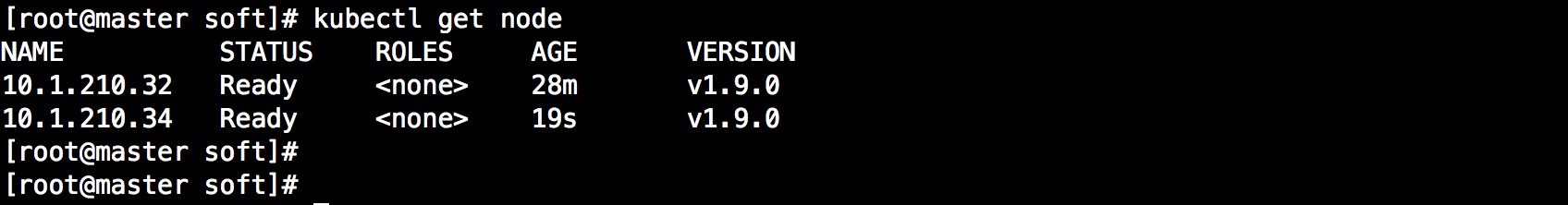

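8. Repeat these steps on the other node. Alternatively, copy the configuration files over, change the node-specific settings, and join that node to the cluster, as shown in the figure:

9. Verify that the cluster works

# Create nginx pods

kubectl run nginx --image=nginx --replicas=2

# List the pods

kubectl get pod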

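| III. Deploying the Dashboard |

The Dashboard is the Kubernetes web UI. It shows basic information about the cluster and is very helpful for understanding the cluster's state.

1. For consistency, we keep all YAML files under /opt/kubernetes/yaml. Before creating the Dashboard, create its service account and role binding.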

kubectl create -f dashboard-rbac.yaml

dashboard-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kube-system

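2. Create the Dashboard Deployment. Note that the image points to the Aliyun mirror; otherwise it would be pulled from Google's registry and the download would fail.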

kubectl create -f dashboard-deployment.yaml

dashboard-deployment.yaml

apiVersion: apps/v1beta2
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      serviceAccountName: kubernetes-dashboard
      containers:
      - name: kubernetes-dashboard
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.7.1
        resources:
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 100m
            memory: 100Mi
        ports:
        - containerPort: 9090
          protocol: TCP
        livenessProbe:
          httpGet:
            scheme: HTTP
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"

3. Create a Service to expose the Dashboard

kubectl create -f dashboard-service.yaml

dashboard-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  type: NodePort
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 80
    targetPort: 9090

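4. Check the status

# List the services

kubectl get svc -n kube-system

# List the pods

kubectl get pods -n kube-system

# List everything

kubectl get all -n kube-system

5. From the port mapping in the output above (80:38158), open the Dashboard through a node IP at http://10.1.210.34:38158/. The NodePort is assigned from --service-node-port-range (30000-50000), so yours will likely differ. The cluster deployment is now complete.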
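The NodePort can be read from the PORT(S) column (for example `80:38158/TCP`) and should fall inside the apiserver's `--service-node-port-range` of 30000-50000; a port outside that range means the value was misread. A small sketch; `node_port` and `in_range` are hypothetical helper names.

```shell
# Extract the NodePort from a PORT(S) value such as "80:38158/TCP".
node_port() {
    local p="${1#*:}"
    echo "${p%/*}"
}

# Succeed if PORT lies within [MIN, MAX].
in_range() {
    [ "$1" -ge "$2" ] && [ "$1" -le "$3" ]
}
```

Alternatively, kubectl can print the port directly: `kubectl get svc kubernetes-dashboard -n kube-system -o jsonpath='{.spec.ports[0].nodePort}'`, after which `in_range "$port" 30000 50000` confirms it.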