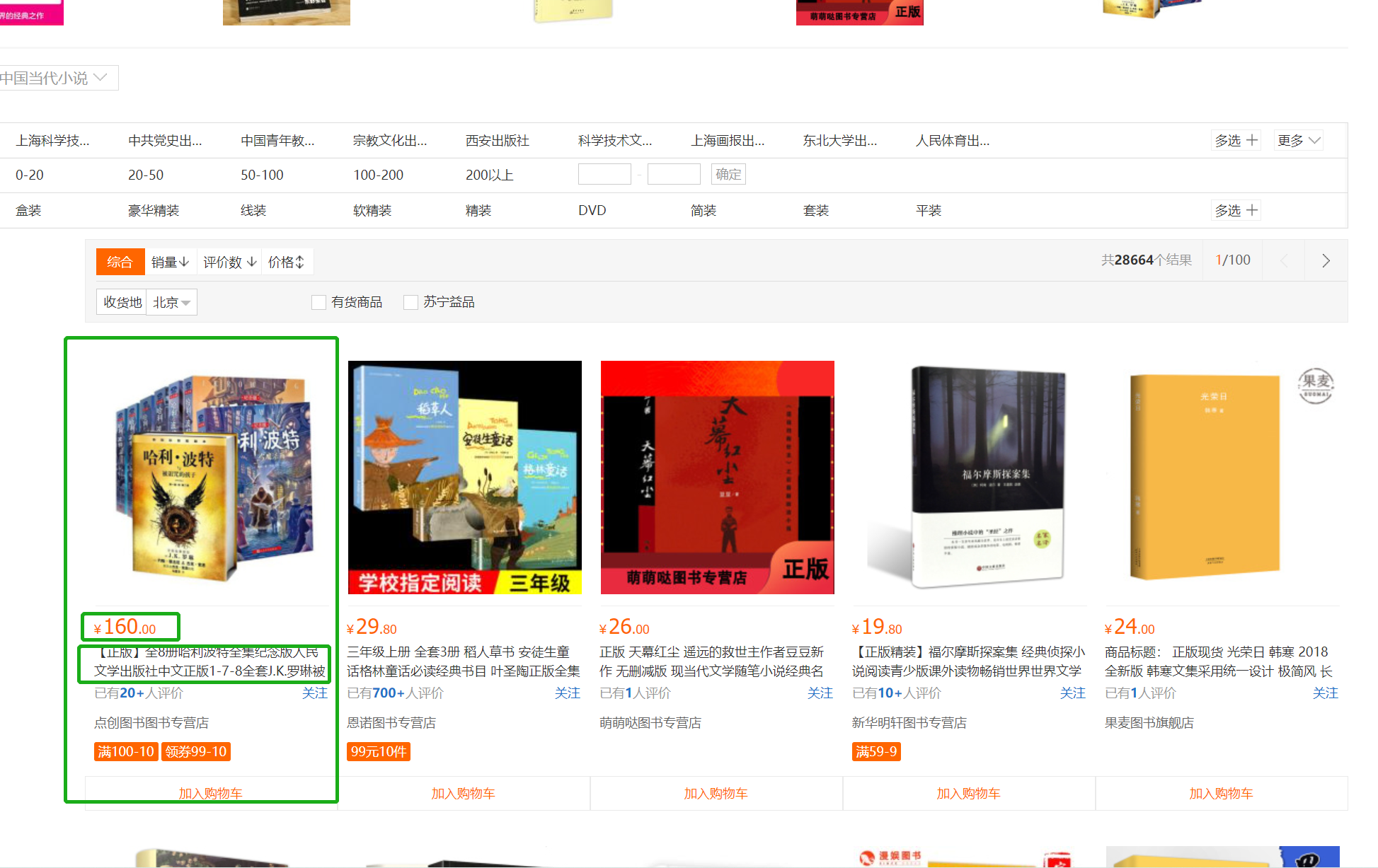

Suning Books (https://book.suning.com/)

Goals:

1. First-level book category

2. Second-level book category

3. Third-level book category

4. Book title

5. Book author

6. Book price

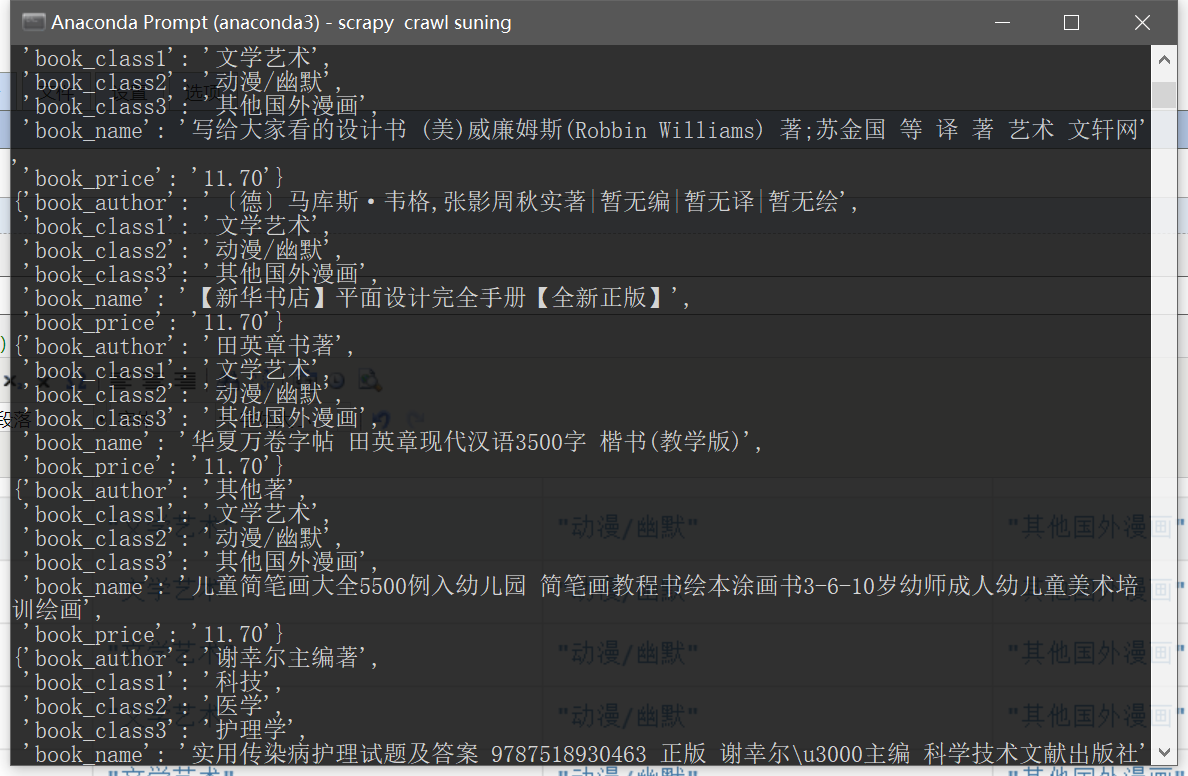

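7. Scrape the data above with Scrapy and store it in MongoDB

Steps:

1. Create the project

2. Write the spider

- Send a request to the home page and get the response

- Use XPath to extract the names of the first-, second-, and third-level categories, along with the book list page URL of each third-level category

- Send a request to each book list page and get the response

- Use XPath to extract each book's name and price information, plus the next-page URL

3. Run the spider and save the data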

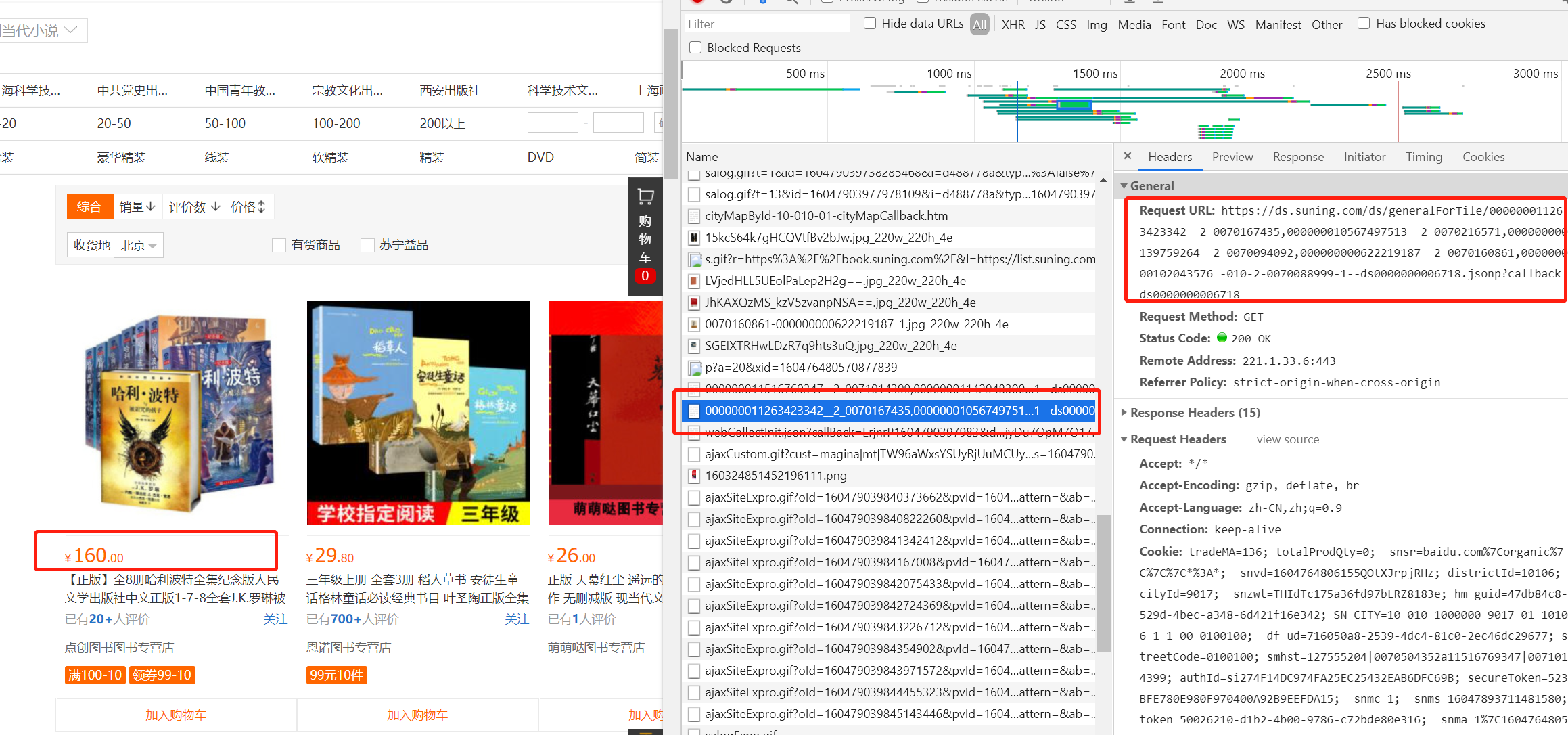

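Problems:

1. Getting the price. Testing showed that the price is not part of the list page response. Capturing the network traffic identified the URL that serves the price, and that URL is generated dynamically as the page scrolls.

Comparing several of these URLs and trimming them down step by step eventually revealed the pattern:

url = "https://ds.suning.com/ds/generalForTile/{}_-010-2-{}-1--".format(prdid, shopid)

prdid and shopid can both be extracted from the list page, which makes it possible to build the price URL for every book.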
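The price lookup described above can be sketched with the standard library alone. This is a minimal sketch, not the spider itself: the helper names `build_price_url` and `extract_price` are made up for illustration, and the sample `sa-data` string and JSON body are hypothetical values that only mimic the shape the spider relies on (a dict literal with `prdid` and `shopid`, and a JSON body with `rs[0].price`).

```python
import ast
import json

# URL pattern found by comparing the captured price requests
PRICE_URL = "https://ds.suning.com/ds/generalForTile/{}_-010-2-{}-1--"


def build_price_url(sa_data: str) -> str:
    """Build the price URL from the raw sa-data attribute of a list-page link."""
    # literal_eval only accepts Python literals, so unlike eval() it cannot run code
    info = ast.literal_eval(sa_data)
    return PRICE_URL.format(info["prdid"], info["shopid"])


def extract_price(body: str) -> str:
    """Read the price from the JSON body returned by the price URL."""
    return json.loads(body)["rs"][0]["price"]


# Hypothetical sample values, for illustration only
sample_sa_data = "{'prdid': '000000000123456789', 'shopid': '0070000001'}"
sample_body = '{"rs": [{"price": "39.80"}]}'

price_url = build_price_url(sample_sa_data)
price = extract_price(sample_body)
print(price_url)
print(price)
```

Keeping the URL construction and the JSON parsing in small functions makes it easy to check the pattern against a captured request before wiring it into a Scrapy callback.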

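2. The next-page URL of a list page. Pagination keeps going for as long as the current page contains a "下一页" (next page) link; when that link is missing, the last page has been reached.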
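The paging rule can be sketched as a small helper. This is only an illustration: `next_page_url` is a hypothetical name, the list-page URLs below are made-up examples, and `next_href` stands for whatever the XPath `//a[@title='下一页']/@href` returns (`None` when the link is missing).

```python
from typing import Optional
from urllib.parse import urljoin


def next_page_url(current_url: str, next_href: Optional[str]) -> Optional[str]:
    """Return the absolute next-page URL, or None when there is no next page."""
    if not next_href:
        # No "下一页" link on this page: stop paging
        return None
    # The href is relative to the list site, so resolve it against the current page
    return urljoin(current_url, next_href)


# Hypothetical list-page URLs, for illustration only
current = "https://list.suning.com/1-502320-0.html"
following = next_page_url(current, "/1-502320-1.html")
last = next_page_url(current, None)
print(following)
print(last)
```

In a Scrapy callback the same check decides whether another request for the list-page callback is yielded.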

Code:

- items.py

      import scrapy


      class BookItem(scrapy.Item):
          book_class1 = scrapy.Field()  # first-level category
          book_class2 = scrapy.Field()  # second-level category
          book_class3 = scrapy.Field()  # third-level category
          book_name = scrapy.Field()    # book title
          book_author = scrapy.Field()  # book author
          book_price = scrapy.Field()   # book price

- settings.py

      BOT_NAME = 'book'

      SPIDER_MODULES = ['book.spiders']
      NEWSPIDER_MODULE = 'book.spiders'

      LOG_LEVEL = "WARNING"

      # Crawl responsibly by identifying yourself (and your website) on the user-agent
      USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36'

      # Obey robots.txt rules
      ROBOTSTXT_OBEY = True

      # Configure maximum concurrent requests performed by Scrapy (default: 16)
      #CONCURRENT_REQUESTS = 32

      # Configure a delay for requests for the same website (default: 0)
      # See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay
      # See also autothrottle settings and docs
      DOWNLOAD_DELAY = 3
      # The download delay setting will honor only one of:
      #CONCURRENT_REQUESTS_PER_DOMAIN = 16
      #CONCURRENT_REQUESTS_PER_IP = 16

      # Disable cookies (enabled by default)
      #COOKIES_ENABLED = False

      # Disable Telnet Console (enabled by default)
      #TELNETCONSOLE_ENABLED = False

      # Override the default request headers:
      #DEFAULT_REQUEST_HEADERS = {
      #   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
      #   'Accept-Language': 'en',
      #}

      # Enable or disable spider middlewares
      # See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
      #SPIDER_MIDDLEWARES = {
      #    'book.middlewares.BookSpiderMiddleware': 543,
      #}

      # Enable or disable downloader middlewares
      # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
      #DOWNLOADER_MIDDLEWARES = {
      #    'book.middlewares.BookDownloaderMiddleware': 543,
      #}

      # Enable or disable extensions
      # See https://docs.scrapy.org/en/latest/topics/extensions.html
      #EXTENSIONS = {
      #    'scrapy.extensions.telnet.TelnetConsole': None,
      #}

      # Configure item pipelines
      # See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
      ITEM_PIPELINES = {
          'book.pipelines.BookPipeline': 300,
      }

      # Enable and configure the AutoThrottle extension (disabled by default)
      # See https://docs.scrapy.org/en/latest/topics/autothrottle.html
      #AUTOTHROTTLE_ENABLED = True
      # The initial download delay
      #AUTOTHROTTLE_START_DELAY = 5
      # The maximum download delay to be set in case of high latencies
      #AUTOTHROTTLE_MAX_DELAY = 60
      # The average number of requests Scrapy should be sending in parallel to
      # each remote server
      #AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
      # Enable showing throttling stats for every response received:
      #AUTOTHROTTLE_DEBUG = False

      # Enable and configure HTTP caching (disabled by default)
      # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
      #HTTPCACHE_ENABLED = True
      #HTTPCACHE_EXPIRATION_SECS = 0
      #HTTPCACHE_DIR = 'httpcache'
      #HTTPCACHE_IGNORE_HTTP_CODES = []
      #HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

      # MongoDB host
      MONGODB_HOST = "127.0.0.1"
      # MongoDB port
      MONGODB_PORT = 27017
      # Database name
      MONGODB_DBNAME = "book"
      # Name of the collection that stores the data
      MONGODB_SHEETNAME = "book"

- pipelines.py

      import pymongo
      from itemadapter import ItemAdapter
      from scrapy.utils.project import get_project_settings

      settings = get_project_settings()


      class BookPipeline:
          def __init__(self):
              host = settings["MONGODB_HOST"]
              port = settings["MONGODB_PORT"]
              dbname = settings["MONGODB_DBNAME"]
              sheetname = settings["MONGODB_SHEETNAME"]
              # Open the MongoDB connection
              client = pymongo.MongoClient(host=host, port=port)
              # Select the database
              mydb = client[dbname]
              # Collection that stores the scraped books
              self.post = mydb[sheetname]

          def process_item(self, item, spider):
              data = ItemAdapter(item).asdict()
              # insert_one() replaces Collection.insert(), which was removed in PyMongo 4
              self.post.insert_one(data)
              print(item)
              return item

- spider.py

      import ast
      import copy
      import json
      import re

      import scrapy

      from book.items import BookItem


      class SuningSpider(scrapy.Spider):
          name = 'suning'
          allowed_domains = ['suning.com']
          start_urls = ['https://book.suning.com/']

          def parse(self, response):
              class1_list = response.xpath("//div[@class='menu-item'][position()<8]")
              # Locator for the second-level categories of the n-th menu
              loc2 = "//div[@class='menu-sub'][{}]/div[@class='submenu-left']/p"
              for index, class1 in enumerate(class1_list, start=1):
                  item = BookItem()
                  # First-level category
                  item["book_class1"] = class1.xpath(".//h3/a/text()").extract_first()
                  for class2 in response.xpath(loc2.format(index)):
                      # Second-level category
                      item["book_class2"] = class2.xpath("./a/text()").extract_first()
                      # Assumption: each <p> heading is directly followed by the <ul>
                      # that lists its own third-level categories
                      for class3 in class2.xpath("./following-sibling::ul[1]/li"):
                          # Third-level category
                          item["book_class3"] = class3.xpath("./a/text()").extract_first()
                          # Book list page URL
                          book_list_href = class3.xpath("./a/@href").extract_first()
                          if book_list_href is None:
                              continue
                          # Copy the item so later loop iterations cannot overwrite it
                          yield scrapy.Request(book_list_href, callback=self.parse_book_list,
                                               meta={"item": copy.deepcopy(item)})

          # Handles a book list page
          def parse_book_list(self, response):
              # The item is passed along through meta
              item = response.meta["item"]
              for book in response.xpath("//div[@class='img-block']/a"):
                  # sa-data holds a dict literal; literal_eval parses it without running code
                  sa_data = ast.literal_eval(book.xpath("./@sa-data").extract_first())
                  # Build the URL that serves the price
                  book_price_url = "https://ds.suning.com/ds/generalForTile/{}_-010-2-{}-1--".format(
                      sa_data["prdid"], sa_data["shopid"])
                  # Book detail page URL
                  book_href = "https:" + book.xpath("./@href").extract_first()
                  # Fetch the price first, then the detail page, so one item collects both
                  yield scrapy.Request(book_price_url, callback=self.parse_book_price,
                                       meta={"item": copy.deepcopy(item), "book_href": book_href})

              # Next-page URL
              next_url_tmp = response.xpath("//a[@title='下一页']/@href").extract_first()
              if next_url_tmp is not None:
                  next_url = "https://list.suning.com" + next_url_tmp
                  # The next page needs the category item as well
                  yield scrapy.Request(next_url, callback=self.parse_book_list, meta={"item": item})

          # Reads the price, then requests the detail page
          def parse_book_price(self, response):
              item = response.meta["item"]
              item["book_price"] = json.loads(response.text)["rs"][0]["price"]
              yield scrapy.Request(response.meta["book_href"], callback=self.parse_book_detail,
                                   meta={"item": item})

          # Handles a book detail page
          def parse_book_detail(self, response):
              item = response.meta["item"]
              # Book title
              item["book_name"] = "".join(
                  response.xpath("//div[@class='proinfo-title']/h1/text()").extract()).strip()
              # Book author
              author = response.xpath("//li[@class='pb-item']/text()").extract_first()
              # Strip all whitespace from the author string
              if author is not None:
                  item["book_author"] = re.sub(r"\s+", "", author)
              yield item
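The spider hands the item to each callback through `meta`. `meta` stores a reference to the object rather than a copy, so an item that keeps being modified inside a loop can reach every callback carrying only the values of the last iteration. The sketch below illustrates the effect with plain dicts instead of Scrapy objects; the function names and category names are hypothetical.

```python
import copy


def queue_shared(categories):
    """Queue one meta dict per category, reusing a single item object."""
    item = {}
    pending = []
    for name in categories:
        item["book_class3"] = name
        pending.append({"item": item})  # every entry points at the same dict
    return pending


def queue_copied(categories):
    """Queue one meta dict per category, each with its own copy of the item."""
    item = {}
    pending = []
    for name in categories:
        item["book_class3"] = name
        pending.append({"item": copy.deepcopy(item)})  # snapshot of the current values
    return pending


# Hypothetical category names, for illustration only
categories = ["Novels", "Poetry", "Essays"]
shared = [meta["item"]["book_class3"] for meta in queue_shared(categories)]
copied = [meta["item"]["book_class3"] for meta in queue_copied(categories)]
print(shared)  # ['Essays', 'Essays', 'Essays']
print(copied)  # ['Novels', 'Poetry', 'Essays']
```

Scrapy runs callbacks after the loop that scheduled them has moved on, which is why copying the item at the moment the request is created keeps each book attached to the right categories.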