
https://www.pythonprogramming.net/words-as-features-nltk-tutorial/

Converting words to Features with NLTK

In this tutorial, we're going to build off the previous video by compiling feature lists of words from the positive reviews and from the negative reviews, in the hope of spotting trends in the specific types of words that appear in positive or negative reviews.

To start, our code:

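```python
import nltk
import random
from nltk.corpus import movie_reviews  # requires nltk.download('movie_reviews')

# One (list of words, 'pos' or 'neg') tuple per review file
documents = [(list(movie_reviews.words(fileid)), category)
             for category in movie_reviews.categories()
             for fileid in movie_reviews.fileids(category)]

random.shuffle(documents)

all_words = []
for w in movie_reviews.words():
    all_words.append(w.lower())

all_words = nltk.FreqDist(all_words)

# most_common(3000) returns the 3,000 most frequent (word, count) pairs.
# FreqDist.keys() is not ordered by frequency in NLTK 3, so slicing keys() would not.
word_features = [w for (w, count) in all_words.most_common(3000)]
```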

Mostly the same as before, except for a new variable, word_features, which contains the 3,000 most common words. Next, we're going to build a quick function that checks which of these 3,000 words appear in a given document, marking each one as True (present) or False (absent):

```python
def find_features(document):
    words = set(document)  # set membership tests are O(1) on average
    features = {}
    for w in word_features:
        features[w] = (w in words)
    return features
```
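The function above can be tried without downloading the corpus. This is a minimal sketch on a made-up vocabulary and review; unlike the tutorial's version, it takes word_features as an argument instead of reading a global and builds the dict with a comprehension. Both are illustrative choices, not the tutorial's code.

```python
def find_features(document, word_features):
    # Same logic as the tutorial's find_features, with word_features passed in
    words = set(document)
    return {w: (w in words) for w in word_features}

# Hypothetical toy vocabulary and review, for illustration only
word_features = ["great", "boring", "plot", "awful"]
review = ["the", "plot", "was", "great", ",", "truly", "great", "."]

features = find_features(review, word_features)
print(features)  # {'great': True, 'boring': False, 'plot': True, 'awful': False}
```

Repeated words in the review ("great" twice) still produce a single True, because only presence is recorded, not counts.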

Next, we can print the feature set for a single review:

```python
print(find_features(movie_reviews.words('neg/cv000_29416.txt')))
```
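Printing all 3,000 entries makes the output hard to scan. A small helper can keep only the words that actually occur; present_words is a hypothetical name, not part of NLTK, and the feature dict below is made up but has the same shape as find_features output.

```python
def present_words(features):
    """Return, in vocabulary order, the words whose feature value is True."""
    return [word for word, present in features.items() if present]

# Toy feature dict shaped like find_features output (values made up)
features = {"great": True, "boring": False, "plot": True, "awful": False}
print(present_words(features))  # ['great', 'plot']
print(sum(features.values()))   # 2  (True counts as 1)
```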

Then we can do this for all of our documents, saving each document's feature-existence booleans together with its positive or negative category:

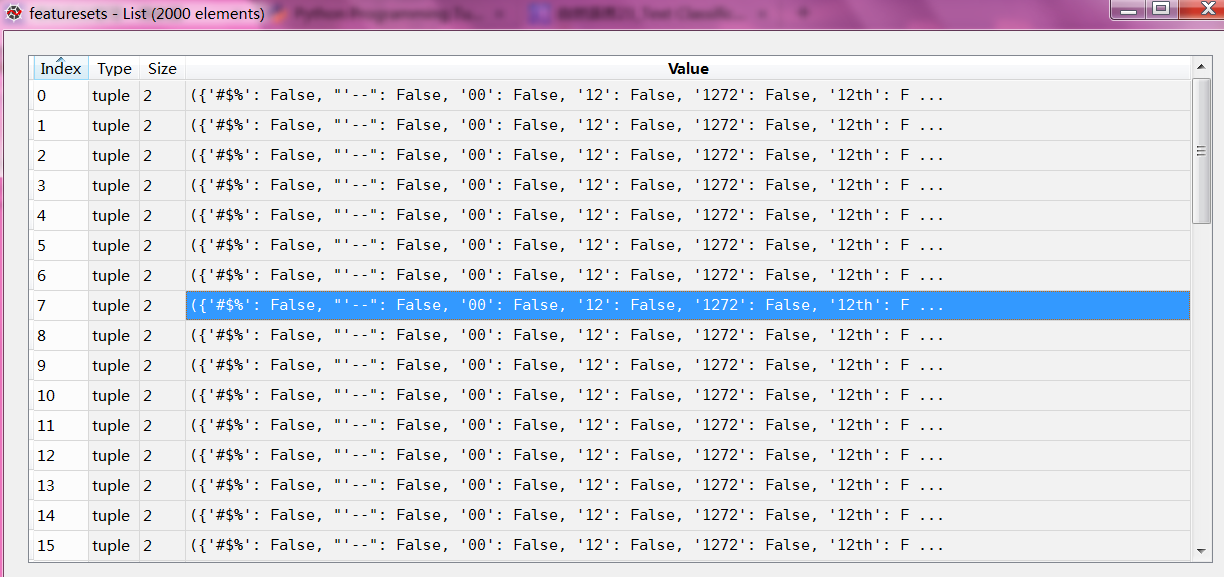

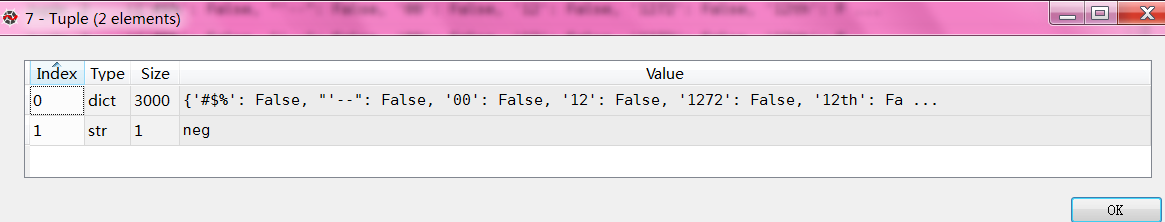

```python
featuresets = [(find_features(rev), category) for (rev, category) in documents]
```

Awesome, now that we have our features and labels, what is next? Typically the next step is to go ahead and train an algorithm, then test it. So, let's go ahead and do that, starting with the Naive Bayes classifier in the next tutorial!
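Training and testing requires splitting featuresets into two parts. The following is a minimal sketch under stated assumptions: train_test_split is a hypothetical helper (not part of NLTK), and the toy featuresets only mimic the real (feature dict, label) shape. Because documents was already shuffled with random.shuffle, a plain slice of the real featuresets would also work; the helper shuffles a seeded copy so it does not rely on that.

```python
import random

def train_test_split(featuresets, test_size, seed=None):
    """Hypothetical helper: shuffle a copy of featuresets and
    hold out the last test_size items for testing."""
    if not 0 <= test_size <= len(featuresets):
        raise ValueError("test_size must be between 0 and len(featuresets)")
    items = list(featuresets)
    random.Random(seed).shuffle(items)  # seeded, so the split is reproducible
    cut = len(items) - test_size
    return items[:cut], items[cut:]

# Toy featuresets in the same (feature dict, label) shape as the tutorial's
featuresets = [({"great": i % 2 == 0}, "pos" if i % 2 == 0 else "neg")
               for i in range(10)]
train_set, test_set = train_test_split(featuresets, test_size=2, seed=0)
print(len(train_set), len(test_set))  # 8 2
```

With the real featuresets, train_set could then be passed to nltk.NaiveBayesClassifier.train(train_set) and the resulting classifier scored with nltk.classify.accuracy(classifier, test_set).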

```python
# -*- coding: utf-8 -*-
"""
Created on Sun Dec 4 09:27:48 2016

@author: daxiong
"""

import nltk
import random
from nltk.corpus import movie_reviews

documents = [(list(movie_reviews.words(fileid)), category)
             for category in movie_reviews.categories()
             for fileid in movie_reviews.fileids(category)]

random.shuffle(documents)

all_words = []
for w in movie_reviews.words():
    all_words.append(w.lower())

# dict_allWords is a dictionary (an nltk.FreqDist) storing the frequency distribution of all words
dict_allWords = nltk.FreqDist(all_words)

# keys() lists all the words; [:3000] takes the first 3,000 of them.
# Note: in NLTK 3, FreqDist.keys() is not sorted by frequency, so these are not
# the 3,000 most common words (note the rare words in the output below);
# dict_allWords.most_common(3000) returns those instead.
word_features = list(dict_allWords.keys())[:3000]

'''
'combating',
'mouthing',
'markings',
'directon',
'ppk',
'vanishing',
'victories',
'huddleston',
...]
'''
```
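The rare and misspelled words in the listing above ('directon', 'ppk') hint that keys() is not ordered by frequency. A minimal sketch with collections.Counter shows the difference. It assumes Python 3.7+, where dict keys keep first-insertion order, and relies on nltk.FreqDist being a Counter subclass in NLTK 3, so the same two calls apply to dict_allWords. The token list is made up.

```python
from collections import Counter

# Toy token stream (made up for illustration)
tokens = ["zebra", "the", "movie", "the", "plot", "the", "movie", ","]
freq = Counter(tokens)

# keys() follows first-insertion order, not frequency
first_n = list(freq.keys())[:2]
# most_common(n) sorts by count, highest first
top_n = [word for word, count in freq.most_common(2)]

print(first_n)  # ['zebra', 'the']
print(top_n)    # ['the', 'movie']
```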

```python
def find_features(document):
    words = set(document)
    features = {}
    for w in word_features:
        features[w] = (w in words)
    return features

words = movie_reviews.words('neg/cv000_29416.txt')
'''
Out[78]: ['plot', ':', 'two', 'teen', 'couples', 'go', 'to', ...]

type(words)
Out[65]: nltk.corpus.reader.util.StreamBackedCorpusView
'''

# Deduplicate: words1 is a set
words1 = set(words)
'''
words1
{'!',
 '"',
 '&',
 "'",
 '(',
 ')',
 ...
 'witch',
 'with',
 'world',
 'would',
 'wrapped',
 'write',
 'years',
 'you',
 'your'}
'''
```
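The deduplicate-then-test steps can be checked on a toy token list: set() keeps one copy of each token, and a single feature is then just a membership test on that set. The tokens below are made up and stand in for movie_reviews.words(...).

```python
# Hypothetical toy token list standing in for movie_reviews.words(...)
tokens = ["the", "plot", "the", "film", "plot", "."]

words1 = set(tokens)             # duplicates collapse to one entry each
print(len(tokens), len(words1))  # 6 4

features = {}
features["victories"] = ("victories" in words1)  # membership test on the set
print(features)  # {'victories': False}
```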

```python
features = {}

# The word 'victories' is not in words1, so this evaluates to False
('victories' in words1)
'''
Out[73]: False
'''

features['victories'] = ('victories' in words1)
'''
features
Out[75]: {'victories': False}
'''

print(find_features(movie_reviews.words('neg/cv000_29416.txt')))
'''
 'schwarz': False,
 'supervisors': False,
 'geyser': False,
 'site': False,
 'fevered': False,
 'acknowledged': False,
 'ronald': False,
 'wroth': False,
 'degredation': False,
 ...}
'''

featuresets = [(find_features(rev), category) for (rev, category) in documents]
```

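The featuresets collection covers 2,000 files in total. Each file is represented by a tuple containing a feature dictionary (e.g. {'glory': False}) and its neg/pos category.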
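That structure can be checked programmatically. This sketch builds featuresets from a hypothetical three-review toy corpus, verifies that every entry is a (dict, label) tuple with one boolean per vocabulary word, and counts the labels. Run on the real featuresets, the same Counter line should report 2,000 entries split evenly, 1,000 neg and 1,000 pos, as in the movie_reviews corpus.

```python
from collections import Counter

def find_features(document, word_features):
    words = set(document)
    return {w: (w in words) for w in word_features}

# Hypothetical toy corpus of (tokens, label) pairs standing in for `documents`
documents = [
    (["a", "great", "film"], "pos"),
    (["boring", "and", "awful"], "neg"),
    (["great", "cast", ",", "awful", "plot"], "neg"),
]
word_features = ["great", "boring", "awful"]

featuresets = [(find_features(rev, word_features), category)
               for (rev, category) in documents]

for feats, label in featuresets:
    # each entry is a (dict, label) tuple with one boolean per vocabulary word
    assert isinstance(feats, dict) and label in ("pos", "neg")
    assert set(feats) == set(word_features)

print(len(featuresets))                            # 3
print(Counter(label for _, label in featuresets))  # Counter({'neg': 2, 'pos': 1})
print(featuresets[0])  # ({'great': True, 'boring': False, 'awful': False}, 'pos')
```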