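Assignment 1

(1) Crawl the 7-day weather forecasts for a given set of cities from the China Weather website (http://www.weather.com.cn) and save them to a database.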

Approach:

1. First, create a class that sets up the database and writes data to it.

2. Then create a second class that crawls the data.

Implementation:

    # 031804127wl

    from bs4 import BeautifulSoup
    from bs4 import UnicodeDammit
    import urllib.request
    import sqlite3


    class WeatherDB:
        """Creates the weathers table and writes forecast rows to it."""

        def openDB(self):
            self.con = sqlite3.connect("weathers.db")
            self.cursor = self.con.cursor()
            try:
                self.cursor.execute("create table weathers (wCity varchar(16), wDate varchar(16), wWeather varchar(64), "
                                    "wTemp varchar(32), constraint pk_weather primary key(wCity, wDate))")
            except sqlite3.OperationalError:
                # The table already exists: clear rows left over from a previous run.
                self.cursor.execute("delete from weathers")

        def closeDB(self):
            self.con.commit()
            self.con.close()

        def insert(self, city, date, weather, temp):
            try:
                self.cursor.execute("insert into weathers (wCity, wDate, wWeather, wTemp) values (?, ?, ?, ?)",
                                    (city, date, weather, temp))
            except Exception as err:
                print(err)

        def show(self):
            self.cursor.execute("select * from weathers")
            rows = self.cursor.fetchall()
            print("%-16s%-16s%-32s%-16s" % ("city", "date", "weather", "temp"))
            for row in rows:
                print("%-16s%-16s%-32s%-16s" % (row[0], row[1], row[2], row[3]))


    class WeatherForecast:
        """Downloads and parses the 7-day forecast page for each city."""

        def __init__(self):
            self.headers = {"User-Agent": "Mozilla/5.0"}
            self.cityCode = {"北京": "101010100", "上海": "101020100", "广州": "101280101", "深圳": "101280601"}

        def forecastCity(self, city):
            if city not in self.cityCode.keys():
                print(city + " code cannot be found")
                return

            url = "http://www.weather.com.cn/weather/" + self.cityCode[city] + ".shtml"
            try:
                req = urllib.request.Request(url, headers=self.headers)
                data = urllib.request.urlopen(req)
                data = data.read()
                # Let UnicodeDammit choose between utf-8 and gbk.
                dammit = UnicodeDammit(data, ["utf-8", "gbk"])
                data = dammit.unicode_markup
                soup = BeautifulSoup(data, "lxml")
                # Each <li> in this list holds one day of the forecast.
                lis = soup.select("ul[class='t clearfix'] li")
                for li in lis:
                    try:
                        date = li.select('h1')[0].text
                        weather = li.select('p[class="wea"]')[0].text
                        temp = li.select('p[class="tem"] span')[0].text + "/" + li.select('p[class="tem"] i')[0].text
                        print(city, date, weather, temp)
                        # Store the row in the database.
                        self.db.insert(city, date, weather, temp)
                    except Exception as err:
                        print(err)
            except Exception as err:
                print(err)

        def process(self, cities):
            self.db = WeatherDB()
            self.db.openDB()

            for city in cities:
                self.forecastCity(city)

            # self.db.show()
            self.db.closeDB()


    ws = WeatherForecast()
    ws.process(["北京", "上海", "广州", "深圳"])
    print("completed")

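Result screenshot:

(2) Reflections:

I learned how to create a database and store data in it, which also served as a review of material covered last semester.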
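One detail of the table definition is worth seeing in isolation: the composite primary key on (wCity, wDate) rejects a second row for the same city and date. The following is a minimal sketch, assuming an in-memory database instead of weathers.db and made-up sample rows rather than scraped data.

```python
import sqlite3

# In-memory database so the sketch leaves no file behind (an assumption;
# the assignment code writes to weathers.db).
con = sqlite3.connect(":memory:")
cur = con.cursor()
cur.execute(
    "create table weathers (wCity varchar(16), wDate varchar(16), "
    "wWeather varchar(64), wTemp varchar(32), "
    "constraint pk_weather primary key (wCity, wDate))"
)

# Illustrative rows, not real forecast data.
rows = [
    ("北京", "day 1", "sunny", "20/10"),
    ("北京", "day 1", "cloudy", "21/11"),  # same (city, date): rejected
    ("上海", "day 1", "light rain", "22/18"),
]

inserted = 0
for row in rows:
    try:
        cur.execute(
            "insert into weathers (wCity, wDate, wWeather, wTemp) values (?, ?, ?, ?)",
            row,
        )
        inserted += 1
    except sqlite3.IntegrityError as err:
        # Raised when the primary key (wCity, wDate) already exists.
        print("skipped duplicate:", err)

con.commit()
stored = cur.execute("select wCity, wWeather from weathers").fetchall()
print(inserted, stored)
con.close()
```

If overwriting the older row is preferred to skipping the newer one, SQLite's `insert or replace into ...` form is one option.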

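Assignment 2

(1) Use the requests and BeautifulSoup libraries to crawl stock information from a target site.

Approach:

1. Use the browser's built-in network capture tool to find the URL that loads the stock list.

2. Analyze the returned data and extract the fields.

3. Format the output.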
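Step 2 can be tried offline. The captured URL carries a `cb=` callback parameter, so the response is JSONP: a JSON object wrapped in a function call. The sketch below assumes a shortened, made-up response in which the records sit under `data.diff`; that shape, the helper name `strip_jsonp`, and the sample values are assumptions for illustration, not captured output.

```python
import json
import re


def strip_jsonp(text):
    """Return the JSON object wrapped in a JSONP call such as cb({...});"""
    match = re.search(r"^[^(]*\((.*)\)\s*;?\s*$", text, re.S)
    if match is None:
        raise ValueError("not a JSONP response")
    return json.loads(match.group(1))


# Made-up sample in the assumed response shape; values are not real quotes.
sample = (
    'jQuery1124_1601430405294({"data": {"diff": ['
    '{"f12": "000001", "f14": "Sample A", "f2": 10.5, "f3": 1.25},'
    '{"f12": "600127", "f14": "Sample B", "f2": 4.2, "f3": -0.5}'
    ']}});'
)

records = strip_jsonp(sample)["data"]["diff"]
for rec in records:
    # f12 = code, f14 = name, f2 = latest price, f3 = change in percent
    print(rec["f12"], rec["f14"], rec["f2"], str(rec["f3"]) + "%")
```

Parsing the whole payload with `json.loads` and indexing into it avoids depending on where a regular expression happens to stop inside the text.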

Implementation:

    # 031804127wl

    import re
    import requests
    import json
    import pandas as pd

    # Show all columns
    pd.set_option('display.max_columns', None)
    # Show all rows
    pd.set_option('display.max_rows', None)
    # Align column headers with the data (treat East Asian characters as wide)
    pd.set_option('display.unicode.ambiguous_as_wide', True)
    pd.set_option('display.unicode.east_asian_width', True)
    # Allow up to 5000 characters per output line
    pd.set_option('display.width', 5000)


    def HTML(url):
        gupiao_list = []  # one tuple per stock, header row first
        headers = {
            "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) "
                          "Chrome/80.0.3987.149 Safari/537.36"}
        try:
            r = requests.get(url, headers=headers, timeout=30)
            r.raise_for_status()
            r.encoding = r.apparent_encoding
            html = r.text
        except Exception as e:
            print("wrong:", e)
            return None
        # Use a regular expression to extract the list of records under "data"
        pat = re.compile(r"\[\{.*?\}\]")
        data = pat.findall(html)
        js = json.loads(data[0])  # parse the JSON text into a list of dicts
        gupiao_list.append(("Code", "Name", "Latest price", "Change %", "Change", "Volume", "Turnover",
                            "Amplitude", "High", "Low", "Open", "Prev close", "Volume ratio"))
        for i in range(len(js)):
            diezhangfu = str(js[i]["f3"]) + "%"  # change in percent
            zhenfu = str(js[i]["f7"]) + "%"  # amplitude in percent
            gupiao_list.append((js[i]["f12"], js[i]["f14"], js[i]["f2"], diezhangfu, js[i]["f4"], js[i]["f5"], js[i]["f6"],
                                zhenfu, js[i]["f15"], js[i]["f16"], js[i]["f17"], js[i]["f18"], js[i]["f10"]))
        # Put the rows into a DataFrame for aligned output
        df = pd.DataFrame(gupiao_list)
        return df


    def main():
        # Crawl the first two pages
        for i in range(1, 3):
            url = ("http://28.push2.eastmoney.com/api/qt/clist/get?cb=jQuery11240023072405517482908_1601430405294&pn="
                   + str(i) + "&pz"
                   "=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3&fs=m:0+t:6,m:0+t:13,m:0+t:80,"
                   "m:1+t:2,m:1+t:23&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,"
                   "f25,f22,f11,f62,f128,f136,f115,f152&_=1601430405304")
            df = HTML(url)
            if df is not None:
                print(df)


    main()

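Result screenshot:

(2) Reflections

I learned to use the browser's network capture tool to obtain the data behind a web page and got a first look at the json library. Because the final output is fairly large, I used pandas to format it, which gave me another way to make output more readable.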

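Assignment 3

(1) Select stocks whose code is a self-chosen 3 digits followed by the last 3 digits of your student ID, and retrieve their information.

Approach:

The last three digits of my student ID are 127, so I simply print the stocks whose code ends in 127. Only a few stocks match, so pandas is unnecessary and the output is formatted by hand. Because a stock's position in the list changes constantly, adjust the page range whenever nothing is printed.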
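The selection rule and the advice to widen the page range can be sketched without network access. The code below assumes records shaped like the ones the crawler parses (a dict with the stock code under `f12`); `fetch_page` is a hypothetical stand-in that returns canned pages instead of calling the API, and all codes are made up.

```python
def matches_suffix(record, suffix="127"):
    """True when the stock code (field f12) ends with the given suffix."""
    return str(record["f12"]).endswith(suffix)


# Canned pages standing in for API responses; the codes are made up.
FAKE_PAGES = {
    1: [{"f12": "000001"}, {"f12": "300750"}],
    2: [{"f12": "600127"}, {"f12": "002594"}],
    3: [{"f12": "000127"}],
}


def fetch_page(page):
    """Hypothetical stand-in for one HTTP request; returns a list of records."""
    return FAKE_PAGES.get(page, [])


def find_by_suffix(first_page, last_page, suffix="127"):
    """Scan pages first_page..last_page and collect (page, code) matches."""
    found = []
    for page in range(first_page, last_page + 1):
        for record in fetch_page(page):
            if matches_suffix(record, suffix):
                found.append((page, record["f12"]))
    return found


print(find_by_suffix(1, 1))  # no match on page 1, so widen the range
print(find_by_suffix(1, 3))
```

Returning the page number with each match shows which part of the list a stock currently sits in, which helps when choosing the next range to scan.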

Implementation:

    # 031804127wl

    import re
    import requests
    import json


    def HTML(url):
        headers = {
            "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) "
                          "Chrome/80.0.3987.149 Safari/537.36"}
        try:
            r = requests.get(url, headers=headers, timeout=30)
            r.raise_for_status()
            r.encoding = r.apparent_encoding
            html = r.text
        except Exception as e:
            print("wrong:", e)
            return
        # Use a regular expression to extract the list of records under "data"
        pat = re.compile(r"\[\{.*?\}\]")
        data = pat.findall(html)
        js = json.loads(data[0])  # parse the JSON text into a list of dicts
        for i in range(len(js)):
            diezhangfu = str(js[i]["f3"]) + "%"  # change in percent
            zhenfu = str(js[i]["f7"]) + "%"  # amplitude in percent
            daima = js[i]["f12"]  # six-digit stock code
            # Print only the stocks whose code ends in 127
            if daima[3:] == '127':
                print("Code:" + str(js[i]["f12"]) + " " + "Name:" + str(js[i]["f14"]) + " "
                      + "Latest price:" + str(js[i]["f2"]) + " " + "Change %:" + str(diezhangfu) + " "
                      + "Change:" + str(js[i]["f4"]) + " " + "Volume:" + str(js[i]["f5"]) + " "
                      + "Turnover:" + str(js[i]["f6"]) + " " + "Amplitude:" + str(zhenfu) + " "
                      + "High:" + str(js[i]["f15"]) + " " + "Low:" + str(js[i]["f16"]) + " "
                      + "Open:" + str(js[i]["f17"]) + " " + "Prev close:" + str(js[i]["f18"]) + " "
                      + "Volume ratio:" + str(js[i]["f10"]))


    def main():
        # Crawl pages 30 to 40
        for i in range(30, 41):
            url = ("http://28.push2.eastmoney.com/api/qt/clist/get?cb=jQuery11240023072405517482908_1601430405294&pn="
                   + str(i) + "&pz"
                   "=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3&fs=m:0+t:6,m:0+t:13,m:0+t:80,"
                   "m:1+t:2,m:1+t:23&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,"
                   "f25,f22,f11,f62,f128,f136,f115,f152&_=1601430405304")
            HTML(url)


    main()

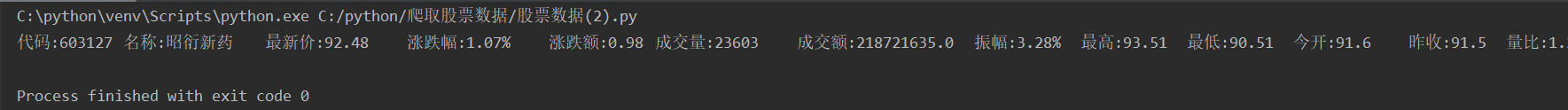

Result screenshot:

(2) Reflections

This assignment only changes how the output of the previous assignment is filtered and printed.