下载一长篇中文文章。

从文件读取待分析文本。

news = open('gzccnews.txt','r',encoding = 'utf-8')

安装与使用jieba进行中文分词。

pip install jieba

import jieba

list(jieba.lcut(news))

生成词频统计

排序

排除语法型词汇,代词、冠词、连词

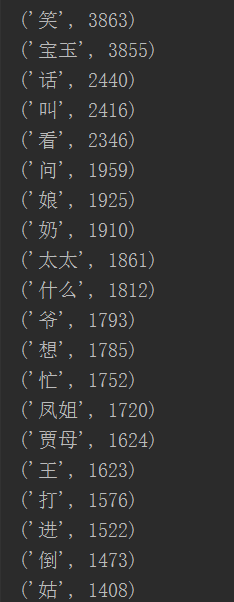

输出词频最大TOP20

import jieba f = open('novel.txt','r',encoding='utf-8') novel = f.read() f.close() exclude = { ' ','u3000','-',' ','了','的','不','一','来','道','人','是','说','我','这','他', '你','儿','着','也','去','有','个','玉','宝','子','里','贾','又','那','们','见','只', '太','么','好','在','家','上','便','姐','头','就','大','得','听','出','老','回','母', '要','知','日','下','都','二','心','事','还','过','自','起','没','到','两','呢','如', '些','时','今','小','凤','什','因','等','才','可','夫','面','之','和','中','罢','几', '一个','天','正','黛','此','后'} sep = ''',。“”‘’’、?!:''' for c in sep: novel = novel.replace(c,' ') novels = list(jieba.lcut(novel)) Dict= {} Set = set(novels) - exclude for w in Set: Dict[w] = novel.count(w) List = list(Dict.items()) List.sort(key=lambda x:x[1],reverse=True) for i in range(20): print(List[i])