Dataset link

Classification with the sklearn interface

from pprint import pprint

from sklearn.metrics import accuracy_score

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import OneHotEncoder

from sklearn.externals import joblib

import numpy as np

from xgboost.sklearn import XGBClassifier

# 以分隔符,读取文件,得到的是一个二维列表

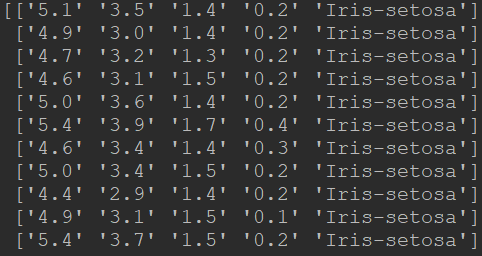

iris = np.loadtxt('iris.data', dtype=str, delimiter=',', unpack=False, encoding='utf-8')

# 前4列是特征

data = iris[:, :4].astype(np.float)

# 最后一列是标签,我们将其转换为二维列表

target = iris[:, -1][:, np.newaxis]

# 对标签进行onehot编码后还原成数字

enc = OneHotEncoder()

target = enc.fit_transform(target).astype(np.int).toarray()

target = [list(oh).index(1) for oh in target]

# 划分训练数据和测试数据

X_train, X_test, y_train, y_test = train_test_split(data, target, test_size=0.2, random_state=1)

# 模型训练

params = {

'n_estimators': 100,

'max_depth': 5,

'min_child_weight': 1,

'subsample': 0.8,

'colsample_bytree': 0.8,

'reg_alpha': 0,

'reg_lambda': 1,

'learning_rate': 0.1}

xgb = XGBClassifier(random_state=1, **params)

xgb.fit(X_train, y_train)

# 模型存储

joblib.dump(xgb, 'xgb_model.pkl')

# 模型加载

gbdt = joblib.load('xgb_model.pkl')

# 模型预测

y_pred = xgb.predict(X_test)

# 模型评估

print('The accuracy of prediction is:', accuracy_score(y_test, y_pred))

# 特征重要度

print('Feature importances:', list(xgb.feature_importances_))

Result:

The accuracy of prediction is: 0.9666666666666667

Feature importances: [0.002148238569679191, 0.0046703830672789074, 0.33366676380518245, 0.6595146145578594]
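Both printed lines can be checked with a little arithmetic. The test set holds 30 samples (20% of the 150 iris rows), so an accuracy of 0.9667 means 29/30, or exactly one misclassified flower. `feature_importances_` is normalized to sum to 1, and the two petal measurements account for about 99% of it. The sketch below reuses the numbers printed above. The feature names assume the column order of the UCI `iris.data` file: sepal length, sepal width, petal length, petal width.

```python
# Values copied from the classification output above
accuracy = 0.9666666666666667
importances = [0.002148238569679191, 0.0046703830672789074,
               0.33366676380518245, 0.6595146145578594]

# Assumed column order of iris.data (UCI dataset description)
feature_names = ['sepal length', 'sepal width', 'petal length', 'petal width']

# test_size=0.2 on the 150 iris samples leaves 30 test samples
n_test = 30
n_correct = round(accuracy * n_test)
print(f'{n_correct}/{n_test} correct, {n_test - n_correct} misclassified')

# The importances are normalized, so they sum to 1 (up to floating-point error)
print('sum of importances:', round(sum(importances), 6))

# Rank the features from most to least important
ranking = sorted(zip(feature_names, importances), key=lambda pair: pair[1], reverse=True)
for name, score in ranking:
    print(f'{name:>13}: {score:.4f}')
```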

Regression with the sklearn interface

```python
from sklearn.datasets import make_regression
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split
from xgboost.sklearn import XGBRegressor

X, y = make_regression(n_samples=100, n_features=1, noise=20)

# Split into training and test sets
train_X, test_X, train_y, test_y = train_test_split(X, y, test_size=0.25, random_state=1)

# Create the XGBoost model and train (fit) it on the training set.
# 'reg:squarederror' is the current name of the old 'reg:linear' objective.
# With booster='gblinear', tree-specific parameters (max_depth, gamma, min_child_weight,
# max_delta_step, subsample, colsample_*) have no effect, so they are not passed here.
my_model = XGBRegressor(
    learning_rate=0.01,
    n_estimators=5,
    objective='reg:squarederror',
    booster='gblinear',
    n_jobs=50,
    reg_alpha=0,       # L1 regularization on the linear weights
    reg_lambda=1,      # L2 regularization on the linear weights
    base_score=0.5,    # initial prediction for every sample
    random_state=0)
my_model.fit(train_X, train_y)

# Predict on the test set
predictions = my_model.predict(test_X)

# Evaluate the predictions with the mean absolute error (true values first, then predictions)
print("Mean Absolute Error : " + str(mean_absolute_error(test_y, predictions)))
```

Result:

Mean Absolute Error : 47.98486383348952
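The large error most likely comes from underfitting. With `learning_rate=0.01` and only 5 boosting rounds, the `gblinear` model stays close to its starting prediction of `base_score=0.5`. The numpy-only sketch below compares a fully fitted line with such a near-constant predictor. It uses hypothetical synthetic data, not the data above. The data only roughly mirrors `make_regression(n_features=1, noise=20)`: a standard-normal feature and Gaussian noise with standard deviation 20. The coefficient 60.0 and the seed are arbitrary assumptions. `mean_absolute_error` here is a local re-implementation of the metric, not the sklearn function.

```python
import numpy as np

def mean_absolute_error(y_true, y_pred):
    """Local re-implementation of MAE: the mean of |y_true - y_pred|."""
    return float(np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred))))

rng = np.random.default_rng(0)

# Hypothetical data: linear target (assumed coefficient 60.0) plus noise with std 20
n = 100
x = rng.standard_normal(n)
y = 60.0 * x + rng.normal(scale=20.0, size=n)

# 75/25 split, matching test_size=0.25
x_train, x_test = x[:75], x[75:]
y_train, y_test = y[:75], y[75:]

# Fully fitted linear model: ordinary least squares for slope and intercept
slope, intercept = np.polyfit(x_train, y_train, deg=1)
mae_ols = mean_absolute_error(y_test, slope * x_test + intercept)

# Barely trained model: predicts the constant base_score=0.5 for every sample
mae_const = mean_absolute_error(y_test, np.full_like(y_test, 0.5))

print(f'MAE of least-squares line:     {mae_ols:.2f}')
print(f'MAE of constant 0.5 predictor: {mae_const:.2f}')
```

The least-squares line should land near the noise level. The constant predictor's error is on the same scale as the magnitude of the targets, which is the pattern the printed MAE above suggests. Allowing more boosting rounds or a larger learning rate should move the `gblinear` model toward the least-squares fit.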