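Kubernetes Network Plugin System and flannel Basics

Author: Yin Zhengjie (尹正杰)

Copyright notice: this is an original work. Reproduction is not permitted; violators will be held legally liable.

I. Overview of Kubernetes Network Plugins

For the network model that governs Pod-to-Pod communication in a Kubernetes cluster, see the official documentation: https://kubernetes.io/docs/concepts/cluster-administration/networking/ For the network plugin solutions available to a Kubernetes cluster, see: https://kubernetes.io/docs/concepts/extend-kubernetes/compute-storage-net/network-plugins/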

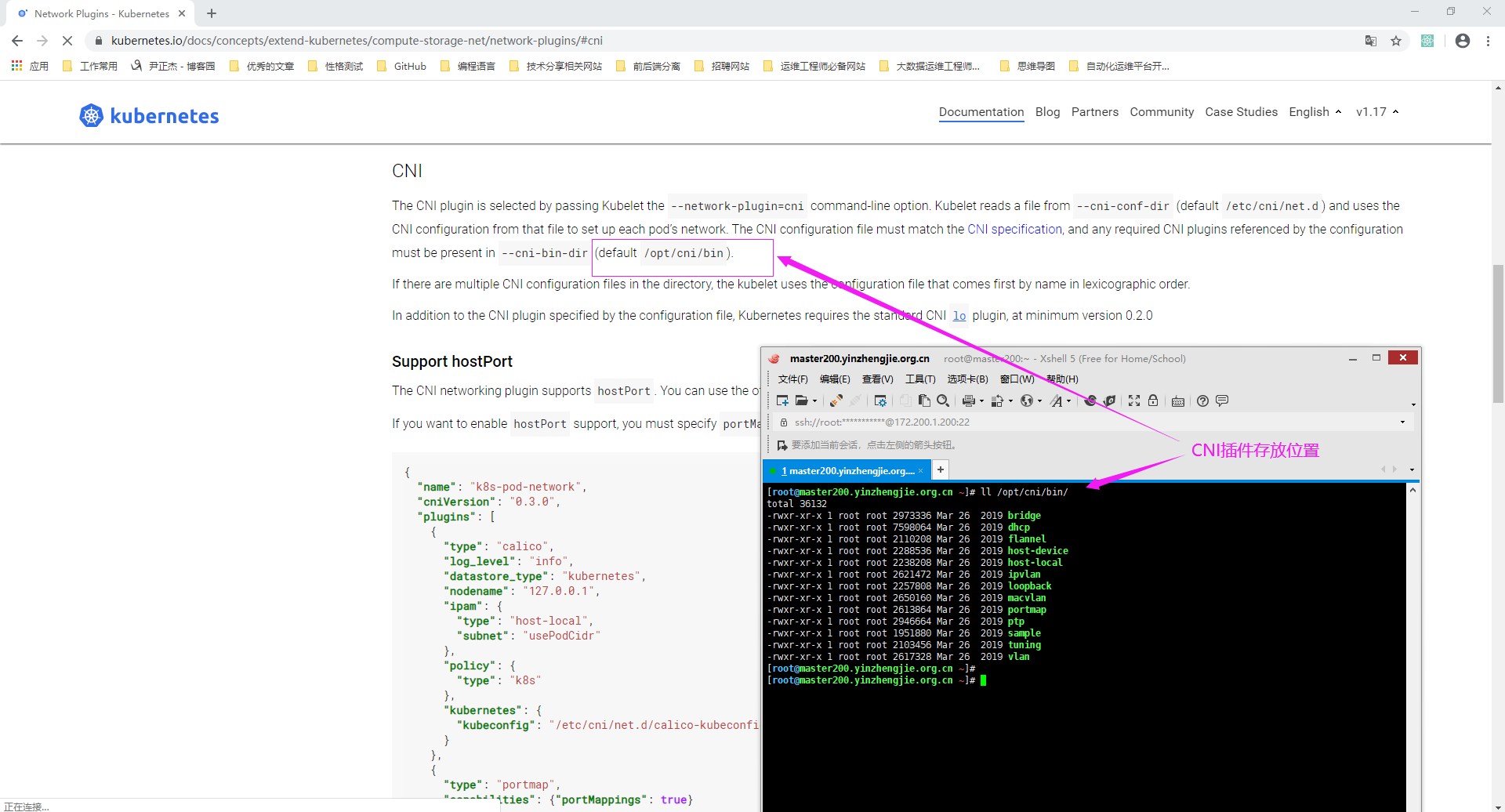

1>. Check where the CNI plugins are stored

    [root@master200.yinzhengjie.org.cn ~]# ll /opt/cni/bin/
    total 36132
    -rwxr-xr-x 1 root root 2973336 Mar 26 2019 bridge
    -rwxr-xr-x 1 root root 7598064 Mar 26 2019 dhcp
    -rwxr-xr-x 1 root root 2110208 Mar 26 2019 flannel
    -rwxr-xr-x 1 root root 2288536 Mar 26 2019 host-device
    -rwxr-xr-x 1 root root 2238208 Mar 26 2019 host-local
    -rwxr-xr-x 1 root root 2621472 Mar 26 2019 ipvlan
    -rwxr-xr-x 1 root root 2257808 Mar 26 2019 loopback
    -rwxr-xr-x 1 root root 2650160 Mar 26 2019 macvlan
    -rwxr-xr-x 1 root root 2613864 Mar 26 2019 portmap
    -rwxr-xr-x 1 root root 2946664 Mar 26 2019 ptp
    -rwxr-xr-x 1 root root 1951880 Mar 26 2019 sample
    -rwxr-xr-x 1 root root 2103456 Mar 26 2019 tuning
    -rwxr-xr-x 1 root root 2617328 Mar 26 2019 vlan
    [root@master200.yinzhengjie.org.cn ~]#

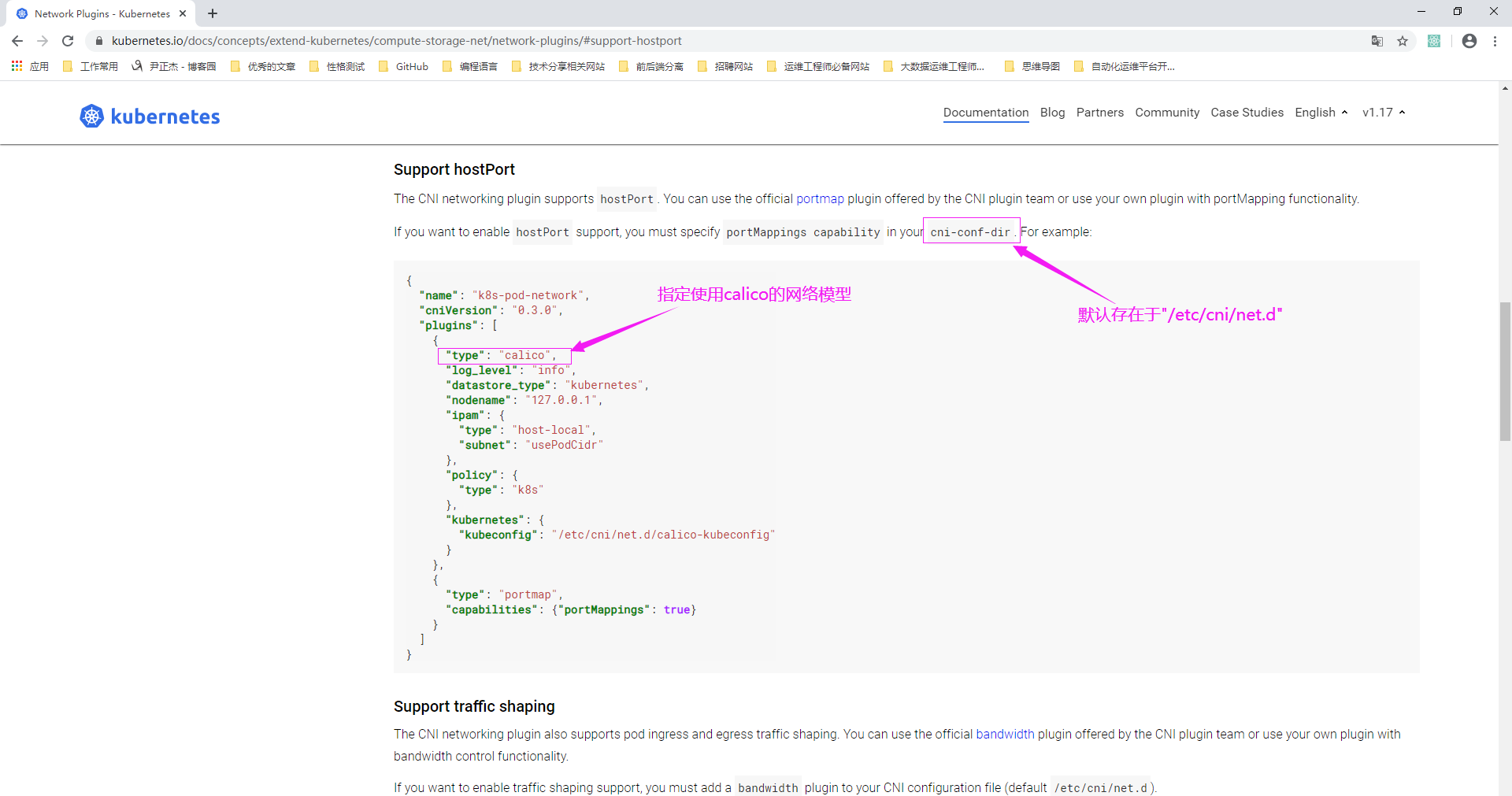

2>. Configuration file when using the calico network model

3>. Configuration file when using the flannel network model (flannel here is built on a VXLAN tunnel, but flannel does not support network policies)

    [root@master200.yinzhengjie.org.cn ~]# ll /etc/cni/net.d/
    total 4
    -rw-r--r-- 1 root root 292 Feb 19 13:19 10-flannel.conflist
    [root@master200.yinzhengjie.org.cn ~]#
    [root@master200.yinzhengjie.org.cn ~]# cat /etc/cni/net.d/10-flannel.conflist
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
    [root@master200.yinzhengjie.org.cn ~]#
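To make the relationship between the conflist and the plugin directory concrete, here is a minimal Python sketch. It parses the configuration shown above and maps each entry's "type" to the binary of the same name under /opt/cni/bin, which is how a CNI runtime locates the plugins it chains. The function name `plugin_chain` is hypothetical and exists only for this illustration.

```python
import json

# The conflist content printed by `cat` above, copied verbatim.
CONFLIST = """
{
  "name": "cbr0",
  "cniVersion": "0.3.1",
  "plugins": [
    {
      "type": "flannel",
      "delegate": {
        "hairpinMode": true,
        "isDefaultGateway": true
      }
    },
    {
      "type": "portmap",
      "capabilities": {
        "portMappings": true
      }
    }
  ]
}
"""

CNI_BIN_DIR = "/opt/cni/bin"  # the directory listed in step 1>


def plugin_chain(conflist_text):
    """Return (network name, plugin binary paths in the order they are called)."""
    conf = json.loads(conflist_text)
    binaries = [f"{CNI_BIN_DIR}/{plugin['type']}" for plugin in conf["plugins"]]
    return conf["name"], binaries


if __name__ == "__main__":
    name, binaries = plugin_chain(CONFLIST)
    print("network:", name)
    for path in binaries:
        print("plugin :", path)
```

Both resulting paths, flannel and portmap, appear in the directory listing from step 1>.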

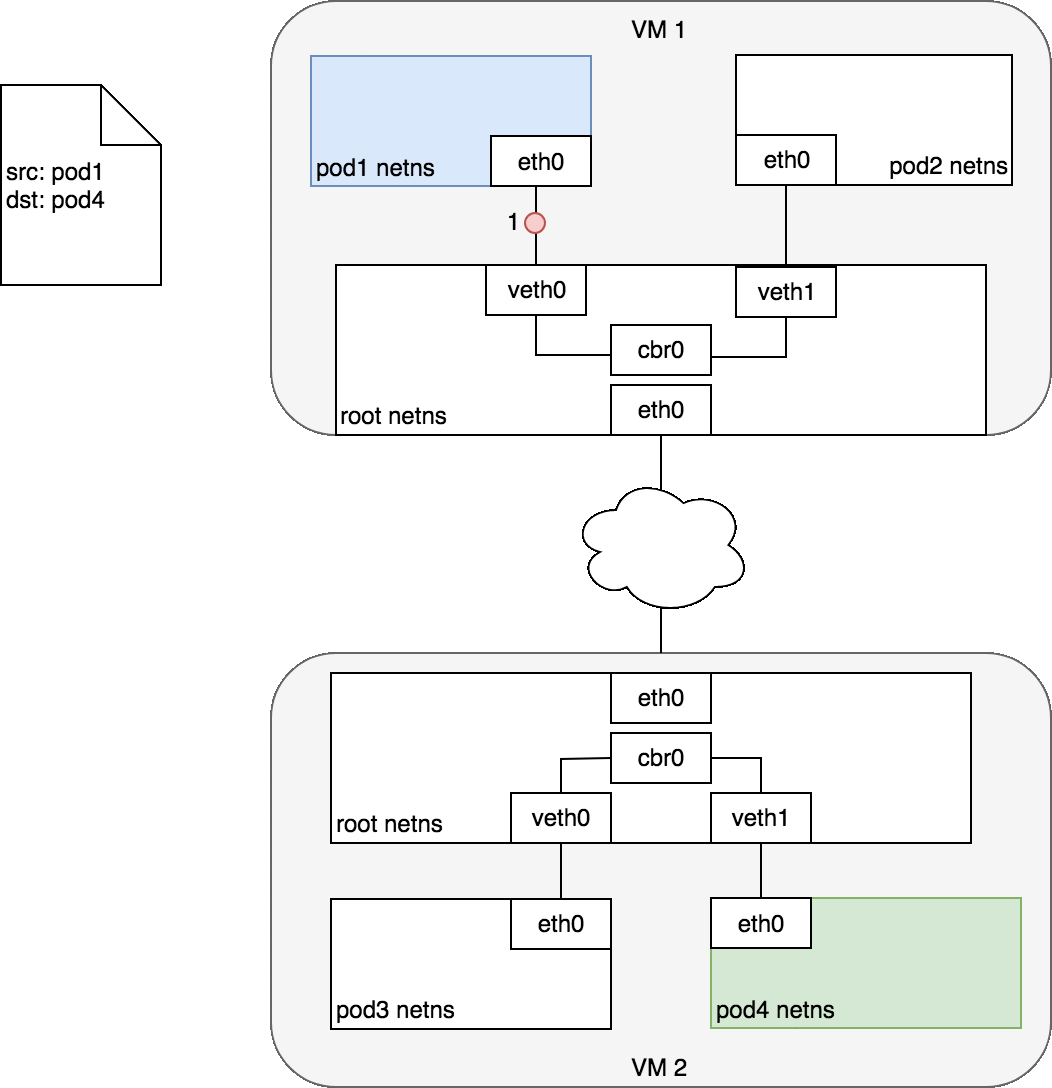

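II. How the flannel Network Model Works

As shown in the figure below (image taken from https://www.jianshu.com/p/3f2401d14c78), pod1 and pod2 run on one node while pod3 and pod4 run on another. We use communication between pod1 and pod4 as the example.

The name cbr0 in the figure is the one we defined in the flannel configuration file, but on the server flannel is actually implemented with two virtual interfaces, cni0 and flannel.1. The working logic is briefly as follows:

(1) The container in Pod1 sends the packet to the cni0 interface;

(2) cni0 then hands it to the flannel.1 virtual interface, which encapsulates the packet and sends it to the host's eth0 interface;

(3) VM1's eth0 sends the packet to VM2's eth0, which passes it on to VM2's flannel.1 virtual interface;

(4) flannel.1 on VM2 decapsulates the packet and sends it to cni0 on VM2;

(5) cni0 on VM2 delivers the packet to the container in Pod4.

flannel supports several common encapsulation mechanisms, which it calls "Backends":

VxLAN: encapsulates Layer 2 frames in UDP using the kernel's VXLAN support. It performs better than the UDP backend and is the usual choice when a tunnel is needed.

UDP: a Layer 4 tunnel in which every packet is encapsulated and decapsulated in user space, so it is slower than VxLAN. It is meant for cases where the Linux kernel does not support VxLAN.

host-gw: no tunnel at all. Each node installs routes to the other nodes' Pod subnets, using the owning node's IP as the gateway. Performance is very good, but it is limited: the nodes need direct Layer 2 connectivity, so it cannot cross routers.

For other backends, see the official flannel documentation: https://github.com/coreos/flannel/blob/master/Documentation/backends.md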
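The five steps can be sketched as a small Python model. This only illustrates the hop order described above and is not flannel code: the node names VM1/VM2 follow the figure, while the per-node Pod subnets and the helper names `node_of` and `hops` are assumptions made up for this sketch.

```python
import ipaddress

# Assumed per-node Pod subnets (hypothetical values for illustration only).
NODE_SUBNETS = {
    "VM1": ipaddress.ip_network("10.244.1.0/24"),
    "VM2": ipaddress.ip_network("10.244.2.0/24"),
}


def node_of(pod_ip):
    """Return the node whose Pod subnet contains pod_ip."""
    addr = ipaddress.ip_address(pod_ip)
    for node, subnet in NODE_SUBNETS.items():
        if addr in subnet:
            return node
    raise ValueError(f"{pod_ip} is not in any known Pod subnet")


def hops(src_ip, dst_ip):
    """List the interfaces a packet crosses between two Pods."""
    src_node, dst_node = node_of(src_ip), node_of(dst_ip)
    if src_node == dst_node:
        # Same node: the cni0 bridge switches the frame locally.
        return [f"{src_node}:cni0"]
    return [
        f"{src_node}:cni0",                     # step (1)
        f"{src_node}:flannel.1 (encapsulate)",  # step (2)
        f"{src_node}:eth0",                     # step (2) -> (3)
        f"{dst_node}:eth0",                     # step (3)
        f"{dst_node}:flannel.1 (decapsulate)",  # step (4)
        f"{dst_node}:cni0",                     # step (5)
    ]


if __name__ == "__main__":
    for hop in hops("10.244.1.2", "10.244.2.5"):
        print(hop)
```

Two Pods on the same node never touch flannel.1; only cross-node traffic pays the encapsulation cost.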

    [root@master200.yinzhengjie.org.cn ~]# ifconfig
    bond0: flags=5187<UP,BROADCAST,RUNNING,MASTER,MULTICAST> mtu 1500
            inet 172.200.1.200 netmask 255.255.248.0 broadcast 172.200.7.255
            ether 00:0c:29:42:2c:27 txqueuelen 1000 (Ethernet)
            RX packets 467483 bytes 58595749 (55.8 MiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 482222 bytes 231824660 (221.0 MiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    bond1: flags=5187<UP,BROADCAST,RUNNING,MASTER,MULTICAST> mtu 1500
            inet 192.200.1.200 netmask 255.255.248.0 broadcast 192.200.7.255
            ether 00:0c:29:42:2c:31 txqueuelen 1000 (Ethernet)
            RX packets 885 bytes 80586 (78.6 KiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 6 bytes 360 (360.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    cni0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450
            inet 10.244.0.1 netmask 255.255.255.0 broadcast 0.0.0.0
            ether 82:62:26:c2:07:27 txqueuelen 1000 (Ethernet)
            RX packets 290087 bytes 16695019 (15.9 MiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 312470 bytes 92797200 (88.4 MiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
            inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255
            ether 02:42:50:ba:84:8a txqueuelen 0 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    eth0: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST> mtu 1500
            ether 00:0c:29:42:2c:27 txqueuelen 1000 (Ethernet)
            RX packets 435889 bytes 56686501 (54.0 MiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 482220 bytes 231824540 (221.0 MiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    eth1: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST> mtu 1500
            ether 00:0c:29:42:2c:31 txqueuelen 1000 (Ethernet)
            RX packets 441 bytes 40242 (39.2 KiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 6 bytes 360 (360.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    eth2: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST> mtu 1500
            ether 00:0c:29:42:2c:27 txqueuelen 1000 (Ethernet)
            RX packets 31594 bytes 1909248 (1.8 MiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 2 bytes 120 (120.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    eth3: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST> mtu 1500
            ether 00:0c:29:42:2c:31 txqueuelen 1000 (Ethernet)
            RX packets 444 bytes 40344 (39.3 KiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450
            inet 10.244.0.0 netmask 255.255.255.255 broadcast 0.0.0.0
            ether 66:f5:c3:b5:f0:39 txqueuelen 0 (Ethernet)
            RX packets 6484 bytes 3418066 (3.2 MiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 7190 bytes 2952751 (2.8 MiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
            inet 127.0.0.1 netmask 255.0.0.0
            loop txqueuelen 1000 (Local Loopback)
            RX packets 10833950 bytes 1745878370 (1.6 GiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 10833950 bytes 1745878370 (1.6 GiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    veth68beb83a: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450
            ether ea:9d:2f:9e:c6:db txqueuelen 0 (Ethernet)
            RX packets 145068 bytes 10379427 (9.8 MiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 156246 bytes 46398922 (44.2 MiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    vethe1876219: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450
            ether 1a:97:a5:41:11:ad txqueuelen 0 (Ethernet)
            RX packets 145019 bytes 10376810 (9.8 MiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 156231 bytes 46398572 (44.2 MiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    [root@master200.yinzhengjie.org.cn ~]#
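In the listing above, bond0 reports mtu 1500 while cni0, flannel.1 and the veth interfaces report mtu 1450. The 50-byte difference matches the per-packet overhead of VXLAN encapsulation over IPv4. The sketch below does the arithmetic; the constant and function names are hypothetical, and the header sizes assume an IPv4 underlay without VLAN tags.

```python
# Bytes added in front of every inner frame by VXLAN over IPv4.
OUTER_IPV4_HEADER = 20   # outer IP header, no options
OUTER_UDP_HEADER = 8     # outer UDP header
VXLAN_HEADER = 8         # VXLAN header carrying the VNI
INNER_ETHERNET = 14      # the encapsulated Layer 2 header


def vxlan_overhead():
    """Total bytes that encapsulation takes out of the underlay MTU."""
    return OUTER_IPV4_HEADER + OUTER_UDP_HEADER + VXLAN_HEADER + INNER_ETHERNET


def overlay_mtu(underlay_mtu):
    """Largest inner IP packet that still fits into one underlay packet."""
    return underlay_mtu - vxlan_overhead()


if __name__ == "__main__":
    print("overhead   :", vxlan_overhead())
    print("overlay MTU:", overlay_mtu(1500))
```

With the underlay MTU of 1500 reported by bond0, the result is the 1450 seen on cni0 and flannel.1.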

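III. Testing How flannel Works

flannel actually shares the host's network namespace. In production, flannel can be deployed either as an operating-system daemon or as a Pod. The latter is recommended because Pod-based deployment and upgrades are both convenient, whereas installing it as a system daemon also requires a separately deployed etcd service and is comparatively harder to manage.

Next, let's verify that the flannel network model really communicates through the host's network namespace.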

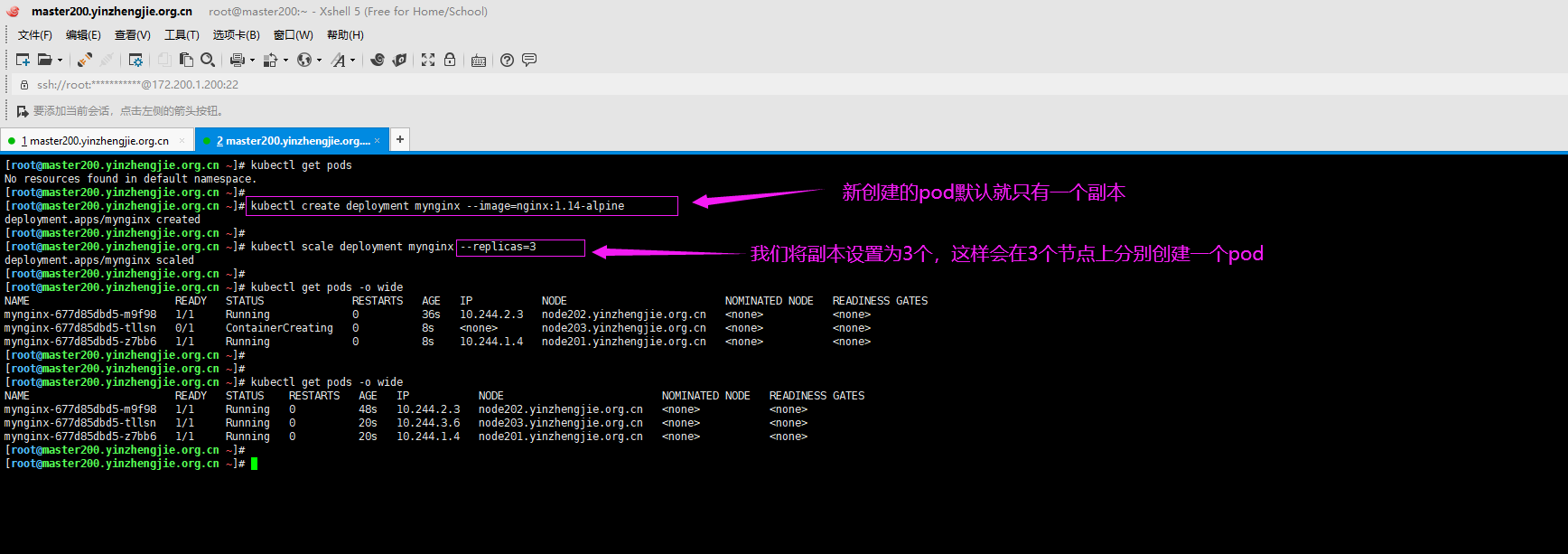

1>. Create Pods on different hosts (the Deployment is scaled to 3 replicas here)

    [root@master200.yinzhengjie.org.cn ~]# kubectl create deployment mynginx --image=nginx:1.14-alpine
    deployment.apps/mynginx created
    [root@master200.yinzhengjie.org.cn ~]#
    [root@master200.yinzhengjie.org.cn ~]# kubectl scale deployment mynginx --replicas=3
    deployment.apps/mynginx scaled
    [root@master200.yinzhengjie.org.cn ~]#

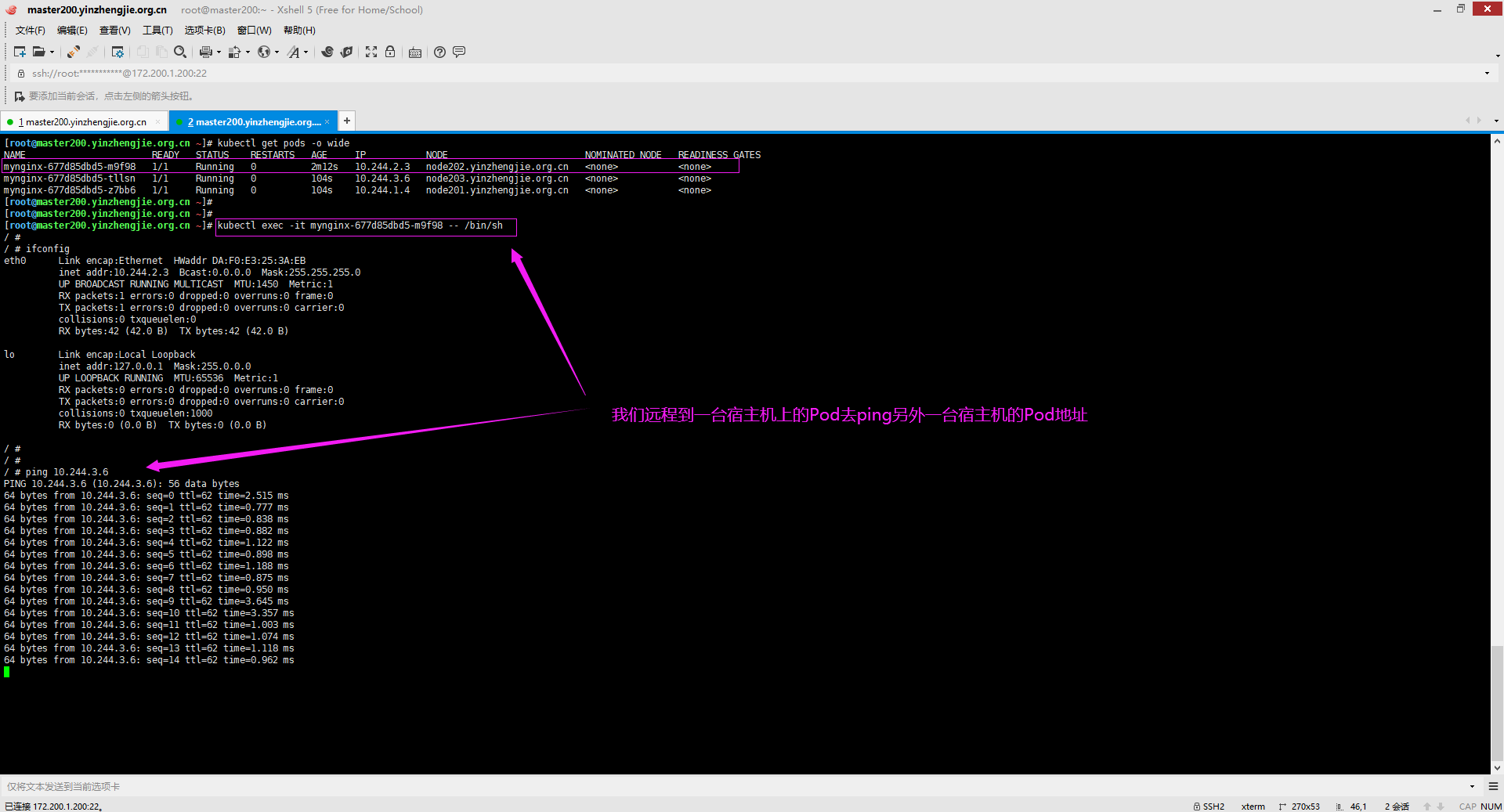

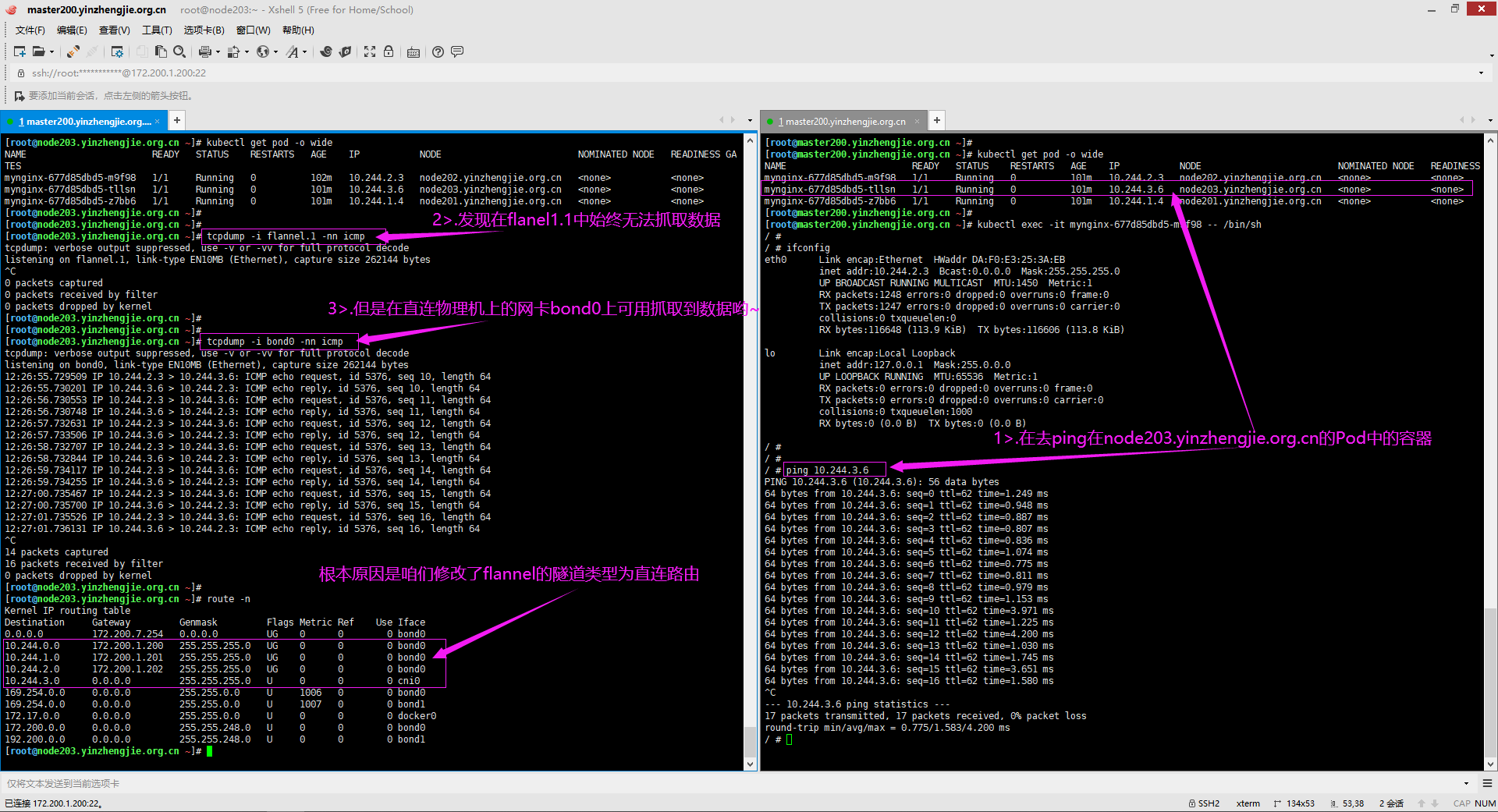

2>. Attach to a container in a Pod on one host and ping a container on another host, as shown below

    [root@master200.yinzhengjie.org.cn ~]# kubectl get pods -o wide
    NAME                       READY   STATUS    RESTARTS   AGE     IP           NODE                         NOMINATED NODE   READINESS GATES
    mynginx-677d85dbd5-m9f98   1/1     Running   0          2m12s   10.244.2.3   node202.yinzhengjie.org.cn   <none>           <none>
    mynginx-677d85dbd5-tllsn   1/1     Running   0          104s    10.244.3.6   node203.yinzhengjie.org.cn   <none>           <none>
    mynginx-677d85dbd5-z7bb6   1/1     Running   0          104s    10.244.1.4   node201.yinzhengjie.org.cn   <none>           <none>
    [root@master200.yinzhengjie.org.cn ~]#
    [root@master200.yinzhengjie.org.cn ~]# kubectl exec -it mynginx-677d85dbd5-m9f98 -- /bin/sh
    / #
    / # ifconfig
    eth0      Link encap:Ethernet HWaddr DA:F0:E3:25:3A:EB
              inet addr:10.244.2.3 Bcast:0.0.0.0 Mask:255.255.255.0
              UP BROADCAST RUNNING MULTICAST MTU:1450 Metric:1
              RX packets:1 errors:0 dropped:0 overruns:0 frame:0
              TX packets:1 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:42 (42.0 B) TX bytes:42 (42.0 B)

    lo        Link encap:Local Loopback
              inet addr:127.0.0.1 Mask:255.0.0.0
              UP LOOPBACK RUNNING MTU:65536 Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

    / #
    / # ping 10.244.3.6
    PING 10.244.3.6 (10.244.3.6): 56 data bytes
    64 bytes from 10.244.3.6: seq=0 ttl=62 time=2.515 ms
    64 bytes from 10.244.3.6: seq=1 ttl=62 time=0.777 ms
    64 bytes from 10.244.3.6: seq=2 ttl=62 time=0.838 ms
    ......

3>. Log in to the host being pinged and capture packets

    [root@master200.yinzhengjie.org.cn ~]# kubectl get pods -o wide
    NAME                       READY   STATUS    RESTARTS   AGE     IP           NODE                         NOMINATED NODE   READINESS
    mynginx-677d85dbd5-m9f98   1/1     Running   0          5m43s   10.244.2.3   node202.yinzhengjie.org.cn   <none>           <none>
    mynginx-677d85dbd5-tllsn   1/1     Running   0          5m15s   10.244.3.6   node203.yinzhengjie.org.cn   <none>           <none>
    mynginx-677d85dbd5-z7bb6   1/1     Running   0          5m15s   10.244.1.4   node201.yinzhengjie.org.cn   <none>           <none>
    [root@master200.yinzhengjie.org.cn ~]#
    [root@master200.yinzhengjie.org.cn ~]# ssh node203.yinzhengjie.org.cn
    root@node203.yinzhengjie.org.cn's password:
    Last login: Tue Feb 4 17:50:35 2020 from 172.200.0.1
    [root@node203.yinzhengjie.org.cn ~]#
    [root@node203.yinzhengjie.org.cn ~]# tcpdump -i cni0 -nn
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on cni0, link-type EN10MB (Ethernet), capture size 262144 bytes
    10:50:11.792795 IP 10.244.2.3 > 10.244.3.6: ICMP echo request, id 3584, seq 60, length 64
    10:50:11.793031 IP 10.244.3.6 > 10.244.2.3: ICMP echo reply, id 3584, seq 60, length 64
    10:50:12.793321 IP 10.244.2.3 > 10.244.3.6: ICMP echo request, id 3584, seq 61, length 64
    10:50:12.793397 IP 10.244.3.6 > 10.244.2.3: ICMP echo reply, id 3584, seq 61, length 64

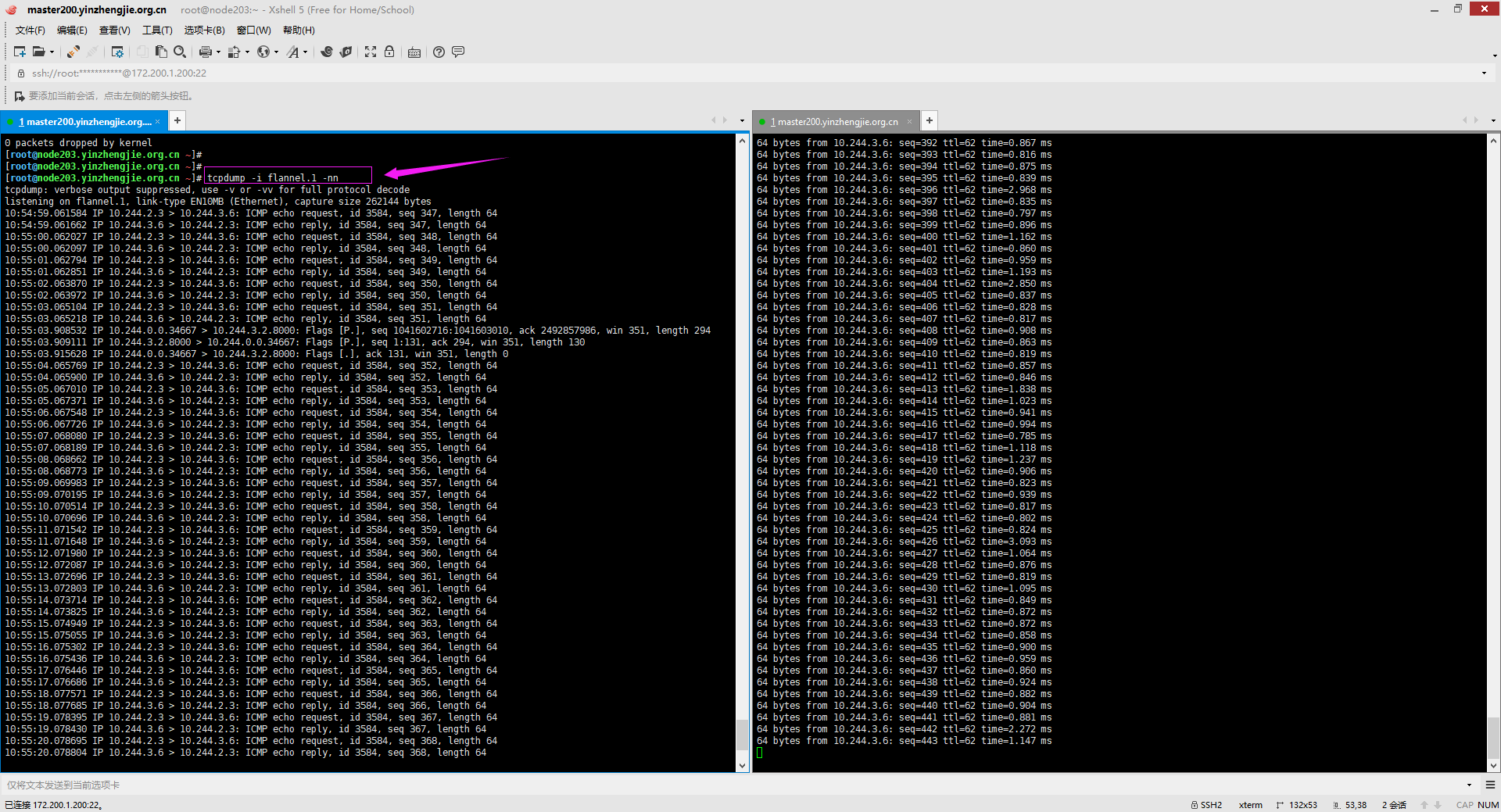

4>. The packets can also be captured on the flannel.1 interface

    [root@node203.yinzhengjie.org.cn ~]# tcpdump -i flannel.1 -nn

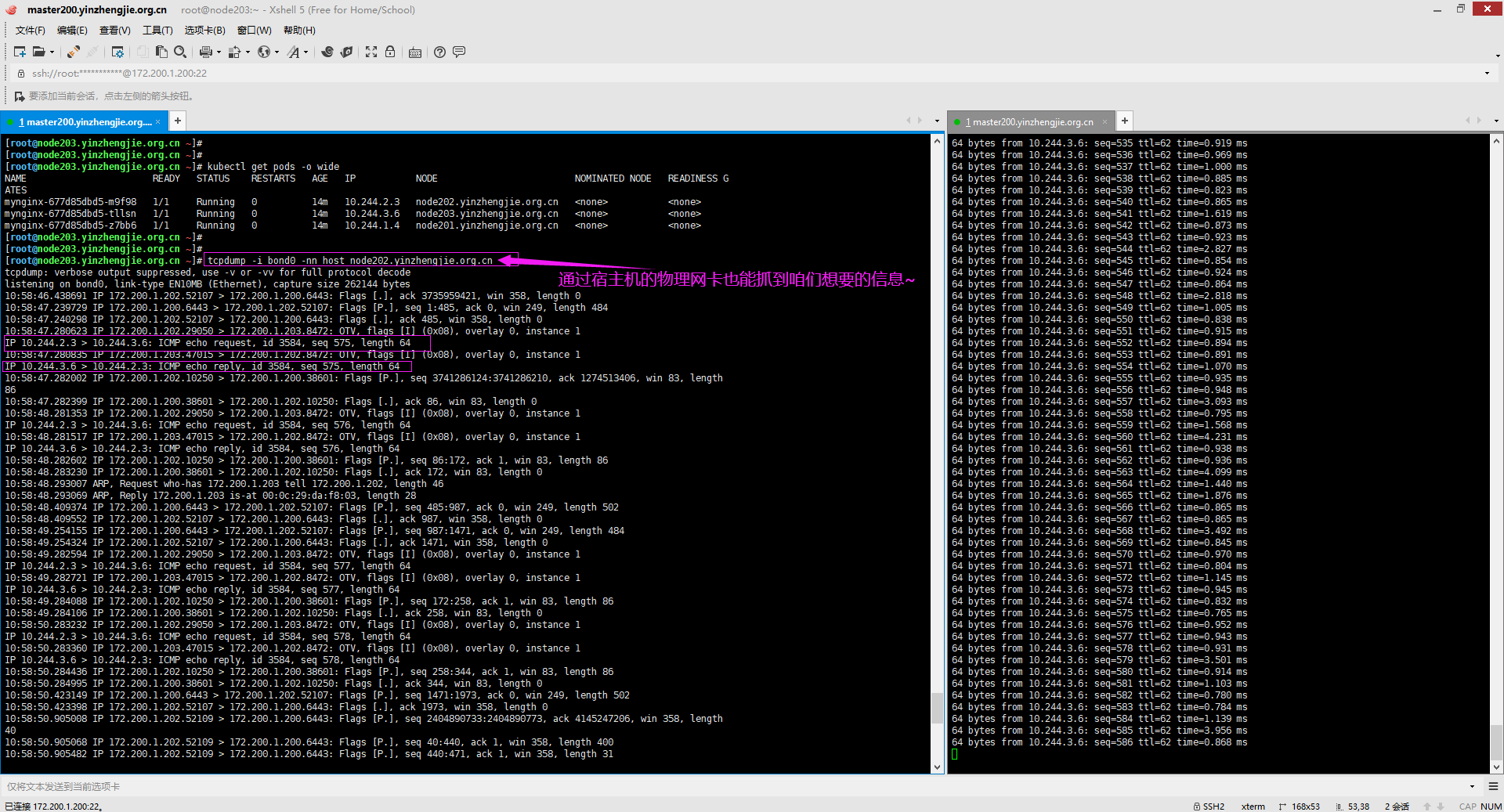

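5>. Capturing on the host's own network interface still shows the packets we are looking for

    [root@node203.yinzhengjie.org.cn ~]# tcpdump -i bond0 -nn host node202.yinzhengjie.org.cn

IV. Testing a Change to the flannel Backend Type (in production, configure this at the very beginning; frequently changing a foundational component such as the network model in production is not recommended)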

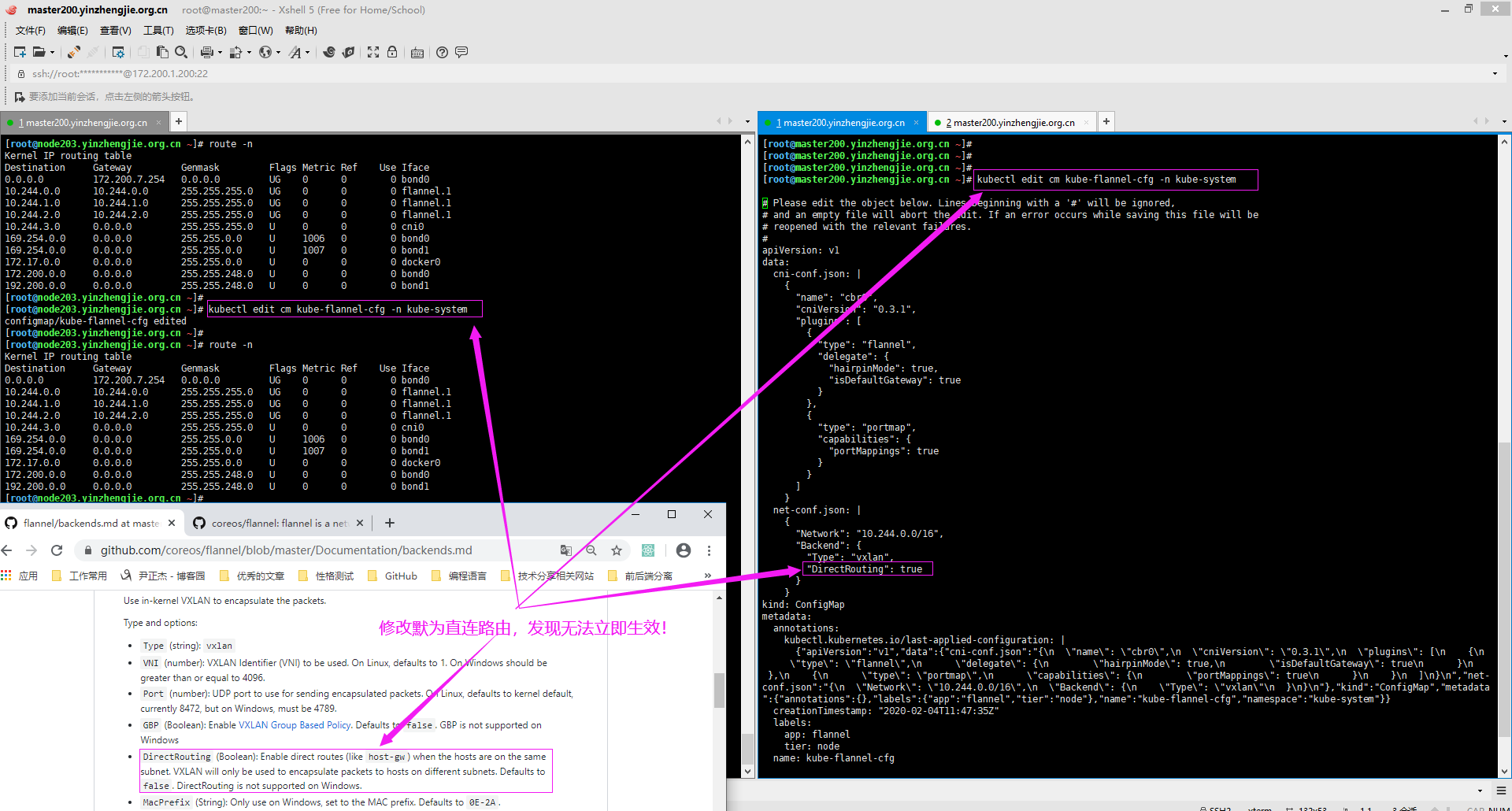

1>. Changes to the flannel configuration do not take effect immediately

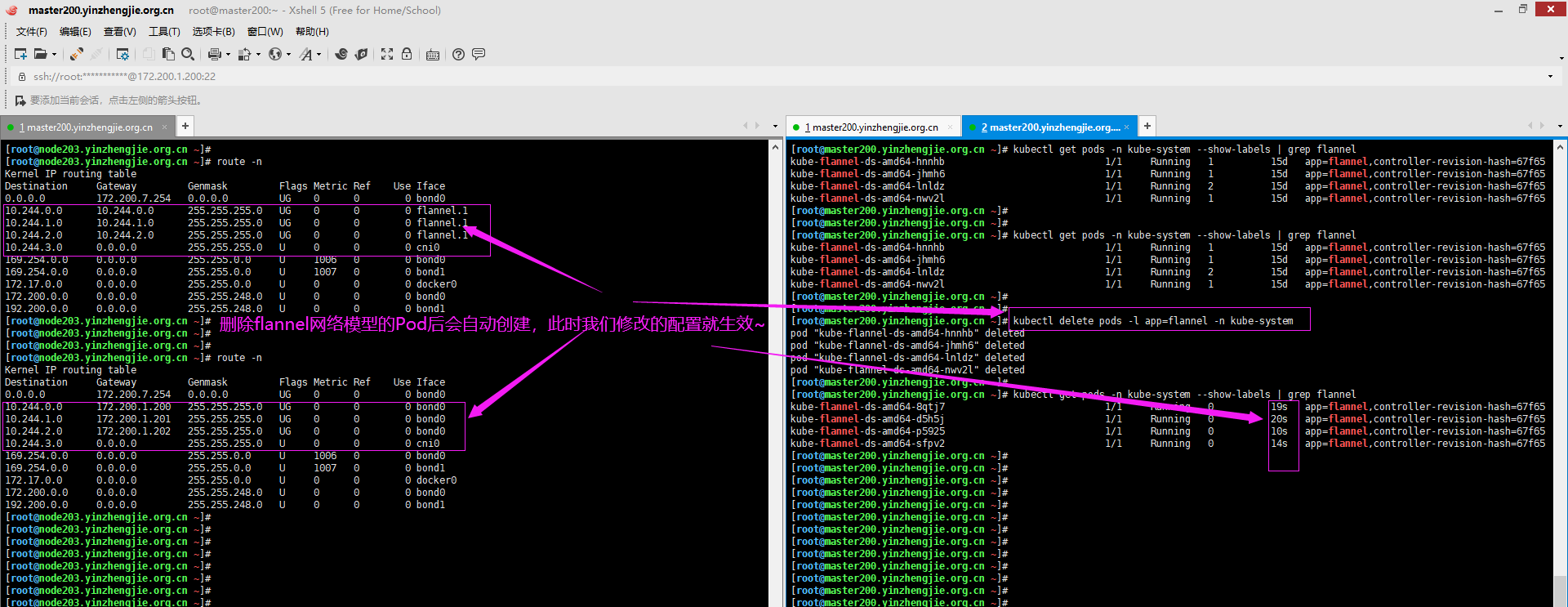

    [root@node203.yinzhengjie.org.cn ~]# route -n
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         172.200.7.254   0.0.0.0         UG    0      0        0 bond0
    10.244.0.0      10.244.0.0      255.255.255.0   UG    0      0        0 flannel.1
    10.244.1.0      10.244.1.0      255.255.255.0   UG    0      0        0 flannel.1
    10.244.2.0      10.244.2.0      255.255.255.0   UG    0      0        0 flannel.1
    10.244.3.0      0.0.0.0         255.255.255.0   U     0      0        0 cni0
    169.254.0.0     0.0.0.0         255.255.0.0     U     1006   0        0 bond0
    169.254.0.0     0.0.0.0         255.255.0.0     U     1007   0        0 bond1
    172.17.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker0
    172.200.0.0     0.0.0.0         255.255.248.0   U     0      0        0 bond0
    192.200.0.0     0.0.0.0         255.255.248.0   U     0      0        0 bond1
    [root@node203.yinzhengjie.org.cn ~]#
    [root@node203.yinzhengjie.org.cn ~]# kubectl edit cm kube-flannel-cfg -n kube-system
    configmap/kube-flannel-cfg edited
    [root@node203.yinzhengjie.org.cn ~]#
    [root@node203.yinzhengjie.org.cn ~]# route -n
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         172.200.7.254   0.0.0.0         UG    0      0        0 bond0
    10.244.0.0      10.244.0.0      255.255.255.0   UG    0      0        0 flannel.1
    10.244.1.0      10.244.1.0      255.255.255.0   UG    0      0        0 flannel.1
    10.244.2.0      10.244.2.0      255.255.255.0   UG    0      0        0 flannel.1
    10.244.3.0      0.0.0.0         255.255.255.0   U     0      0        0 cni0
    169.254.0.0     0.0.0.0         255.255.0.0     U     1006   0        0 bond0
    169.254.0.0     0.0.0.0         255.255.0.0     U     1007   0        0 bond1
    172.17.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker0
    172.200.0.0     0.0.0.0         255.255.248.0   U     0      0        0 bond0
    192.200.0.0     0.0.0.0         255.255.248.0   U     0      0        0 bond1
    [root@node203.yinzhengjie.org.cn ~]#
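The routing table on node203 is identical before and after the ConfigMap edit, so remote Pod subnets are still reached through flannel.1. To read the table more easily, here is a simplified longest-prefix-match lookup in Python over a subset of those routes. It is only a model of how the kernel picks a route, not the kernel's implementation; the `lookup` helper is hypothetical, and the Genmask values have been rewritten as prefix lengths.

```python
import ipaddress

# (destination network, gateway, interface), taken from `route -n` above.
ROUTES = [
    ("0.0.0.0/0",      "172.200.7.254", "bond0"),
    ("10.244.0.0/24",  "10.244.0.0",    "flannel.1"),
    ("10.244.1.0/24",  "10.244.1.0",    "flannel.1"),
    ("10.244.2.0/24",  "10.244.2.0",    "flannel.1"),
    ("10.244.3.0/24",  "0.0.0.0",       "cni0"),
    ("172.200.0.0/21", "0.0.0.0",       "bond0"),
]


def lookup(dst_ip):
    """Return (gateway, interface) of the most specific matching route."""
    addr = ipaddress.ip_address(dst_ip)
    best = None
    for cidr, gateway, iface in ROUTES:
        net = ipaddress.ip_network(cidr)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, gateway, iface)
    # The default route 0.0.0.0/0 matches everything, so best is never None.
    return best[1], best[2]


if __name__ == "__main__":
    for ip in ("10.244.2.3", "10.244.3.6", "172.200.1.200"):
        print(ip, "->", lookup(ip))
```

The Pod on node202 (10.244.2.3) resolves to flannel.1, while the local Pod (10.244.3.6) resolves to cni0, which is why the same ping shows up on both interfaces during the captures in section III.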

2>. Deleting the existing flannel Pods does make the new configuration take effect right away (but the network of the whole K8S cluster can go down while the flannel Pods are being deleted, so this method is not recommended in production; the configuration should be settled in advance when the cluster is deployed)

    [root@master200.yinzhengjie.org.cn ~]# kubectl get pods -n kube-system --show-labels | grep flannel
    kube-flannel-ds-amd64-hnnhb   1/1   Running   1   15d   app=flannel,controller-revision-hash=67f65
    kube-flannel-ds-amd64-jhmh6   1/1   Running   1   15d   app=flannel,controller-revision-hash=67f65
    kube-flannel-ds-amd64-lnldz   1/1   Running   2   15d   app=flannel,controller-revision-hash=67f65
    kube-flannel-ds-amd64-nwv2l   1/1   Running   1   15d   app=flannel,controller-revision-hash=67f65
    [root@master200.yinzhengjie.org.cn ~]#
    [root@master200.yinzhengjie.org.cn ~]# kubectl delete pods -l app=flannel -n kube-system
    pod "kube-flannel-ds-amd64-hnnhb" deleted
    pod "kube-flannel-ds-amd64-jhmh6" deleted
    pod "kube-flannel-ds-amd64-lnldz" deleted
    pod "kube-flannel-ds-amd64-nwv2l" deleted
    [root@master200.yinzhengjie.org.cn ~]#
    [root@master200.yinzhengjie.org.cn ~]# kubectl get pods -n kube-system --show-labels | grep flannel
    kube-flannel-ds-amd64-8qtj7   1/1   Running   0   19s   app=flannel,controller-revision-hash=67f65
    kube-flannel-ds-amd64-d5h5j   1/1   Running   0   20s   app=flannel,controller-revision-hash=67f65
    kube-flannel-ds-amd64-p5925   1/1   Running   0   10s   app=flannel,controller-revision-hash=67f65
    kube-flannel-ds-amd64-sfpv2   1/1   Running   0   14s   app=flannel,controller-revision-hash=67f65
    [root@master200.yinzhengjie.org.cn ~]#
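The text does not show which value was edited in the kube-flannel-cfg ConfigMap. As an assumption, a backend change would touch the "Backend" section of its net-conf.json key, which the recreated flannel Pods read at startup. The Python sketch below renders such a document for the three backends described in section II. The network 10.244.0.0/16 is inferred from the Pod IPs seen earlier, and the helper `render_net_conf` is hypothetical.

```python
import json

# Backend types named in section II; flannel offers others as well.
KNOWN_BACKENDS = {"vxlan", "host-gw", "udp"}


def render_net_conf(network, backend_type):
    """Render a minimal net-conf.json document for the given backend."""
    if backend_type not in KNOWN_BACKENDS:
        raise ValueError(f"unexpected backend type: {backend_type}")
    conf = {
        "Network": network,                # cluster-wide Pod network
        "Backend": {"Type": backend_type}  # the field a backend switch changes
    }
    return json.dumps(conf, indent=2)


if __name__ == "__main__":
    # Assumed Pod network; it matches the 10.244.x.x addresses shown earlier.
    print(render_net_conf("10.244.0.0/16", "host-gw"))
```

Backends accept further options that are left out here; the backends document linked in section II lists them.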

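3>. Simulating Pod-to-Pod communication again, the packet capture looks as shown in the figure below

V. Steps for Using the calico Network Model When Deploying a K8S Cluster with kubeadm

1>. When initializing the cluster, set the Pod network to "192.168.0.0/16" (https://www.cnblogs.com/yinzhengjie/p/12257108.html)

    [root@master200.yinzhengjie.org.cn ~]# kubeadm init --kubernetes-version="v1.17.2" --pod-network-cidr="192.168.0.0/16"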
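Before settling on a Pod CIDR it is worth checking that it does not overlap networks the nodes already use. The Python sketch below runs that check against the two host networks derived from the bond0 and bond1 addresses in section II (172.200.1.200 and 192.200.1.200 with netmask 255.255.248.0). The Service CIDR is an assumption: it is the kubeadm default and applies only if `--service-cidr` was not overridden. The helper `conflicts` is hypothetical.

```python
import ipaddress

POD_CIDR = ipaddress.ip_network("192.168.0.0/16")

IN_USE = [
    ipaddress.ip_network("172.200.0.0/21"),  # bond0 network from section II
    ipaddress.ip_network("192.200.0.0/21"),  # bond1 network from section II
    ipaddress.ip_network("10.96.0.0/12"),    # assumed: kubeadm default Service CIDR
]


def conflicts(pod_cidr, networks):
    """Return every network in `networks` that overlaps pod_cidr."""
    return [net for net in networks if pod_cidr.overlaps(net)]


if __name__ == "__main__":
    clashes = conflicts(POD_CIDR, IN_USE)
    if clashes:
        print("Pod CIDR overlaps:", ", ".join(str(n) for n in clashes))
    else:
        print("no overlap, Pod CIDR is safe to use")
```

192.200.0.0/21 looks similar to 192.168.0.0/16 at a glance, but the two ranges do not overlap.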

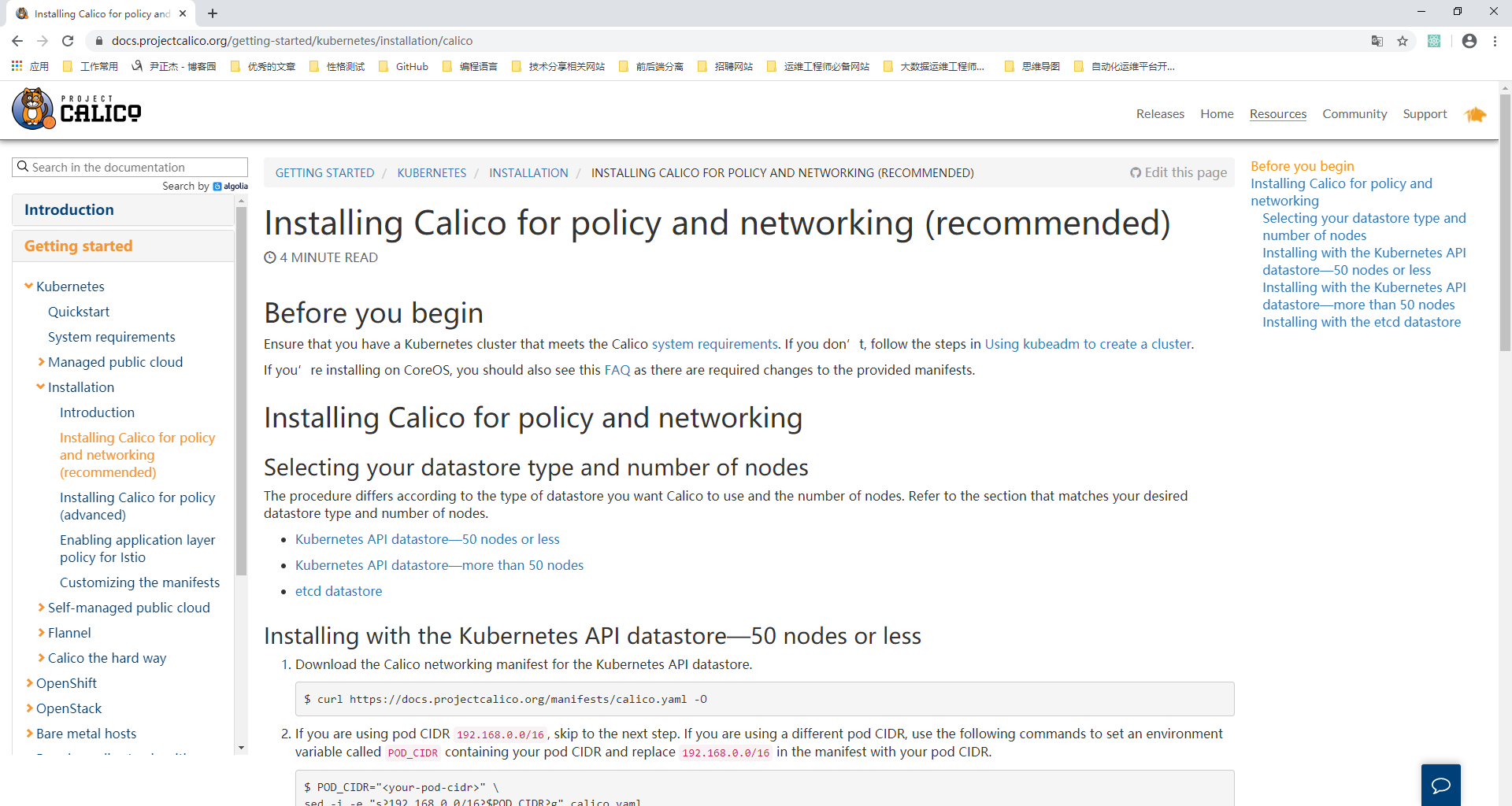

2>. Deploy the calico network model

Recommended reading: https://docs.projectcalico.org/getting-started/kubernetes/installation/calico

3>. Network Policy and its application

Recommended reading: https://docs.projectcalico.org/getting-started/kubernetes/installation/flannel and https://www.cnblogs.com/yinzhengjie/p/12324683.html