Hadoop生态圈-Flume的组件之自定义Sink

作者:尹正杰

版权声明:原创作品,谢绝转载!否则将追究法律责任。

本篇博客主要介绍sink相关的API使用两个小案例,想要了解更多关于API的小技巧请参考官网:http://flume.apache.org/FlumeDeveloperGuide.html#client-sdk

一.自定义Sink的步骤

1>.编写自定义sink

1 /* 2 @author :yinzhengjie 3 Blog:http://www.cnblogs.com/yinzhengjie/tag/Hadoop%E7%94%9F%E6%80%81%E5%9C%88/ 4 EMAIL:y1053419035@qq.com 5 */ 6 package cn.org.yinzhengjie.sink; 7 8 import org.apache.flume.*; 9 import org.apache.flume.conf.Configurable; 10 import org.apache.flume.sink.AbstractSink; 11 12 /** 13 * 自定义sink 14 */ 15 public class MySink extends AbstractSink implements Configurable { 16 17 18 //定义需要获取的key值 19 private String key = "name"; 20 //定义默认值 21 private static final String defaultValue = "yinzhengjie"; 22 //定义从配置文件中获取到的变量 23 private String res; 24 25 26 public void configure(Context context) { 27 //使用context.getString方法获取配置文件的数据,第一个参数key是值配置文件中所存在的key名称,而第二个参数表示当key不存在时,给其赋值默认值为defaultValue。 28 res = context.getString(key, defaultValue); 29 30 } 31 32 public Status process() throws EventDeliveryException { 33 //在开启事务之前状态为Status.READY 34 Status result = Status.READY; 35 //获取channel 36 Channel channel = getChannel(); 37 //得到事物 38 Transaction transaction = channel.getTransaction(); 39 //定义一个事件,它是一行数据的字节数组,是flume发送文件的基本单位 40 Event event = null; 41 42 try { 43 //获取event之前,需要开启事务 44 transaction.begin(); 45 //从channel中取数据 46 event = channel.take(); 47 48 if (event != null) { 49 System.out.println("配置数据 " + res); 50 //得到事件的真实数据 51 System.out.println("真实数据 " + new String(event.getBody())); 52 System.out.println("====================================="); 53 } else { 54 //在channel中数据为空的时候,预示此次会话结束,不再向channel中获取数据 55 result = Status.BACKOFF; 56 } 57 //事务处理成功后,需要提交事务 58 transaction.commit(); 59 } catch (Exception ex) { 60 //事务失败时候,需要回滚事务 61 transaction.rollback(); 62 throw new EventDeliveryException("Failed to log event: " + event, ex); 63 } finally { 64 //事务结束后,需要关闭事务 65 transaction.close(); 66 } 67 return result; 68 } 69 }

2>.打包并将其发送到 /soft/flume/lib下

[yinzhengjie@s101 ~]$ cd /soft/flume/lib/ [yinzhengjie@s101 lib]$ rz [yinzhengjie@s101 lib]$ [yinzhengjie@s101 lib]$ ll | grep MyFlume -rw-r--r-- 1 yinzhengjie yinzhengjie 3398 Jun 20 18:12 MyFlume-1.0-SNAPSHOT.jar [yinzhengjie@s101 lib]$

3>.编写agent的配置文件

[yinzhengjie@s101 ~]$ more /soft/flume/conf/yinzhengjie_mysink.conf # Name the components on this agent a1.sources = r1 a1.sinks = k1 a1.channels = c1 # Describe/configure the source a1.sources.r1.type = netcat a1.sources.r1.bind = localhost a1.sources.r1.port = 8888 # Describe the sink a1.sinks.k1.type = cn.org.yinzhengjie.sink.MySink # Use a channel which buffers events in memory a1.channels.c1.type = memory a1.channels.c1.capacity = 100000 a1.channels.c1.transactionCapacity = 10000 # Bind the source and sink to the channel a1.sources.r1.channels = c1 a1.sinks.k1.channel = c1 [yinzhengjie@s101 ~]$

4>.启动flume并测试

a>.启动agent进程

[yinzhengjie@s101 ~]$ flume-ng agent -f /soft/flume/conf/yinzhengjie_mysink.conf -n a1 Warning: No configuration directory set! Use --conf <dir> to override. Warning: JAVA_HOME is not set! Info: Including Hadoop libraries found via (/soft/hadoop/bin/hadoop) for HDFS access Info: Including HBASE libraries found via (/soft/hbase/bin/hbase) for HBASE access Info: Including Hive libraries found via () for Hive access + exec /soft/jdk/bin/java -Xmx20m -cp '/soft/flume/lib/*:/soft/hadoop-2.7.3/etc/hadoop:/soft/hadoop-2.7.3/share/hadoop/common/lib/*:/soft/hadoop-2.7.3/share/hadoop/common/*:/soft/hadoop-2.7.3/share/hadoop/hdfs:/soft/hadoop-2.7.3/share/hadoop/hdfs/lib/*:/soft/hadoop-2.7.3/share/hadoop/hdfs/*:/soft/hadoop-2.7.3/share/hadoop/yarn/lib/*:/soft/hadoop-2.7.3/share/hadoop/yarn/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/lib/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/*:/contrib/capacity-scheduler/*.jar:/soft/hbase/bin/../conf:/soft/jdk//lib/tools.jar:/soft/hbase/bin/..:/soft/hbase/bin/../lib/activation-1.1.jar:/soft/hbase/bin/../lib/aopalliance-1.0.jar:/soft/hbase/bin/../lib/apacheds-i18n-2.0.0-M15.jar:/soft/hbase/bin/../lib/apacheds-kerberos-codec-2.0.0-M15.jar:/soft/hbase/bin/../lib/api-asn1-api-1.0.0-M20.jar:/soft/hbase/bin/../lib/api-util-1.0.0-M20.jar:/soft/hbase/bin/../lib/asm-3.1.jar:/soft/hbase/bin/../lib/avro-1.7.4.jar:/soft/hbase/bin/../lib/commons-beanutils-1.7.0.jar:/soft/hbase/bin/../lib/commons-beanutils-core-1.8.0.jar:/soft/hbase/bin/../lib/commons-cli-1.2.jar:/soft/hbase/bin/../lib/commons-codec-1.9.jar:/soft/hbase/bin/../lib/commons-collections-3.2.2.jar:/soft/hbase/bin/../lib/commons-compress-1.4.1.jar:/soft/hbase/bin/../lib/commons-configuration-1.6.jar:/soft/hbase/bin/../lib/commons-daemon-1.0.13.jar:/soft/hbase/bin/../lib/commons-digester-1.8.jar:/soft/hbase/bin/../lib/commons-el-1.0.jar:/soft/hbase/bin/../lib/commons-httpclient-3.1.jar:/soft/hbase/bin/../lib/commons-io-2.4.jar:/soft/hbase/bin/../lib/commons-lang-2.6.jar:/soft/hbase/bin/../lib/commons-logging-1.2.jar:/soft/hbase/bin/../lib/commons-math-2.2.jar:/soft/hbase/bin/../lib/commons-math3-3.1.1.jar:/soft/hbase/bin/../lib/commons-net-3.1.jar:/soft/hbase/bin/../lib/disruptor-3.3.0.jar:/soft/hbase/bin/../lib/findbugs-annotations-1.3.9-1.jar:/soft/hbase/bin/../lib/guava-12.0.1.jar:/soft/hbase/bin/../lib/guice-3.0.jar:/soft/hbase/bin/../lib/guice-servlet-3.0.jar:/soft/hbase/bin/../lib/hadoop-annotations-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-auth-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-client-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-hdfs-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-app-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-core-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-jobclient-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-shuffle-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-api-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-client-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-server-common-2.5.1.jar:/soft/hbase/bin/../lib/hbase-annotations-1.2.6.jar:/soft/hbase/bin/../lib/hbase-annotations-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-client-1.2.6.jar:/soft/hbase/bin/../lib/hbase-common-1.2.6.jar:/soft/hbase/bin/../lib/hbase-common-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-examples-1.2.6.jar:/soft/hbase/bin/../lib/hbase-external-blockcache-1.2.6.jar:/soft/hbase/bin/../lib/hbase-hadoop2-compat-1.2.6.jar:/soft/hbase/bin/../lib/hbase-hadoop-compat-1.2.6.jar:/soft/hbase/bin/../lib/hbase-it-1.2.6.jar:/soft/hbase/bin/../lib/hbase-it-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-prefix-tree-1.2.6.jar:/soft/hbase/bin/../lib/hbase-procedure-1.2.6.jar:/soft/hbase/bin/../lib/hbase-protocol-1.2.6.jar:/soft/hbase/bin/../lib/hbase-resource-bundle-1.2.6.jar:/soft/hbase/bin/../lib/hbase-rest-1.2.6.jar:/soft/hbase/bin/../lib/hbase-server-1.2.6.jar:/soft/hbase/bin/../lib/hbase-server-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-shell-1.2.6.jar:/soft/hbase/bin/../lib/hbase-thrift-1.2.6.jar:/soft/hbase/bin/../lib/htrace-core-3.1.0-incubating.jar:/soft/hbase/bin/../lib/httpclient-4.2.5.jar:/soft/hbase/bin/../lib/httpcore-4.4.1.jar:/soft/hbase/bin/../lib/jackson-core-asl-1.9.13.jar:/soft/hbase/bin/../lib/jackson-jaxrs-1.9.13.jar:/soft/hbase/bin/../lib/jackson-mapper-asl-1.9.13.jar:/soft/hbase/bin/../lib/jackson-xc-1.9.13.jar:/soft/hbase/bin/../lib/jamon-runtime-2.4.1.jar:/soft/hbase/bin/../lib/jasper-compiler-5.5.23.jar:/soft/hbase/bin/../lib/jasper-runtime-5.5.23.jar:/soft/hbase/bin/../lib/javax.inject-1.jar:/soft/hbase/bin/../lib/java-xmlbuilder-0.4.jar:/soft/hbase/bin/../lib/jaxb-api-2.2.2.jar:/soft/hbase/bin/../lib/jaxb-impl-2.2.3-1.jar:/soft/hbase/bin/../lib/jcodings-1.0.8.jar:/soft/hbase/bin/../lib/jersey-client-1.9.jar:/soft/hbase/bin/../lib/jersey-core-1.9.jar:/soft/hbase/bin/../lib/jersey-guice-1.9.jar:/soft/hbase/bin/../lib/jersey-json-1.9.jar:/soft/hbase/bin/../lib/jersey-server-1.9.jar:/soft/hbase/bin/../lib/jets3t-0.9.0.jar:/soft/hbase/bin/../lib/jettison-1.3.3.jar:/soft/hbase/bin/../lib/jetty-6.1.26.jar:/soft/hbase/bin/../lib/jetty-sslengine-6.1.26.jar:/soft/hbase/bin/../lib/jetty-util-6.1.26.jar:/soft/hbase/bin/../lib/joni-2.1.2.jar:/soft/hbase/bin/../lib/jruby-complete-1.6.8.jar:/soft/hbase/bin/../lib/jsch-0.1.42.jar:/soft/hbase/bin/../lib/jsp-2.1-6.1.14.jar:/soft/hbase/bin/../lib/jsp-api-2.1-6.1.14.jar:/soft/hbase/bin/../lib/junit-4.12.jar:/soft/hbase/bin/../lib/leveldbjni-all-1.8.jar:/soft/hbase/bin/../lib/libthrift-0.9.3.jar:/soft/hbase/bin/../lib/log4j-1.2.17.jar:/soft/hbase/bin/../lib/metrics-core-2.2.0.jar:/soft/hbase/bin/../lib/MyHbase-1.0-SNAPSHOT.jar:/soft/hbase/bin/../lib/netty-all-4.0.23.Final.jar:/soft/hbase/bin/../lib/paranamer-2.3.jar:/soft/hbase/bin/../lib/phoenix-4.10.0-HBase-1.2-client.jar:/soft/hbase/bin/../lib/protobuf-java-2.5.0.jar:/soft/hbase/bin/../lib/servlet-api-2.5-6.1.14.jar:/soft/hbase/bin/../lib/servlet-api-2.5.jar:/soft/hbase/bin/../lib/slf4j-api-1.7.7.jar:/soft/hbase/bin/../lib/slf4j-log4j12-1.7.5.jar:/soft/hbase/bin/../lib/snappy-java-1.0.4.1.jar:/soft/hbase/bin/../lib/spymemcached-2.11.6.jar:/soft/hbase/bin/../lib/xmlenc-0.52.jar:/soft/hbase/bin/../lib/xz-1.0.jar:/soft/hbase/bin/../lib/zookeeper-3.4.6.jar:/soft/hadoop-2.7.3/etc/hadoop:/soft/hadoop-2.7.3/share/hadoop/common/lib/*:/soft/hadoop-2.7.3/share/hadoop/common/*:/soft/hadoop-2.7.3/share/hadoop/hdfs:/soft/hadoop-2.7.3/share/hadoop/hdfs/lib/*:/soft/hadoop-2.7.3/share/hadoop/hdfs/*:/soft/hadoop-2.7.3/share/hadoop/yarn/lib/*:/soft/hadoop-2.7.3/share/hadoop/yarn/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/lib/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/*::/soft/hive/lib/*:/contrib/capacity-scheduler/*.jar:/conf:/lib/*' -Djava.library.path=:/soft/hadoop-2.7.3/lib/native:/soft/hadoop-2.7.3/lib/native org.apache.flume.node.Application -f /soft/flume/conf/yinzhengjie_mysink.conf -n a1 SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/soft/apache-flume-1.8.0-bin/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hbase-1.2.6/lib/phoenix-4.10.0-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hbase-1.2.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. 18/06/20 18:34:24 INFO node.PollingPropertiesFileConfigurationProvider: Configuration provider starting 18/06/20 18:34:24 INFO node.PollingPropertiesFileConfigurationProvider: Reloading configuration file:/soft/flume/conf/yinzhengjie_mysink.conf 18/06/20 18:34:24 INFO conf.FlumeConfiguration: Added sinks: k1 Agent: a1 18/06/20 18:34:24 INFO conf.FlumeConfiguration: Processing:k1 18/06/20 18:34:24 INFO conf.FlumeConfiguration: Processing:k1 18/06/20 18:34:24 INFO conf.FlumeConfiguration: Post-validation flume configuration contains configuration for agents: [a1] 18/06/20 18:34:24 INFO node.AbstractConfigurationProvider: Creating channels 18/06/20 18:34:24 INFO channel.DefaultChannelFactory: Creating instance of channel c1 type memory 18/06/20 18:34:24 INFO node.AbstractConfigurationProvider: Created channel c1 18/06/20 18:34:24 INFO source.DefaultSourceFactory: Creating instance of source r1, type netcat 18/06/20 18:34:24 INFO sink.DefaultSinkFactory: Creating instance of sink: k1, type: cn.org.yinzhengjie.sink.MySink 18/06/20 18:34:24 INFO node.AbstractConfigurationProvider: Channel c1 connected to [r1, k1] 18/06/20 18:34:24 INFO node.Application: Starting new configuration:{ sourceRunners:{r1=EventDrivenSourceRunner: { source:org.apache.flume.source.NetcatSource{name:r1,state:IDLE} }} sinkRunners:{k1=SinkRunner: { policy:org.apache.flume.sink.DefaultSinkProcessor@618c8387 counterGroup:{ name:null counters:{} } }} channels:{c1=org.apache.flume.channel.MemoryChannel{name: c1}} } 18/06/20 18:34:24 INFO node.Application: Starting Channel c1 18/06/20 18:34:24 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: CHANNEL, name: c1: Successfully registered new MBean. 18/06/20 18:34:24 INFO instrumentation.MonitoredCounterGroup: Component type: CHANNEL, name: c1 started 18/06/20 18:34:24 INFO node.Application: Starting Sink k1 18/06/20 18:34:24 INFO node.Application: Starting Source r1 18/06/20 18:34:24 INFO source.NetcatSource: Source starting 18/06/20 18:34:24 INFO source.NetcatSource: Created serverSocket:sun.nio.ch.ServerSocketChannelImpl[/127.0.0.1:8888]

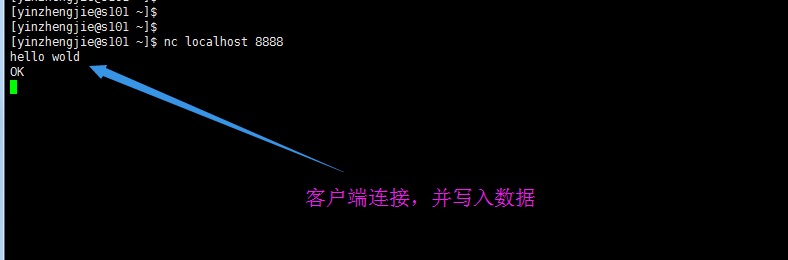

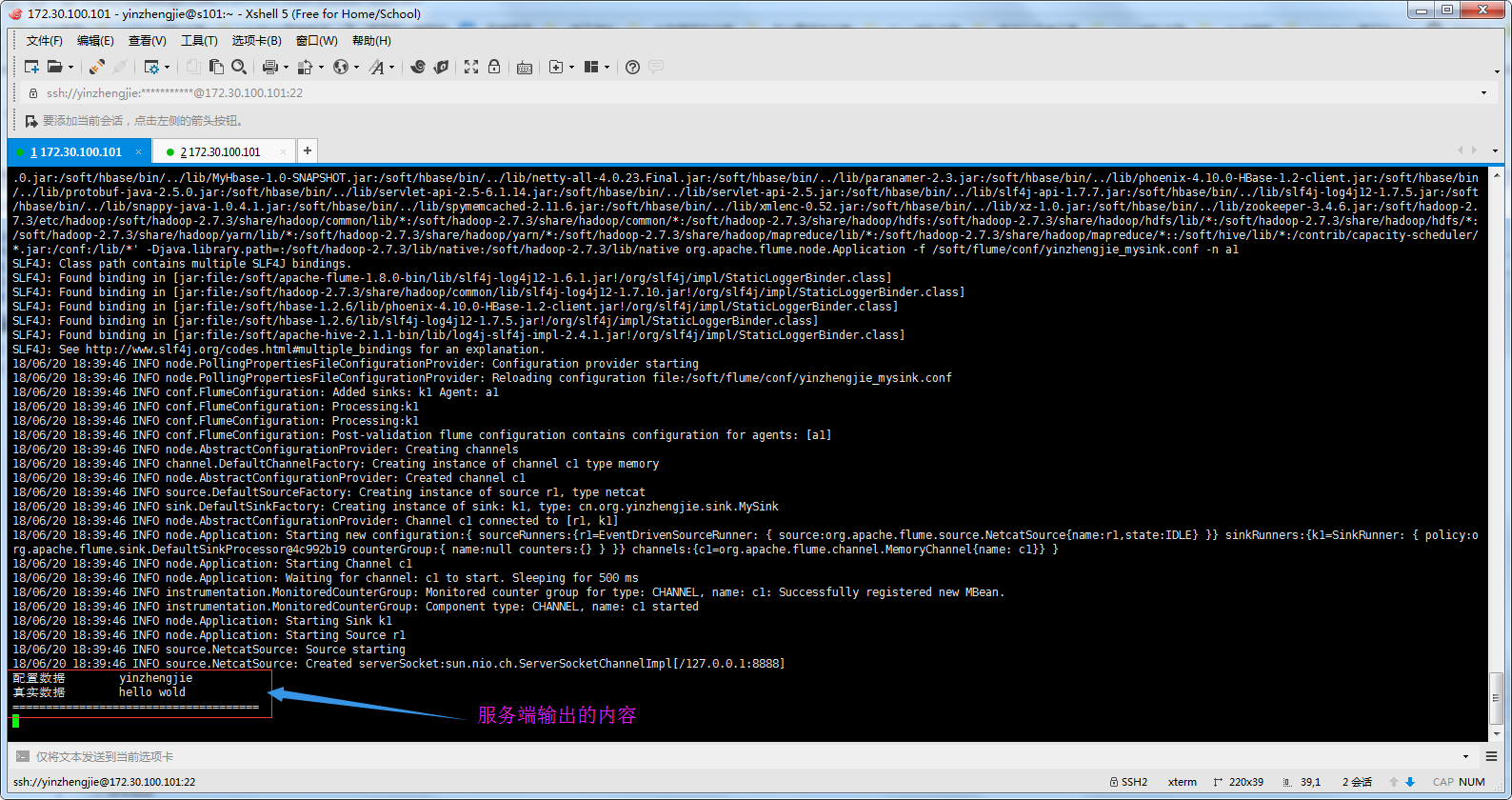

b>.客户端连接(nc)

c>.检查服务端

二.自定义hdfs的sink

1>.编写自定义sink

1 /* 2 @author :yinzhengjie 3 Blog:http://www.cnblogs.com/yinzhengjie/tag/Hadoop%E7%94%9F%E6%80%81%E5%9C%88/ 4 EMAIL:y1053419035@qq.com 5 */ 6 package cn.org.yinzhengjie.sink; 7 8 import org.apache.flume.*; 9 import org.apache.flume.conf.Configurable; 10 import org.apache.flume.sink.AbstractSink; 11 import org.apache.hadoop.conf.Configuration; 12 import org.apache.hadoop.fs.FSDataOutputStream; 13 import org.apache.hadoop.fs.FileSystem; 14 import org.apache.hadoop.fs.Path; 15 16 17 /** 18 * 重写hdfs Sink 19 * 20 * 自定义路径 //context.getString("path"...); 21 * 22 */ 23 24 public class HdfsSink extends AbstractSink implements Configurable { 25 //定义需要写入到hdfs的路径 26 private String path = "path"; 27 //定义默认的写入路径 28 private static final String defaultPath = "/yinzhengjie/1.txt"; 29 //定义一个key为写入用户 30 private String username = "user"; 31 //定义默认的写入用户 32 private static final String defaultUser = "yinzhengjie"; 33 34 private String HdfsPath; 35 private String HdfsUser; 36 37 38 //configure方法主要是获取值,也就是为上面的HdfsPath和HdfsUser赋初值 39 public void configure(Context context) { 40 //获取到hdfs的路径 41 HdfsPath = context.getString(path, defaultPath); 42 //获取到写入用户 43 HdfsUser = context.getString(username,defaultUser); 44 45 } 46 47 //process方法主要负责具体的业务逻辑 48 public Status process() throws EventDeliveryException { 49 //在开启事务之前状态为Status.READY 50 Status result = Status.READY; 51 //获取channel 52 Channel channel = getChannel(); 53 //得到事物 54 Transaction transaction = channel.getTransaction(); 55 //定义一个事件,它是一行数据的字节数组,是flume发送文件的基本单位 56 Event event = null; 57 58 try { 59 //获取event之前,需要开启事务 60 transaction.begin(); 61 //从channel中取数据 62 event = channel.take(); 63 64 if (event != null) { 65 //设置写入hdfs的用户 66 System.setProperty("HADOOP_USER_NAME",HdfsUser); 67 Configuration conf = new Configuration(); 68 FileSystem fs = FileSystem.get(conf); 69 Path p2 = new Path(HdfsPath); 70 //如果路径存在就以追加的形式写入 71 if(fs.exists(p2)){ 72 FSDataOutputStream fos = fs.append(p2); 73 fos.write(event.getBody()); 74 fos.write(" ".getBytes()); 75 fos.close(); 76 77 } 78 //如果路径不存在就先创建该文件在写入数据 79 else { 80 FSDataOutputStream fos = fs.create(p2); 81 fos.write(event.getBody()); 82 fos.write(" ".getBytes()); 83 fos.close(); 84 } 85 } else { 86 //在channel中数据为空的时候,预示此次会话结束,不再向channel中获取数据 87 result = Status.BACKOFF; 88 } 89 //事务处理成功后,需要提交事务 90 transaction.commit(); 91 } catch (Exception ex) { 92 //事务失败时候,需要回滚事务 93 transaction.rollback(); 94 throw new EventDeliveryException("Failed to log event: " + event, ex); 95 } finally { 96 //事务结束后,需要关闭事务 97 transaction.close(); 98 } 99 return result; 100 } 101 }

2>.打包并将其发送到 /soft/flume/lib下

[yinzhengjie@s101 lib]$ pwd /soft/flume/lib [yinzhengjie@s101 lib]$ ll | grep MyFlume -rw-r--r-- 1 yinzhengjie yinzhengjie 3401 Jun 20 18:38 MyFlume-1.0-SNAPSHOT.jar [yinzhengjie@s101 lib]$ [yinzhengjie@s101 lib]$ rm -rf MyFlume-1.0-SNAPSHOT.jar [yinzhengjie@s101 lib]$ [yinzhengjie@s101 lib]$ rz [yinzhengjie@s101 lib]$ [yinzhengjie@s101 lib]$ ll | grep MyFlume -rw-r--r-- 1 yinzhengjie yinzhengjie 5231 Jun 20 18:53 MyFlume-1.0-SNAPSHOT.jar [yinzhengjie@s101 lib]$ [yinzhengjie@s101 lib]$

3>.编写agent的配置文件

[yinzhengjie@s101 ~]$ more /soft/flume/conf/yinzhengjie_hdfssink.conf # Name the components on this agent a1.sources = r1 a1.sinks = k1 a1.channels = c1 # Describe/configure the source a1.sources.r1.type = netcat a1.sources.r1.bind = localhost a1.sources.r1.port = 8888 # Describe the sink a1.sinks.k1.type = cn.org.yinzhengjie.sink.HdfsSink a1.sinks.k1.user = yinzhengjie a1.sinks.k1.path = /yinzhengjie/file.txt # Use a channel which buffers events in memory a1.channels.c1.type = memory a1.channels.c1.capacity = 100000 a1.channels.c1.transactionCapacity = 10000 # Bind the source and sink to the channel a1.sources.r1.channels = c1 a1.sinks.k1.channel = c1 [yinzhengjie@s101 ~]$

4>.启动flume并测试

a>.启动hdfs服务

[yinzhengjie@s101 ~]$ more `which xzk.sh` #!/bin/bash #@author :yinzhengjie #blog:http://www.cnblogs.com/yinzhengjie #EMAIL:y1053419035@qq.com #判断用户是否传参 if [ $# -ne 1 ];then echo "无效参数,用法为: $0 {start|stop|restart|status}" exit fi #获取用户输入的命令 cmd=$1 #定义函数功能 function zookeeperManger(){ case $cmd in start) echo "启动服务" remoteExecution start ;; stop) echo "停止服务" remoteExecution stop ;; restart) echo "重启服务" remoteExecution restart ;; status) echo "查看状态" remoteExecution status ;; *) echo "无效参数,用法为: $0 {start|stop|restart|status}" ;; esac } #定义执行的命令 function remoteExecution(){ for (( i=102 ; i<=104 ; i++ )) ; do tput setaf 2 echo ========== s$i zkServer.sh $1 ================ tput setaf 9 ssh s$i "source /etc/profile ; zkServer.sh $1" done } #调用函数 zookeeperManger [yinzhengjie@s101 ~]$ [yinzhengjie@s101 ~]$ [yinzhengjie@s101 ~]$ xzk.sh start 启动服务 ========== s102 zkServer.sh start ================ ZooKeeper JMX enabled by default Using config: /soft/zk/bin/../conf/zoo.cfg Starting zookeeper ... STARTED ========== s103 zkServer.sh start ================ ZooKeeper JMX enabled by default Using config: /soft/zk/bin/../conf/zoo.cfg Starting zookeeper ... STARTED ========== s104 zkServer.sh start ================ ZooKeeper JMX enabled by default Using config: /soft/zk/bin/../conf/zoo.cfg Starting zookeeper ... STARTED [yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ start-dfs.sh SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] Starting namenodes on [s101 s105] s101: starting namenode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-namenode-s101.out s105: starting namenode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-namenode-s105.out s102: starting datanode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-datanode-s102.out s104: starting datanode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-datanode-s104.out s105: starting datanode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-datanode-s105.out s103: starting datanode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-datanode-s103.out Starting journal nodes [s102 s103 s104] s102: starting journalnode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-journalnode-s102.out s104: starting journalnode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-journalnode-s104.out s103: starting journalnode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-journalnode-s103.out SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] Starting ZK Failover Controllers on NN hosts [s101 s105] s101: starting zkfc, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-zkfc-s101.out s105: starting zkfc, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-zkfc-s105.out [yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ more `which xcall.sh` #!/bin/bash #@author :yinzhengjie #blog:http://www.cnblogs.com/yinzhengjie #EMAIL:y1053419035@qq.com #判断用户是否传参 if [ $# -lt 1 ];then echo "请输入参数" exit fi #获取用户输入的命令 cmd=$@ for (( i=101;i<=105;i++ )) do #使终端变绿色 tput setaf 2 echo ============= s$i $cmd ============ #使终端变回原来的颜色,即白灰色 tput setaf 7 #远程执行命令 ssh s$i $cmd #判断命令是否执行成功 if [ $? == 0 ];then echo "命令执行成功" fi done [yinzhengjie@s101 ~]$ [yinzhengjie@s101 ~]$ [yinzhengjie@s101 ~]$ xcall.sh jps ============= s101 jps ============ 5185 NameNode 5500 DFSZKFailoverController 5581 Jps 命令执行成功 ============= s102 jps ============ 3075 Jps 2900 DataNode 3000 JournalNode 2841 QuorumPeerMain 命令执行成功 ============= s103 jps ============ 3060 Jps 2821 QuorumPeerMain 2885 DataNode 2985 JournalNode 命令执行成功 ============= s104 jps ============ 3057 Jps 2882 DataNode 2982 JournalNode 2823 QuorumPeerMain 命令执行成功 ============= s105 jps ============ 7808 DFSZKFailoverController 7658 DataNode 7580 NameNode 7868 Jps 命令执行成功 [yinzhengjie@s101 ~]$

b>.启动agent进程

[yinzhengjie@s101 ~]$ flume-ng agent -f /soft/flume/conf/yinzhengjie_hdfssink.conf -n a1 Warning: No configuration directory set! Use --conf <dir> to override. Warning: JAVA_HOME is not set! Info: Including Hadoop libraries found via (/soft/hadoop/bin/hadoop) for HDFS access Info: Including HBASE libraries found via (/soft/hbase/bin/hbase) for HBASE access Info: Including Hive libraries found via () for Hive access + exec /soft/jdk/bin/java -Xmx20m -cp '/soft/flume/lib/*:/soft/hadoop-2.7.3/etc/hadoop:/soft/hadoop-2.7.3/share/hadoop/common/lib/*:/soft/hadoop-2.7.3/share/hadoop/common/*:/soft/hadoop-2.7.3/share/hadoop/hdfs:/soft/hadoop-2.7.3/share/hadoop/hdfs/lib/*:/soft/hadoop-2.7.3/share/hadoop/hdfs/*:/soft/hadoop-2.7.3/share/hadoop/yarn/lib/*:/soft/hadoop-2.7.3/share/hadoop/yarn/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/lib/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/*:/contrib/capacity-scheduler/*.jar:/soft/hbase/bin/../conf:/soft/jdk//lib/tools.jar:/soft/hbase/bin/..:/soft/hbase/bin/../lib/activation-1.1.jar:/soft/hbase/bin/../lib/aopalliance-1.0.jar:/soft/hbase/bin/../lib/apacheds-i18n-2.0.0-M15.jar:/soft/hbase/bin/../lib/apacheds-kerberos-codec-2.0.0-M15.jar:/soft/hbase/bin/../lib/api-asn1-api-1.0.0-M20.jar:/soft/hbase/bin/../lib/api-util-1.0.0-M20.jar:/soft/hbase/bin/../lib/asm-3.1.jar:/soft/hbase/bin/../lib/avro-1.7.4.jar:/soft/hbase/bin/../lib/commons-beanutils-1.7.0.jar:/soft/hbase/bin/../lib/commons-beanutils-core-1.8.0.jar:/soft/hbase/bin/../lib/commons-cli-1.2.jar:/soft/hbase/bin/../lib/commons-codec-1.9.jar:/soft/hbase/bin/../lib/commons-collections-3.2.2.jar:/soft/hbase/bin/../lib/commons-compress-1.4.1.jar:/soft/hbase/bin/../lib/commons-configuration-1.6.jar:/soft/hbase/bin/../lib/commons-daemon-1.0.13.jar:/soft/hbase/bin/../lib/commons-digester-1.8.jar:/soft/hbase/bin/../lib/commons-el-1.0.jar:/soft/hbase/bin/../lib/commons-httpclient-3.1.jar:/soft/hbase/bin/../lib/commons-io-2.4.jar:/soft/hbase/bin/../lib/commons-lang-2.6.jar:/soft/hbase/bin/../lib/commons-logging-1.2.jar:/soft/hbase/bin/../lib/commons-math-2.2.jar:/soft/hbase/bin/../lib/commons-math3-3.1.1.jar:/soft/hbase/bin/../lib/commons-net-3.1.jar:/soft/hbase/bin/../lib/disruptor-3.3.0.jar:/soft/hbase/bin/../lib/findbugs-annotations-1.3.9-1.jar:/soft/hbase/bin/../lib/guava-12.0.1.jar:/soft/hbase/bin/../lib/guice-3.0.jar:/soft/hbase/bin/../lib/guice-servlet-3.0.jar:/soft/hbase/bin/../lib/hadoop-annotations-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-auth-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-client-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-hdfs-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-app-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-core-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-jobclient-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-shuffle-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-api-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-client-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-server-common-2.5.1.jar:/soft/hbase/bin/../lib/hbase-annotations-1.2.6.jar:/soft/hbase/bin/../lib/hbase-annotations-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-client-1.2.6.jar:/soft/hbase/bin/../lib/hbase-common-1.2.6.jar:/soft/hbase/bin/../lib/hbase-common-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-examples-1.2.6.jar:/soft/hbase/bin/../lib/hbase-external-blockcache-1.2.6.jar:/soft/hbase/bin/../lib/hbase-hadoop2-compat-1.2.6.jar:/soft/hbase/bin/../lib/hbase-hadoop-compat-1.2.6.jar:/soft/hbase/bin/../lib/hbase-it-1.2.6.jar:/soft/hbase/bin/../lib/hbase-it-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-prefix-tree-1.2.6.jar:/soft/hbase/bin/../lib/hbase-procedure-1.2.6.jar:/soft/hbase/bin/../lib/hbase-protocol-1.2.6.jar:/soft/hbase/bin/../lib/hbase-resource-bundle-1.2.6.jar:/soft/hbase/bin/../lib/hbase-rest-1.2.6.jar:/soft/hbase/bin/../lib/hbase-server-1.2.6.jar:/soft/hbase/bin/../lib/hbase-server-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-shell-1.2.6.jar:/soft/hbase/bin/../lib/hbase-thrift-1.2.6.jar:/soft/hbase/bin/../lib/htrace-core-3.1.0-incubating.jar:/soft/hbase/bin/../lib/httpclient-4.2.5.jar:/soft/hbase/bin/../lib/httpcore-4.4.1.jar:/soft/hbase/bin/../lib/jackson-core-asl-1.9.13.jar:/soft/hbase/bin/../lib/jackson-jaxrs-1.9.13.jar:/soft/hbase/bin/../lib/jackson-mapper-asl-1.9.13.jar:/soft/hbase/bin/../lib/jackson-xc-1.9.13.jar:/soft/hbase/bin/../lib/jamon-runtime-2.4.1.jar:/soft/hbase/bin/../lib/jasper-compiler-5.5.23.jar:/soft/hbase/bin/../lib/jasper-runtime-5.5.23.jar:/soft/hbase/bin/../lib/javax.inject-1.jar:/soft/hbase/bin/../lib/java-xmlbuilder-0.4.jar:/soft/hbase/bin/../lib/jaxb-api-2.2.2.jar:/soft/hbase/bin/../lib/jaxb-impl-2.2.3-1.jar:/soft/hbase/bin/../lib/jcodings-1.0.8.jar:/soft/hbase/bin/../lib/jersey-client-1.9.jar:/soft/hbase/bin/../lib/jersey-core-1.9.jar:/soft/hbase/bin/../lib/jersey-guice-1.9.jar:/soft/hbase/bin/../lib/jersey-json-1.9.jar:/soft/hbase/bin/../lib/jersey-server-1.9.jar:/soft/hbase/bin/../lib/jets3t-0.9.0.jar:/soft/hbase/bin/../lib/jettison-1.3.3.jar:/soft/hbase/bin/../lib/jetty-6.1.26.jar:/soft/hbase/bin/../lib/jetty-sslengine-6.1.26.jar:/soft/hbase/bin/../lib/jetty-util-6.1.26.jar:/soft/hbase/bin/../lib/joni-2.1.2.jar:/soft/hbase/bin/../lib/jruby-complete-1.6.8.jar:/soft/hbase/bin/../lib/jsch-0.1.42.jar:/soft/hbase/bin/../lib/jsp-2.1-6.1.14.jar:/soft/hbase/bin/../lib/jsp-api-2.1-6.1.14.jar:/soft/hbase/bin/../lib/junit-4.12.jar:/soft/hbase/bin/../lib/leveldbjni-all-1.8.jar:/soft/hbase/bin/../lib/libthrift-0.9.3.jar:/soft/hbase/bin/../lib/log4j-1.2.17.jar:/soft/hbase/bin/../lib/metrics-core-2.2.0.jar:/soft/hbase/bin/../lib/MyHbase-1.0-SNAPSHOT.jar:/soft/hbase/bin/../lib/netty-all-4.0.23.Final.jar:/soft/hbase/bin/../lib/paranamer-2.3.jar:/soft/hbase/bin/../lib/phoenix-4.10.0-HBase-1.2-client.jar:/soft/hbase/bin/../lib/protobuf-java-2.5.0.jar:/soft/hbase/bin/../lib/servlet-api-2.5-6.1.14.jar:/soft/hbase/bin/../lib/servlet-api-2.5.jar:/soft/hbase/bin/../lib/slf4j-api-1.7.7.jar:/soft/hbase/bin/../lib/slf4j-log4j12-1.7.5.jar:/soft/hbase/bin/../lib/snappy-java-1.0.4.1.jar:/soft/hbase/bin/../lib/spymemcached-2.11.6.jar:/soft/hbase/bin/../lib/xmlenc-0.52.jar:/soft/hbase/bin/../lib/xz-1.0.jar:/soft/hbase/bin/../lib/zookeeper-3.4.6.jar:/soft/hadoop-2.7.3/etc/hadoop:/soft/hadoop-2.7.3/share/hadoop/common/lib/*:/soft/hadoop-2.7.3/share/hadoop/common/*:/soft/hadoop-2.7.3/share/hadoop/hdfs:/soft/hadoop-2.7.3/share/hadoop/hdfs/lib/*:/soft/hadoop-2.7.3/share/hadoop/hdfs/*:/soft/hadoop-2.7.3/share/hadoop/yarn/lib/*:/soft/hadoop-2.7.3/share/hadoop/yarn/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/lib/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/*::/soft/hive/lib/*:/contrib/capacity-scheduler/*.jar:/conf:/lib/*' -Djava.library.path=:/soft/hadoop-2.7.3/lib/native:/soft/hadoop-2.7.3/lib/native org.apache.flume.node.Application -f /soft/flume/conf/yinzhengjie_hdfssink.conf -n a1 SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/soft/apache-flume-1.8.0-bin/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hbase-1.2.6/lib/phoenix-4.10.0-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hbase-1.2.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. 18/06/20 18:58:35 INFO node.PollingPropertiesFileConfigurationProvider: Configuration provider starting 18/06/20 18:58:35 INFO node.PollingPropertiesFileConfigurationProvider: Reloading configuration file:/soft/flume/conf/yinzhengjie_hdfssink.conf 18/06/20 18:58:35 INFO conf.FlumeConfiguration: Added sinks: k1 Agent: a1 18/06/20 18:58:35 INFO conf.FlumeConfiguration: Processing:k1 18/06/20 18:58:35 INFO conf.FlumeConfiguration: Processing:k1 18/06/20 18:58:35 INFO conf.FlumeConfiguration: Processing:k1 18/06/20 18:58:35 INFO conf.FlumeConfiguration: Processing:k1 18/06/20 18:58:35 INFO conf.FlumeConfiguration: Post-validation flume configuration contains configuration for agents: [a1] 18/06/20 18:58:35 INFO node.AbstractConfigurationProvider: Creating channels 18/06/20 18:58:35 INFO channel.DefaultChannelFactory: Creating instance of channel c1 type memory 18/06/20 18:58:35 INFO node.AbstractConfigurationProvider: Created channel c1 18/06/20 18:58:35 INFO source.DefaultSourceFactory: Creating instance of source r1, type netcat 18/06/20 18:58:35 INFO sink.DefaultSinkFactory: Creating instance of sink: k1, type: cn.org.yinzhengjie.sink.HdfsSink 18/06/20 18:58:35 INFO node.AbstractConfigurationProvider: Channel c1 connected to [r1, k1] 18/06/20 18:58:35 INFO node.Application: Starting new configuration:{ sourceRunners:{r1=EventDrivenSourceRunner: { source:org.apache.flume.source.NetcatSource{name:r1,state:IDLE} }} sinkRunners:{k1=SinkRunner: { policy:org.apache.flume.sink.DefaultSinkProcessor@1ce60deb counterGroup:{ name:null counters:{} } }} channels:{c1=org.apache.flume.channel.MemoryChannel{name: c1}} } 18/06/20 18:58:35 INFO node.Application: Starting Channel c1 18/06/20 18:58:36 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: CHANNEL, name: c1: Successfully registered new MBean. 18/06/20 18:58:36 INFO instrumentation.MonitoredCounterGroup: Component type: CHANNEL, name: c1 started 18/06/20 18:58:36 INFO node.Application: Starting Sink k1 18/06/20 18:58:36 INFO node.Application: Starting Source r1 18/06/20 18:58:36 INFO source.NetcatSource: Source starting 18/06/20 18:58:36 INFO source.NetcatSource: Created serverSocket:sun.nio.ch.ServerSocketChannelImpl[/127.0.0.1:8888]

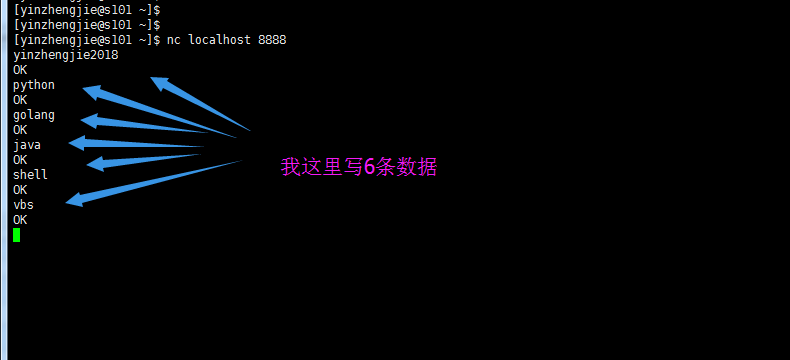

c>.source端进行连接操作

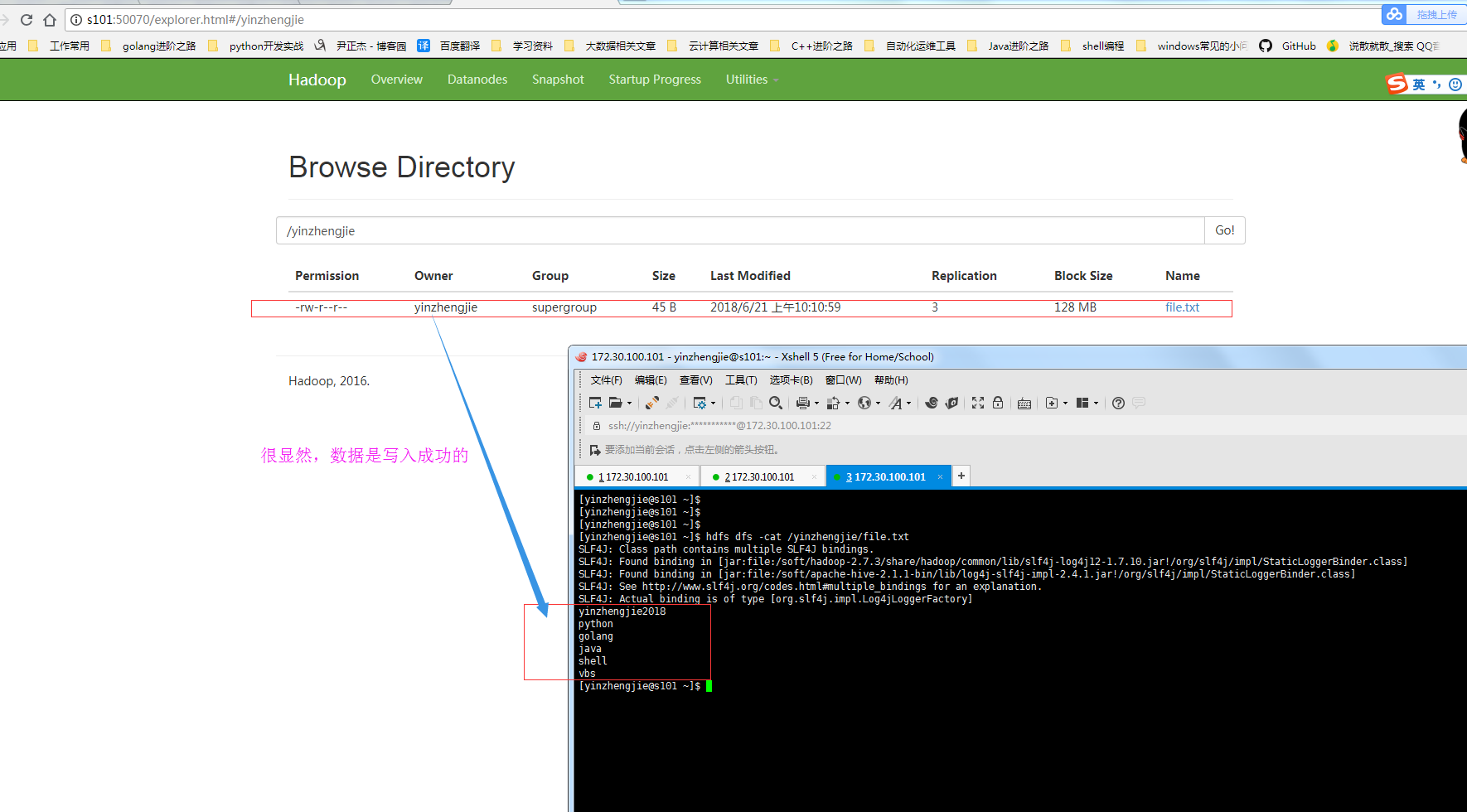

d>.检查hdfs系统是否写入成功

三.自定义HBase的sink

(未完待续.......)