Deploying the master nodes

A Kubernetes master node runs the following components:

kube-apiserver

kube-scheduler

kube-controller-manager

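kube-scheduler and kube-controller-manager can each run as several instances: leader election picks one working process, and the other processes stay blocked on standby.

kube-apiserver can run as multiple instances (three in this document), but the other components need a single, highly available address through which to reach it. This document uses keepalived and haproxy to provide a highly available VIP and load balancing for kube-apiserver.

Download the binaries (this document uses v1.9.9)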

cd /root/work

wget https://dl.k8s.io/v1.9.9/kubernetes-server-linux-amd64.tar.gz

tar -xzvf kubernetes-server-linux-amd64.tar.gz

Copy the binaries to all master nodes:

cd /root/work/kubernetes/server/bin/

scp kube-apiserver kube-controller-manager kube-scheduler 10.0.0.10:/usr/bin/

scp kube-apiserver kube-controller-manager kube-scheduler 10.0.0.11:/usr/bin/

scp kube-apiserver kube-controller-manager kube-scheduler 10.0.0.12:/usr/bin/
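The three scp commands above differ only in the target address. As a minimal sketch, they can be generated from a list of master IPs. The names MASTER_IPS, BINARIES and print_scp_commands are illustrative and not part of the original procedure; the function only prints the commands so they can be reviewed before anything is copied.

```shell
# Illustrative names; the addresses are the three masters used in this document.
MASTER_IPS="10.0.0.10 10.0.0.11 10.0.0.12"
BINARIES="kube-apiserver kube-controller-manager kube-scheduler"

# Print one scp command per master node; nothing is copied by this function.
print_scp_commands() {
  for ip in $MASTER_IPS; do
    echo "scp $BINARIES $ip:/usr/bin/"
  done
}

print_scp_commands
```

To actually run the copies, the output could be piped to sh from /root/work/kubernetes/server/bin/ (print_scp_commands | sh).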

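1. kube-apiserver

Create the kubernetes certificate and private key

Create the certificate signing request:

For the IP entries in hosts, list the master node addresses and the VIP.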

cd /root/work

cat > kubernetes-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"10.0.0.10",

"10.0.0.11",

"10.0.0.12",

"10.0.0.252",

"10.254.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "4Paradigm"

}

]

}

EOF

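- The hosts field lists the IPs and domain names authorized to use this certificate. Here it contains the VIP, the apiserver node IPs, and the kubernetes service IP and domain names;

- A domain name must not end with . (for example, kubernetes.default.svc.cluster.local. is not allowed), otherwise parsing fails with: x509: cannot parse dnsName "kubernetes.default.svc.cluster.local.";

- If you use a domain other than cluster.local, for example opsnull.com, change the last two names in the list to kubernetes.default.svc.opsnull and kubernetes.default.svc.opsnull.com;

- The kubernetes service IP is created automatically by apiserver. It is normally the first IP of the range given by --service-cluster-ip-range, and can be looked up later with: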

$ kubectl get svc kubernetes

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes 10.254.0.1 <none> 443/TCP 1d
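Because the certificate has to be created before apiserver is running, the service IP has to be worked out by hand the first time. The following is a small sketch of that arithmetic; first_service_ip is a hypothetical helper, and it assumes the argument is written with the network's base address (as 10.254.0.0/16 is in this document), so that adding 1 to the last octet gives the first usable address.

```shell
# Print the first IP of a service CIDR, e.g. 10.254.0.0/16 -> 10.254.0.1.
# Assumes $1 uses the network base address, so the last octet can simply be incremented.
first_service_ip() {
  network="${1%/*}"        # strip the prefix length: 10.254.0.0
  head_octets="${network%.*}"   # first three octets: 10.254.0
  last_octet="${network##*.}"   # last octet: 0
  echo "${head_octets}.$((last_octet + 1))"
}

first_service_ip 10.254.0.0/16
```

The printed value is the address that belongs in the hosts list of kubernetes-csr.json.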

Generate the certificate and private key:

cfssl gencert -ca=/root/work/ca.pem \
  -ca-key=/root/work/ca-key.pem \
  -config=/root/work/ca-config.json \
  -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

ls kubernetes*pem

kubernetes-key.pem kubernetes.pem
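Before distributing the certificate, it can be worth confirming that every entry from the hosts list ended up in the Subject Alternative Name extension. The sketch below assumes openssl is installed; list_sans is a hypothetical helper that only reformats the text output of openssl x509 so each entry appears on its own line.

```shell
# Print each Subject Alternative Name entry on its own line.
# Reads the output of `openssl x509 -noout -text` on stdin.
list_sans() {
  sed -n '/Subject Alternative Name/{n;p;}' | tr ',' '\n' | sed 's/^ *//'
}

# Usage, on the machine that holds kubernetes.pem:
#   openssl x509 -in kubernetes.pem -noout -text | list_sans
```

The output can then be compared against the hosts array in kubernetes-csr.json.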

Copy the generated certificate and private key to the master nodes:

mkdir -p /etc/kubernetes/cert  # run on every master node

scp kubernetes*.pem 10.0.0.10:/etc/kubernetes/cert/

scp kubernetes*.pem 10.0.0.11:/etc/kubernetes/cert/

scp kubernetes*.pem 10.0.0.12:/etc/kubernetes/cert/

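Create the client token file used by kube-apiserver

When kubelet starts for the first time, it sends a TLS Bootstrapping request to kube-apiserver. kube-apiserver checks whether the token in the request matches the one in its token.csv; if it does, the kubelet is issued a certificate automatically (signed with the --cluster-signing-* files configured for kube-controller-manager later in this document).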

head -c 16 /dev/urandom | od -An -t x | tr -d ' '

86bff28f07a55e60ba1e61ab765c0b55 # write this value into token.csv; the kubelet configuration needs the same value later, so keep it at hand.

cat > token.csv <<EOF

86bff28f07a55e60ba1e61ab765c0b55,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF
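Each line of the static token file has the form token,user,uid,"group". Generating the token and writing the file can be combined into one step so the value is never copied by hand. This is a sketch only: make_token_csv and its output-path parameter are made up for this example, and it uses od -t x1 (byte-wise hex) rather than the -t x form above, which also yields 32 hex characters.

```shell
# Write a token.csv containing a freshly generated 128-bit token (32 hex characters)
# and print the token so the caller can reuse it for the kubelet bootstrap config.
# $1: output path (hypothetical parameter; this document writes ./token.csv).
make_token_csv() {
  token=$(head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n')
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token" > "$1"
  echo "$token"
}

# Usage:
#   BOOTSTRAP_TOKEN=$(make_token_csv token.csv)
```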

Both the master and worker nodes need this file.

scp token.csv 10.0.0.10:/etc/kubernetes/

scp token.csv 10.0.0.11:/etc/kubernetes/

scp token.csv 10.0.0.12:/etc/kubernetes/

scp token.csv 10.0.0.13:/etc/kubernetes/

scp token.csv 10.0.0.14:/etc/kubernetes/

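Create the log directory

mkdir -p /etc/kubernetes/kube-apiserver  # run on every master node

Create and distribute the kube-apiserver systemd unit file for each node

10.0.0.10:

Note:

--advertise-address= set to this master's own address

--bind-address= set to this master's own address

--etcd-servers= set to the addresses of your own etcd cluster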

cat > /usr/lib/systemd/system/kube-apiserver.service <<EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

WorkingDirectory=/etc/kubernetes/kube-apiserver

ExecStart=/usr/bin/kube-apiserver \

--admission-control=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--anonymous-auth=false \

--advertise-address=10.0.0.10 \

--bind-address=10.0.0.10 \

--feature-gates=RotateKubeletClientCertificate=true,RotateKubeletServerCertificate=true,Accelerators=true,DevicePlugins=true \

--insecure-port=0 \

--authorization-mode=Node,RBAC \

--runtime-config=api/all \

--enable-bootstrap-token-auth \

--token-auth-file=/etc/kubernetes/token.csv \

--service-cluster-ip-range=10.254.0.0/16 \

--service-node-port-range=30000-32767 \

--tls-cert-file=/etc/kubernetes/cert/kubernetes.pem \

--tls-private-key-file=/etc/kubernetes/cert/kubernetes-key.pem \

--client-ca-file=/etc/kubernetes/cert/ca.pem \

--kubelet-client-certificate=/etc/kubernetes/cert/kubernetes.pem \

--kubelet-client-key=/etc/kubernetes/cert/kubernetes-key.pem \

--service-account-key-file=/etc/kubernetes/cert/ca-key.pem \

--etcd-cafile=/etc/kubernetes/cert/ca.pem \

--etcd-certfile=/etc/kubernetes/cert/kubernetes.pem \

--etcd-keyfile=/etc/kubernetes/cert/kubernetes-key.pem \

--etcd-servers=https://10.0.0.10:2379,https://10.0.0.11:2379,https://10.0.0.12:2379 \

--enable-swagger-ui=true \

--allow-privileged=true \

--max-mutating-requests-inflight=2000 \

--max-requests-inflight=4000 \

--apiserver-count=3 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/etc/kubernetes/kube-apiserver/audit.log \

--event-ttl=168h \

--logtostderr=true \

--v=2

Restart=always

RestartSec=5

StartLimitInterval=0

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

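--authorization-mode=Node,RBAC: enables the Node and RBAC authorization modes and rejects unauthorized requests;

--admission-control: the admission plugins to enable, including ServiceAccount and NodeRestriction;

--service-account-key-file: the key file used to verify ServiceAccount tokens; it pairs with the private key that kube-controller-manager is given through --service-account-private-key-file;

--tls-*-file: the certificate and private key used by apiserver. --client-ca-file is used to verify the certificates presented by clients (kube-controller-manager, kube-scheduler, kubelet, kube-proxy, and so on);

--kubelet-client-certificate, --kubelet-client-key: if set, apiserver uses https to access the kubelet APIs. RBAC rules must be defined for the user of this certificate (the user of the kubernetes*.pem certificate above is kubernetes), otherwise calls to the kubelet API are rejected as unauthorized;

--bind-address: must not be 127.0.0.1, otherwise the secure port 6443 cannot be reached from outside;

--feature-gates=Accelerators=true,DevicePlugins=true: enables GPU acceleration support;

--insecure-port=0: disables the insecure port (8080);

--service-cluster-ip-range: the Service Cluster IP range;

--service-node-port-range: the port range for NodePort services;

--runtime-config=api/all=true: enables all API versions, such as autoscaling/v2alpha1;

--enable-bootstrap-token-auth: enables token authentication for kubelet bootstrap;

--apiserver-count=3: the number of kube-apiserver instances running in the cluster. Unlike kube-scheduler and kube-controller-manager, kube-apiserver does not use leader election; all instances serve requests at the same time.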

10.0.0.11:

cat > /usr/lib/systemd/system/kube-apiserver.service <<EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

WorkingDirectory=/etc/kubernetes/kube-apiserver

ExecStart=/usr/bin/kube-apiserver \

--admission-control=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--anonymous-auth=false \

--advertise-address=10.0.0.11 \

--bind-address=10.0.0.11 \

--feature-gates=RotateKubeletClientCertificate=true,RotateKubeletServerCertificate=true,Accelerators=true,DevicePlugins=true \

--insecure-port=0 \

--authorization-mode=Node,RBAC \

--runtime-config=api/all \

--enable-bootstrap-token-auth \

--token-auth-file=/etc/kubernetes/token.csv \

--service-cluster-ip-range=10.254.0.0/16 \

--service-node-port-range=30000-32767 \

--tls-cert-file=/etc/kubernetes/cert/kubernetes.pem \

--tls-private-key-file=/etc/kubernetes/cert/kubernetes-key.pem \

--client-ca-file=/etc/kubernetes/cert/ca.pem \

--kubelet-client-certificate=/etc/kubernetes/cert/kubernetes.pem \

--kubelet-client-key=/etc/kubernetes/cert/kubernetes-key.pem \

--service-account-key-file=/etc/kubernetes/cert/ca-key.pem \

--etcd-cafile=/etc/kubernetes/cert/ca.pem \

--etcd-certfile=/etc/kubernetes/cert/kubernetes.pem \

--etcd-keyfile=/etc/kubernetes/cert/kubernetes-key.pem \

--etcd-servers=https://10.0.0.10:2379,https://10.0.0.11:2379,https://10.0.0.12:2379 \

--enable-swagger-ui=true \

--allow-privileged=true \

--max-mutating-requests-inflight=2000 \

--max-requests-inflight=4000 \

--apiserver-count=3 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/etc/kubernetes/kube-apiserver/audit.log \

--event-ttl=168h \

--logtostderr=true \

--v=2

Restart=always

RestartSec=5

StartLimitInterval=0

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

10.0.0.12:

cat > /usr/lib/systemd/system/kube-apiserver.service <<EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

WorkingDirectory=/etc/kubernetes/kube-apiserver

ExecStart=/usr/bin/kube-apiserver \

--admission-control=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--anonymous-auth=false \

--advertise-address=10.0.0.12 \

--bind-address=10.0.0.12 \

--feature-gates=RotateKubeletClientCertificate=true,RotateKubeletServerCertificate=true,Accelerators=true,DevicePlugins=true \

--insecure-port=0 \

--authorization-mode=Node,RBAC \

--runtime-config=api/all \

--enable-bootstrap-token-auth \

--token-auth-file=/etc/kubernetes/token.csv \

--service-cluster-ip-range=10.254.0.0/16 \

--service-node-port-range=30000-32767 \

--tls-cert-file=/etc/kubernetes/cert/kubernetes.pem \

--tls-private-key-file=/etc/kubernetes/cert/kubernetes-key.pem \

--client-ca-file=/etc/kubernetes/cert/ca.pem \

--kubelet-client-certificate=/etc/kubernetes/cert/kubernetes.pem \

--kubelet-client-key=/etc/kubernetes/cert/kubernetes-key.pem \

--service-account-key-file=/etc/kubernetes/cert/ca-key.pem \

--etcd-cafile=/etc/kubernetes/cert/ca.pem \

--etcd-certfile=/etc/kubernetes/cert/kubernetes.pem \

--etcd-keyfile=/etc/kubernetes/cert/kubernetes-key.pem \

--etcd-servers=https://10.0.0.10:2379,https://10.0.0.11:2379,https://10.0.0.12:2379 \

--enable-swagger-ui=true \

--allow-privileged=true \

--max-mutating-requests-inflight=2000 \

--max-requests-inflight=4000 \

--apiserver-count=3 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/etc/kubernetes/kube-apiserver/audit.log \

--event-ttl=168h \

--logtostderr=true \

--v=2

Restart=always

RestartSec=5

StartLimitInterval=0

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF
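The three unit files above are identical except for --advertise-address and --bind-address. One way to avoid maintaining three copies, sketched here under the assumption that a template file contains the placeholder string ##NODE_IP## (the placeholder, the template file name, and the function name render_unit are all made up for this example), is to substitute the node address with sed.

```shell
# Render a per-node unit file from a template.
# $1: template path containing the placeholder ##NODE_IP## (hypothetical convention)
# $2: the master's own IP address
render_unit() {
  sed "s/##NODE_IP##/$2/g" "$1"
}

# Usage (the template file name is an assumption):
#   for ip in 10.0.0.10 10.0.0.11 10.0.0.12; do
#     render_unit kube-apiserver.service.template "$ip" > "kube-apiserver-$ip.service"
#   done
```

Each rendered file would then be copied to /usr/lib/systemd/system/kube-apiserver.service on the matching node.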

Start the kube-apiserver service

systemctl daemon-reload && systemctl enable kube-apiserver

systemctl start kube-apiserver && systemctl status kube-apiserver

Print the data kube-apiserver has written to etcd

ETCDCTL_API=3 etcdctl \
  --endpoints=https://10.0.0.10:2379,https://10.0.0.11:2379,https://10.0.0.12:2379 \
  --cacert=/root/work/ca.pem \
  --cert=/etc/etcd/cert/etcd.pem \
  --key=/etc/etcd/cert/etcd-key.pem \
  get /registry/ --prefix --keys-only

Check cluster information

[root@master-ha-12 ~]# kubectl cluster-info

Kubernetes master is running at https://10.0.0.252:8443

[root@master-ha-12 ~]# kubectl get all --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default svc/kubernetes ClusterIP 10.254.0.1 <none> 443/TCP 8m

[root@master-ha-12 ~]# kubectl get componentstatuses

NAME STATUS MESSAGE ERROR

scheduler Unhealthy Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: getsockopt: connection refused

controller-manager Unhealthy Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1:10252: getsockopt: connection refused

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

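Note:

If kubectl prints the following error, the ~/.kube/config file in use is wrong; switch to the correct account and run the command again:

The connection to the server localhost:8080 was refused - did you specify the right host or port?

When kubectl get componentstatuses runs, apiserver sends the health checks to 127.0.0.1 by default. When controller-manager and scheduler run in cluster mode, they may not be on the same machine as kube-apiserver; in that case their status shows as Unhealthy even though they are working normally. At this point in the walkthrough they have also not been started yet, which produces the same connection refused status.

Check the port kube-apiserver listens on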

[root@master-ha-12 ~]# netstat -lnpt|grep kube

tcp 0 0 10.0.0.12:6443 0.0.0.0:* LISTEN 2944/kube-apiserver

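6443: the secure port that receives https requests; every request is authenticated and authorized;

The insecure port has been disabled, so nothing listens on 8080;

Grant the kubernetes certificate access to the kubelet API

When commands such as kubectl exec, run, and logs are executed, apiserver forwards them to kubelet. The RBAC rules defined here authorize apiserver to call the kubelet API.

Define the ClusterRole system:kubelet-api-admin, which grants access to all kubelet APIs: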

cat > kubelet-api-admin.yaml <<EOF

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: system:kubelet-api-admin

rules:

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- nodes

verbs:

- proxy

- apiGroups:

- ""

resources:

- nodes/log

- nodes/metrics

- nodes/proxy

- nodes/spec

- nodes/stats

verbs:

- '*'

EOF

[root@master-ha-10 work]# kubectl create -f kubelet-api-admin.yaml

clusterrole "system:kubelet-api-admin" created

Bind the ClusterRole to the kubernetes user so the certificate can access the kubelet API:

[root@master-ha-10 work]# kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes

clusterrolebinding "kube-apiserver:kubelet-apis" created

Inspect the resulting ClusterRole:

[root@master-ha-10 work]# kubectl describe clusterrole system:kubelet-api-admin

Name: system:kubelet-api-admin

Labels: kubernetes.io/bootstrapping=rbac-defaults

Annotations: rbac.authorization.kubernetes.io/autoupdate=true

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

--------- ----------------- -------------- -----

nodes [] [] [get list watch proxy]

nodes/log [] [] [*]

nodes/metrics [] [] [*]

nodes/proxy [] [] [*]

nodes/spec [] [] [*]

nodes/stats [] [] [*]

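Deploying kube-controller-manager

This part describes how to deploy a highly available kube-controller-manager cluster.

The cluster has 3 nodes. After startup they compete in a leader election to pick one leader, and the other nodes stay blocked. When the leader becomes unavailable, the remaining nodes hold a new election to pick a new leader, which keeps the service available.

To secure communication, this document first generates an x509 certificate and private key. kube-controller-manager uses the certificate in the following two cases:

1. When communicating with the secure port of kube-apiserver;

2. When serving Prometheus-format metrics over https. Note that with the flags used in the unit file in this document (--port=10252, --address=127.0.0.1), port 10252 is served over plain http on the loopback address.

Create the kube-controller-manager certificate and private key

Create the certificate signing request: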

cat > kube-controller-manager-csr.json <<EOF

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"hosts": [

"127.0.0.1",

"10.0.0.10",

"10.0.0.11",

"10.0.0.12"

],

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:kube-controller-manager",

"OU": "4Paradigm"

}

]

}

EOF

The hosts list contains the IPs of all kube-controller-manager nodes;

CN is system:kube-controller-manager and O is system:kube-controller-manager; the built-in ClusterRoleBinding system:kube-controller-manager grants kube-controller-manager the permissions it needs to work.

Generate the certificate and private key:

cfssl gencert -ca=/root/work/ca.pem \
  -ca-key=/root/work/ca-key.pem \
  -config=/root/work/ca-config.json \
  -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

ls kube-controller-manager*pem

kube-controller-manager-key.pem kube-controller-manager.pem

Distribute them to all master nodes:

scp kube-controller-manager*.pem 10.0.0.10:/etc/kubernetes/cert/

scp kube-controller-manager*.pem 10.0.0.11:/etc/kubernetes/cert/

scp kube-controller-manager*.pem 10.0.0.12:/etc/kubernetes/cert/

Create and distribute the kubeconfig file

The kubeconfig file contains everything needed to access apiserver, such as the apiserver address, the CA certificate, and the component's own certificate:

kubectl config set-cluster kubernetes \
  --certificate-authority=/root/work/ca.pem \
  --embed-certs=true \
  --server=https://10.0.0.252:8443 \
  --kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=/root/work/kube-controller-manager.pem \
  --client-key=/root/work/kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-context system:kube-controller-manager \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=kube-controller-manager.kubeconfig

kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

Distribute the kubeconfig to all master nodes:

scp kube-controller-manager.kubeconfig 10.0.0.10:/etc/kubernetes/

scp kube-controller-manager.kubeconfig 10.0.0.11:/etc/kubernetes/

scp kube-controller-manager.kubeconfig 10.0.0.12:/etc/kubernetes/

mkdir -p /etc/kubernetes/kube-controller-manager  # run on every master node

Create the systemd unit file on every master node:

cat > /usr/lib/systemd/system/kube-controller-manager.service <<EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

WorkingDirectory=/etc/kubernetes/kube-controller-manager

ExecStart=/usr/bin/kube-controller-manager \

--port=10252 \

--address=127.0.0.1 \

--kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \

--service-cluster-ip-range=10.254.0.0/16 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/etc/kubernetes/cert/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/cert/ca-key.pem \

--experimental-cluster-signing-duration=8760h \

--root-ca-file=/etc/kubernetes/cert/ca.pem \

--service-account-private-key-file=/etc/kubernetes/cert/ca-key.pem \

--feature-gates=RotateKubeletClientCertificate=true,RotateKubeletServerCertificate=true,Accelerators=true,DevicePlugins=true \

--leader-elect=true \

--controllers=*,bootstrapsigner,tokencleaner \

--use-service-account-credentials=true \

--kube-api-qps=1000 \

--kube-api-burst=2000 \

--logtostderr=true \

--v=2

Restart=always

RestartSec=5

StartLimitInterval=0

[Install]

WantedBy=multi-user.target

EOF

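--port=10252, --address=127.0.0.1: listen for http /metrics requests on 127.0.0.1:10252;

--kubeconfig: path of the kubeconfig file that kube-controller-manager uses to connect to and authenticate against kube-apiserver;

--cluster-signing-*-file: used to sign the certificates created by TLS Bootstrap;

--experimental-cluster-signing-duration: validity period of the TLS Bootstrap certificates;

--root-ca-file: the CA certificate placed into each container's ServiceAccount, used to verify the kube-apiserver certificate;

--service-account-private-key-file: the private key used to sign ServiceAccount tokens; it must pair with the key file given to kube-apiserver through --service-account-key-file;

--service-cluster-ip-range: the Service Cluster IP range; it must match the flag of the same name on kube-apiserver;

--leader-elect=true: cluster mode with leader election enabled; the node elected leader does the work, and the other nodes stay blocked;

--controllers=*,bootstrapsigner,tokencleaner: the list of controllers to enable; tokencleaner automatically removes expired Bootstrap tokens;

--use-service-account-credentials=true: each controller uses its own ServiceAccount to access kube-apiserver;

--feature-gates: enables rotation of the kubelet client and server certificates, and GPU acceleration;

kube-controller-manager does not verify the client certificate of requests for https metrics, so the --tls-ca-file flag is not needed; that flag has also been deprecated.

Permissions of kube-controller-manager

- The ClusterRole system:kube-controller-manager has very few permissions; it can only create resources such as secrets and serviceaccounts. The permissions of the individual controllers are spread across the ClusterRoles system:controller:XXX.

- --use-service-account-credentials=true must be added to the kube-controller-manager startup flags, so that the main controller creates a ServiceAccount XXX-controller for each controller.

- The built-in ClusterRoleBinding system:controller:XXX grants each XXX-controller ServiceAccount the permissions of the matching ClusterRole system:controller:XXX.

Start the kube-controller-manager service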

systemctl daemon-reload && systemctl enable kube-controller-manager

systemctl restart kube-controller-manager && systemctl status kube-controller-manager

Check the current leader

[root@master-ha-10 work]# kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml

apiVersion: v1

kind: Endpoints

metadata:

annotations:

control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"master-ha-12","leaseDurationSeconds":15,"acquireTime":"2021-02-14T06:36:15Z","renewTime":"2021-02-14T06:36:31Z","leaderTransitions":0}'

creationTimestamp: 2021-02-14T06:36:15Z

name: kube-controller-manager

namespace: kube-system

resourceVersion: "238"

selfLink: /api/v1/namespaces/kube-system/endpoints/kube-controller-manager

uid: f0099368-6e8e-11eb-80ef-000c295d04a3

subsets: null
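The leader is recorded in the holderIdentity field of the control-plane.alpha.kubernetes.io/leader annotation shown above. As a small sketch, that field can be pulled out of the YAML with sed; leader_of is a hypothetical helper name, and the same filter should work for the kube-scheduler endpoints object since it uses the same annotation.

```shell
# Extract the holderIdentity value from the leader-election annotation.
# Reads the output of `kubectl get endpoints <name> -n kube-system -o yaml` on stdin.
leader_of() {
  sed -n 's/.*"holderIdentity":"\([^"]*\)".*/\1/p'
}

# Usage:
#   kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml | leader_of
```

Stopping kube-controller-manager on the reported node and running the command again is a simple way to watch a new leader being elected.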

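Deploying kube-scheduler

This part describes how to deploy a highly available kube-scheduler cluster.

The cluster has 3 nodes. After startup they compete in a leader election to pick one leader, and the other nodes stay blocked. When the leader becomes unavailable, the remaining nodes hold a new election to pick a new leader, which keeps the service available.

To secure communication, this document first generates an x509 certificate and private key; kube-scheduler uses the certificate when communicating with the secure port of kube-apiserver.

Create the kube-scheduler certificate and private key

Create the certificate signing request: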

cat > kube-scheduler-csr.json <<EOF

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"10.0.0.10",

"10.0.0.11",

"10.0.0.12"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:kube-scheduler",

"OU": "4Paradigm"

}

]

}

EOF

The hosts list contains the IPs of all kube-scheduler nodes;

CN is system:kube-scheduler and O is system:kube-scheduler; the built-in ClusterRoleBinding system:kube-scheduler grants kube-scheduler the permissions it needs to work.

Generate the certificate and private key:

cfssl gencert -ca=/root/work/ca.pem \
  -ca-key=/root/work/ca-key.pem \
  -config=/root/work/ca-config.json \
  -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

[root@master-ha-10 work]# ls kube-scheduler*pem

kube-scheduler-key.pem kube-scheduler.pem

Create and distribute the kubeconfig file

The kubeconfig file contains everything needed to access apiserver, such as the apiserver address, the CA certificate, and the component's own certificate:

kubectl config set-cluster kubernetes \
  --certificate-authority=/root/work/ca.pem \
  --embed-certs=true \
  --server=https://10.0.0.252:8443 \
  --kubeconfig=kube-scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler \
  --client-certificate=/root/work/kube-scheduler.pem \
  --client-key=/root/work/kube-scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-scheduler.kubeconfig

kubectl config set-context system:kube-scheduler \
  --cluster=kubernetes \
  --user=system:kube-scheduler \
  --kubeconfig=kube-scheduler.kubeconfig

kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

The certificate and private key created in the previous step, together with the kube-apiserver address, are written into the kubeconfig file;
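The kubeconfig files for kube-controller-manager and kube-scheduler are built with the same four kubectl config calls and differ only in the component name. A sketch of a shared helper follows; make_kubeconfig and the KUBECTL override variable are made up for this example, while the paths, the cluster name, and the VIP address are the ones used in this document.

```shell
# Build <component>.kubeconfig with the same four steps used in this document.
# $1: component name, e.g. kube-scheduler or kube-controller-manager.
# KUBECTL may be overridden (for example KUBECTL=echo) to preview the commands.
make_kubeconfig() {
  name="$1"
  cfg="$name.kubeconfig"
  user="system:$name"
  kubectl_cmd="${KUBECTL:-kubectl}"

  "$kubectl_cmd" config set-cluster kubernetes \
    --certificate-authority=/root/work/ca.pem \
    --embed-certs=true \
    --server=https://10.0.0.252:8443 \
    --kubeconfig="$cfg" &&
  "$kubectl_cmd" config set-credentials "$user" \
    --client-certificate="/root/work/$name.pem" \
    --client-key="/root/work/$name-key.pem" \
    --embed-certs=true \
    --kubeconfig="$cfg" &&
  "$kubectl_cmd" config set-context "$user" \
    --cluster=kubernetes \
    --user="$user" \
    --kubeconfig="$cfg" &&
  "$kubectl_cmd" config use-context "$user" --kubeconfig="$cfg"
}

# Usage:
#   KUBECTL=echo make_kubeconfig kube-scheduler   # preview only
#   make_kubeconfig kube-scheduler                # write kube-scheduler.kubeconfig
```

Chaining the calls with && stops at the first failing step, so a half-written kubeconfig is easier to notice.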

Distribute the kubeconfig to all master nodes:

scp kube-scheduler.kubeconfig 10.0.0.10:/etc/kubernetes/

scp kube-scheduler.kubeconfig 10.0.0.11:/etc/kubernetes/

scp kube-scheduler.kubeconfig 10.0.0.12:/etc/kubernetes/

mkdir -p /etc/kubernetes/kube-scheduler  # run on every master node

Create and distribute the kube-scheduler systemd unit file

Run on every master node:

cat > /usr/lib/systemd/system/kube-scheduler.service <<EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

WorkingDirectory=/etc/kubernetes/kube-scheduler

ExecStart=/usr/bin/kube-scheduler \

--address=127.0.0.1 \

--kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \

--leader-elect=true \

--kube-api-qps=100 \

--logtostderr=true \

--v=2

Restart=always

RestartSec=5

StartLimitInterval=0

[Install]

WantedBy=multi-user.target

EOF

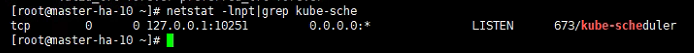

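--address: serves http /metrics requests on 127.0.0.1:10251; in this version kube-scheduler does not yet support https requests;

--kubeconfig: path of the kubeconfig file that kube-scheduler uses to connect to and authenticate against kube-apiserver;

--leader-elect=true: cluster mode with leader election enabled; the node elected leader does the work, and the other nodes stay blocked;

Start the kube-scheduler service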

systemctl daemon-reload && systemctl enable kube-scheduler

systemctl restart kube-scheduler && systemctl status kube-scheduler

Check the current leader

kubectl get endpoints kube-scheduler --namespace=kube-system -o yaml

apiVersion: v1

kind: Endpoints

metadata:

annotations:

control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"master-ha-10","leaseDurationSeconds":15,"acquireTime":"2021-02-14T06:46:16Z","renewTime":"2021-02-14T06:46:28Z","leaderTransitions":0}'

creationTimestamp: 2021-02-14T06:46:16Z

name: kube-scheduler

namespace: kube-system

resourceVersion: "543"

selfLink: /api/v1/namespaces/kube-system/endpoints/kube-scheduler

uid: 55f242ce-6e90-11eb-80ef-000c295d04a3

subsets: null

View the exported metrics

Note: run the following command on a kube-scheduler node.

kube-scheduler listens on port 10251 and accepts http requests:

curl -s http://127.0.0.1:10251/metrics | head

# HELP go_gc_duration_seconds A summary of the GC invocation durations.

# TYPE go_gc_duration_seconds summary

go_gc_duration_seconds{quantile="0"} 7.8802e-05

go_gc_duration_seconds{quantile="0.25"} 8.7325e-05

go_gc_duration_seconds{quantile="0.5"} 0.000202452

go_gc_duration_seconds{quantile="0.75"} 0.000473563

go_gc_duration_seconds{quantile="1"} 0.000956121

go_gc_duration_seconds_sum 0.002215629

go_gc_duration_seconds_count 7

# HELP go_goroutines Number of goroutines that currently exist.