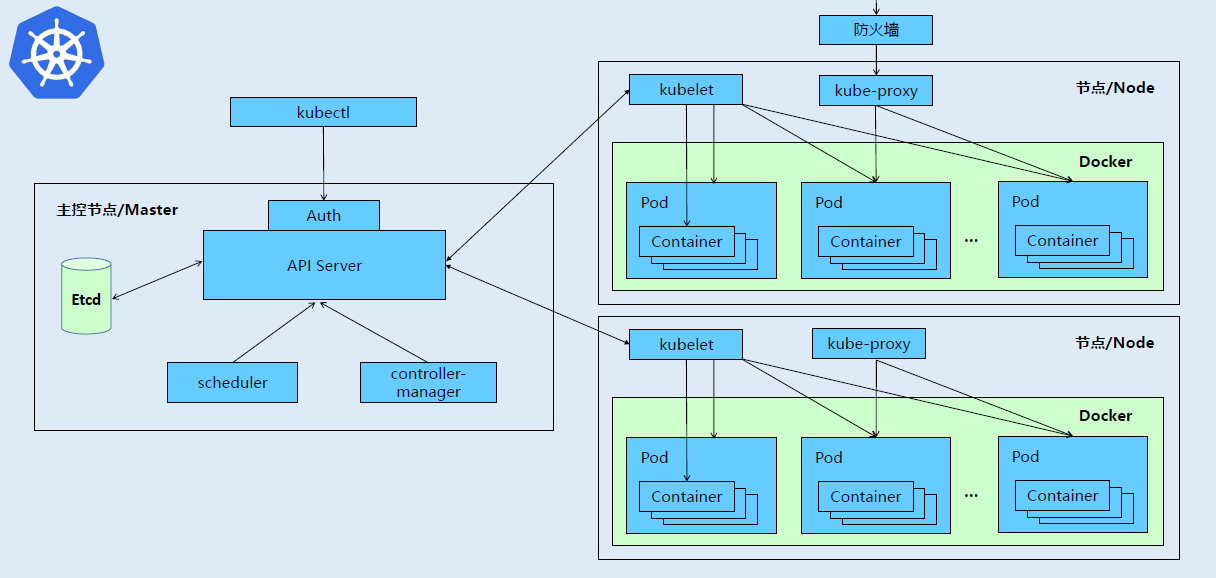

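A Kubernetes cluster has two main types of nodes: master nodes and minion nodes (the latter are simply called Nodes in the rest of this article).

A minion node runs the Docker containers. It interacts with the Docker daemon running on that node and also provides proxy functionality.

The master node exposes a set of APIs for managing the cluster and carries out cluster operations by interacting with the minion nodes.

Required Kubernetes components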

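kube-apiserver: the unified entry point of the cluster and the coordinator of all components. It exposes its services as a RESTful API; every create, read, update, delete, and watch operation on resource objects is handled by the API server, which then persists the result to etcd.

kube-controller-manager: handles the routine background tasks in the cluster. Each resource has a corresponding controller, and the controller manager is responsible for managing those controllers.

kube-scheduler: selects a Node for each newly created Pod according to its scheduling algorithm. It can be deployed anywhere: on the same machine as the other master components or on a different one.

etcd: a distributed key-value store that holds the cluster state, such as Pod and Service objects.

kubelet: the master's agent on each Node. It manages the lifecycle of the containers running on that machine: creating containers, mounting volumes for Pods, downloading secrets, reporting container and node status, and so on. The kubelet turns each Pod into a set of containers.

kube-proxy: implements the Pod network proxy on each Node, maintaining network rules and layer-4 load balancing.

docker or rkt (Rocket): the container engine that runs the containers.

Pod network: Pods must be able to communicate with each other, so a Kubernetes cluster needs a Pod network. flannel is one of the available options; it is an overlay network tool designed by the CoreOS team for Kubernetes.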

Kubernetes cluster architecture and setup:

Deployment environment:

vip 192.168.0.130 keepalived

k8s-master1 192.168.0.123 kube-apiserver,kube-controller-manager,kube-scheduler,etcd

k8s-master2 192.168.0.124 kube-apiserver,kube-controller-manager,kube-scheduler

k8s-node01 192.168.0.125 kubelet,kube-proxy,docker,flannel,etcd

k8s-node02 192.168.0.126 kubelet,kube-proxy,docker,flannel,etcd
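The machines are referred to by hostname throughout this guide, so it can help if every node resolves those names. The following is a minimal sketch that prints matching /etc/hosts entries for the table above. Adding them to /etc/hosts is an assumption of this example and not a step from the original procedure, and the function name print_k8s_hosts is hypothetical.

```shell
# Print /etc/hosts entries for the four machines in the deployment table.
# The function name is hypothetical; the names and addresses come from the table.
print_k8s_hosts() {
  cat <<'EOF'
192.168.0.123 k8s-master1
192.168.0.124 k8s-master2
192.168.0.125 k8s-node01
192.168.0.126 k8s-node02
EOF
}

# Review the output first.
print_k8s_hosts

# To apply it (as root, on every node), one option would be:
#   print_k8s_hosts >> /etc/hosts
```

Printing first and appending by hand keeps the step reversible and avoids duplicate entries if the script is run twice.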

System environment initialization:

# Update the system

yum install -y epel-release; yum update -y

# Set the hostname on each node (run only the matching command on each machine)

hostnamectl set-hostname k8s-master1

hostnamectl set-hostname k8s-master2

hostnamectl set-hostname k8s-node01

hostnamectl set-hostname k8s-node02

#master1 IP

[root@k8s-master1 ~]# ifconfig ens32

ens32: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.0.123 netmask 255.255.255.0 broadcast 192.168.0.255

inet6 fe80::20c:29ff:fe8a:2b5f prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:8a:2b:5f txqueuelen 1000 (Ethernet)

RX packets 821 bytes 84238 (82.2 KiB)

RX errors 0 dropped 2 overruns 0 frame 0

TX packets 143 bytes 18221 (17.7 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

#master2 IP

[root@k8s-master2 ~]# ifconfig ens32

ens32: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.0.124 netmask 255.255.255.0 broadcast 192.168.0.255

inet6 fe80::20c:29ff:fe77:dc9c prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:77:dc:9c txqueuelen 1000 (Ethernet)

RX packets 815 bytes 81627 (79.7 KiB)

RX errors 0 dropped 2 overruns 0 frame 0

TX packets 158 bytes 20558 (20.0 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

#node01 IP

[root@k8s-node01 ~]# ifconfig ens32

ens32: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.0.125 netmask 255.255.255.0 broadcast 192.168.0.255

inet6 fe80::20c:29ff:fe80:7949 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:80:79:49 txqueuelen 1000 (Ethernet)

RX packets 830 bytes 85270 (83.2 KiB)

RX errors 0 dropped 2 overruns 0 frame 0

TX packets 152 bytes 19376 (18.9 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

#node02 IP

[root@k8s-node02 ~]# ifconfig ens32

ens32: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.0.126 netmask 255.255.255.0 broadcast 192.168.0.255

inet6 fe80::20c:29ff:fe7a:e67b prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:7a:e6:7b txqueuelen 1000 (Ethernet)

RX packets 867 bytes 87775 (85.7 KiB)

RX errors 0 dropped 2 overruns 0 frame 0

TX packets 157 bytes 19866 (19.4 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

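# Synchronize time (run ntpdate before starting ntpd, because ntpdate cannot use the NTP port while ntpd is running)

yum -y install ntp

ntpdate cn.pool.ntp.org

systemctl start ntpd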

# Disable SELinux

setenforce 0

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/sysconfig/selinux

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/sysconfig/selinux

sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/selinux/config

getenforce

# Disable swap

swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab

cat /etc/fstab
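Reading /etc/fstab by eye works, but a small check is easier to repeat on every node. The sketch below counts fstab lines that still mount swap, that is, swap lines that are not commented out. The function name active_swap_entries and the sample file are hypothetical; on a real node the function would be pointed at /etc/fstab.

```shell
# Count lines in an fstab-style file that still reference swap and are not
# commented out. The function name is hypothetical.
active_swap_entries() {
  local fstab="${1:-/etc/fstab}"
  # grep -c prints 0 and exits non-zero when nothing matches, hence "|| true".
  grep -Ec '^[^#]*[[:space:]]swap[[:space:]]' "$fstab" || true
}

# Demonstration on a throwaway sample file (contents are illustrative only).
sample=$(mktemp)
printf '%s\n' \
  '/dev/mapper/centos-root /    xfs  defaults 0 0' \
  '#/dev/mapper/centos-swap swap swap defaults 0 0' > "$sample"
echo "active swap entries in sample: $(active_swap_entries "$sample")"
rm -f "$sample"

# On a real node: active_swap_entries /etc/fstab
```

A result of 0 means the sed command above commented out every swap line, so swap stays off after a reboot.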

# Set kernel parameters

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf
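To confirm that the configuration file really contains the intended values, the settings can be read back from it. This is a minimal sketch; the function name sysctl_conf_value is hypothetical, and the demonstration runs against a temporary copy of the two settings rather than the real /etc/sysctl.d/k8s.conf.

```shell
# Print the value assigned to KEY in a sysctl-style "key = value" file.
# The function name is hypothetical.
sysctl_conf_value() {
  local file="$1" key="$2"
  awk -F'=' -v k="$key" '
    { gsub(/[ \t]+/, "", $1) }                      # strip blanks around the key
    $1 == k { gsub(/[ \t]+/, "", $2); print $2 }    # print the trimmed value
  ' "$file"
}

# Demonstration on a temporary file with the same two settings.
conf=$(mktemp)
printf '%s\n' \
  'net.bridge.bridge-nf-call-ip6tables = 1' \
  'net.bridge.bridge-nf-call-iptables = 1' > "$conf"
for key in net.bridge.bridge-nf-call-ip6tables net.bridge.bridge-nf-call-iptables; do
  echo "$key -> $(sysctl_conf_value "$conf" "$key")"
done
rm -f "$conf"

# On a real node, compare with the live kernel value, for example:
#   sysctl -n net.bridge.bridge-nf-call-iptables
```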

# Prepare the deployment directories

mkdir -p /opt/kubernetes/{bin,cfg,ssl,log}

echo 'export PATH=/opt/kubernetes/bin:$PATH' > /etc/profile.d/k8s.sh

source /etc/profile.d/k8s.sh

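Creating and distributing the CA certificate:

Starting with version 1.8, Kubernetes components use TLS certificates to encrypt their communication, and each Kubernetes cluster needs its own independent CA. Three tools are commonly used to create the certificates: easyrsa, openssl, and cfssl. This guide uses cfssl, currently the most widely used of the three. It is also comparatively simple to configure, since everything about a certificate is described in a JSON file. The cfssl version used here is 1.2.

1. Install CFSSL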

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl*

mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl_linux-amd64 /usr/local/bin/cfssl

2. Initialize cfssl

mkdir ssl && cd ssl

cfssl print-defaults config > config.json

cfssl print-defaults csr > csr.json

3. Create the JSON configuration file used to generate the CA files

[root@k8s-master1 ssl]# vim ca-config.json

{

"signing": {

"default": {

"expiry": "8760h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "8760h"

}

}

}

}

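signing: the certificate may be used to sign other certificates; the generated ca.pem has CA=TRUE;

server auth: a client can use this CA to verify the certificate presented by a server;

client auth: a server can use this CA to verify the certificate presented by a client;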
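The "expiry" value in ca-config.json is a duration in hours, which is easy to misread. The small sketch below converts such a value into days to show that 8760h is one year, so certificates signed with this profile need to be renewed yearly unless a longer expiry is configured. The function name expiry_days is hypothetical.

```shell
# Convert a cfssl-style expiry such as "8760h" into whole days.
# The function name is hypothetical.
expiry_days() {
  local hours="${1%h}"      # drop the trailing "h"
  echo $(( hours / 24 ))
}

expiry_days 8760h     # prints 365  (one year, the value used in ca-config.json)
expiry_days 87600h    # prints 3650 (about ten years)
```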

4. Create the JSON configuration file for the CA certificate signing request

[root@k8s-master1 ssl]# vim ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

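"CN": Common Name. kube-apiserver extracts this field from a certificate and uses it as the user name of the request; browsers use this field to verify that a website is legitimate;

"O": Organization. kube-apiserver extracts this field from a certificate and uses it as the group that the requesting user belongs to;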
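The mapping from certificate subject to user and group can be illustrated with a few lines of shell. This is only a sketch: the function name subject_field is hypothetical, and the subject string is a hand-written, comma-separated rendering of the values in ca-csr.json, not the exact output format of any particular tool.

```shell
# Extract one field (for example CN or O) from a comma-separated subject string.
# The function name and the subject format are assumptions of this example.
subject_field() {
  local subject="$1" field="$2"
  printf '%s\n' "$subject" | tr ',' '\n' | sed 's/^ *//' |
    awk -F'=' -v f="$field" '$1 == f { print $2 }'
}

subject="C=CN, ST=BeiJing, L=BeiJing, O=k8s, OU=System, CN=kubernetes"
echo "user:  $(subject_field "$subject" CN)"   # the CN becomes the user name
echo "group: $(subject_field "$subject" O)"    # the O becomes the group
```

Matching on the exact key name matters here: a naive substring search for "CN" would also hit the country field C=CN.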

5. Generate the CA certificate (ca.pem) and private key (ca-key.pem)

[root@k8s-master1 ssl]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca

2018/12/14 13:36:34 [INFO] generating a new CA key and certificate from CSR

2018/12/14 13:36:34 [INFO] generate received request

2018/12/14 13:36:34 [INFO] received CSR

2018/12/14 13:36:34 [INFO] generating key: rsa-2048

2018/12/14 13:36:34 [INFO] encoded CSR

2018/12/14 13:36:34 [INFO] signed certificate with serial number 685737089592185658867716737752849077098687904892

Distribute the certificate and key to the other nodes

cp ca.csr ca.pem ca-key.pem ca-config.json /opt/kubernetes/ssl

scp ca.csr ca.pem ca-key.pem ca-config.json 192.168.0.125:/opt/kubernetes/ssl

scp ca.csr ca.pem ca-key.pem ca-config.json 192.168.0.126:/opt/kubernetes/ssl
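The same files go to every node, so the copy can be written as a loop. The following is a minimal sketch with a dry-run default, so that it only prints the scp commands unless DRY_RUN=0 is set. The function name distribute and the NODES, DEST, and DRY_RUN variables are hypothetical; the addresses and target directory are the ones used above.

```shell
# Target nodes and directory, taken from the commands above.
NODES="192.168.0.125 192.168.0.126"
DEST=/opt/kubernetes/ssl

# Print (DRY_RUN=1, the default) or run one scp per node for the given files.
# The function and variable names are hypothetical.
distribute() {
  local node
  for node in $NODES; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "scp $* ${node}:${DEST}"
    else
      scp "$@" "${node}:${DEST}"
    fi
  done
}

# Dry run: shows the commands without copying anything.
distribute ca.csr ca.pem ca-key.pem ca-config.json

# To copy for real: DRY_RUN=0 distribute ca.csr ca.pem ca-key.pem ca-config.json
```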

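Deploying etcd

All persistent state is stored in etcd as key-value pairs. Like ZooKeeper, etcd provides a distributed coordination service. Kubernetes components can be described as stateless precisely because their data is kept in etcd. Since etcd supports clustering, it is deployed here on three hosts (k8s-master1, k8s-node01, and k8s-node02).

Create the etcd certificate signing request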

[root@k8s-master1 ssl]# vim etcd-csr.json

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.0.123",

"192.168.0.125",

"192.168.0.126"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}
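The "hosts" list has to contain the address of every etcd member, otherwise TLS verification between members is likely to fail for the missing address. The sketch below reports which of the given IP addresses are absent from a CSR file. The function name missing_sans is hypothetical, and the demonstration uses a deliberately incomplete temporary file rather than the real etcd-csr.json.

```shell
# Print every IP from the argument list that does not appear (as a quoted
# string) in the given CSR JSON file. The function name is hypothetical.
missing_sans() {
  local csr="$1" ip
  shift
  for ip in "$@"; do
    grep -qF "\"$ip\"" "$csr" || echo "$ip"
  done
}

# Demonstration: a sample CSR that deliberately omits 192.168.0.126.
csr=$(mktemp)
cat > "$csr" <<'EOF'
{ "CN": "etcd", "hosts": [ "127.0.0.1", "192.168.0.123", "192.168.0.125" ] }
EOF
missing_sans "$csr" 192.168.0.123 192.168.0.125 192.168.0.126
rm -f "$csr"

# Against the real file: missing_sans etcd-csr.json 192.168.0.123 192.168.0.125 192.168.0.126
```

Empty output means every listed member address is present in the file.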

Generate the etcd certificate and private key

cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem -ca-key=/opt/kubernetes/ssl/ca-key.pem -config=/opt/kubernetes/ssl/ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

Copy the certificates to the other nodes

cp etcd*.pem /opt/kubernetes/ssl/

scp etcd*.pem 192.168.0.125:/opt/kubernetes/ssl/

scp etcd*.pem 192.168.0.126:/opt/kubernetes/ssl/

Prepare the binaries

Binary package download page: https://github.com/coreos/etcd/releases

cd /usr/local/src/

wget https://github.com/etcd-io/etcd/releases/download/v3.3.10/etcd-v3.3.10-linux-amd64.tar.gz

tar xf etcd-v3.3.10-linux-amd64.tar.gz

cd etcd-v3.3.10-linux-amd64

cp etcd etcdctl /opt/kubernetes/bin/

scp etcd etcdctl 192.168.0.125:/opt/kubernetes/bin/

scp etcd etcdctl 192.168.0.126:/opt/kubernetes/bin/

Create the etcd configuration file

[root@k8s-master1 ~]# vim /opt/kubernetes/cfg/etcd.conf

#[member]

ETCD_NAME="etcd-node1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.0.123:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.0.123:2379,https://127.0.0.1:2379"

#[cluster]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.0.123:2380"

ETCD_INITIAL_CLUSTER="etcd-node1=https://192.168.0.123:2380,etcd-node2=https://192.168.0.125:2380,etcd-node3=https://192.168.0.126:2380"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.0.123:2379"

#[security]

ETCD_CLIENT_CERT_AUTH="true"

ETCD_CA_FILE="/opt/kubernetes/ssl/ca.pem"

ETCD_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"

ETCD_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"

ETCD_PEER_CA_FILE="/opt/kubernetes/ssl/ca.pem"

ETCD_PEER_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"

ETCD_PEER_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"

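ETCD_NAME: node name

ETCD_DATA_DIR: data directory

ETCD_LISTEN_PEER_URLS: address to listen on for peer (cluster) traffic

ETCD_LISTEN_CLIENT_URLS: address to listen on for client traffic

ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the rest of the cluster

ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

ETCD_INITIAL_CLUSTER: addresses of all cluster members

ETCD_INITIAL_CLUSTER_TOKEN: cluster token

ETCD_INITIAL_CLUSTER_STATE: state when joining the cluster; new means a new cluster, existing means joining an existing cluster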
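Only a handful of these settings differ from node to node: the member name and the URLs that contain the node's own IP address. As a sketch of that idea, the function below prints just the node-specific lines for a given name and IP; the shared settings (ETCD_INITIAL_CLUSTER, token, state, and the security section) stay identical on all members. The function name render_etcd_conf is hypothetical.

```shell
# Print the node-specific part of etcd.conf for one member.
# Usage: render_etcd_conf NAME IP   (the function name is hypothetical)
render_etcd_conf() {
  local name="$1" ip="$2"
  cat <<EOF
#[member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379,https://127.0.0.1:2379"
#[cluster]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
EOF
}

# Node-specific lines for the second and third members in this guide.
render_etcd_conf etcd-node2 192.168.0.125
render_etcd_conf etcd-node3 192.168.0.126
```

Comparing this output with the file on each node is a quick way to catch a leftover IP address or ETCD_NAME from the first member.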

Create the etcd systemd service

[root@k8s-master1 ~]# vim /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

[Service]

WorkingDirectory=/var/lib/etcd

EnvironmentFile=-/opt/kubernetes/cfg/etcd.conf

# set GOMAXPROCS to number of processors

ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /opt/kubernetes/bin/etcd"

Type=notify

[Install]

WantedBy=multi-user.target

Copy the configuration file and the service file to the other two nodes (remember to change the IP addresses and ETCD_NAME on each node)

scp /opt/kubernetes/cfg/etcd.conf 192.168.0.125:/opt/kubernetes/cfg

scp /opt/kubernetes/cfg/etcd.conf 192.168.0.126:/opt/kubernetes/cfg

scp /usr/lib/systemd/system/etcd.service 192.168.0.125:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/etcd.service 192.168.0.126:/usr/lib/systemd/system/

Create the etcd working directory (on all three etcd nodes)

mkdir -p /var/lib/etcd

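Start the etcd service (on all three etcd nodes; the first start may block until a second member has joined and the cluster has quorum)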

systemctl daemon-reload

systemctl enable etcd

systemctl start etcd

systemctl status etcd

Verify the cluster

etcdctl --endpoints=https://192.168.0.123:2379,https://192.168.0.125:2379,https://192.168.0.126:2379 --ca-file=/opt/kubernetes/ssl/ca.pem --cert-file=/opt/kubernetes/ssl/etcd.pem --key-file=/opt/kubernetes/ssl/etcd-key.pem cluster-health

Output like the following indicates that the etcd cluster is configured correctly:

member 3126a455a15179c6 is healthy: got healthy result from https://192.168.0.125:2379

member 40601cc6f27d1bf1 is healthy: got healthy result from https://192.168.0.123:2379

member 431013e88beab64c is healthy: got healthy result from https://192.168.0.126:2379

cluster is healthy
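For scripted checks, the health output can be turned into an exit status. The following is a minimal sketch that reads cluster-health output on standard input and succeeds only if the final verdict is "cluster is healthy" and every member line reports healthy. The function name etcd_cluster_ok is hypothetical, and the sample input is the output shown above.

```shell
# Read `etcdctl cluster-health` output on stdin. Return 0 only if the verdict
# line says healthy and no member line says otherwise.
# The function name is hypothetical.
etcd_cluster_ok() {
  local out
  out=$(cat)
  printf '%s\n' "$out" | grep -q '^cluster is healthy$' || return 1
  ! printf '%s\n' "$out" | grep '^member ' | grep -qv 'is healthy'
}

# Demonstration with the output from this guide.
if etcd_cluster_ok <<'EOF'
member 3126a455a15179c6 is healthy: got healthy result from https://192.168.0.125:2379
member 40601cc6f27d1bf1 is healthy: got healthy result from https://192.168.0.123:2379
member 431013e88beab64c is healthy: got healthy result from https://192.168.0.126:2379
cluster is healthy
EOF
then
  echo "etcd cluster OK"
else
  echo "etcd cluster NOT healthy" >&2
fi
```

On a real node, the etcdctl cluster-health command from the verification step would be piped into etcd_cluster_ok instead of the here-document.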