CentOS 7 container

Start a base container from the CentOS 7 image: docker pull centos:7

docker cp elastic.tar.gz ab33344ef:/root

docker exec -ti ab33344ef /bin/bash

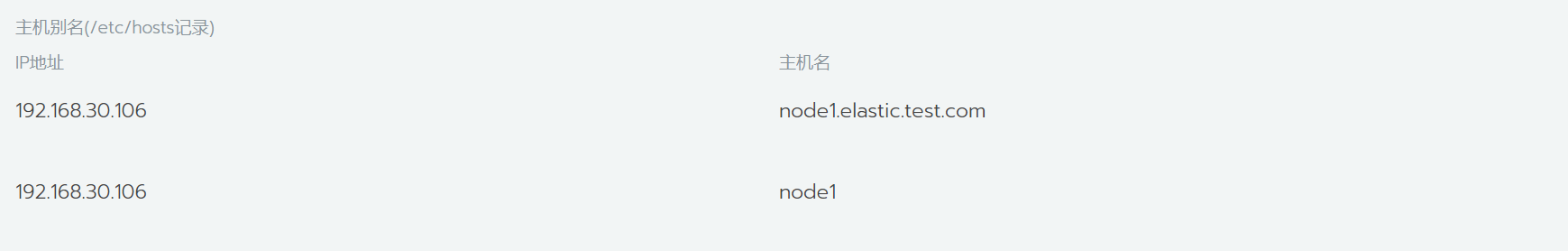

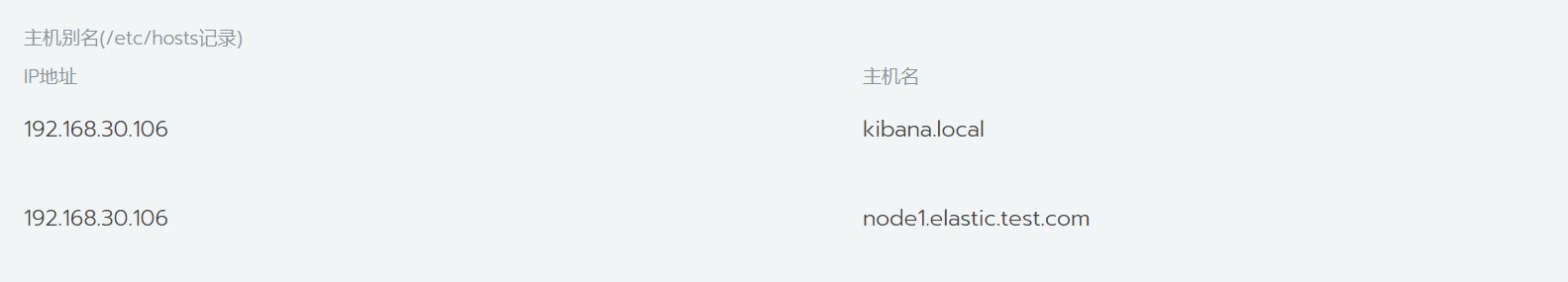

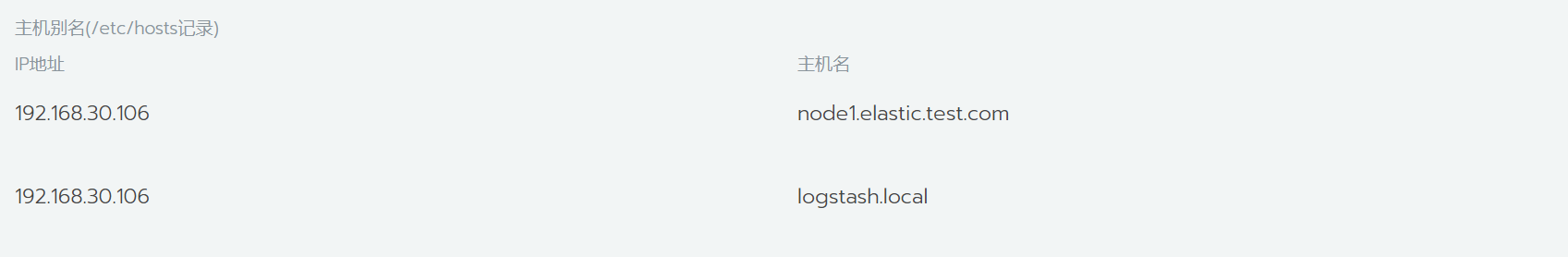

Contents of instance.yml:

    instances:
      - name: 'node1'
        dns: [ 'node1.elastic.test.com' ]
      - name: 'my-kibana'
        dns: [ 'kibana.local' ]
      - name: 'logstash'
        dns: [ 'logstash.local' ]
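If you would rather create instance.yml from a shell inside the container than in an editor, here is a minimal sketch. The function name write_instance_yml is hypothetical. The target directory is whatever the certutil command that follows reads from, which is ~/tmp/cert_blog in these notes.

```bash
#!/usr/bin/env bash
# Write the instances file consumed by `elasticsearch-certutil ... --in`.
# Hypothetical helper; the caller chooses the directory.
write_instance_yml() {
  local dir="$1"
  mkdir -p "$dir"
  # Quoted delimiter: the shell expands nothing inside the heredoc.
  cat > "$dir/instance.yml" <<'EOF'
instances:
  - name: 'node1'
    dns: [ 'node1.elastic.test.com' ]
  - name: 'my-kibana'
    dns: [ 'kibana.local' ]
  - name: 'logstash'
    dns: [ 'logstash.local' ]
EOF
}
```

Usage: write_instance_yml ~/tmp/cert_blog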

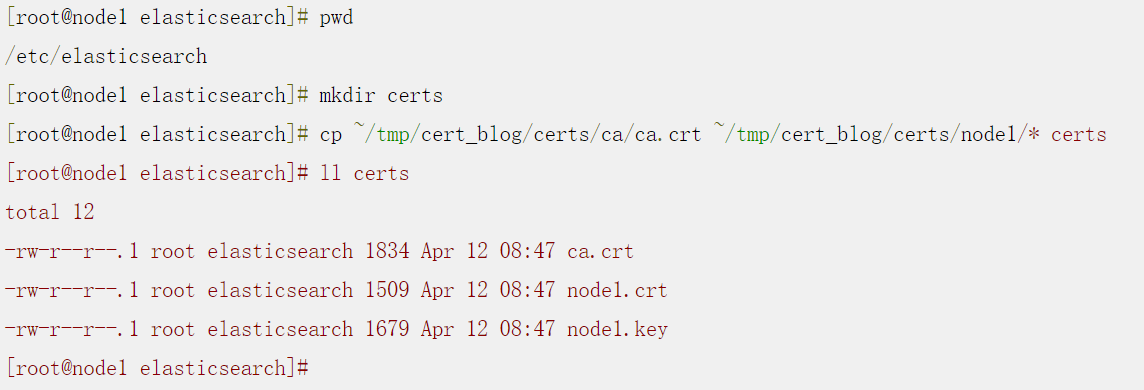

Generate the CA and server certificates (after Elasticsearch has been installed)

bin/elasticsearch-certutil cert ca --pem --in ~/tmp/cert_blog/instance.yml --out ~/tmp/cert_blog/certs.zip

unzip certs.zip -d ./certs
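The elasticsearch.yml settings that follow use flat paths (certs/node1.key, certs/ca.crt) that Elasticsearch resolves relative to its config directory, while the unzipped archive uses subdirectories. A copy step is therefore implied but not shown. The sketch below assumes the archive layout from the Elastic reference blog, with certs/ca/ca.crt and one certs/<name>/ directory per instance in instance.yml. The function name install_instance_certs is hypothetical.

```bash
#!/usr/bin/env bash
# Copy the CA certificate and one instance's cert/key into a flat directory.
# ASSUMPTION: unzipped layout is <src>/ca/ca.crt and <src>/<name>/<name>.{crt,key}.
install_instance_certs() {
  local src="$1" name="$2" dest="$3"
  mkdir -p "$dest"
  # Any missing file makes cp fail, and the function returns non-zero.
  cp "$src/ca/ca.crt" "$dest/" &&
    cp "$src/$name/$name.crt" "$src/$name/$name.key" "$dest/"
}
```

Usage: install_instance_certs ~/tmp/cert_blog/certs node1 <es-config-dir>/certs, and similarly my-kibana and logstash for the other containers. Elasticsearch runs as the elsearch user here, so the copied files must be readable by that user (for example, by changing their owner with chown).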

Configure elasticsearch.yml

    node.name: node1
    network.host: node1.elastic.test.com
    xpack.security.enabled: true
    xpack.security.http.ssl.enabled: true
    xpack.security.transport.ssl.enabled: true
    xpack.security.http.ssl.key: certs/node1.key
    xpack.security.http.ssl.certificate: certs/node1.crt
    xpack.security.http.ssl.certificate_authorities: certs/ca.crt
    xpack.security.transport.ssl.key: certs/node1.key
    xpack.security.transport.ssl.certificate: certs/node1.crt
    xpack.security.transport.ssl.certificate_authorities: certs/ca.crt
    discovery.seed_hosts: [ "node1.elastic.test.com" ]
    cluster.initial_master_nodes: [ "node1" ]
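Several settings refer to node1.elastic.test.com: network.host, discovery.seed_hosts, and the URLs that Kibana and Logstash connect to. That name must therefore resolve inside every container, and it must match the DNS name in the certificate for TLS hostname verification to succeed. If no DNS server knows the name, a hosts-file entry is one option. The helper below is a sketch with a hypothetical name, ensure_host_entry, and it adds the mapping only when it is not already present. The IP in the usage line is a placeholder, because these notes do not give the container's address.

```bash
#!/usr/bin/env bash
# Idempotently map a hostname to an IP in a hosts-format file (e.g. /etc/hosts).
ensure_host_entry() {
  local file="$1" ip="$2" host="$3"
  # Skip comment lines; look for the hostname in any name column (field 2+).
  if awk -v h="$host" '
      /^[ \t]*#/ { next }
      { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
      END { exit !found }
    ' "$file" 2>/dev/null; then
    return 0   # already mapped, leave the file untouched
  fi
  printf '%s\t%s\n' "$ip" "$host" >> "$file"
}
```

Usage: ensure_host_entry /etc/hosts <es-container-ip> node1.elastic.test.com. Docker manages /etc/hosts inside containers, so an edit made this way may not survive a restart and will not survive recreation. In that case, docker run --add-host node1.elastic.test.com:<ip> or Kubernetes hostAliases are the more durable equivalents.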

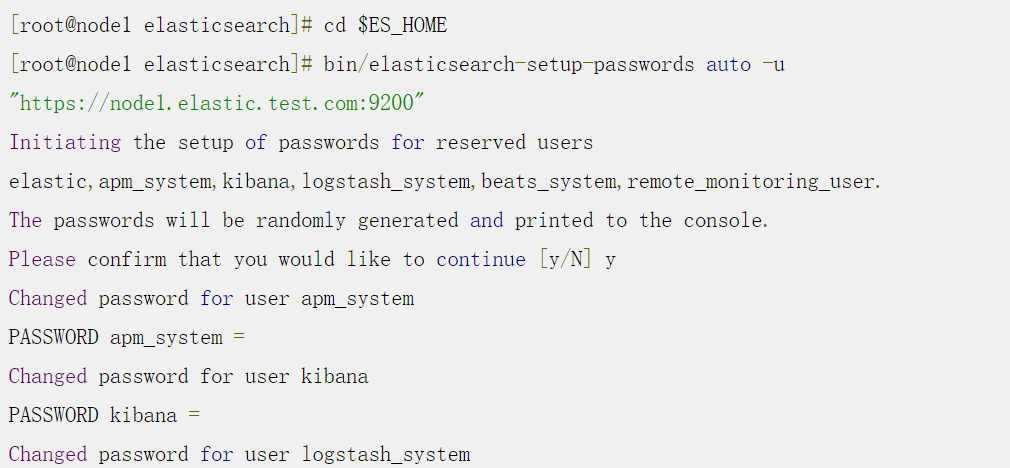

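Set the passwords of the built-in users. The tool connects to the node at the URL given with -u, so Elasticsearch must already be running when it is executed.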

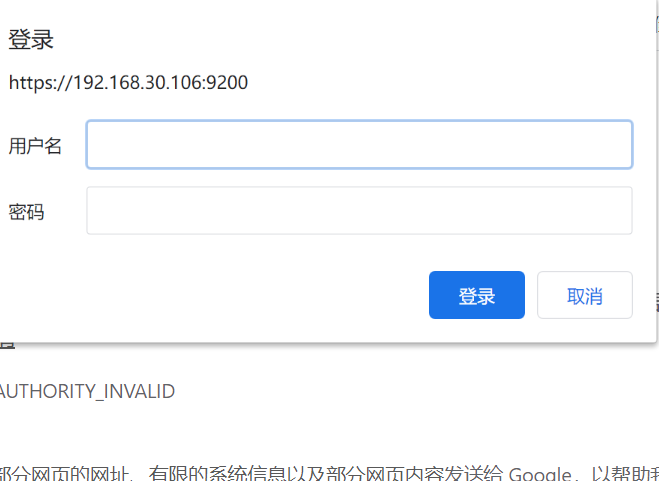

bin/elasticsearch-setup-passwords auto -u "https://node1.elastic.test.com:9200"
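The auto mode prints the generated passwords only once. These values are later needed for the espasswd variables of the Kibana and Logstash containers, so it helps to save the output (for example, by piping it through tee passwords.txt) and then extract individual users from the saved file. This sketch assumes that each password is printed on a line of the form PASSWORD <user> = <password>. Please check this against your own output. The function name get_builtin_password is hypothetical.

```bash
#!/usr/bin/env bash
# Print one user's password from saved `elasticsearch-setup-passwords auto` output.
# ASSUMPTION: lines look like "PASSWORD <user> = <password>".
# Reads stdin; exits non-zero if the user is not found.
get_builtin_password() {
  local user="$1"
  awk -v u="$user" '
    $1 == "PASSWORD" && $2 == u && $3 == "=" { print $4; found = 1 }
    END { exit !found }
  '
}
```

Usage: espasswd=$(get_builtin_password kibana < passwords.txt). kibana.yml logs in as kibana and the Logstash configs log in as elastic. The Kibana container's espasswd would therefore hold the kibana password, and the Logstash container's espasswd the elastic password.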

Start Elasticsearch

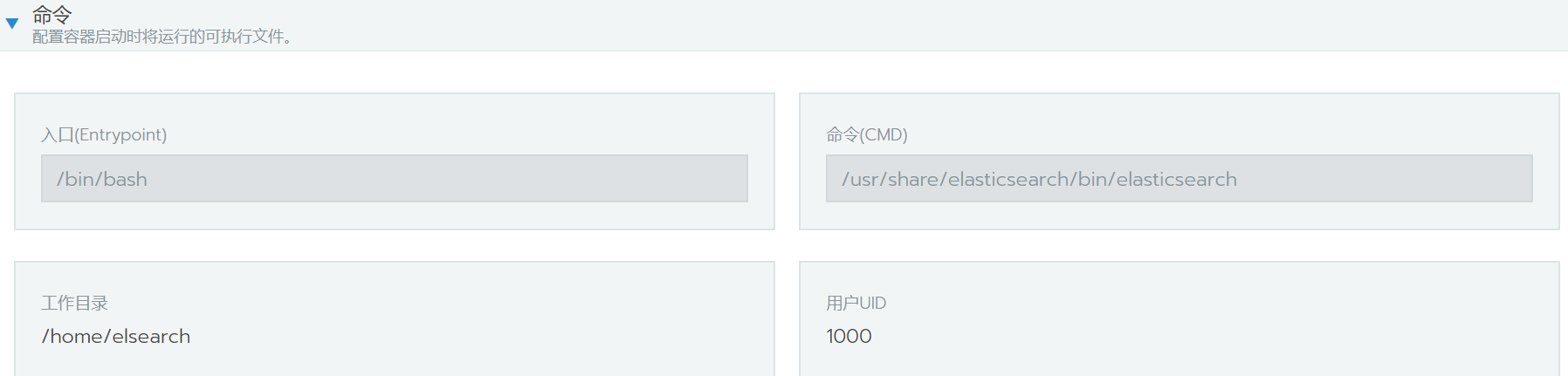

/usr/share/elasticsearch/bin/elasticsearch

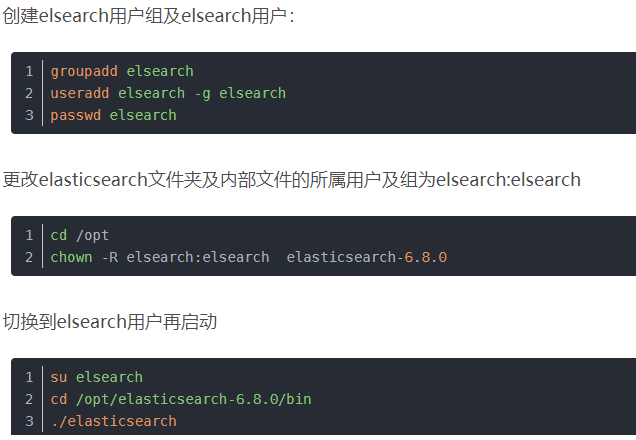

Elasticsearch refuses to run as root, so it runs as the non-root user elsearch:

[root@siem-cluster-node-09 /]# id elsearch

uid=1000(elsearch) gid=1000(elsearch) groups=1000(elsearch)

docker commit 2c7add484489 192.168.30.13/aabb/elastic

docker push 192.168.30.13/aabb/elastic

Elasticsearch container settings

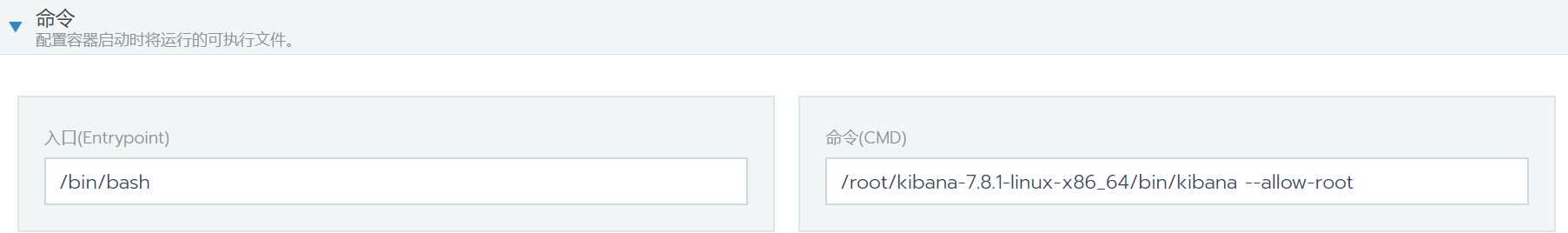

Kibana container settings

The certificates and keys referenced in kibana.yml are the ones generated in the Elasticsearch container, and the username and password are the built-in user account generated there.

    server.name: "my-kibana"
    server.host: "kibana.local"
    server.ssl.enabled: true
    server.ssl.certificate: /etc/kibana/config/certs/my-kibana.crt
    server.ssl.key: /etc/kibana/config/certs/my-kibana.key
    elasticsearch.hosts: ["https://node1.elastic.test.com:9200"]
    elasticsearch.username: "kibana"
    elasticsearch.password: "uFrZj1AW82vwSMeW02Cc"
    elasticsearch.ssl.certificateAuthorities: [ "/etc/kibana/config/certs/ca.crt" ]
    monitoring.enabled: false

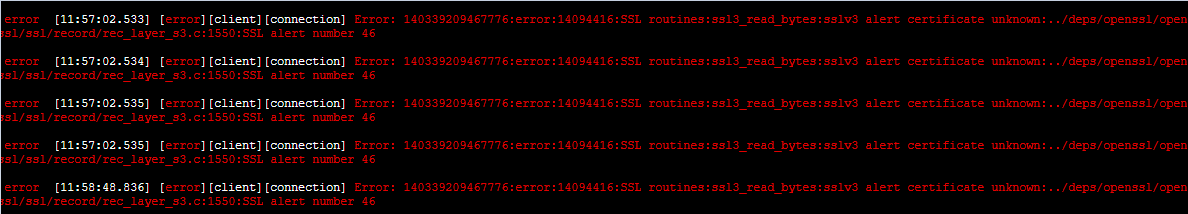

This error can be ignored; it does not affect the use of Kibana.

Access Kibana

Reference example:

https://www.elastic.co/cn/blog/configuring-ssl-tls-and-https-to-secure-elasticsearch-kibana-beats-and-logstash#create-ssl

Problem

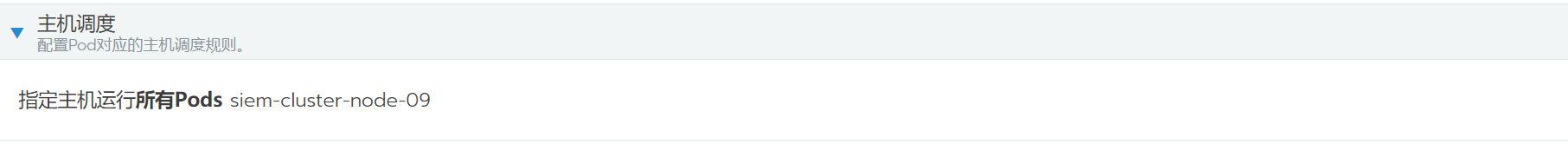

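Once the Elasticsearch workload is recreated, the built-in user accounts it generated become invalid, and Kibana and Logstash can no longer connect to the newly started Elasticsearch pod.

So once Elasticsearch has been created, it should not be recreated casually.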

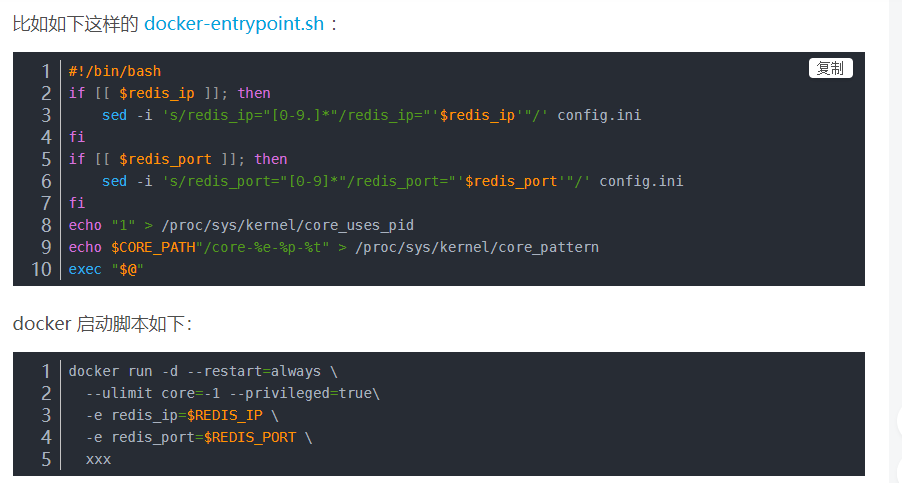

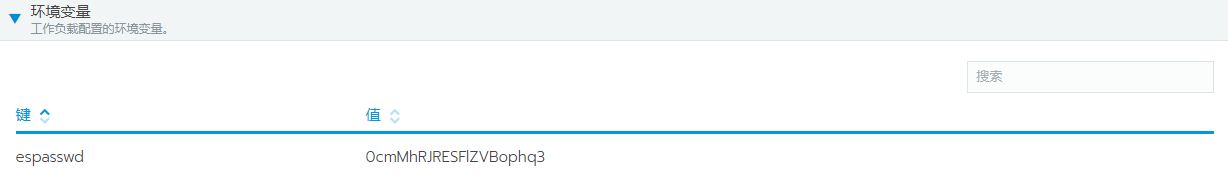

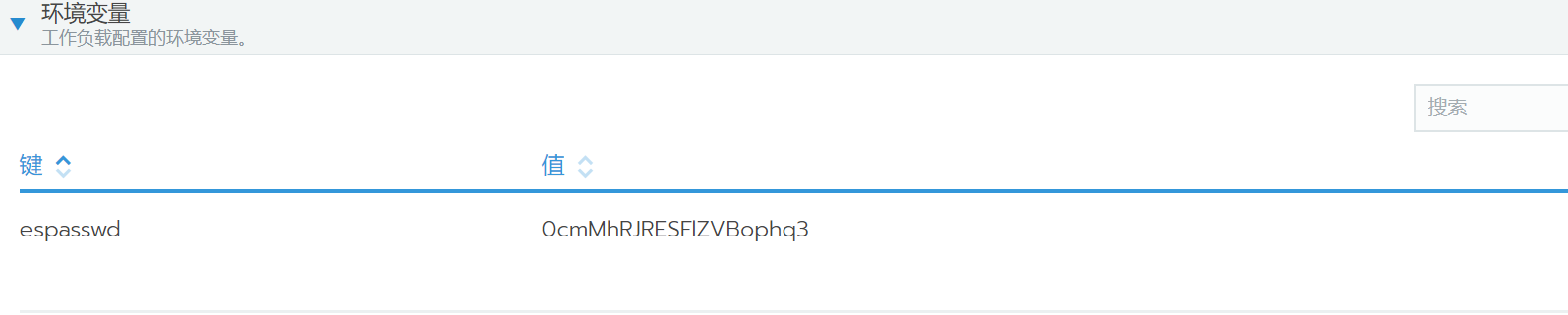

Substituting environment variables into configuration files inside the container

sed -i 's/redis_ip="[0-9.]*"/redis_ip="'$redis_ip'"/' config.ini

docker run -d --restart=always --ulimit core=-1 --privileged=true -e redis_ip=$REDIS_IP -e redis_port=$REDIS_PORT
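The sed command above inserts $redis_ip without checking it. If the container is started without -e redis_ip=..., config.ini silently ends up with redis_ip="". A slightly more defensive version of the same substitution is sketched below. The function name apply_redis_ip is hypothetical, while the config.ini line format redis_ip="x.x.x.x" comes from the sed command above.

```bash
#!/usr/bin/env bash
# Rewrite redis_ip="..." in a config file from the redis_ip environment variable,
# refusing to write an empty value when the variable is missing.
apply_redis_ip() {
  local file="$1"
  if [ -z "${redis_ip:-}" ]; then
    echo "redis_ip is not set" >&2
    return 1
  fi
  # Same pattern as the original command, with the expansion quoted.
  sed -i 's/redis_ip="[0-9.]*"/redis_ip="'"$redis_ip"'"/' "$file"
}
```

In the container's startup script, apply_redis_ip config.ini || exit 1 stops the container early with a clear message instead of starting with a broken configuration.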

Passing dynamic parameters to a container through environment variables

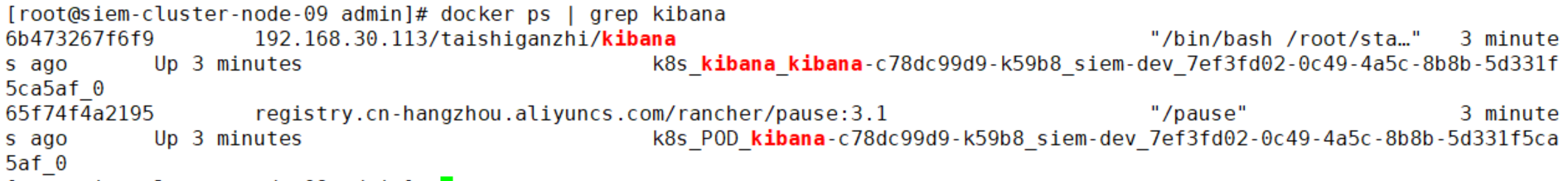

1. Find the target container

2. Open a shell in the container

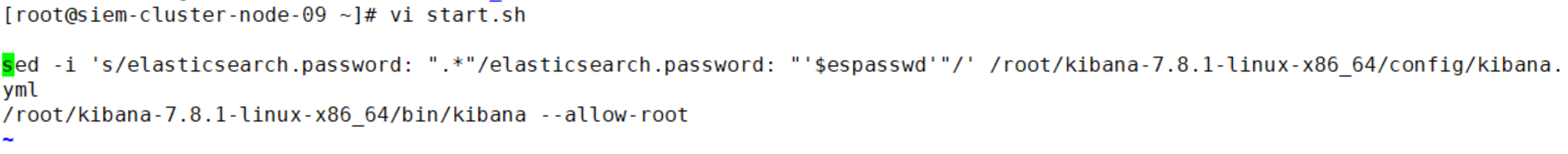

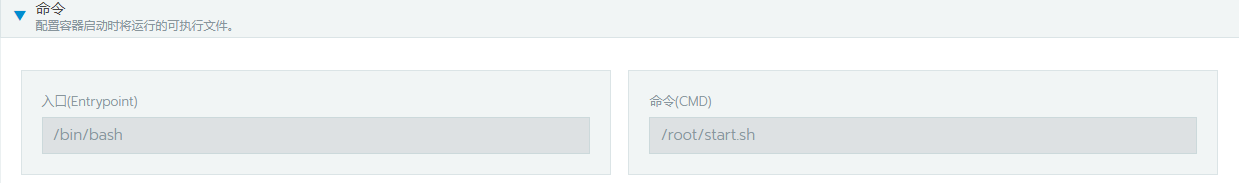

3. Create the startup script start.sh

sed -i 's/elasticsearch.password: ".*"/elasticsearch.password: "'$espasswd'"/' /root/kibana-7.8.1-linux-x86_64/config/kibana.yml

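The script does two things: (1) it rewrites the configuration file from environment variables, and (2) it starts the application.

4. Save the image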

docker commit 1d008b3396f6 192.168.30.11/9999/kibana:v2

docker push 192.168.30.11/9999/kibana:v2

5. Settings used when starting the container

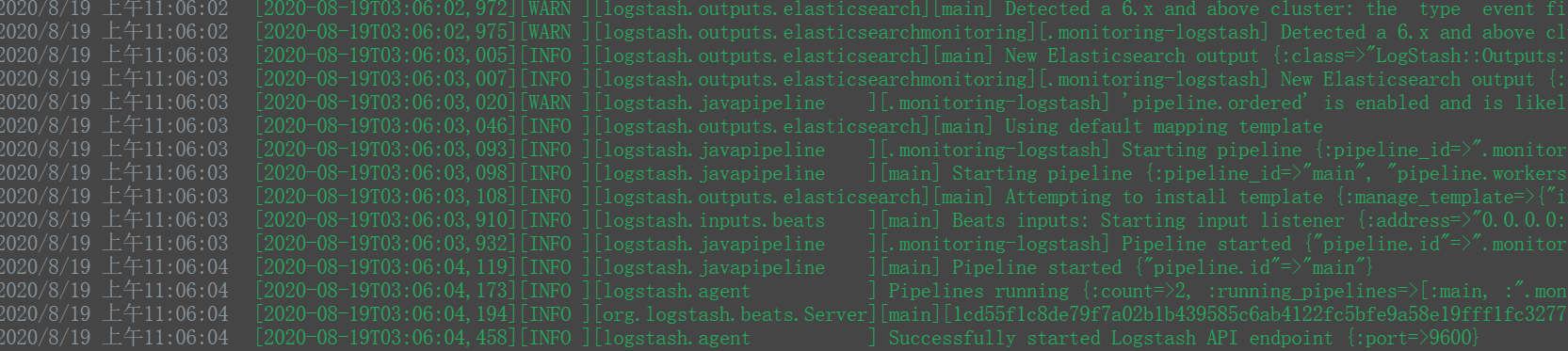
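Step 3 shows only the sed line of the Kibana start.sh. Below is a sketch of both steps it describes: rewrite the password from $espasswd, then start Kibana. Several assumptions apply. KIBANA_HOME defaults to the /root/kibana-7.8.1-linux-x86_64 path used in step 3. The container is assumed to run as root, and Kibana 7.x refuses to start as root without --allow-root. The function names are hypothetical. The pattern matches whatever follows elasticsearch.password:, so it works with either quote style.

```bash
#!/usr/bin/env bash
# Sketch of the Kibana start.sh described in step 3.
KIBANA_HOME="${KIBANA_HOME:-/root/kibana-7.8.1-linux-x86_64}"

# Step 1: write $espasswd into kibana.yml, failing loudly if it is missing.
set_kibana_password() {
  local config="$1"
  [ -n "${espasswd:-}" ] || { echo "espasswd is not set" >&2; return 1; }
  sed -i 's/^elasticsearch.password: .*/elasticsearch.password: "'"$espasswd"'"/' "$config"
}

# Step 2: exec Kibana so it replaces the shell and stays in the foreground
# as the container's main process.
# ASSUMPTION: running as root, which requires --allow-root in Kibana 7.x.
start_kibana() {
  set_kibana_password "$KIBANA_HOME/config/kibana.yml" || exit 1
  exec "$KIBANA_HOME/bin/kibana" --allow-root
}

# start.sh would end by calling: start_kibana
```

The passwords generated by setup-passwords auto are alphanumeric. A password containing / or & would need escaping before it is used in the sed replacement.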

Logstash container settings

logstash.yml:

    node.name: logstash.local
    path.config: /etc/logstash/conf.d/*.conf
    xpack.monitoring.enabled: true
    xpack.monitoring.elasticsearch.username: 'elastic'
    xpack.monitoring.elasticsearch.password: 'AokJY29yc13rvdKuFzsM'
    xpack.monitoring.elasticsearch.hosts: [ 'https://node1.elastic.test.com:9200' ]
    xpack.monitoring.elasticsearch.ssl.certificate_authority: /etc/logstash/config/certs/ca.crt

Pipeline configuration (/etc/logstash/conf.d/example.conf):

    input {
      beats {
        port => 5044
        ssl => true
        ssl_key => '/etc/logstash/config/certs/logstash.pkcs8.key'
        ssl_certificate => '/etc/logstash/config/certs/logstash.crt'
      }
    }
    output {
      elasticsearch {
        hosts => ["https://node1.elastic.test.com:9200"]
        cacert => '/etc/logstash/config/certs/ca.crt'
        user => 'elastic'
        password => 'AokJY29yc13rvdKuFzsM'
      }
    }

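Create start.sh (vi start.sh) with the following content. The sed patterns match the password value however it is quoted, so they also work with the single-quoted values in the Logstash configs. A pattern that expects double quotes, such as password => ".*", matches nothing against a single-quoted value, and sed then leaves the old password in place without reporting an error.

    sed -i 's/xpack.monitoring.elasticsearch.password: .*/xpack.monitoring.elasticsearch.password: "'$espasswd'"/' /root/logstash-7.8.1/config/logstash.yml
    sed -i 's/password => [^ }]*/password => "'$espasswd'"/' /etc/logstash/conf.d/example.conf
    /root/logstash-7.8.1/bin/logstash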
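sed exits with status 0 even when its pattern matches nothing. A quoting mismatch between the config file and the sed pattern therefore goes unnoticed until Logstash fails to authenticate. A small guard, sketched below with the hypothetical name replace_or_fail, makes start.sh stop immediately in that case. The pattern is a basic regular expression shared by grep and sed, and neither the pattern nor the replacement may contain the | delimiter.

```bash
#!/usr/bin/env bash
# Replace PATTERN with REPLACEMENT in FILE, but fail if PATTERN is absent,
# because sed itself reports success even when it changes nothing.
replace_or_fail() {
  local file="$1" pattern="$2" replacement="$3"
  if ! grep -q -- "$pattern" "$file"; then
    echo "pattern not found in $file: $pattern" >&2
    return 1
  fi
  sed -i "s|$pattern|$replacement|" "$file"
}
```

For example: replace_or_fail /root/logstash-7.8.1/config/logstash.yml 'xpack.monitoring.elasticsearch.password: .*' "xpack.monitoring.elasticsearch.password: \"$espasswd\"" || exit 1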

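The ELK stack is up and running.

After the Elasticsearch pod is recreated, exec into the Elasticsearch container to generate the passwords again. Then update the espasswd environment variable of the Kibana and Logstash pods with the new values.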

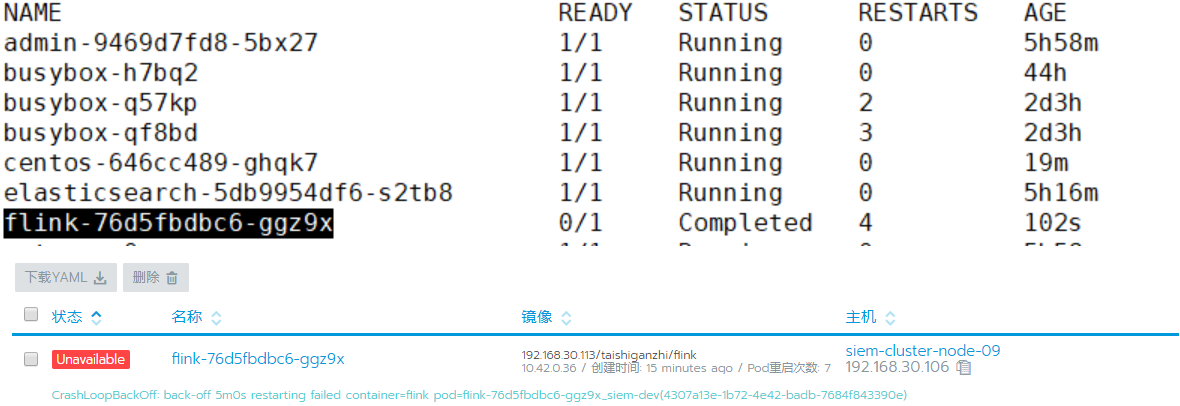

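Flink container installation

The Flink container failed to start. Analysis showed that the startup script launches Flink as background processes, so the container exits as soon as the script finishes.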

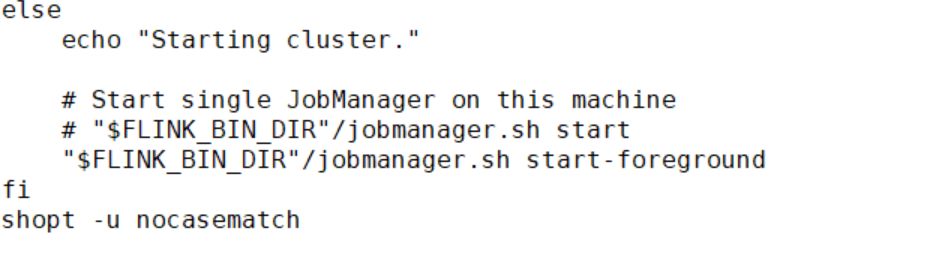

To run Flink as a foreground process, start-cluster.sh has to be modified by hand.

Edit the file with vi start-cluster.sh:

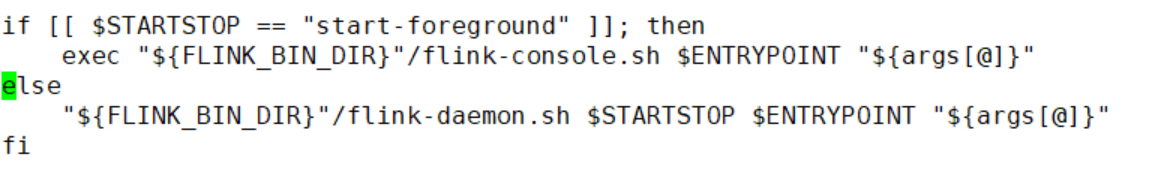

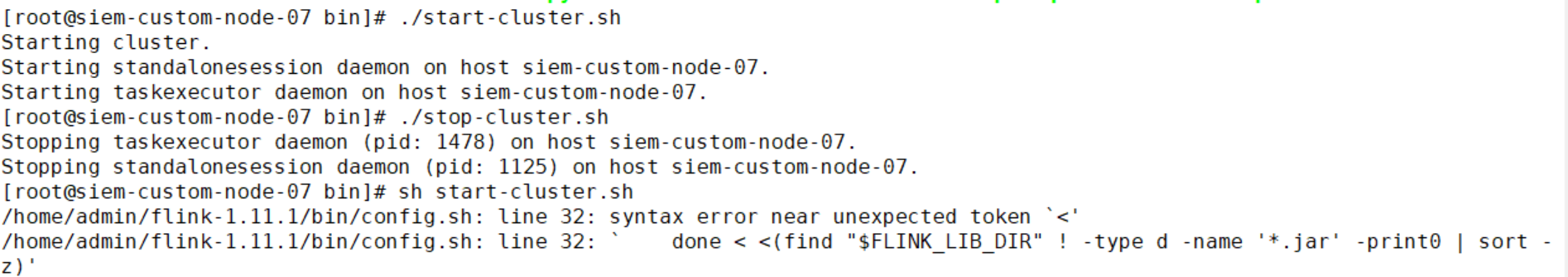

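How the script is invoked changes the result:

Correct: ./start-cluster.sh

Wrong: sh start-cluster.sh, which reports a syntax error. ./start-cluster.sh runs the script with the interpreter named in its shebang line (bash for Flink's scripts), whereas sh runs it with /bin/sh, which may not accept bash-specific syntax.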

jobmanager.sh start runs the JobManager as a background process.

jobmanager.sh start-foreground runs it as a foreground process.

In a Docker container, the main process must run in the foreground.
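Another way to meet this requirement without editing start-cluster.sh is a container entrypoint for a single-node setup. It starts the TaskManager in daemon mode and then uses exec to run the JobManager with start-foreground, so the JobManager becomes the container's main process. This is an alternative sketch, not the start-cluster.sh change shown above. It assumes that FLINK_HOME points at the Flink distribution and that bin/taskmanager.sh accepts start, as jobmanager.sh does. The function name flink_entrypoint is hypothetical.

```bash
#!/usr/bin/env bash
# Entrypoint sketch for a single-node Flink container.
# ASSUMPTION: $FLINK_HOME/bin contains taskmanager.sh and jobmanager.sh.
flink_entrypoint() {
  local flink_home="${FLINK_HOME:?FLINK_HOME must be set}"
  # TaskManager in daemon (background) mode.
  "$flink_home/bin/taskmanager.sh" start || return 1
  # exec replaces this shell with the foreground JobManager, so the container
  # keeps running exactly as long as the JobManager process does.
  exec "$flink_home/bin/jobmanager.sh" start-foreground
}
```

The trade-off is that the container watches only the JobManager. If the background TaskManager dies, the container keeps running.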

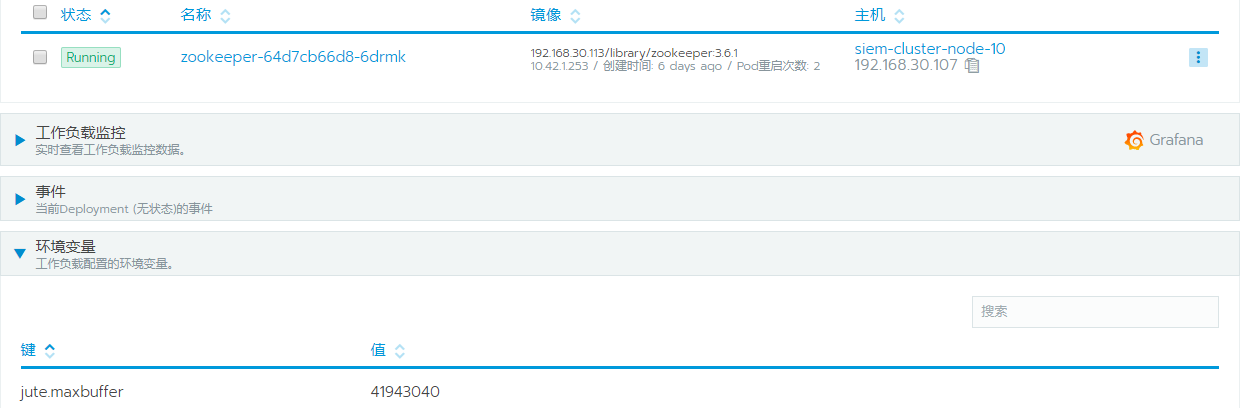

Kafka deployment

docker pull kafkamanager/kafka-manager

docker tag 14a7c1e556f7 192.168.30.113/library/kafka

docker push 192.168.30.113/library/kafka

Kafka depends on ZooKeeper, so ZooKeeper must be deployed first.

Kafka container settings

Kafka started successfully.
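Deploying ZooKeeper first does not guarantee that it is accepting connections by the time Kafka starts. A common pattern is to make the Kafka container's startup script wait for ZooKeeper's client port, which defaults to 2181. The sketch below uses bash's /dev/tcp redirection, so bash must be installed in the image, and it uses timeout from coreutils. The function name wait_for_port and the host name zookeeper in the usage line are hypothetical; in Kubernetes the host would typically be a Service name.

```bash
#!/usr/bin/env bash
# Wait until HOST:PORT accepts TCP connections, trying ATTEMPTS times (default 30).
wait_for_port() {
  local host="$1" port="$2" attempts="${3:-30}" i=1
  while [ "$i" -le "$attempts" ]; do
    # timeout guards against a connect() that hangs on a filtered port
    if timeout 2 bash -c ": > /dev/tcp/$host/$port" 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    [ "$i" -le "$attempts" ] && sleep 1   # pause only between attempts
  done
  echo "$host:$port not reachable after $attempts attempts" >&2
  return 1
}
```

Usage: wait_for_port zookeeper 2181 60 && exec bin/kafka-server-start.sh config/server.properties. An open port only shows that ZooKeeper is listening, not that it is healthy. In Kubernetes, an init container is the usual place for this kind of check.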