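Background

The release of BERT showed that unsupervised pre-trained language models could serve as the "shoulders of giants" for a wide range of NLP tasks, and GPT-2 and XLNet, which followed soon after, pushed NLP practitioners' expectations ever higher. Because these landmark models were open-sourced, once we understand the ideas in their papers we can build on weights that were pre-trained with enormous compute. That lets us quickly run experiments on our own datasets and even use the models in real business applications.

GitHub already hosts Pretrained-BERT implementations in several languages and frameworks, all following the TensorFlow code Google originally released and all with fairly detailed documentation. This article shows how to call PyTorch Pretrained-BERT with minimal code and fine-tune it for a text classification task.

The official pytorch-pretrained-BERT example for text classification is at the link below, for anyone who wants to read it directly: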

https://github.com/huggingface/pytorch-pretrained-BERT/blob/master/examples/run_classifier.py

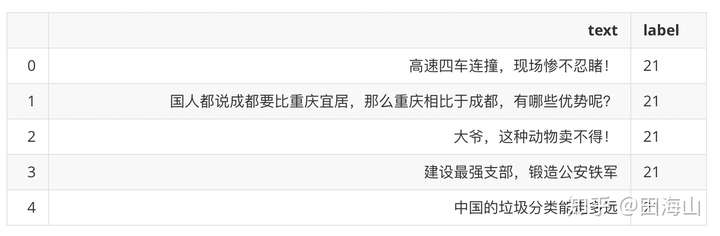

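Data

The data consists of titles and their categories. For example, the title "中国的垃圾分类能走多远" ("How far can China's waste sorting go?") belongs to the "社会" (Society) category. There are 28 categories, each with 1,000 training examples and 1,000 test examples. The data has been uploaded to Baidu Netdisk and can be downloaded here:

https://pan.baidu.com/s/1r4SI6-IizlCcsyMGL7RU8Q

Extraction code: 6awx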

Load libraries

import os
import sys
import pickle
import pandas as pd
import numpy as np
from concurrent.futures import ThreadPoolExecutor
import torch
from sklearn.preprocessing import LabelEncoder
from torch.optim import optimizer
from torch.utils.data import DataLoader, RandomSampler, SequentialSampler, TensorDataset
from torch.nn import CrossEntropyLoss, BCEWithLogitsLoss
from tqdm import tqdm_notebook, trange
from pytorch_pretrained_bert import BertTokenizer, BertModel, BertForMaskedLM, BertForSequenceClassification
from pytorch_pretrained_bert.optimization import BertAdam, WarmupLinearSchedule
from sklearn.metrics import precision_recall_curve, classification_report
import matplotlib.pyplot as plt
%matplotlib inline

Load data

# Read the data with pandas
data = pd.read_pickle("title_category.pkl")
# Rename the columns
data.columns = ['text', 'label']

Label encoding

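Because the labels are Chinese strings, they have to be converted to integer IDs before they can be used as model input. sklearn's LabelEncoder does this conversion quickly.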
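LabelEncoder's behavior is easy to picture: it collects the distinct labels, sorts them, and uses each label's position in that sorted list as its ID. That fixed mapping is also what lets `le.inverse_transform` turn predicted IDs back into category names later. The stdlib-only sketch below illustrates the idea; it is not sklearn's actual code, and `fit_label_ids`, `encode` and `decode` are hypothetical helper names. Apart from "社会", the category names are illustrative.

```python
def fit_label_ids(labels):
    """Collect distinct labels in sorted order and map each to its index."""
    classes = sorted(set(labels))
    to_id = {label: i for i, label in enumerate(classes)}
    return classes, to_id


def encode(labels, to_id):
    """Replace each label string with its integer ID (analogous to le.transform)."""
    return [to_id[label] for label in labels]


def decode(ids, classes):
    """Map integer IDs, e.g. model predictions, back to label strings
    (analogous to le.inverse_transform)."""
    return [classes[i] for i in ids]


labels = ["社会", "体育", "社会", "科技"]
classes, to_id = fit_label_ids(labels)
ids = encode(labels, to_id)
print(classes, ids)
print(decode(ids, classes))
```

Because the order is sorted rather than order-of-appearance, the same set of 28 categories always yields the same IDs regardless of how the rows are ordered.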

le = LabelEncoder()
le.fit(data.label.tolist())
data['label'] = le.transform(data.label.tolist())

Inspect the data
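Title length is worth inspecting at this point, because the `get_input` method further down expects a `max_seq_len` chosen from the distribution of text lengths. bert-base-chinese is a character-level model, so the character count of a Chinese title is a reasonable proxy for its token count. This is an approximation: runs of Latin letters or digits are tokenized differently. The sketch below is a minimal illustration under that assumption; `choose_max_seq_len` and its 95% coverage default are hypothetical choices, not part of the original article.

```python
import math


def choose_max_seq_len(texts, coverage=0.95, bert_limit=512):
    """Return a max_seq_len that fits `coverage` of the texts without truncation.

    Assumes roughly one token per character (the case for Chinese characters
    with bert-base-chinese) and reserves 2 positions for [CLS] and [SEP].
    """
    if not texts:
        raise ValueError("texts must not be empty")
    lengths = sorted(len(t) for t in texts)
    # Nearest-rank percentile: the length at or below which `coverage` of texts fall
    idx = max(0, math.ceil(coverage * len(lengths)) - 1)
    # +2 for [CLS]/[SEP], capped at BERT's 512-position limit
    return min(lengths[idx] + 2, bert_limit)


titles = ["中国的垃圾分类能走多远", "短标题", "一个稍微长一点的新闻标题示例"]
print(choose_max_seq_len(titles, coverage=1.0))
```

With the DataFrame loaded above, this could be called as `choose_max_seq_len(data.text.tolist())`. A high percentile is used instead of the maximum so that a few unusually long titles do not force every sequence to be padded to their length.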

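Preparing the training data

The pre-trained BERT model used here is a character-level model trained on the Chinese Wikipedia corpus; in the list of models Google provides, it is named 'bert-base-chinese'. For the names of models trained on corpora in more languages, see: https://github.com/huggingface/pytorch-pretrained-BERT/blob/master/pytorch_pretrained_bert/modeling.py

The first time the code below runs there is no local cache, so the model is downloaded automatically; in practice the download is quite fast. Note that the do_lower_case parameter must be explicitly set to False.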
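The model is character-level because BERT's basic tokenizer surrounds every CJK ideograph with whitespace before WordPiece runs, so each Chinese character ends up as its own token. The sketch below imitates only that CJK-splitting step using the standard library. It is a simplified illustration that skips lower-casing, punctuation splitting and WordPiece. `is_cjk` and `split_cjk` are hypothetical names, not part of `pytorch_pretrained_bert`, and the code-point ranges are modeled on the ones BERT's reference tokenizer treats as Chinese characters.

```python
def is_cjk(ch):
    """True if ch falls in one of the CJK ideograph blocks BERT treats as Chinese."""
    cp = ord(ch)
    return (
        0x4E00 <= cp <= 0x9FFF
        or 0x3400 <= cp <= 0x4DBF
        or 0x20000 <= cp <= 0x2A6DF
        or 0x2A700 <= cp <= 0x2B73F
        or 0x2B740 <= cp <= 0x2B81F
        or 0x2B820 <= cp <= 0x2CEAF
        or 0xF900 <= cp <= 0xFAFF
        or 0x2F800 <= cp <= 0x2FA1F
    )


def split_cjk(text):
    """Surround every CJK character with spaces, then split on whitespace."""
    spaced = "".join(f" {ch} " if is_cjk(ch) else ch for ch in text)
    return spaced.split()


print(split_cjk("中国的垃圾分类能走多远"))
print(split_cjk("BERT模型 fine-tuning"))
```

In the real tokenizer, non-Chinese pieces such as "fine-tuning" would then be split further on punctuation and into WordPiece sub-tokens; the Chinese characters stay one token each.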

# Tokenizer
bert_tokenizer = BertTokenizer.from_pretrained('bert-base-chinese', do_lower_case=False)
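The wrapper class that follows turns each tokenized title into three aligned sequences. The first holds token IDs with [CLS]/[SEP] added and zero padding. The second is an attention mask that is 1 on real tokens and 0 on padding. The third holds all-zero segment IDs, since the input is a single sentence. The standalone sketch below walks through the same truncate, add-special-tokens, pad steps on a toy vocabulary. The vocabulary and its IDs are made up for illustration; in the real pipeline the IDs come from the bert-base-chinese vocabulary via `bert_tokenizer.convert_tokens_to_ids`.

```python
# Hypothetical toy vocabulary for illustration only; real IDs come from
# the bert-base-chinese vocabulary via bert_tokenizer.convert_tokens_to_ids.
TOY_VOCAB = {"[PAD]": 0, "[CLS]": 1, "[SEP]": 2, "[UNK]": 3,
             "垃": 4, "圾": 5, "分": 6, "类": 7}


def truncate_and_pad(tokens, max_seq_len, vocab=TOY_VOCAB):
    """Return (ids, mask, segment) lists, each exactly max_seq_len long."""
    if max_seq_len < 2:
        raise ValueError("max_seq_len must leave room for [CLS] and [SEP]")
    # Truncate so that [CLS] + tokens + [SEP] fits in max_seq_len
    tokens = ["[CLS]"] + tokens[:max_seq_len - 2] + ["[SEP]"]
    # Look up IDs, falling back to [UNK] for tokens not in the vocabulary
    ids = [vocab.get(t, vocab["[UNK]"]) for t in tokens]
    padding = [0] * (max_seq_len - len(ids))
    mask = [1] * len(ids) + padding   # 1 = real token, 0 = padding
    segment = [0] * max_seq_len       # single sentence: every position is segment 0
    return ids + padding, mask, segment


ids, mask, segment = truncate_and_pad(list("垃圾分类"), max_seq_len=8)
print(ids)
print(mask)
print(segment)
```

With max_seq_len=8, the four characters of 垃圾分类 become six real positions ([CLS], four characters, [SEP]) followed by two padding positions, which is exactly where the mask switches from 1 to 0.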

# Wrapper class for preprocessing
class DataPrecessForSingleSentence(object):
    """
    Preprocess single-sentence text for BERT.
    """
    def __init__(self, bert_tokenizer, max_workers=10):
        """
        bert_tokenizer : the tokenizer
        max_workers : number of threads in the thread pool
        """
        self.bert_tokenizer = bert_tokenizer
        # Create a thread pool
        self.pool = ThreadPoolExecutor(max_workers=max_workers)

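    # Build model inputs from texts and labels
    def get_input(self, dataset, max_seq_len=30):
        """
        Tokenize, convert to IDs, truncate and pad the input texts using multiple
        threads (multiprocessing has some problems inside notebooks), producing
        sequences that can be fed directly to the model.

        Args:
            dataset : pandas DataFrame with two columns: the first is the text,
                the second is the integer label ID.
            max_seq_len : target sequence length. Choose it beforehand from the
                distribution of text lengths; it can be any integer <= 512
                (BERT's maximum sequence length).

        Returns:
            seqs : sequences with '[CLS]' prepended and '[SEP]' appended,
                zero-padded at the end if still shorter than max_seq_len.
            seq_masks : sequences of 0s and 1s, the same length as seq, marking
                which positions are meaningful: 1 for a real token, 0 for padding.
            seq_segments : same shape as seq; all 0 because the input is a single sentence.
            labels : integer class IDs produced by LabelEncoder
                (0 to 27 for the 28 categories here).
        """
        sentences = dataset.iloc[:, 0].tolist()
        labels = dataset.iloc[:, 1].tolist()
        # Tokenize
        tokens_seq = list(
            self.pool.map(self.bert_tokenizer.tokenize, sentences))
        # Build fixed-length sequences and their masks
        result = list(
            self.pool.map(self.trunate_and_pad, tokens_seq,
                          [max_seq_len] * len(tokens_seq)))
        seqs = [i[0] for i in result]
        seq_masks = [i[1] for i in result]
        seq_segments = [i[2] for i in result]
        return seqs, seq_masks, seq_segments, labels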

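    def trunate_and_pad(self, seq, max_seq_len):
        """
        1. This class handles single sentences. Following BERT's input format,
           the special tokens '[CLS]' and '[SEP]' are added at the start and end
           of the sequence, so the sequence without them must be at most
           max_seq_len - 2 long; longer sequences are truncated.
        2. The result has the form ['[CLS]', seq, '[SEP]']; if it is shorter
           than max_seq_len, it is padded with 0.

        Args:
            seq : input token sequence; here, a single sentence.
            max_seq_len : sequence length after adding '[CLS]' and '[SEP]'.

        Returns:
            seq : seq with '[CLS]' prepended and '[SEP]' appended, zero-padded
                at the end if still shorter than max_seq_len.
            seq_mask : sequence of 0s and 1s, the same length as seq, marking
                which positions are meaningful: 1 for a real token, 0 for padding.
            seq_segment : same shape as seq; all 0 because the input is a single sentence.
        """
        # Truncate sequences that are too long
        if len(seq) > (max_seq_len - 2):
            seq = seq[0:(max_seq_len - 2)]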