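Scope of this article

- An AWS global (non-China) account is used

- Some familiarity with AWS, Terraform, and Kubernetes is assumed

- A new, dedicated VPC is created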

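Introduction to Terraform

Terraform is a cloud resource orchestration tool. In its own words: "Terraform is an open-source infrastructure as code software".

AWS offers a comparable service, CloudFormation. We chose Terraform because it is more general-purpose: one tool can manage resources on AWS, Azure, Alibaba Cloud, and other common cloud platforms.

For usage details, see: https://registry.terraform.io/namespaces/hashicorp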

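Prerequisites

- An AWS global (non-China) account with administrator permissions

- The server that runs the scripts has an IAM role attached, and that role has administrator permissions (far more than is actually needed; this is only for the demo, and no least-privilege configuration was done)

- Terraform is installed on the server (see the official Terraform website for installation instructions)

- awscli and kubectl are installed on the server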
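The scripts in this article do not pin a Terraform or provider version. A minimal sketch of a version constraint file follows. The file name versions.tf and both version constraints are assumptions for illustration and are not part of the original setup, so adjust them to whatever you have actually installed and tested.

```hcl
# versions.tf (hypothetical file name; Terraform loads every .tf file in the directory)
terraform {
  # Assumed lower bound: the source-style required_providers syntax below
  # needs Terraform 0.13 or later
  required_version = ">= 0.13"

  required_providers {
    aws = {
      source = "hashicorp/aws"
      # Assumed constraint; replace it with the release you have tested against
      version = "~> 5.0"
    }
  }
}
```

With a constraint like this in place, terraform init downloads a matching provider release and records it in the dependency lock file.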

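Notes

For readability, the tf scripts are split by AWS resource type. Terraform loads every .tf file in a directory, so putting all of the configuration into a single tf file works as well.

Script contents

The scripts are commented, so the meaning of each section is not explained again here.

main.tf

Adjust the parameters in this script to suit your needs. None of the other scripts need to be modified.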

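# author zhenglisai

# Use the permissions of the IAM role attached to the server running this script
provider "aws" {
  region = "us-west-2"
}

# Look up the Availability Zones that are currently available
data "aws_availability_zones" "available" {
  state = "available"
}

# Local values; every value below can be changed to suit your needs.
locals {
  # EKS cluster name
  cluster_name = "tf-cluster-zhenglisai"
  # EKS cluster role name
  cluster_role_name = "tf-cluster-zhenglisai"
  # EKS worker node group name
  node_name = "tf-node-zhenglisai"
  # EKS worker node role name
  node_role_name = "tf-node-zhenglisai"
  # Name of the launch template used by the EKS worker nodes
  launch_template_name = "tf-launch_template-zhenglisai"
  # image_id differs per region; this ID only applies to us-west-2. For other regions, see the AWS documentation
  launch_template_image_id = "ami-0cb182e3037115aa0"
  # Instance type used by the EKS worker nodes
  launch_template_instance_type = "t3.small"
  # Key pair for logging in to the servers; it must already exist under EC2 key pairs
  launch_template_key_name = "your-key-name"
  # CIDR block of the VPC used by the EKS cluster
  vpc_cidr_block = "10.2.0.0/16"
  # CIDR block of the first subnet used by the EKS cluster
  subnet_1_cidr_block = "10.2.0.0/20"
  # CIDR block of the second subnet used by the EKS cluster
  subnet_2_cidr_block = "10.2.16.0/20"
}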
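Because launch_template_image_id is tied to one region, the AMI could instead be looked up at plan time. The sketch below reads the recommended Amazon Linux 2 EKS-optimized AMI ID from the public SSM parameter that AWS publishes per Kubernetes version. The data source name eks_ami is hypothetical, and the sketch assumes that an Amazon Linux 2 AMI is still published for the Kubernetes version of your cluster; it is an alternative to consider, not what the scripts in this article do.

```hcl
# Hypothetical alternative to the hard-coded launch_template_image_id local.
# Reads the recommended Amazon Linux 2 EKS-optimized AMI ID for the provider's
# region, using the Kubernetes version reported by the cluster in eks.tf.
data "aws_ssm_parameter" "eks_ami" {
  name = "/aws/service/eks/optimized-ami/${aws_eks_cluster.eks-cluster.version}/amazon-linux-2/recommended/image_id"
}

# In ec2.tf, the launch template could then use:
#   image_id = data.aws_ssm_parameter.eks_ami.value
```

The bootstrap script called in ec2.tf is the one shipped with the Amazon Linux 2 image, which is why the sketch stays on that image family.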

eks.tf

# author zhenglisai

# Cluster
resource "aws_eks_cluster" "eks-cluster" {
  name     = local.cluster_name
  role_arn = aws_iam_role.eks-cluster.arn

  vpc_config {
    subnet_ids         = [aws_subnet.subnet_1.id, aws_subnet.subnet_2.id]
    security_group_ids = [aws_security_group.eks-cluster.id]
  }
}

# Worker nodes
resource "aws_eks_node_group" "eks-node" {
  cluster_name    = aws_eks_cluster.eks-cluster.name
  node_group_name = local.node_name
  node_role_arn   = aws_iam_role.eks-node.arn
  subnet_ids      = [aws_subnet.subnet_1.id, aws_subnet.subnet_2.id]

  scaling_config {
    desired_size = 2
    max_size     = 3
    min_size     = 1
  }

  launch_template {
    version = aws_launch_template.eks-template.latest_version
    id      = aws_launch_template.eks-template.id
  }
}

ec2.tf

# author zhenglisai

# Launch template for the worker nodes
resource "aws_launch_template" "eks-template" {
  name                   = local.launch_template_name
  image_id               = local.launch_template_image_id
  instance_type          = local.launch_template_instance_type
  key_name               = local.launch_template_key_name
  vpc_security_group_ids = [aws_security_group.eks-node.id]
  # Run the EKS bootstrap script at first boot so the instance joins the cluster
  user_data = base64encode("#!/bin/bash\n/etc/eks/bootstrap.sh ${aws_eks_cluster.eks-cluster.name}")
}

iam.tf

# author zhenglisai

# Look up the AWS managed policies used by the cluster and node roles
data "aws_iam_policy" "AmazonEKSClusterPolicy" {
  name = "AmazonEKSClusterPolicy"
}

data "aws_iam_policy" "AmazonEKSWorkerNodePolicy" {
  name = "AmazonEKSWorkerNodePolicy"
}

data "aws_iam_policy" "AmazonEC2ContainerRegistryReadOnly" {
  name = "AmazonEC2ContainerRegistryReadOnly"
}

data "aws_iam_policy" "AmazonEKS_CNI_Policy" {
  name = "AmazonEKS_CNI_Policy"
}

# Trust policy that lets EC2 instances (the worker nodes) assume a role
data "aws_iam_policy_document" "ec2-instance" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["ec2.amazonaws.com"]
    }
  }
}

# Trust policy that lets the EKS service assume a role
data "aws_iam_policy_document" "eks-instance" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["eks.amazonaws.com"]
    }
  }
}

# Role used by the EKS cluster
resource "aws_iam_role" "eks-cluster" {
  name                = local.cluster_role_name
  assume_role_policy  = data.aws_iam_policy_document.eks-instance.json
  managed_policy_arns = [data.aws_iam_policy.AmazonEKSClusterPolicy.arn]
}

# Role used by the worker nodes
resource "aws_iam_role" "eks-node" {
  name               = local.node_role_name
  assume_role_policy = data.aws_iam_policy_document.ec2-instance.json
  managed_policy_arns = [
    data.aws_iam_policy.AmazonEC2ContainerRegistryReadOnly.arn,
    data.aws_iam_policy.AmazonEKS_CNI_Policy.arn,
    data.aws_iam_policy.AmazonEKSWorkerNodePolicy.arn,
  ]
}
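To my knowledge, newer releases of the AWS provider document the managed_policy_arns argument of aws_iam_role as deprecated. A possible replacement for the node role, using one aws_iam_role_policy_attachment per policy, is sketched below. The resource label and the map keys (ecr, cni, worker) are hypothetical, and the sketch only works if the managed_policy_arns argument is removed from aws_iam_role.eks-node at the same time.

```hcl
# Hypothetical replacement for managed_policy_arns on aws_iam_role.eks-node.
# Remove the managed_policy_arns argument from that role before using this.
resource "aws_iam_role_policy_attachment" "eks-node" {
  # One attachment per managed policy; the keys are arbitrary labels
  for_each = {
    ecr    = data.aws_iam_policy.AmazonEC2ContainerRegistryReadOnly.arn
    cni    = data.aws_iam_policy.AmazonEKS_CNI_Policy.arn
    worker = data.aws_iam_policy.AmazonEKSWorkerNodePolicy.arn
  }

  role       = aws_iam_role.eks-node.name
  policy_arn = each.value
}
```

With separate attachments, the node group in eks.tf would probably also want depends_on = [aws_iam_role_policy_attachment.eks-node], so that the nodes are not started before the policies are attached. The same pattern would apply to the cluster role.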

securitygroup.tf

# author zhenglisai

# Security group for the EKS cluster: allow all inbound traffic from inside the VPC and all outbound traffic
resource "aws_security_group" "eks-cluster" {
  name        = "eks-cluster"
  description = "Allow local vpc"
  vpc_id      = aws_vpc.eks.id

  ingress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = [local.vpc_cidr_block]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "eks-cluster"
  }
}

# Security group for the worker nodes, with the same rules
resource "aws_security_group" "eks-node" {
  name        = "eks-node"
  description = "Allow local vpc"
  vpc_id      = aws_vpc.eks.id

  ingress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = [local.vpc_cidr_block]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "eks-node"
  }
}

vpc.tf

# author zhenglisai

resource "aws_vpc" "eks" {
  cidr_block           = local.vpc_cidr_block
  enable_dns_hostnames = true

  tags = {
    Name = "eks"
  }
}

# Define the subnets
resource "aws_subnet" "subnet_1" {
  vpc_id                  = aws_vpc.eks.id
  map_public_ip_on_launch = true
  cidr_block              = local.subnet_1_cidr_block
  availability_zone       = data.aws_availability_zones.available.names[0]

  tags = {
    Name                     = "subnet_1"
    "kubernetes.io/role/elb" = "1"
  }
}

resource "aws_subnet" "subnet_2" {
  vpc_id                  = aws_vpc.eks.id
  map_public_ip_on_launch = true
  cidr_block              = local.subnet_2_cidr_block
  availability_zone       = data.aws_availability_zones.available.names[1]

  tags = {
    Name                     = "subnet_2"
    "kubernetes.io/role/elb" = "1"
  }
}

# Create the internet gateway
resource "aws_internet_gateway" "igw-eks" {
  vpc_id = aws_vpc.eks.id

  tags = {
    Name = "igw-eks"
  }
}

# Add a default route to the VPC's main route table
data "aws_route_table" "route_table_eks" {
  vpc_id = aws_vpc.eks.id

  filter {
    name   = "association.main"
    values = ["true"]
  }
}

resource "aws_route" "route_table_eks" {
  route_table_id         = data.aws_route_table.route_table_eks.id
  destination_cidr_block = "0.0.0.0/0"
  gateway_id             = aws_internet_gateway.igw-eks.id
}
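The main route table is found above through a data source with a filter. The aws_vpc resource also exports a main_route_table_id attribute, so a shorter variant is possible. The sketch below is an alternative, not the original author's method: it replaces the aws_route resource above (applying both would try to create the same route twice) and makes the aws_route_table data source unnecessary.

```hcl
# Hypothetical variant of the aws_route resource in vpc.tf.
# Use it instead of the original, and drop the aws_route_table data source.
resource "aws_route" "route_table_eks" {
  # The VPC resource exposes the ID of its main route table directly
  route_table_id         = aws_vpc.eks.main_route_table_id
  destination_cidr_block = "0.0.0.0/0"
  gateway_id             = aws_internet_gateway.igw-eks.id
}
```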

Save all of the files above in one directory, for example eks_demo.

Running the deployment

Enter the directory and initialize Terraform

cd eks_demo && terraform init

Once initialization completes, start the Terraform deployment

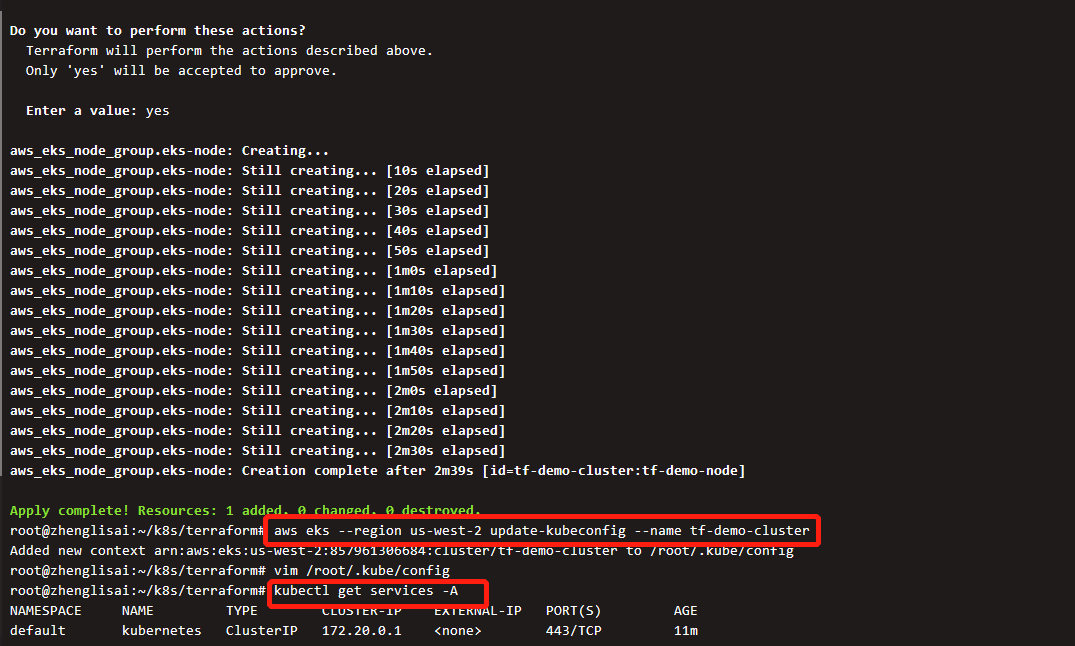

terraform apply

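After the command starts, Terraform checks the resources. Once the check finishes, type yes to confirm and begin the deployment.

The whole deployment takes about 15 minutes.

After the deployment completes, configure kubectl access, and you can then start interacting with EKS.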
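One way to make the kubectl step easier to repeat is to let Terraform print the command. The sketch below adds an output whose value is the AWS CLI call that writes a kubeconfig entry for the new cluster. The file name outputs.tf and the output name kubeconfig_command are hypothetical, and the region is hard-coded to match the provider block in main.tf.

```hcl
# outputs.tf (hypothetical file name)
# Prints the AWS CLI command that adds the new cluster to the local kubeconfig.
# The region must match the one set in the provider block in main.tf.
output "kubeconfig_command" {
  value = "aws eks update-kubeconfig --region us-west-2 --name ${aws_eks_cluster.eks-cluster.name}"
}
```

After terraform apply finishes, run the printed command on the same server and then try kubectl get nodes. This assumes kubectl is run with the same IAM role that created the cluster, since the identity that creates an EKS cluster is the one granted access to it by default.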

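Deleting the resources

When the experiment is over, if you do not need to keep the resources, run the following in the directory that contains the tf scripts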

terraform destroy

This deletes all of the resources created by Terraform.