Cluster Setup

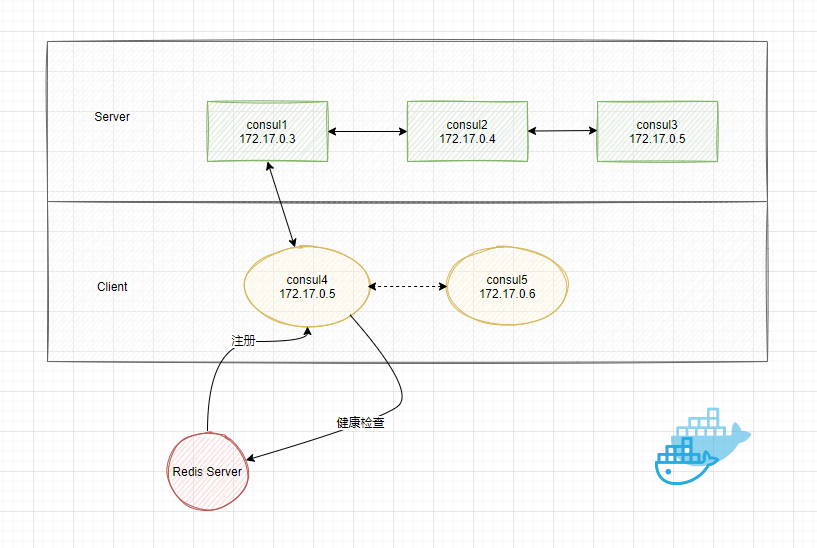

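We use Docker to build a single-datacenter cluster of three Servers, then start a Client container as the entry point for service registration and discovery, and simulate how the pieces work together.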

Server startup commands

# Pull the latest image

$ docker pull consul

# Start server1, mapping container port 8500 to host port 8900 to expose the UI

$ docker run -d --name=consul1 -p 8900:8500 -e CONSUL_BIND_INTERFACE=eth0 consul agent --server=true --bootstrap-expect=3 --client=0.0.0.0 -ui

076d7658951753309e2b052315190921aa89ddf9e63b7055558867769747a954

# Look up the IP address of the server1 container

$ docker inspect consul1

[

{

…

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "1cf2fe7613c4b319b47058da0f61460f6d70c9829645741d0c9eadc23f7846af",

"EndpointID": "18840278673f45308e2b0333f054aeb89e817af0d53a1a5bcd84202017937cdf",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.3",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:32:ac:10:00:03",

"DriverOpts": null

}

}

}

]
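
Reading the address out of the full `docker inspect` JSON works, but the command's `-f` option can print just the field that is needed. The following is a minimal sketch, assuming the container is attached to the default bridge network as in the output above; `container_ip` is a hypothetical helper name, not part of the original setup.

```shell
# Hypothetical helper: print the IP address of a container.
# Uses docker inspect's Go-template output instead of the full JSON document.
container_ip() {
  docker inspect \
    -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' \
    "$1"
}

# Example (requires the consul1 container from above to be running):
# container_ip consul1
```

The printed address is the value passed to `--join` when the remaining agents are started.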

# Start server2 and join it to the cluster

$ docker run -d --name=consul2 -e CONSUL_BIND_INTERFACE=eth0 consul agent --server=true --client=0.0.0.0 --join 172.17.0.3

2543282e512bf6150bf340dde19460f85166568dc706bcd9b797eb66bbe9437a

# Start server3 and join it to the cluster

$ docker run -d --name=consul3 -e CONSUL_BIND_INTERFACE=eth0 consul agent --server=true --client=0.0.0.0 --join 172.17.0.3

cd333ecbb37d828560c4fdb4b65b103fa343565408e1d1e62eaf8a8f61711263

Once all three are up, follow the logs to watch the servers interact:

$ docker logs -f consul1 | grep "agent.server"

$ docker logs -f consul2 | grep "agent.server"

$ docker logs -f consul3 | grep "agent.server"

The logs show that server2 and server3 have joined the cluster and recognize server1 as the leader.
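
Grepping the logs is one way to find the leader. The Consul CLI also has an `operator raft list-peers` subcommand that prints the Raft state of every server. The sketch below assumes that the subcommand's table has a header line and keeps the State value in its fourth column (Node, ID, Address, State, ...); `raft_leader` is a hypothetical helper name.

```shell
# Hypothetical helper: print the node name of the current Raft leader.
# $1 is the name of any server container in the cluster.
# Assumption: line 1 is a header and column 4 holds the State (leader/follower).
raft_leader() {
  docker exec "$1" consul operator raft list-peers |
    awk 'NR > 1 && $4 == "leader" { print $1 }'
}

# Example: raft_leader consul1
```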

Log in to any server and check the cluster members; all three servers are listed as alive:

$ docker exec -it consul1 sh

/ # consul members

Node Address Status Type Build Protocol DC Segment

076d76589517 172.17.0.3:8301 alive server 1.10.1 2 dc1 <all>

2543282e517b 172.17.0.4:8301 alive server 1.10.1 2 dc1 <all>

cd333ecbb37d 172.17.0.5:8301 alive server 1.10.1 2 dc1 <all>
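
Because server1 was started with `--bootstrap-expect=3`, the cluster only bootstraps once three servers are present, so it can be useful to check the member list in a script rather than by eye. A minimal sketch, assuming the column order shown above (Node, Address, Status, Type, ...); `count_alive_servers` is a hypothetical helper name.

```shell
# Hypothetical helper: count members whose Status is "alive" and Type is "server".
# Reads `consul members` output on stdin; column 3 = Status, column 4 = Type.
count_alive_servers() {
  awk 'NR > 1 && $3 == "alive" && $4 == "server" { n++ } END { print n + 0 }'
}

# Example (no -it, so the output can be piped cleanly):
# docker exec consul1 consul members | count_alive_servers
```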

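Open the web UI through the port exposed by server1 (port 8900 on the Docker host, given the 8900:8500 mapping used above).

Besides the Services and Nodes panels there is also a Key/Value panel: Consul also provides a simple KV store.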

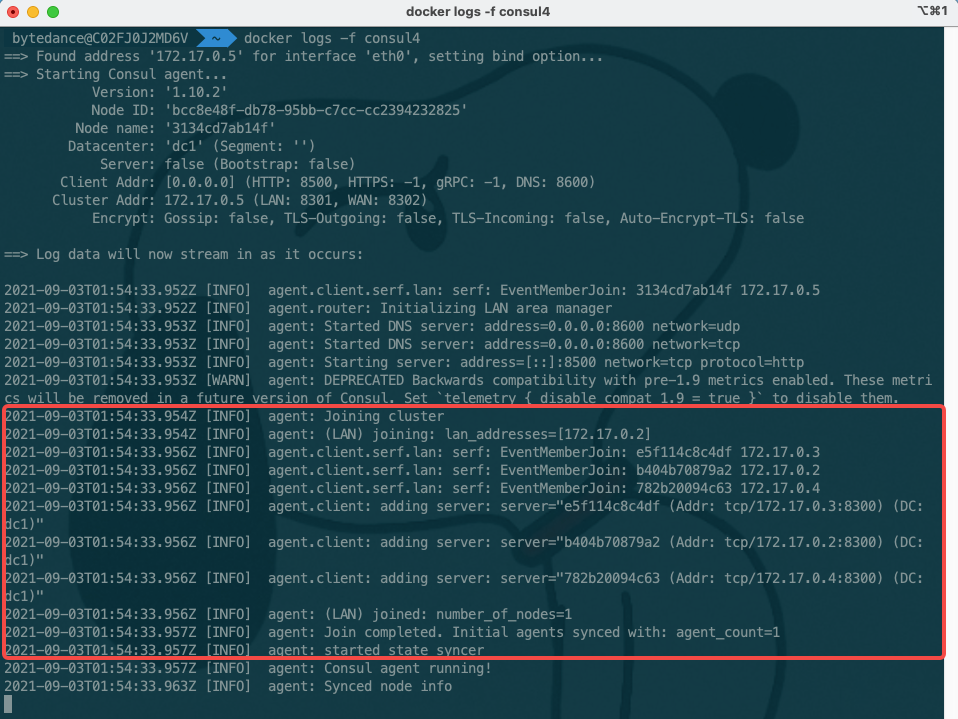
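
The KV store can also be exercised from the command line with the `consul kv put` and `consul kv get` subcommands. The sketch below wraps them in two hypothetical helpers (`kv_put`, `kv_get`); the key `demo/greeting` is an illustrative name that does not appear in the original setup.

```shell
# Hypothetical helpers wrapping the consul kv subcommands inside a container.
# kv_put <container> <key> <value>
kv_put() { docker exec "$1" consul kv put "$2" "$3"; }
# kv_get <container> <key>
kv_get() { docker exec "$1" consul kv get "$2"; }

# Example: write through one server and read the value back through another.
# kv_put consul1 demo/greeting hello
# kv_get consul2 demo/greeting
```

Writing through one server and reading through another is a quick way to see that the data is replicated across the cluster.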

Client startup command

$ docker run -d --name=consul4 -e CONSUL_BIND_INTERFACE=eth0 consul agent --server=false --client=0.0.0.0 --join 172.17.0.2

3134cd7ab14f021c335b0b4e7d4c5bdcad890531d80fa13273a3657702233112

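Check the Consul cluster members again (this listing appears to come from a separate run, so the node IDs and addresses differ from the earlier output):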

$ docker exec -it consul2 consul members

Node Address Status Type Build Protocol DC Segment

782b20094c63 172.17.0.4:8301 alive server 1.10.2 2 dc1 <all>

b404b70879a2 172.17.0.2:8301 alive server 1.10.2 2 dc1 <all>

e5f114c8c4df 172.17.0.3:8301 alive server 1.10.2 2 dc1 <all>

3134cd7ab14f 172.17.0.5:8301 alive client 1.10.2 2 dc1 <default>

A complete Consul cluster is now up and running.

Service Registration

Write a configuration file for a redis service, redis-service.json:

$ cat <<EOF > redis-service.json

{

"services": [

{

"name": "redis",

"tags": ["master"],

"address": "127.0.0.1",

"port": 6379

}

]

}

EOF

Copy it into the /consul/config directory of the client container (consul4) and reload Consul:

$ docker cp redis-service.json consul4:/consul/config

$ docker exec -it consul4 consul reload

Configuration reload triggered

Check the web UI again: redis now appears in the list of services.
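
The registration can also be confirmed without the web UI. The `consul catalog services` subcommand prints one service name per line, so a script can test for an exact match. A minimal sketch; `service_registered` is a hypothetical helper name.

```shell
# Hypothetical helper: succeed if the named service is present in the catalog.
# service_registered <container> <service-name>
service_registered() {
  # grep -x matches the whole line, -q suppresses output
  docker exec "$1" consul catalog services | grep -qx "$2"
}

# Example:
# service_registered consul4 redis && echo "redis is registered"
```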

Health Checks

Modify the redis service configuration to add a health check:

$ cat <<EOF > redis-service.json

{

"services": [

{

"name": "redis",

"tags": ["master"],

"address": "172.17.0.6",

"port": 6379,

"check": {

"name": "nc",

"args": ["nc", "172.17.0.6", "6379"],

"interval": "3s"

}

}

]

}

EOF

Copy the file into the container and reload again; this time the reload fails:

$ docker exec -it consul4 consul reload

Error reloading: Unexpected response code: 500 (Failed reloading services: Failed to register service "redis": Scripts are disabled on this agent; to enable, configure 'enable_script_checks' or 'enable_local_script_checks' to true)

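It turns out that script checks have to be enabled when the client agent starts. Because the name consul4 is already taken, the existing container has to be removed first (for example with docker rm -f consul4), and redis-service.json has to be copied in and reloaded again once the new container is running: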

$ docker run -d --name=consul4 -e CONSUL_BIND_INTERFACE=eth0 -e CONSUL_LOCAL_CONFIG='{"enable_script_checks": true}' consul agent --server=false --client=0.0.0.0 --join 172.17.0.2

The log shows that the health check is now running, and the web UI shows it passing.
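
The reload error above is specific to script-style checks. As an alternative sketch, Consul also has a built-in TCP check type that only tests whether a connection to the given address can be opened, so it does not depend on `enable_script_checks`. The file name `redis-service-tcp.json`, the check name `redis-tcp`, and the `timeout` value are illustrative choices, not taken from the original setup; the file is meant to replace redis-service.json in /consul/config, not to sit alongside it.

```shell
# Sketch: the same redis service, health-checked with Consul's built-in TCP check
# instead of running nc as a script.
cat <<'EOF' > redis-service-tcp.json
{
  "services": [
    {
      "name": "redis",
      "tags": ["master"],
      "address": "172.17.0.6",
      "port": 6379,
      "check": {
        "name": "redis-tcp",
        "tcp": "172.17.0.6:6379",
        "interval": "3s",
        "timeout": "1s"
      }
    }
  ]
}
EOF
```

The rest of this walkthrough keeps the original script check, which is why the API output further down reports a check named nc.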

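Now stop the redis service (here the redis container is controlled through Docker Desktop) to simulate a redis failure.

Check the log and the web UI again.

Consul's health check has now marked the redis service as unhealthy. Querying the HTTP service-discovery API exposed by the client, filtered to passing instances only, returns an empty list, as expected:

$ docker exec -it consul4 curl 'http://127.0.0.1:8500/v1/health/service/redis?passing=true'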

[]

Start the redis service again and compare:

$ docker exec -it consul4 curl 'http://127.0.0.1:8500/v1/health/service/redis?passing=true'

[{"Node":{"ID":"8a73e872-c378-7197-0cf8-9f6f08c529ea","Node":"5f9387a8e3af","Address":"172.17.0.5","Datacenter":"dc1","TaggedAddresses":{"lan":"172.17.0.5","lan_ipv4":"172.17.0.5","wan":"172.17.0.5","wan_ipv4":"172.17.0.5"},"Meta":{"consul-network-segment":""},"CreateIndex":412,"ModifyIndex":412},"Service":{"ID":"redis","Service":"redis","Tags":["master"],"Address":"172.17.0.6","TaggedAddresses":{"lan_ipv4":{"Address":"172.17.0.6","Port":6379},"wan_ipv4":{"Address":"172.17.0.6","Port":6379}},"Meta":null,"Port":6379,"Weights":{"Passing":1,"Warning":1},"EnableTagOverride":false,"Proxy":{"Mode":"","MeshGateway":{},"Expose":{}},"Connect":{},"CreateIndex":416,"ModifyIndex":416},"Checks":[{"Node":"5f9387a8e3af","CheckID":"serfHealth","Name":"Serf Health Status","Status":"passing","Notes":"","Output":"Agent alive and reachable","ServiceID":"","ServiceName":"","ServiceTags":[],"Type":"","Interval":"","Timeout":"","ExposedPort":0,"Definition":{},"CreateIndex":413,"ModifyIndex":413},{"Node":"5f9387a8e3af","CheckID":"service:redis","Name":"nc","Status":"passing","Notes":"","Output":"","ServiceID":"redis","ServiceName":"redis","ServiceTags":["master"],"Type":"script","Interval":"","Timeout":"","ExposedPort":0,"Definition":{},"CreateIndex":416,"ModifyIndex":662}]}]
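
A response like the one above is hard to read in raw form, and a caller doing service discovery usually only needs each instance's address and port. The following sketch reduces the response to `address:port` lines. It assumes `jq` is installed wherever the pipeline runs; `healthy_endpoints` is a hypothetical helper name.

```shell
# Hypothetical helper: turn a /v1/health/service/<name> response (on stdin)
# into one "address:port" line per returned instance.
# Assumption: jq is available on the machine running this function.
healthy_endpoints() {
  jq -r '.[] | "\(.Service.Address):\(.Service.Port)"'
}

# Example (no -it, so the JSON can be piped cleanly):
# docker exec consul4 curl -s 'http://127.0.0.1:8500/v1/health/service/redis?passing=true' | healthy_endpoints
```

When the service is unhealthy and the API returns an empty list, the helper prints nothing.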

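A few other scenarios

- If we start another client, consul5, it can also see the redis service registered through consul4, because registrations are synced to the catalog held by the servers: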

$ docker run -d --name=consul5 -e CONSUL_BIND_INTERFACE=eth0 -e CONSUL_LOCAL_CONFIG='{"enable_script_checks": true}' consul agent --server=false --client=0.0.0.0 --join 172.17.0.2

$ docker exec -it consul5 curl 'http://127.0.0.1:8500/v1/health/service/redis?passing=true'

# Returns the redis service information

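- If we stop consul1 (the server-side leader), the remaining two servers hold a new election; in this run consul2 became the new leader.

The redis service information is still available from both consul4 and consul5:

$ docker exec -it consul4 curl 'http://127.0.0.1:8500/v1/health/service/redis?passing=true'

# Returns the redis service information

$ docker exec -it consul5 curl 'http://127.0.0.1:8500/v1/health/service/redis?passing=true'

# Returns the redis service information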
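
A three-server cluster needs two servers for quorum, so it keeps working with one server down, but there is a short window during the election when no leader exists. The sketch below polls the `/v1/status/leader` HTTP endpoint through a client until a leader is reported. `wait_for_leader` is a hypothetical helper name, and the one-second polling interval is an arbitrary choice.

```shell
# Hypothetical helper: poll /v1/status/leader via a container until a leader appears.
# Usage: wait_for_leader <container> [max_attempts]
wait_for_leader() {
  client=$1
  attempts=${2:-10}
  i=0
  while [ "$i" -lt "$attempts" ]; do
    leader=$(docker exec "$client" curl -s http://127.0.0.1:8500/v1/status/leader)
    # The endpoint returns a JSON string; an empty string ("") means no leader yet.
    if [ -n "$leader" ] && [ "$leader" != '""' ]; then
      echo "$leader"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Example: stop the current leader, then wait for the new one.
# docker stop consul1
# wait_for_leader consul4
```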

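Summary

This article showed how to build a highly available Consul cluster with Docker and used the logs to follow how Servers communicate with each other, how Clients communicate with each other, and how Servers and Clients communicate. It then took a Redis service as an example, configured service registration and a health check for it, and observed how they behave in practice.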