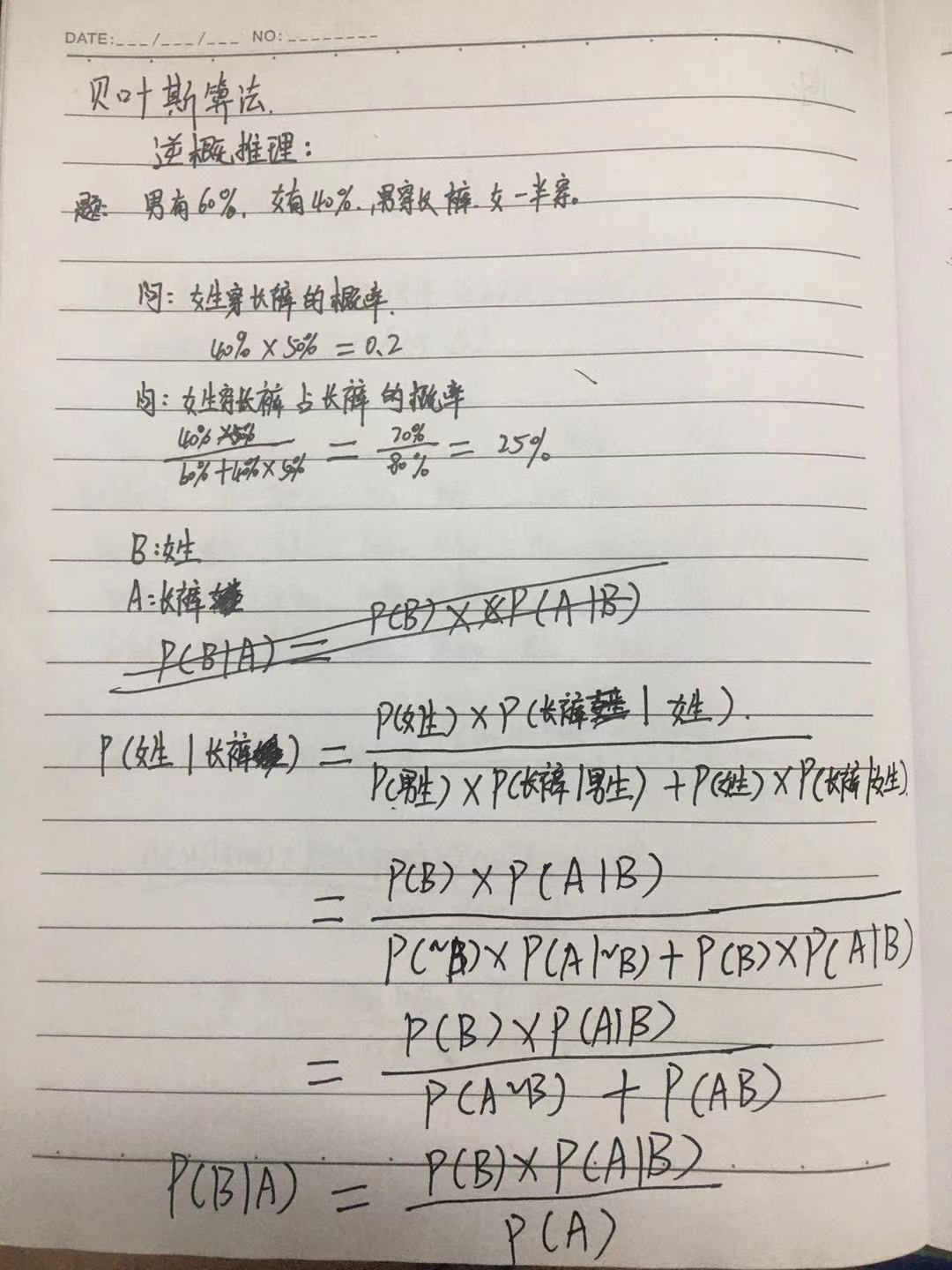

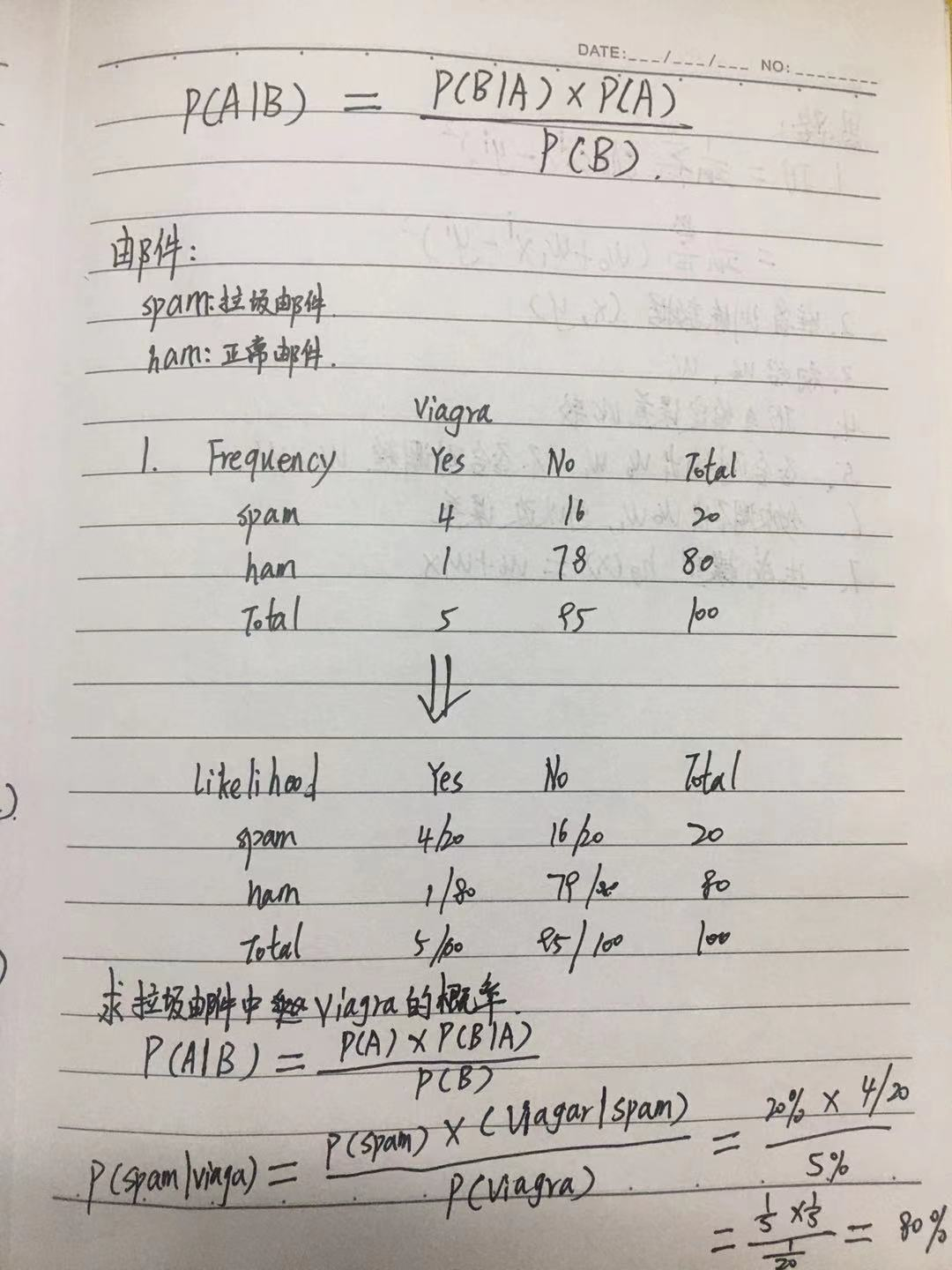

手动推导

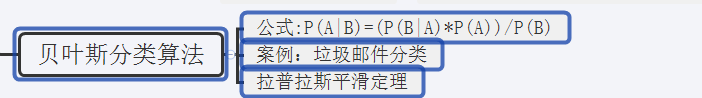

代码:

from sklearn.naive_bayes import MultinomialNB from sklearn.feature_extraction.text import CountVectorizer if __name__ == '__main__': # 读取文本构建语料库 # corpus存的是训练集中每封邮件的正文 corpus = [] # labels存的是训练集中每封邮件的标签 labels = [] # corpus_test测试集中邮件的正文 corpus_test = [] # labels_test测试集中邮件的标签 labels_test = [] # 将邮件内容放进上面的容器中 file = open('D:/code/python/test2/data/data_sms_spam.txt', mode='r', encoding='utf-8') index = 0 while True: line = file.readline() if index == 0: index = index+1 continue if line : index = index +1 lineArr = line.split(',') label = lineArr[0] sentence = lineArr[1] if index <= 5550: corpus.append(sentence) if "ham" == label: labels.append(0) # 垃圾邮件是1 elif "spam" == label: labels.append(1) if index > 5550: corpus_test.append(sentence) if "ham" == label: labels_test.append(0) elif "spam" == label: labels_test.append(1) else: break #CountVectorizer()函数只考虑每个单词出现的频率;然后构成一个特征矩阵,每一行表示一个训练文本的词频统计结果。其思想是,先根据所有训练文本,不考虑其出现顺序, # 只将训练文本中每个出现过的词汇单独视为一列特征, # 构成一个词汇表(vocabulary list),该方法又称为词袋法(Bag of Words)。 vectorizer = CountVectorizer() # print("- - - -- - - - - - - - - - 打印词袋内容: 所有邮件的内容去重-- -- -- - - - - - - - -") # print(vectorizer.get_feature_names()) fea_train = vectorizer.fit_transform(corpus) print("- - - -- - - - - - - 打印二维数组,里面是每封邮件每个单词出现频率的向量- - - -- -- -- - - - - - - - -") print(fea_train.toarray()) print("- - - -- - - - - - - 测试集每封邮件每个单词出现频率的向量- - - -- -- -- - - - - - - - -") vectorizer2 = CountVectorizer(vocabulary=vectorizer.vocabulary_) fea_test = vectorizer2.fit_transform(corpus_test) print(fea_test.toarray()) # alpha=1 每封邮件内容都加一,不让出现概率为0的情况 clf = MultinomialNB(alpha=1) clf.fit(fea_train, labels) # 此时clf相当于概率表 # 概率 0 1 将50%作为分类阈值 人为干预 医疗 pred = clf.predict(fea_test) for p in pred: if p ==0: print("正常邮件") else: print("垃圾邮件")