1.分类问题

二分类

- $f:x

ightarrow p(y=1|x)$

- $p(y=1|x)$ 解释成给定x,求y=1的概率,如果概率>0.5,预测为1,否则预测为0

- minimize MSE

多分类

- $f:x

ightarrow p(y|x)$

- $[p(y=0|x),p(y=1|x),...,p(y=9|x)]$

- $p(y|x)epsilon [0,1]$

- $sum_{i=0}^{9}p(y=i|x)=1$

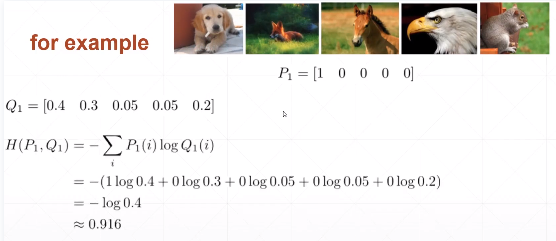

2.交叉熵

信息熵:描述信息的不确定性。$H(U)=E(-logp_i)=-sum _{i=1}^{n}p_{i}logp_{i}$

描述一个分布,不确定性越高,熵越低。

1 a=torch.full([4],1/4.) 2 print(-(a*torch.log2(a)).sum()) #tensor(2.) 3 4 b=torch.tensor([0.1,0.1,0.1,0.7]) 5 print(-(b*torch.log2(b)).sum()) #tensor(1.3568) 6 7 c=b=torch.tensor([0.001,0.001,0.001,0.999]) 8 print(-(c*torch.log2(c)).sum()) #tensor(0.0313)

2.1交叉熵

$H(p,q)=-sum _{xepsilon X}p(x)logq(x)$

$H(p,q)=H(p)+D_{KL}(p|q)$ (KL散度=交叉熵-信息熵,衡量p,q分布的重叠情况)

- p=q,H(p,q)=H(p)

- 对独热编码来说,H(p)=0

2.2二分类问题的交叉熵

$H(p,q)=-sum _{iepsilon cat,dog}P(i)logQ(i)$ P(i)指i的真实值,Q(i)指i的预测值。

$=-P(cat)logQ(cat)-P(dog)logQ(dog)$ $P(dog)=(1-P(cat))$

$=-sum _{i=1}^{n}y_ilog(p_i)+(1-y_i)log(1-p_i)$ $y_i$指i的真实值,$p_i$指i的预测值。

1 import torch 2 from torch.nn import functional as F 3 4 x=torch.randn(1,784) 5 w=torch.randn(10,784) 6 logits=x@w.t() #shape=torch.Size([1,10]) 7 8 print(F.cross_entropy(logits, torch.tensor([3]))) #tensor(77.1405) 9 10 pred=F.softmax(logits,dim=1) #shape=torch.Size([1,10]) 11 pred_log=torch.log(pred) 12 print(F.nll_loss(pred_log, torch.tensor([3]))) #tensor(77.1405)

cross_entropy函数=>softmax->log->nll_loss

3.多分类实战

识别手写数据集

1 import torch 2 import torch.nn as nn 3 import torch.nn.functional as F 4 import torch.optim as optim 5 from torchvision import datasets, transforms 6 7 #超参数 8 batch_size=200 9 learning_rate=0.01 10 epochs=10 11 12 #获取训练集 13 train_loader = torch.utils.data.DataLoader( 14 datasets.MNIST('../data', train=True, download=True, #train=True则得到的是训练集 15 transform=transforms.Compose([ #transform进行数据预处理 16 transforms.ToTensor(), #转成Tensor类型的数据 17 transforms.Normalize((0.1307,), (0.3081,)) #进行数据标准化(减去均值除以方差) 18 ])), 19 batch_size=batch_size, shuffle=True) #按batch_size分出一个batch维度在最前面,shuffle=True打乱顺序 20 21 22 23 #获取测试集 24 test_loader = torch.utils.data.DataLoader( 25 datasets.MNIST('../data', train=False, transform=transforms.Compose([ 26 transforms.ToTensor(), 27 transforms.Normalize((0.1307,), (0.3081,)) 28 ])), 29 batch_size=batch_size, shuffle=True) 30 31 #设定参数w和b 32 w1, b1 = torch.randn(200, 784, requires_grad=True), 33 torch.zeros(200, requires_grad=True) #w1(out,in) 34 w2, b2 = torch.randn(200, 200, requires_grad=True), 35 torch.zeros(200, requires_grad=True) 36 w3, b3 = torch.randn(10, 200, requires_grad=True), 37 torch.zeros(10, requires_grad=True) 38 39 torch.nn.init.kaiming_normal_(w1) 40 torch.nn.init.kaiming_normal_(w2) 41 torch.nn.init.kaiming_normal_(w3) 42 43 44 def forward(x): 45 x = x@w1.t() + b1 46 x = F.relu(x) 47 x = x@w2.t() + b2 48 x = F.relu(x) 49 x = x@w3.t() + b3 50 x = F.relu(x) 51 return x 52 53 54 #定义sgd优化器,指明优化参数、学习率 55 optimizer = optim.SGD([w1, b1, w2, b2, w3, b3], lr=learning_rate) 56 criteon = nn.CrossEntropyLoss() 57 58 for epoch in range(epochs): 59 60 for batch_idx, (data, target) in enumerate(train_loader): 61 data = data.view(-1, 28*28) #将二维的图片数据摊平[样本数,784] 62 63 logits = forward(data) #前向传播 64 loss = criteon(logits, target) #nn.CrossEntropyLoss()自带Softmax 65 66 optimizer.zero_grad() #梯度信息清空 67 loss.backward() #反向传播获取梯度 68 optimizer.step() #优化器更新 69 70 if batch_idx % 100 == 0: #每100个batch输出一次信息 71 print('Train Epoch: {} [{}/{} ({:.0f}%)] Loss: {:.6f}'.format( 72 epoch, batch_idx * len(data), len(train_loader.dataset), 73 100. * batch_idx / len(train_loader), loss.item())) 74 75 76 test_loss = 0 77 correct = 0 #correct记录正确分类的样本数 78 for data, target in test_loader: 79 data = data.view(-1, 28 * 28) 80 logits = forward(data) 81 test_loss += criteon(logits, target).item() #其实就是criteon(logits, target)的值,标量 82 83 pred = logits.data.max(dim=1)[1] #也可以写成pred=logits.argmax(dim=1) 84 correct += pred.eq(target.data).sum() 85 86 test_loss /= len(test_loader.dataset) 87 print(' Test set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%) '.format( 88 test_loss, correct, len(test_loader.dataset), 89 100. * correct / len(test_loader.dataset)))

Train Epoch: 0 [0/60000 (0%)] Loss: 2.669597

Train Epoch: 0 [20000/60000 (33%)] Loss: 0.815616

Train Epoch: 0 [40000/60000 (67%)] Loss: 0.531289

Test set: Average loss: 0.0029, Accuracy: 8207/10000 (82%)

Train Epoch: 1 [0/60000 (0%)] Loss: 0.629100

Train Epoch: 1 [20000/60000 (33%)] Loss: 0.534102

Train Epoch: 1 [40000/60000 (67%)] Loss: 0.372538

Test set: Average loss: 0.0015, Accuracy: 9135/10000 (91%)

Train Epoch: 2 [0/60000 (0%)] Loss: 0.387911

Train Epoch: 2 [20000/60000 (33%)] Loss: 0.285009

Train Epoch: 2 [40000/60000 (67%)] Loss: 0.239334

Test set: Average loss: 0.0012, Accuracy: 9266/10000 (93%)

Train Epoch: 3 [0/60000 (0%)] Loss: 0.190144

Train Epoch: 3 [20000/60000 (33%)] Loss: 0.227097

Train Epoch: 3 [40000/60000 (67%)] Loss: 0.212044

Test set: Average loss: 0.0011, Accuracy: 9342/10000 (93%)

Train Epoch: 4 [0/60000 (0%)] Loss: 0.209257

Train Epoch: 4 [20000/60000 (33%)] Loss: 0.158875

Train Epoch: 4 [40000/60000 (67%)] Loss: 0.163808

Test set: Average loss: 0.0010, Accuracy: 9391/10000 (94%)

Train Epoch: 5 [0/60000 (0%)] Loss: 0.171966

Train Epoch: 5 [20000/60000 (33%)] Loss: 0.155037

Train Epoch: 5 [40000/60000 (67%)] Loss: 0.207052

Test set: Average loss: 0.0010, Accuracy: 9431/10000 (94%)

Train Epoch: 6 [0/60000 (0%)] Loss: 0.253138

Train Epoch: 6 [20000/60000 (33%)] Loss: 0.144874

Train Epoch: 6 [40000/60000 (67%)] Loss: 0.245244

Test set: Average loss: 0.0009, Accuracy: 9441/10000 (94%)

Train Epoch: 7 [0/60000 (0%)] Loss: 0.139446

Train Epoch: 7 [20000/60000 (33%)] Loss: 0.189258

Train Epoch: 7 [40000/60000 (67%)] Loss: 0.136632

Test set: Average loss: 0.0009, Accuracy: 9477/10000 (95%)

Train Epoch: 8 [0/60000 (0%)] Loss: 0.178528

Train Epoch: 8 [20000/60000 (33%)] Loss: 0.141744

Train Epoch: 8 [40000/60000 (67%)] Loss: 0.148902

Test set: Average loss: 0.0008, Accuracy: 9508/10000 (95%)

Train Epoch: 9 [0/60000 (0%)] Loss: 0.074641

Train Epoch: 9 [20000/60000 (33%)] Loss: 0.095056

Train Epoch: 9 [40000/60000 (67%)] Loss: 0.261752

Test set: Average loss: 0.0008, Accuracy: 9514/10000 (95%)