一、datanode添加新节点

1 在dfs.include文件中包含新节点名称,该文件在名称节点的本地目录下

[白名单]

[s201:/soft/hadoop/etc/hadoop/dfs.include]

2 在hdfs-site.xml文件中添加属性

<property>

<name>dfs.hosts</name>

<value>/soft/hadoop/etc/dfs.include.txt</value>

</property>

3 在nn上刷新节点

Hdfs dfsadmin -refreshNodes

4 在slaves文件中添加新节点ip(主机名)

5 单独启动新节点中的datanode

Hadoop-daemon.sh start datanode

二、datanode退役旧节点

1 添加退役节点的ip到黑名单 dfs.hosts.exclude,不要更新白名单

[/soft/hadoop/etc/dfs.hosts.exclude]

2 配置hdfs-site.xml

<property>

<name>dfs.hosts.exclude</name>

<value>/soft/hadoop/etc/dfs.hosts.exclude.txt</value>

</property>

3 刷新nn的节点

hdfs dfsadmin -refreshNodes

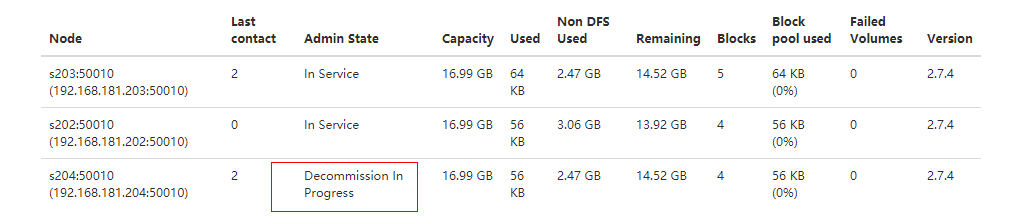

4 查看WEBUI,节点状态在Decommission In Progress

5 当所有的要退役的节点都报告为Decommissioned,数据转移工作已经完成

6 从白名单删除节点,并刷新节点

hdfs dfsadmin -refreshNodes

yarn rmadmin -refreshNodes

7 从slaves文件中删除退役的节点

8 hdfs-site.xml文件内容

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.name.dir</name>

<value>/soft/hadoop/name/</value>

</property>

<property>

<name>dfs.data.dir</name>

<value>/soft/hadoop/data</value>

</property>

<property>

<name>dfs.namenode.fs-limits.min-block-size</name>

<value>1024</value>

</property>

<property>

<name>dfs.hosts.exclude</name>

<value>/soft/hadoop/etc/dfs.hosts.exclude.txt</value>

</property>

</configuration>

三、黑白名单组合结构

Include //dfs.hosts

Exclude //dfs.hosts.exclude

| 白名单 | 黑名单 | 组合结构 |

| No | No | Node may not connect |

| No | Yes | Node may not connect |

| Yes | No | Node may connect |

| Yes | Yes | Node may connect and will be decommissioned |

四、图文

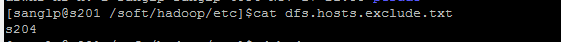

黑名单文件

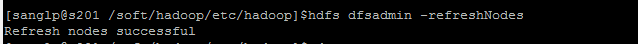

刷新节点信息

退役中

五、yarn添加新节点

1 在dfs.include文件中包含新节点名称,该文件在名称节点的本地目录下

[白名单]

[s201:/soft/hadoop/etc/hadoop/dfs.include]

2 在yarn-site.xml文件中添加属性

<property>

<name>yarn.resourcemanager.nodes.include-path</name>

<value>/soft/hadoop/etc/dfs.include.txt</value>

</property>

3 在rm上刷新节点

yarn rmadmin-refreshNodes

4 在slaves文件中添加新节点ip(主机名)

5 单独启动新节点中的资源管理器

yarn-daemon.sh start nodemanager

六、yarn退役新节点

1 添加退役节点的ip到黑名单 dfs.hosts.exclude,不要更新白名单

[/soft/hadoop/etc/dfs.hosts.exclude]

2 配置yarn-site.xml

<property>

<name>yarn-resourcemanager.nodes.exclude-path</name>

<value>/soft/hadoop/etc/dfs.hosts.exclude.txt</value>

</property>

3 刷新rm的节点

yarn rmadmin -refreshNodes

4 查看WEBUI,节点状态在Decommission In Progress

5 当所有的要退役的节点都报告为Decommissioned,数据转移工作已经完成

6 从白名单删除节点,并刷新节点

yarn rmadmin -refreshNodes

7 从slaves文件中删除退役的节点

注意:因为退役的时候副本数没有改变。所以要在退役的时候增加新的节点,只是做退役的话,因为不满足最小副本数要求,一直会停留在退役中,如果想更快的退役的话要使得退役后的副本数仍然达到最小副本数要求。