The installation steps are still to be added. Once Hadoop is installed, run the following commands to get it running.

Start all services with:

```bash
hadoop@ubuntu:/usr/local/gz/hadoop-2.4.1$ ./sbin/start-all.sh
```

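The hadoop-2.4.1/sbin directory contains many startup scripts, including one for each individual service. start-all.sh simply starts all services together. Here is the content of start-all.sh: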

```bash
#!/usr/bin/env bash

# Licensed to the Apache Software Foundation (ASF) under one or more
# contributor license agreements. See the NOTICE file distributed with
# this work for additional information regarding copyright ownership.
# The ASF licenses this file to You under the Apache License, Version 2.0
# (the "License"); you may not use this file except in compliance with
# the License. You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Start all hadoop daemons. Run this on master node.

echo "This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh"
# This says the script is deprecated: use start-dfs.sh and start-yarn.sh instead.

bin=`dirname "${BASH_SOURCE-$0}"`
bin=`cd "$bin"; pwd`

DEFAULT_LIBEXEC_DIR="$bin"/../libexec
HADOOP_LIBEXEC_DIR=${HADOOP_LIBEXEC_DIR:-$DEFAULT_LIBEXEC_DIR}
. $HADOOP_LIBEXEC_DIR/hadoop-config.sh
# This sources hadoop/libexec/hadoop-config.sh, which only sets up paths:
# CLASSPATH and the related exported variables.

# What actually does the work are the two blocks below, which run
# start-dfs.sh and start-yarn.sh. From now on I run these two myself.

# start hdfs daemons if hdfs is present
if [ -f "${HADOOP_HDFS_HOME}"/sbin/start-dfs.sh ]; then
  "${HADOOP_HDFS_HOME}"/sbin/start-dfs.sh --config $HADOOP_CONF_DIR
fi

# start yarn daemons if yarn is present
if [ -f "${HADOOP_YARN_HOME}"/sbin/start-yarn.sh ]; then
  "${HADOOP_YARN_HOME}"/sbin/start-yarn.sh --config $HADOOP_CONF_DIR
fi
```
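Following the deprecation notice, a small wrapper can do the same job as start-all.sh without the warning. The sketch below is hypothetical (`start_hadoop` is not part of Hadoop): it takes the install directory as its only argument, checks that both scripts exist before starting anything, and then runs them in the same order as start-all.sh. Unlike start-all.sh it passes no `--config`, so it assumes the scripts' default configuration directory.

```bash
#!/usr/bin/env bash
# Hypothetical helper: start HDFS, then YARN, from a Hadoop 2.x install dir,
# e.g. start_hadoop /usr/local/gz/hadoop-2.4.1
start_hadoop() {
  local home="$1" script
  # Check both scripts first so HDFS is never started on its own by mistake.
  for script in start-dfs.sh start-yarn.sh; do
    if [ ! -x "$home/sbin/$script" ]; then
      echo "missing or not executable: $home/sbin/$script" >&2
      return 1
    fi
  done
  # Same order as start-all.sh: HDFS daemons first, then YARN daemons.
  "$home/sbin/start-dfs.sh" || return 1
  "$home/sbin/start-yarn.sh"
}
```

Running `start_hadoop /usr/local/gz/hadoop-2.4.1` should amount to running `./sbin/start-dfs.sh` followed by `./sbin/start-yarn.sh` from that directory.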

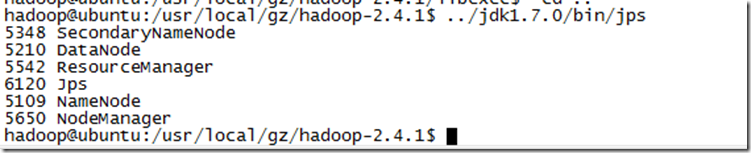

After the script finishes, run `jps` to check that every service has started:

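Note that there must be 6 processes in the jps output: NameNode, DataNode, SecondaryNameNode, ResourceManager, NodeManager, and Jps itself. When I first started Hadoop there were only 5. Both web UIs, http://192.168.1.107:50070/ (NameNode) and http://192.168.1.107:8088/ (ResourceManager), opened fine, but the wordcount example failed. Only after tracking down the cause did I discover that the DataNode had not started.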

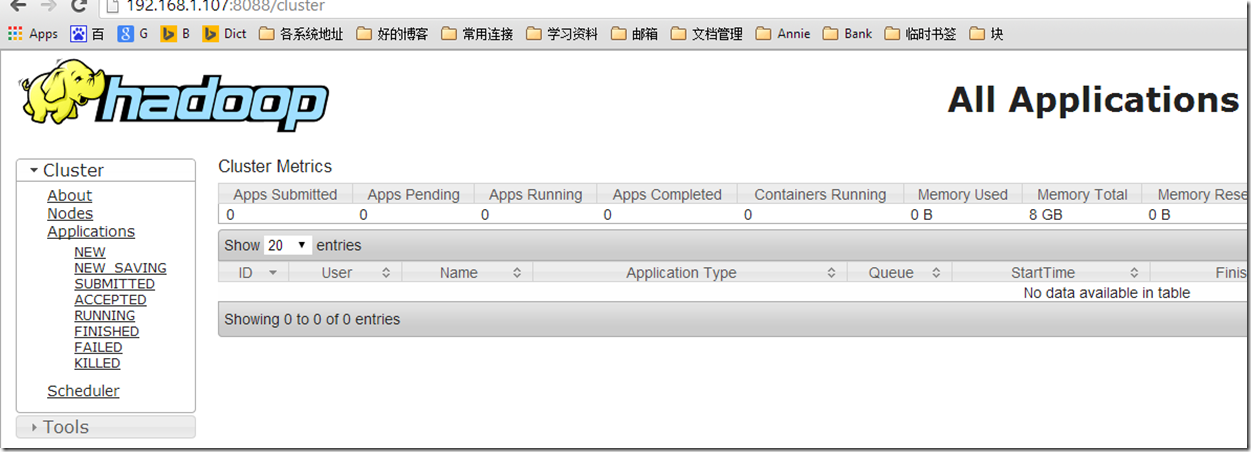
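Counting processes by eye is how a missing DataNode can slip through, so a small check may help. This sketch assumes a single-node Hadoop 2.x setup in which NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager should all be running. `check_daemons` is a hypothetical helper that reads `jps` output on standard input and prints any daemon that is absent.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output on stdin and report missing daemons.
# The expected list assumes a single-node Hadoop 2.x started with
# start-dfs.sh and start-yarn.sh.
check_daemons() {
  local names daemon status=0
  # jps prints "<pid> <MainClassName>"; keep only the class names.
  names=$(awk '{print $2}')
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # -x requires a whole-line match, so NameNode does not match SecondaryNameNode.
    if ! printf '%s\n' "$names" | grep -qx "$daemon"; then
      echo "missing: $daemon"
      status=1
    fi
  done
  return "$status"
}
```

`jps | check_daemons` prints nothing and returns 0 when all five daemons are up. With the DataNode down, it prints `missing: DataNode` and returns 1.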

The ResourceManager UI (port 8088) shows the status of applications:

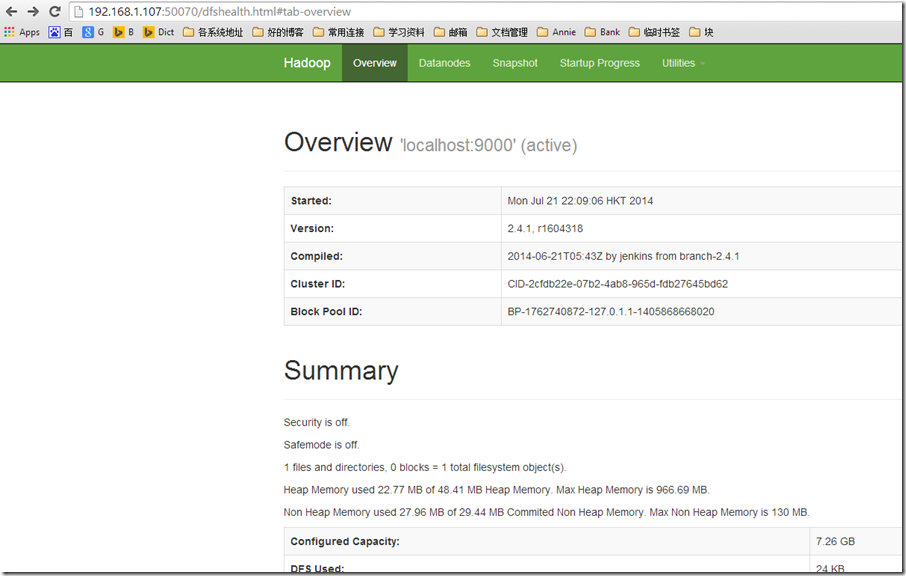

And this is the DFS health page (port 50070):

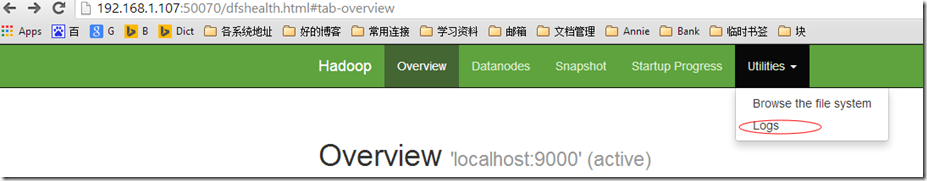

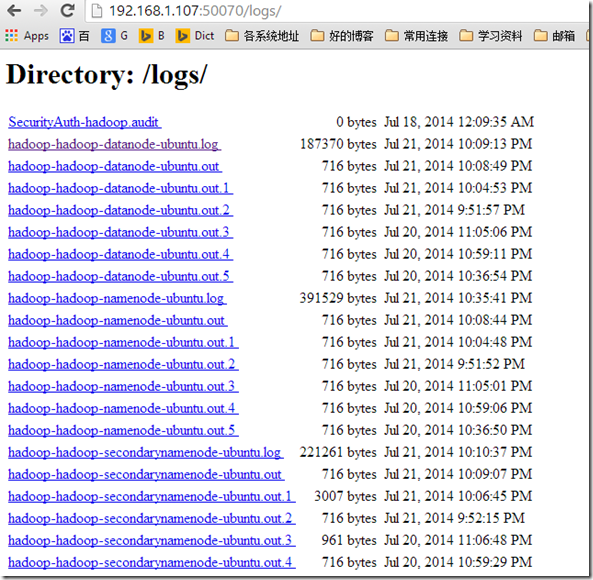

The page at http://192.168.1.107:50070/ also lets you browse the logs Hadoop writes while starting and running.

That is how I found the cause of the problem. The log directory contains a separate log file for each service.
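The same files can also be searched on the local disk. Assuming the default location (the `logs` directory under the install directory, here presumably /usr/local/gz/hadoop-2.4.1/logs, unless `HADOOP_LOG_DIR` points elsewhere), the hypothetical `scan_logs` helper below lists every FATAL or ERROR line together with its file name and line number, which would point straight at the failing daemon's log.

```bash
#!/usr/bin/env bash
# Hypothetical helper: show FATAL/ERROR lines from every *.log file in a
# directory, e.g. scan_logs /usr/local/gz/hadoop-2.4.1/logs
scan_logs() {
  # -H prefixes each match with its file name, -n with its line number.
  # The level is matched as a separate word after the timestamp,
  # e.g. "2014-07-21 22:05:21,075 FATAL org.apache...".
  grep -Hn -E ' (FATAL|ERROR) ' "$1"/*.log
}
```

Like plain `grep`, it returns a non-zero status when no log contains a FATAL or ERROR line.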

Opening datanode-ubuntu.log shows the exception that was thrown:

```
2014-07-21 22:05:21,064 INFO org.apache.hadoop.hdfs.server.common.Storage: Lock on /usr/local/gz/hadoop-2.4.1/dfs/data/in_use.lock acquired by nodename 3312@ubuntu
2014-07-21 22:05:21,075 FATAL org.apache.hadoop.hdfs.server.datanode.DataNode: Initialization failed for Block pool <registering> (Datanode Uuid unassigned) service to localhost/127.0.0.1:9000. Exiting.
java.io.IOException: Incompatible clusterIDs in /usr/local/gz/hadoop-2.4.1/dfs/data: namenode clusterID = CID-2cfdb22e-07b2-4ab8-965d-fdb27645bd62; datanode clusterID = ID-2cfdb22e-07b2-4ab8-965d-fdb27645bd62
	at org.apache.hadoop.hdfs.server.datanode.DataStorage.doTransition(DataStorage.java:477)
	at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:226)
	at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:254)
	at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:974)
	at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:945)
	at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:278)
	at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:220)
	at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:816)
	at java.lang.Thread.run(Thread.java:722)
2014-07-21 22:05:21,084 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Ending block pool service for: Block pool <registering> (Datanode Uuid unassigned) service to localhost/127.0.0.1:9000
2014-07-21 22:05:21,102 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Removed Block pool <registering> (Datanode Uuid unassigned)
2014-07-21 22:05:23,103 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Exiting Datanode
2014-07-21 22:05:23,106 INFO org.apache.hadoop.util.ExitUtil: Exiting with status 0
2014-07-21 22:05:23,112 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down DataNode at ubuntu/127.0.1.1
************************************************************/
```
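To confirm which IDs are involved without reading the whole stack trace, the two values can be pulled out of the exception message. A minimal sketch, assuming the message keeps the exact wording in the log above (`namenode clusterID = ...; datanode clusterID = ...`). `extract_cluster_ids` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the NameNode and DataNode clusterIDs from the
# last "Incompatible clusterIDs" message in a DataNode log file.
extract_cluster_ids() {
  local line nn dn
  line=$(grep 'Incompatible clusterIDs' "$1" | tail -n 1)
  [ -n "$line" ] || return 1   # no such message in this log
  # Drop everything up to each label, then cut at the delimiter after the ID.
  nn=${line#*namenode clusterID = }; nn=${nn%%;*}
  dn=${line#*datanode clusterID = }; dn=${dn%% *}
  printf 'namenode=%s\ndatanode=%s\n' "$nn" "$dn"
}
```

On the log above this prints the two IDs on separate lines, making the mismatch (`CID-...` versus `ID-...`) easy to see.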

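Searching for this error showed that it is caused by formatting the NameNode again (with `hdfs namenode -format`) after Hadoop had already been started. Formatting gives the NameNode a new clusterID, while the DataNode keeps the old one in its data directory, so the two clusterIDs no longer match.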
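Each storage directory records its clusterID in `current/VERSION`, so the mismatch can also be checked directly on disk. A sketch, assuming the two arguments are the local paths configured as dfs.namenode.name.dir and dfs.datanode.data.dir (a single directory each, without a `file:` prefix). Both helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking the two clusterIDs side by side.
# Arguments are the local directories configured as dfs.namenode.name.dir
# and dfs.datanode.data.dir (one path each, without a file: prefix).
read_cluster_id() {
  # Print the value of the clusterID= line in <dir>/current/VERSION.
  grep '^clusterID=' "$1/current/VERSION" | cut -d= -f2
}

compare_cluster_ids() {
  local nn dn
  nn=$(read_cluster_id "$1")
  dn=$(read_cluster_id "$2")
  echo "namenode clusterID = $nn"
  echo "datanode clusterID = $dn"
  # Succeeds only if both IDs were found and they are identical.
  [ -n "$nn" ] && [ "$nn" = "$dn" ]
}
```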

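In hadoop/etc/hadoop/hdfs-site.xml, find the NameNode and DataNode storage directories (the dfs.namenode.name.dir and dfs.datanode.data.dir properties) and open the current/VERSION file in each. The clusterID values in the two files differ. Change the clusterID in the DataNode's VERSION file to the NameNode's value and restart Hadoop; the DataNode then starts normally.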
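The manual fix can be scripted roughly as follows. This is a sketch, not an official tool: `sync_cluster_id` is a hypothetical helper, it assumes each property names a single local directory without a `file:` prefix, and HDFS should be stopped (for example with `./sbin/stop-dfs.sh`) before it runs. It keeps a backup of the DataNode's VERSION file outside the data directory.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy the NameNode's clusterID into the DataNode's
# current/VERSION file. Arguments are the local directories configured as
# dfs.namenode.name.dir and dfs.datanode.data.dir (one path each, no file:
# prefix). Stop HDFS (sbin/stop-dfs.sh) before running it.
sync_cluster_id() {
  local name_dir="$1" data_dir="${2%/}" nn_id dn_version backup
  nn_id=$(grep '^clusterID=' "$name_dir/current/VERSION" | cut -d= -f2)
  if [ -z "$nn_id" ]; then
    echo "no clusterID found in $name_dir/current/VERSION" >&2
    return 1
  fi
  dn_version="$data_dir/current/VERSION"
  if [ ! -f "$dn_version" ]; then
    echo "not found: $dn_version" >&2
    return 1
  fi
  # Keep the original next to (not inside) the data directory.
  backup="$data_dir.VERSION.bak"
  cp "$dn_version" "$backup" || return 1
  # Replace only the clusterID line; every other key is copied unchanged.
  # Assumes the ID contains no '/' or '&', which sed would need escaped.
  sed "s/^clusterID=.*/clusterID=$nn_id/" "$backup" > "$dn_version"
}
```

In this setup the DataNode directory is /usr/local/gz/hadoop-2.4.1/dfs/data (the path in the log). The NameNode directory is whatever hdfs-site.xml sets; in Hadoop 2.x, `hdfs getconf -confKey dfs.namenode.name.dir` should print it. After the edit, start HDFS again and rerun `jps`.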

Reference:

http://www.cnblogs.com/kinglau/p/3796274.html