Configuring spark-sql to access the Hive metastore in Spark on YARN mode

Goal: run spark-sql in Spark on YARN mode against Hive's metadata, and compare the performance of spark-sql and Hive.

Software environment:

- hadoop2.7.3

- apache-hive-2.1.1-bin

- spark-2.1.0-bin-hadoop2.7

- jdk1.8

Hadoop is installed in pseudo-distributed mode on a single node with 2 cores and 4 GB of memory.

Hive runs in remote mode (a standalone metastore service).
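In remote mode, Hive clients (and spark-sql, through hive-site.xml) talk to the metastore service over Thrift, so that service must be running, for example started with `hive --service metastore`, before spark-sql can list databases. A quick reachability check is sketched below. It assumes bash's `/dev/tcp` redirection and GNU coreutils `timeout`; the default port 9083 is Hive's usual metastore port and should be adjusted to the `hive.metastore.uris` value in your hive-site.xml. The function name `metastore_reachable` is hypothetical.

```shell
# Hypothetical helper: succeed if a TCP connection to HOST:PORT opens within a few seconds.
# Uses bash's /dev/tcp pseudo-device (via `bash -c`) and GNU coreutils `timeout`.
metastore_reachable() {
  local host="$1" port="${2:-9083}" secs="${3:-3}"   # 9083: Hive's default metastore port (assumption)
  # Inside bash -c, $0 is the host and $1 the port; the connection closes when that shell exits.
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}
```

For example, `metastore_reachable ubuntuServer01 9083 || echo "metastore not reachable"` prints the message when the service is down or the port is wrong.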


Spark download page:

http://spark.apache.org/downloads.html

Extract and install Spark:

tar -zxvf spark-2.1.0-bin-hadoop2.7.tgz

cd spark-2.1.0-bin-hadoop2.7/conf

cp spark-env.sh.template spark-env.sh

cp slaves.template slaves

cp log4j.properties.template log4j.properties

cp spark-defaults.conf.template spark-defaults.conf
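The four `cp` commands can be wrapped so that re-running them never overwrites a file you have already edited. This is a minimal sketch: the function name `init_spark_conf` is hypothetical, and it assumes the standard `*.template` files shipped in `$SPARK_HOME/conf`.

```shell
# Hypothetical helper: create each config file from its template only if it does not exist yet,
# so files that were already edited are left untouched.
init_spark_conf() {
  local conf_dir="$1" f
  for f in spark-env.sh slaves log4j.properties spark-defaults.conf; do
    if [ -e "$conf_dir/$f" ]; then
      echo "keep $f"
    else
      cp "$conf_dir/$f.template" "$conf_dir/$f" && echo "created $f"
    fi
  done
}
```

Usage: `init_spark_conf "$SPARK_HOME/conf"`.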

Edit the Spark configuration files:

cd $SPARK_HOME/conf

vi spark-env.sh

    export JAVA_HOME=/usr/local/jdk
    export HADOOP_HOME=/home/fuxin.zhao/soft/hadoop-2.7.3
    export HDFS_CONF_DIR=${HADOOP_HOME}/etc/hadoop
    export YARN_CONF_DIR=${HADOOP_HOME}/etc/hadoop

vi spark-defaults.conf

    spark.master                     spark://ubuntuServer01:7077
    spark.eventLog.enabled           true
    spark.eventLog.dir               hdfs://ubuntuServer01:9000/tmp/spark
    spark.serializer                 org.apache.spark.serializer.KryoSerializer
    spark.driver.memory              512m
    spark.executor.extraJavaOptions  -XX:+PrintGCDetails -Dkey=value -Dnumbers="one two three"
    #spark.yarn.jars                 hdfs://ubuntuServer01:9000/tmp/spark/lib_jars/*.jar

vi slaves

    ubuntuServer01
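Two details of this configuration are easy to miss. First, the `spark.eventLog.dir` directory must already exist on HDFS, otherwise the SparkContext fails at startup. Second, `spark.master` points at a standalone master, but flags passed on the command line (such as `--master yarn`) take precedence over spark-defaults.conf, so spark-sql still runs on YARN. The sketch below reads a key from spark-defaults.conf so the directory can be created with `hdfs dfs -mkdir -p`. It is a simplification (hypothetical function `conf_value`) that assumes one whitespace-separated `key value` pair per line and skips lines starting with `#`; Spark itself loads the file with Java properties-file rules, which are more permissive.

```shell
# Hypothetical helper: print the value of KEY ($2) from a spark-defaults.conf-style file ($1).
# Simplified parsing: the key is the first whitespace-separated field, the rest of the line is the value.
conf_value() {
  awk -v key="$2" '
    /^[ \t]*#/ || NF == 0 { next }      # skip comment lines and blank lines
    $1 == key {
      sub(/^[ \t]*[^ \t]+[ \t]*/, "")   # strip the key and the whitespace after it
      print
      exit
    }
  ' "$1"
}
```

For example: `hdfs dfs -mkdir -p "$(conf_value "$SPARK_HOME/conf/spark-defaults.conf" spark.eventLog.dir)"`.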

**Configure spark-sql to read the Hive metastore**

    # Symlink hive-site.xml into Spark's conf directory
    cd $SPARK_HOME/conf
    ln -s /home/fuxin.zhao/soft/apache-hive-2.1.1-bin/conf/hive-site.xml hive-site.xml
    # Copy the MySQL JDBC driver mysql-connector-java-5.1.35-bin.jar into Spark's jars directory
    cp $HIVE_HOME/lib/mysql-connector-java-5.1.35-bin.jar $SPARK_HOME/jars
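Before starting spark-sql it can help to confirm that the symlink actually resolves and that a MySQL connector jar is present; without a readable hive-site.xml, Spark falls back to a local embedded metastore and `show databases` lists only `default`. A minimal sketch: the function name `check_hive_link` is hypothetical, and the `mysql-connector-java-*.jar` pattern assumes the driver keeps its usual file name.

```shell
# Hypothetical helper: report problems with the Hive integration files under SPARK_HOME ($1).
# Returns non-zero if anything is missing.
check_hive_link() {
  local spark_home="$1" status=0
  # -e follows symlinks, so a dangling hive-site.xml link also fails this test.
  if [ ! -e "$spark_home/conf/hive-site.xml" ]; then
    echo "missing or dangling: $spark_home/conf/hive-site.xml"
    status=1
  fi
  # If the glob matches nothing it stays literal and ls fails.
  if ! ls "$spark_home"/jars/mysql-connector-java-*.jar >/dev/null 2>&1; then
    echo "no mysql-connector-java-*.jar in $spark_home/jars"
    status=1
  fi
  return $status
}
```

Usage: `check_hive_link "$SPARK_HOME" && echo "hive integration files look fine"`.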

Testing spark-sql:

First create a few databases and tables with Hive, then check whether spark-sql can access them.

I loaded 60,000 rows (about 9 MB) of data into the temp.s4_order table.

    # Backticks are escaped because, inside double quotes, bash would treat them as command substitution.
    hive -e "
    create database temp;
    create database test;
    use temp;
    CREATE EXTERNAL TABLE t_source(
      \`sid\` string,
      \`uid\` string
    );
    load data local inpath '/home/fuxin.zhao/t_data' into table t_source;
    CREATE EXTERNAL TABLE s4_order(
      \`orderid\` int,
      \`retailercode\` string,
      \`orderstatus\` int,
      \`paystatus\` int,
      \`payid\` string,
      \`paytime\` timestamp,
      \`payendtime\` timestamp,
      \`salesamount\` int,
      \`description\` string,
      \`usertoken\` string,
      \`username\` string,
      \`mobile\` string,
      \`createtime\` timestamp,
      \`refundstatus\` int,
      \`subordercount\` int,
      \`subordersuccesscount\` int,
      \`subordercreatesuccesscount\` int,
      \`businesstype\` int,
      \`deductedamount\` int,
      \`refundorderstatus\` int,
      \`platform\` string,
      \`subplatform\` string,
      \`refundnumber\` string,
      \`refundpaytime\` timestamp,
      \`refundordertime\` timestamp,
      \`primarysubordercount\` int,
      \`primarysubordersuccesscount\` int,
      \`suborderprocesscount\` int,
      \`isshoworder\` int,
      \`updateshowordertime\` timestamp,
      \`devicetoken\` string,
      \`lastmodifytime\` timestamp,
      \`refundreasontype\` int
    )
    PARTITIONED BY (\`dt\` string);
    load data local inpath '/home/fuxin.zhao/20170214003514' OVERWRITE into table s4_order partition(dt='2017-02-13');
    load data local inpath '/home/fuxin.zhao/20170215000514' OVERWRITE into table s4_order partition(dt='2017-02-14');
    "
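When a HiveQL script is passed to `hive -e` inside double quotes, bash treats every pair of unescaped backticks as command substitution. An alternative that needs no escaping is to write the statements to a file through a quoted heredoc (`<<'EOF'`, which disables expansion) and run the file with `hive -f`. The sketch covers only the `t_source` statements; the function name `write_hive_ddl` and the output path in the usage line are hypothetical.

```shell
# Hypothetical helper: write HiveQL statements to the file given as $1.
# The quoted delimiter 'EOF' stops the shell from expanding backticks and $ in the body.
write_hive_ddl() {
  cat > "$1" <<'EOF'
create database temp;
use temp;
CREATE EXTERNAL TABLE t_source(
  `sid` string,
  `uid` string
);
load data local inpath '/home/fuxin.zhao/t_data' into table t_source;
EOF
}
```

Usage: `write_hive_ddl /tmp/create_t_source.sql && hive -f /tmp/create_t_source.sql`.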

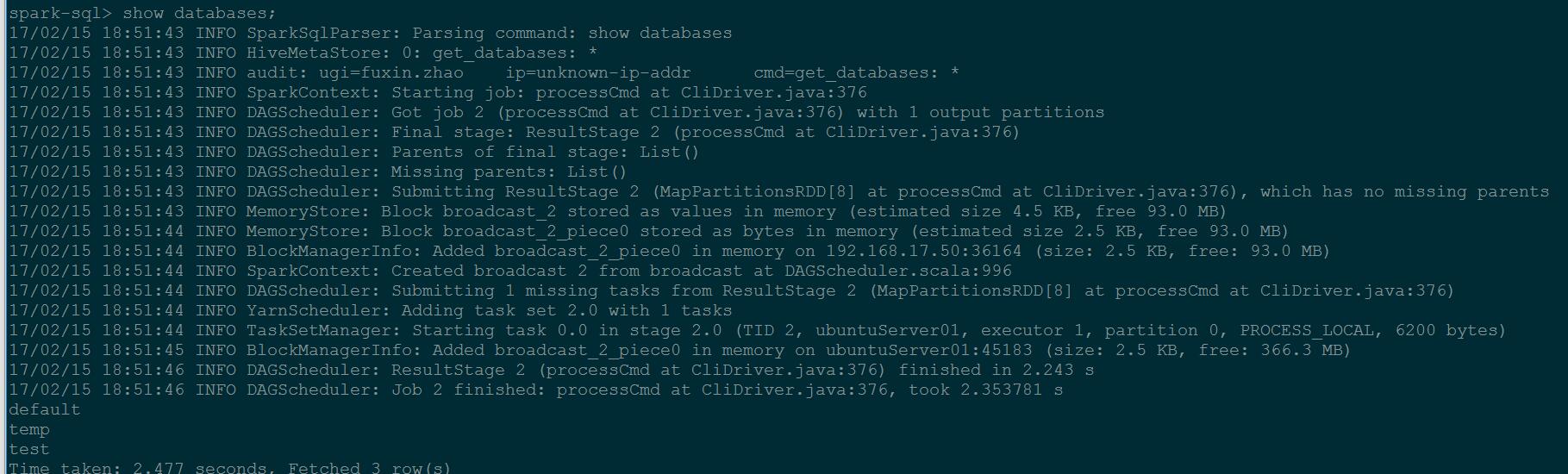

Use the spark-sql command to run the following SQL statements in the terminal:

Start the spark-sql client:

spark-sql --master yarn
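Besides the interactive shell, spark-sql accepts `-e "<sql>"` (and `-f <file>`) like the Hive CLI, which is handy for scripts and for timing individual statements. The wrapper below is a hypothetical convenience; the `SPARK_SQL` variable exists only so the launcher can be swapped out and is not a Spark setting.

```shell
# Hypothetical wrapper: run SQL statements ($1) on YARN without entering the interactive shell.
# SPARK_SQL (not a Spark setting) defaults to the spark-sql launcher on PATH.
run_spark_sql() {
  "${SPARK_SQL:-spark-sql}" --master yarn -e "$1"
}
```

Usage: `run_spark_sql "use temp; select count(*) from s4_order;"`.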

In the spark-sql shell, run the following SQL:

show databases;

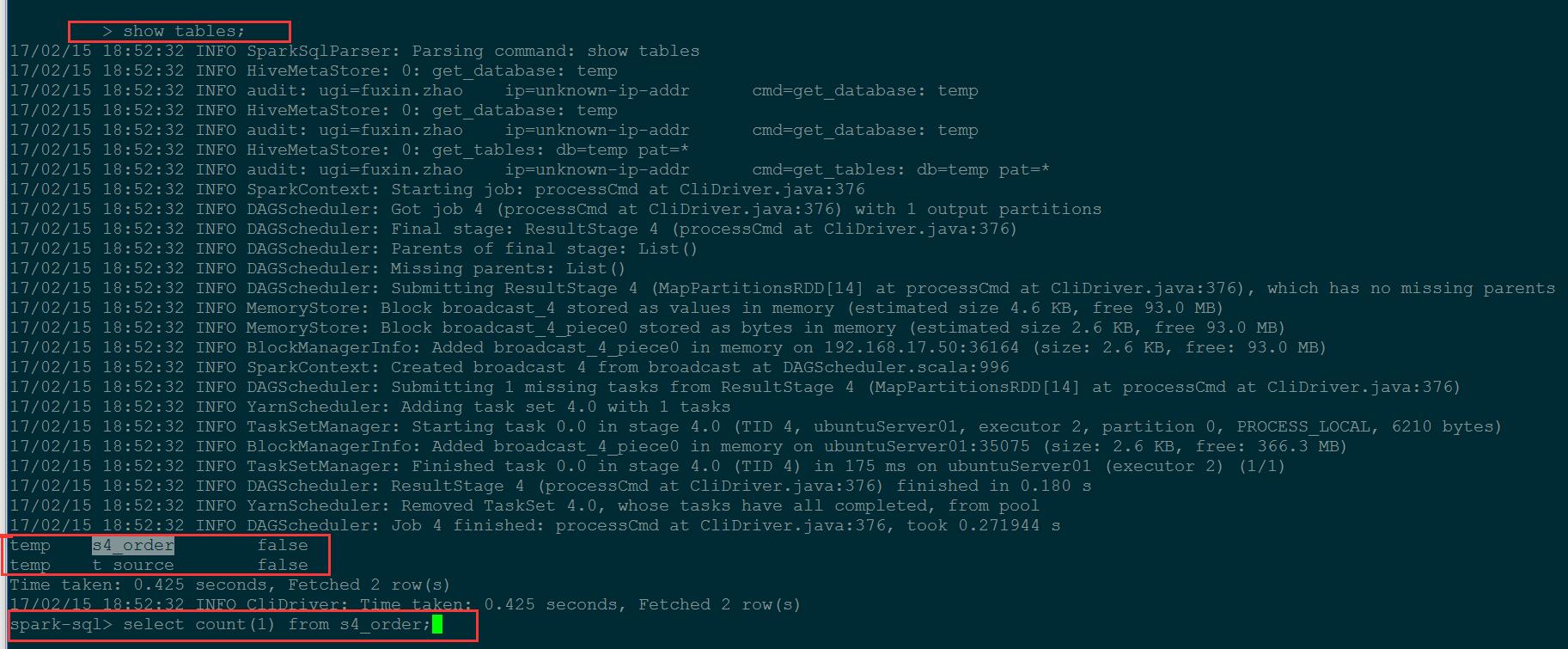

use temp;

show tables;

select * from s4_order limit 100;

select count(*), dt from s4_order group by dt;

select count(*) from s4_order ;

insert overwrite table t_source select orderid,createtime from s4_order;

select count(*), dt from s4_order group by dt;  -- spark-sql took 11 s; Hive took 30 s

select count(*) from s4_order;  -- spark-sql took 2 s; Hive took 25 s
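To repeat the comparison, the same statement can be sent through both CLIs with `-e` and timed from the outside. This is a minimal sketch with one-second resolution, which is enough for timings of several seconds; the function name `elapsed_seconds` is hypothetical. The wall-clock time it measures includes JVM and YARN application startup, whereas the `Time taken:` line that both CLIs print per statement does not.

```shell
# Hypothetical helper: run a command, discard its output, print the wall-clock time in whole seconds,
# and return the command's own exit status.
elapsed_seconds() {
  local start end rc
  start=$(date +%s)
  "$@" >/dev/null 2>&1
  rc=$?
  end=$(date +%s)
  echo $((end - start))
  return $rc
}

# Example (needs a running cluster):
#   q='select count(*) from temp.s4_order;'
#   echo "spark-sql: $(elapsed_seconds spark-sql --master yarn -e "$q") s"
#   echo "hive:      $(elapsed_seconds hive -e "$q") s"
```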

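My rough impression is that spark-sql is about 3 to 10 times as fast as Hive. However, this test ran on a single local virtual machine with Hadoop in pseudo-distributed mode and scarce resources; in production, the ratio will likely differ as cluster size, data volume, and query logic change.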
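For reference, the two timed queries give the ratios directly: 30 s / 11 s ≈ 2.7 for the grouped count and 25 s / 2 s = 12.5 for the plain count. A tiny helper (hypothetical name `speedup`) that does the floating-point division with awk:

```shell
# Hypothetical helper: print hive_seconds ($1) / spark_seconds ($2) with one decimal place.
speedup() {
  awk -v hive="$1" -v spark="$2" 'BEGIN { printf "%.1f\n", hive / spark }'
}
```

`speedup 30 11` prints `2.7` and `speedup 25 2` prints `12.5`.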