如果需要代做算法,可以联系我...博客右侧有联系方式。

一、相关概念

1.梯度下降

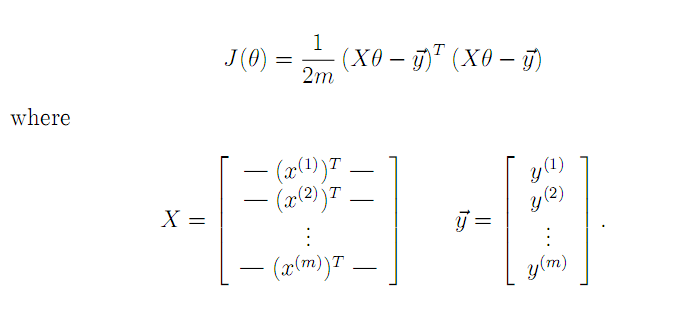

由于Z= X*theta - y是列向量,所以Z'*Z就是平方和连加,就是2范数;如果Z是矩阵呢,那么Z'*Z的对角线就是Z矩阵每列的2范数。

2.正规方程(Normal Equation)

θ = (XTX)-1XTY。

二、代码实现

2104,3,399900

1600,3,329900

2400,3,369000

1416,2,232000

3000,4,539900

1985,4,299900

1534,3,314900

1427,3,198999

1380,3,212000

1494,3,242500

1940,4,239999

2000,3,347000

1890,3,329999

4478,5,699900

1268,3,259900

2300,4,449900

1320,2,299900

1236,3,199900

2609,4,499998

3031,4,599000

1767,3,252900

1888,2,255000

1604,3,242900

1962,4,259900

3890,3,573900

1100,3,249900

1458,3,464500

2526,3,469000

2200,3,475000

2637,3,299900

1839,2,349900

1000,1,169900

2040,4,314900

3137,3,579900

1811,4,285900

1437,3,249900

1239,3,229900

2132,4,345000

4215,4,549000

2162,4,287000

1664,2,368500

2238,3,329900

2567,4,314000

1200,3,299000

852,2,179900

1852,4,299900

1203,3,239500

......................

%% Clear and Close Figures

clear ; close all; clc

data = load('ex1data2.txt');

X = data(:, 1:2);

y = data(:, 3);

m = length(y);

% Scale features and set them to zero mean

%不对Y 规范化

[X mu sigma] = featureNormalize(X);

% Add intercept term to X

%为了矩阵的形式(theta0) theta'*X

X = [ones(m, 1) X];

%% ================ Part 2: Gradient Descent ================

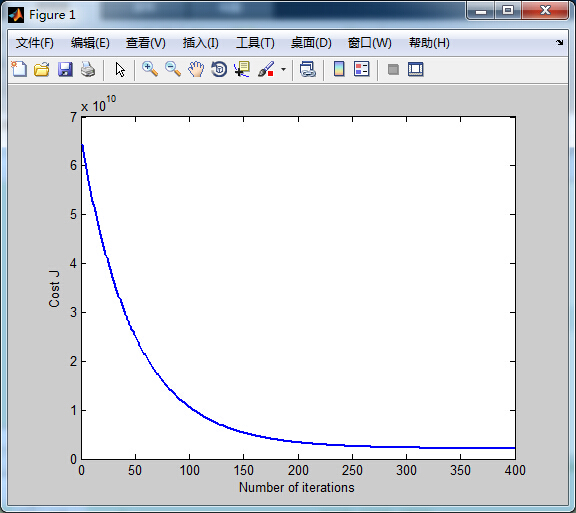

alpha = 0.01;

num_iters = 400;

theta = zeros(3, 1);

[theta, J_history] = gradientDescentMulti(X, y, theta, alpha, num_iters);

%convergence : 收敛

% Plot the convergence graph

figure;

%numel 矩阵中元素个数

plot(1:numel(J_history), J_history, '-b', 'LineWidth', 2);

xlabel('Number of iterations');

ylabel('Cost J');

% Display gradient descent's result

fprintf('Theta computed from gradient descent:

');

fprintf(' %f

', theta);

fprintf('

');

% Estimate the price of a 1650 sq-ft, 3 br house

% ====================== YOUR CODE HERE ======================

% Recall that the first column of X is all-ones. Thus, it does

% not need to be normalized.

price = 0; % You should change this

Xp = [1 1650 3];

Xp = [Xp(1),(Xp(2) - mu(1))/sigma(1),(Xp(3) - mu(2))/sigma(2)];

price = Xp*theta;

fprintf(['Predicted price of a 1650 sq-ft, 3 br house ' ...

'(using gradient descent):

$%f

'], price);

%% ================ Part 3: Normal Equations ================

data = csvread('ex1data2.txt');

X = data(:, 1:2);

y = data(:, 3);

m = length(y);

% Add intercept term to X

X = [ones(m, 1) X];

% Calculate the parameters from the normal equation

theta = normalEqn(X, y);

% Display normal equation's result

fprintf('Theta computed from the normal equations:

');

fprintf(' %f

', theta);

fprintf('

');

% Estimate the price of a 1650 sq-ft, 3 br house

% ====================== YOUR CODE HERE ======================

price = 0; % You should change this

Xexample = [1 1650 3];

price = Xexample*theta;

fprintf(['Predicted price of a 1650 sq-ft, 3 br house ' ...

'(using normal equations):

$%f

'], price);

...............

function [theta] = normalEqn(X, y)

%NORMALEQN Computes the closed-form solution to linear regression

% NORMALEQN(X,y) computes the closed-form solution to linear

% regression using the normal equations.

theta = zeros(size(X, 2), 1);

% 1.对于方阵A,如果为非奇异方阵,则存在逆矩阵inv(A)

% 2.对于奇异矩阵或者非方阵,并不存在逆矩阵,但可以使用pinv(A)求其伪逆

%

% 很多时候你不需要求逆矩阵,例如

% inv(A)*B

% 实际上可以写成

% AB

% B*inv(A)

% 实际上可以写成

% B/A

% 这样比求逆之后带入精度要高

theta = pinv((X'*X))*X'*y;

end

..............

function [theta, J_history] = gradientDescentMulti(X, y, theta, alpha, num_iters)

% Initialize some useful values

m = length(y); % number of training examples

J_history = zeros(num_iters, 1);

for iter = 1:num_iters

%theta 是 3*1 是列向量 X的第一列是1 恰好乘以theta0

% (1*3 X 3*47 - 1*47)'*

theta = theta - alpha * ((theta'*X'-y')*X)'/m;

% Save the cost J in every iteration

J_history(iter) = computeCostMulti(X, y, theta);

end

end

..........

function J = computeCostMulti(X, y, theta)

% Initialize some useful values

m = length(y); % number of training examples

J = 0;

J = sum(((theta'*(X')-y').^2))/(2*m);

end

三、结果

1.代价函数

2.预测结果

Theta computed from gradient descent:

334302.063993

100087.116006

3673.548451

Predicted price of a 1650 sq-ft, 3 br house (using gradient descent):

$289314.620338

Theta computed from the normal equations:

89597.909545

139.210674

-8738.019113

Predicted price of a 1650 sq-ft, 3 br house (using normal equations):

$293081.464335