Topic: Data cleaning and presentation of results

Requirements:

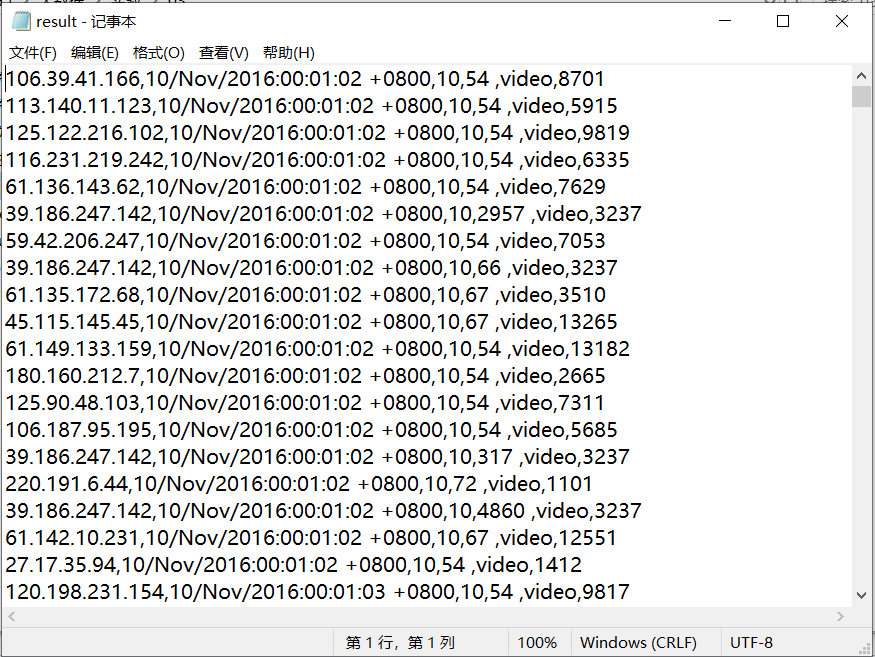

Description of the data in the Result file:

Ip: 106.39.41.166, (city)

Date: 10/Nov/2016:00:01:02 +0800, (date)

Day: 10, (day of the month)

Traffic: 54 , (traffic)

Type: video, (type: video or article)

Id: 8701 (id of the video or article)

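Test requirements:

1. Data cleaning: clean the data as required and import the cleaned data into the Hive database.

Two-stage data cleaning:

(1) Stage 1: extract the required information from the raw log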

ip: 199.30.25.88

time: 10/Nov/2016:00:01:03 +0800

traffic: 62

article: article/11325

video: video/3235

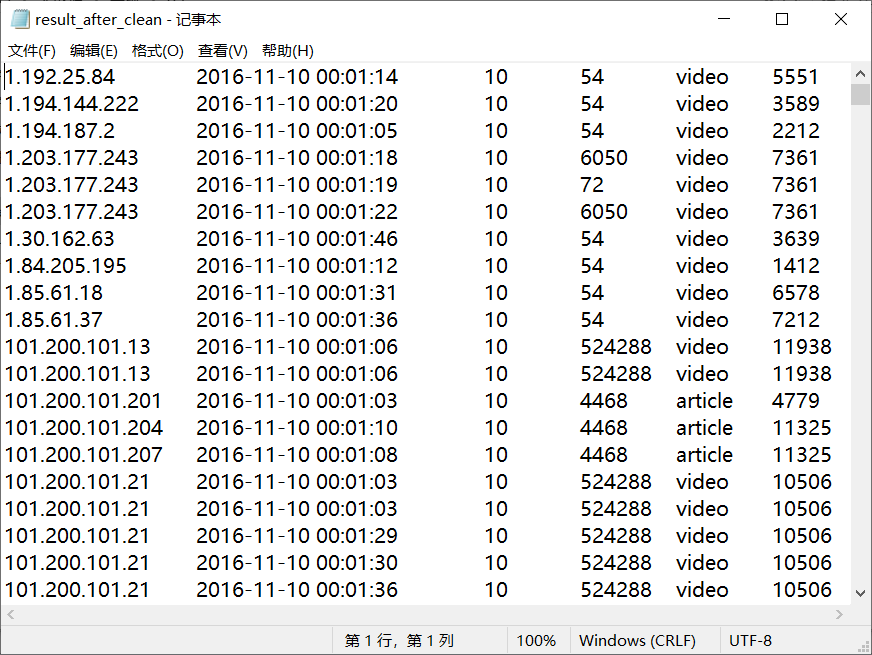

(2) Stage 2: refine the extracted information

ip: city, city(IP)

time: 2016-11-10 00:01:03

day: 10

traffic: 62

type: article/video

id: 11325
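
The stage 2 refinement above can be sketched in plain Java. This is only an illustration under assumptions: the class `StageTwoSketch` and its method names are hypothetical and are not part of the assignment, and the IP-to-city lookup is left out because the source does not say which mapping is used.

```java
import java.time.OffsetDateTime;
import java.time.format.DateTimeFormatter;
import java.util.Locale;

// Hypothetical helper, not part of the original job: refines stage 1 fields.
class StageTwoSketch {
    // Raw log timestamp, e.g. "10/Nov/2016:00:01:03 +0800".
    private static final DateTimeFormatter IN =
            DateTimeFormatter.ofPattern("dd/MMM/yyyy:HH:mm:ss Z", Locale.ENGLISH);
    // Target layout, e.g. "2016-11-10 00:01:03" (the zone offset is dropped).
    private static final DateTimeFormatter OUT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    // time: "10/Nov/2016:00:01:03 +0800" -> "2016-11-10 00:01:03"
    static String normalizeTime(String raw) {
        return OffsetDateTime.parse(raw.trim(), IN).format(OUT);
    }

    // day: day of the month taken from the same raw timestamp
    static int dayOfMonth(String raw) {
        return OffsetDateTime.parse(raw.trim(), IN).getDayOfMonth();
    }

    // "article/11325" -> {"article", "11325"} (type, id)
    static String[] splitResource(String resource) {
        String[] parts = resource.trim().split("/", 2);
        if (parts.length != 2) {
            throw new IllegalArgumentException("expected type/id: " + resource);
        }
        return parts;
    }

    public static void main(String[] args) {
        String raw = "10/Nov/2016:00:01:03 +0800";
        String[] typeAndId = splitResource("article/11325");
        System.out.println(normalizeTime(raw) + " day=" + dayOfMonth(raw)
                + " type=" + typeAndId[0] + " id=" + typeAndId[1]);
    }
}
```

Using `java.time` instead of manual string slicing means a malformed timestamp fails with an exception rather than producing a silently wrong date.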

(3) Hive table structure:

create table data01(ip string, time string, day string, traffic bigint, type string, id string)

2. Data processing:

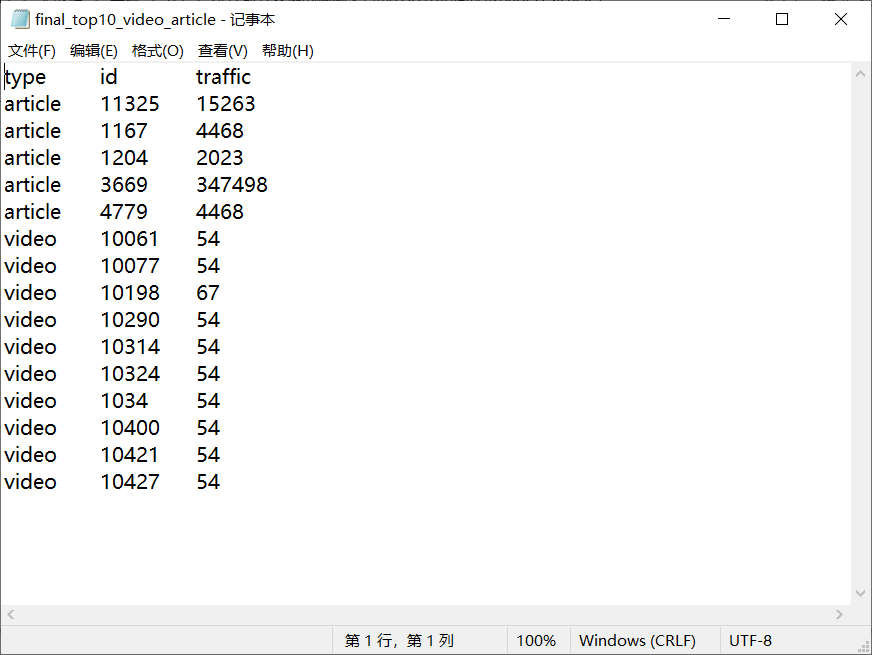

· Top 10 most popular videos/articles by number of visits (video/article)

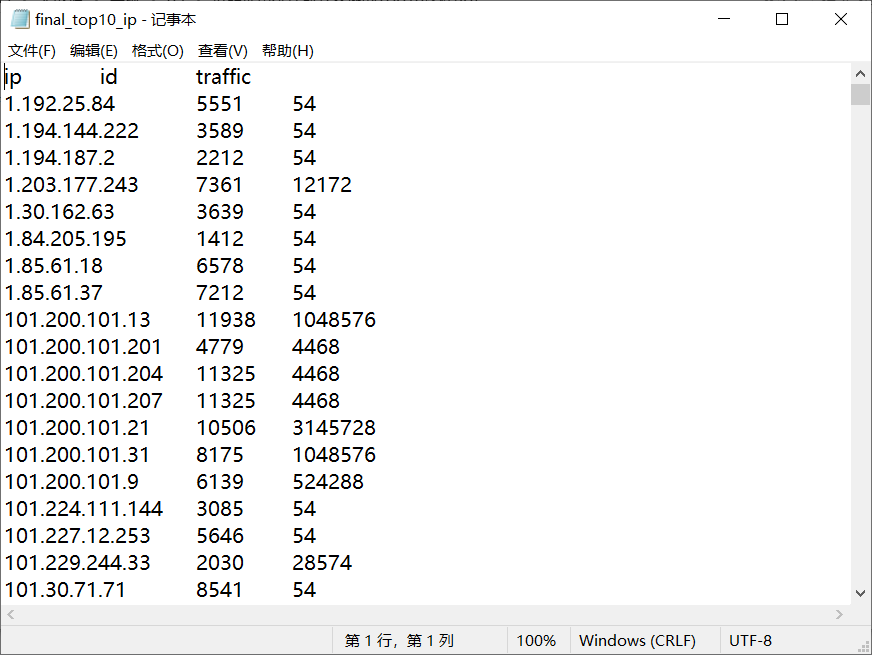

· Top 10 most popular courses by city (ip)

· Top 10 most popular courses by traffic (traffic)

3. Data visualization: import the statistics into a MySQL database and present them graphically.

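Solution:

1. Data cleaning: clean the data as required and import the cleaned data into the Hive database.

1.1 Data cleaning

Raw data format

Upload the raw data file result.txt to HDFS, then read and clean it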

cleanData.java: (read and clean)

```java
package com.Use;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

public class cleanData {

    public static class Map extends Mapper<Object, Text, Text, IntWritable> {
        private final Text newKey = new Text();

        // Converts "10/Nov/2016:00:01:02 +0800" into "2016-11-10 00:01:02".
        private static String chage(String data) {
            // Pieces: day, month name, year, hour, minute, second, zone offset.
            String[] time = data.trim().split("[/: ]");
            switch (time[1]) {
                case "Jan": time[1] = "01"; break;
                case "Feb": time[1] = "02"; break;
                case "Mar": time[1] = "03"; break;
                case "Apr": time[1] = "04"; break;
                case "May": time[1] = "05"; break;
                case "Jun": time[1] = "06"; break;
                case "Jul": time[1] = "07"; break;
                case "Aug": time[1] = "08"; break;
                case "Sep": time[1] = "09"; break;
                case "Oct": time[1] = "10"; break;
                case "Nov": time[1] = "11"; break;
                case "Dec": time[1] = "12"; break;
            }
            return time[2] + "-" + time[1] + "-" + time[0]
                    + " " + time[3] + ":" + time[4] + ":" + time[5];
        }

        @Override
        public void map(Object key, Text value, Context context)
                throws IOException, InterruptedException {
            // Each input line: ip,date,day,traffic,type,id
            String[] arr = value.toString().split(",");
            if (arr.length < 6) {
                return; // skip malformed lines instead of failing the whole job
            }
            String ip = arr[0].trim();
            String date = chage(arr[1]);
            String day = arr[2].trim();
            String traffic = arr[3].trim(); // the raw value has a trailing space, e.g. "54 "
            String type = arr[4].trim();
            String id = arr[5].trim();

            // Fields are joined with spaces; note that the date itself contains a space.
            newKey.set(ip + ' ' + date + ' ' + day + ' ' + traffic + ' ' + type);
            // newKey.set(ip + ',' + date + ',' + day + ',' + traffic + ',' + type);
            context.write(newKey, new IntWritable(Integer.parseInt(id)));
        }
    }

    public static class Reduce extends Reducer<Text, IntWritable, Text, IntWritable> {
        @Override
        public void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            // Pass every record through unchanged; the job only cleans and sorts by key.
            for (IntWritable val : values) {
                context.write(key, val);
            }
        }
    }

    public static void main(String[] args)
            throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();
        System.out.println("start");
        Job job = Job.getInstance(conf, "cleanData"); // new Job(conf, name) is deprecated
        job.setJarByClass(cleanData.class);
        job.setMapperClass(Map.class);
        job.setReducerClass(Reduce.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        job.setInputFormatClass(TextInputFormat.class);
        job.setOutputFormatClass(TextOutputFormat.class);
        Path in = new Path("hdfs://192.168.137.112:9000/tutorial/in/result.txt");
        Path out = new Path("hdfs://192.168.137.112:9000/tutorial/out");
        FileInputFormat.addInputPath(job, in);
        FileOutputFormat.setOutputPath(job, out);
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```

Format after cleaning

2. Data processing:

2.1 Top 10 most popular videos/articles by number of visits (video/article)

Read the cleaned .txt file and process it with MapReduce
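
The post does not show the MapReduce code for this step, so the following is only a sketch of the counting logic in plain Java, without Hadoop. In a MapReduce job the same idea would be a mapper emitting a (type/id, 1) pair per record and a reducer summing the ones. The class `TopVisitsSketch` and the "type/id" key layout are assumptions made for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical sketch: count visits per "type/id" key and keep the n largest.
class TopVisitsSketch {
    static List<Map.Entry<String, Long>> topByVisits(List<String> keys, int n) {
        // Equivalent of map (emit key, 1) followed by reduce (sum per key).
        Map<String, Long> counts = keys.stream()
                .collect(Collectors.groupingBy(k -> k, Collectors.counting()));
        // Sort by count descending; break ties by key so the order is deterministic.
        return counts.entrySet().stream()
                .sorted(Map.Entry.<String, Long>comparingByValue().reversed()
                        .thenComparing(Map.Entry.<String, Long>comparingByKey()))
                .limit(n)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> keys = Arrays.asList(
                "video/8701", "article/11325", "video/8701", "video/3235");
        for (Map.Entry<String, Long> e : topByVisits(keys, 10)) {
            System.out.println(e.getKey() + "\t" + e.getValue());
        }
    }
}
```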

2.2 Top 10 most popular courses by city (ip)

Read the cleaned .txt file and process it with MapReduce

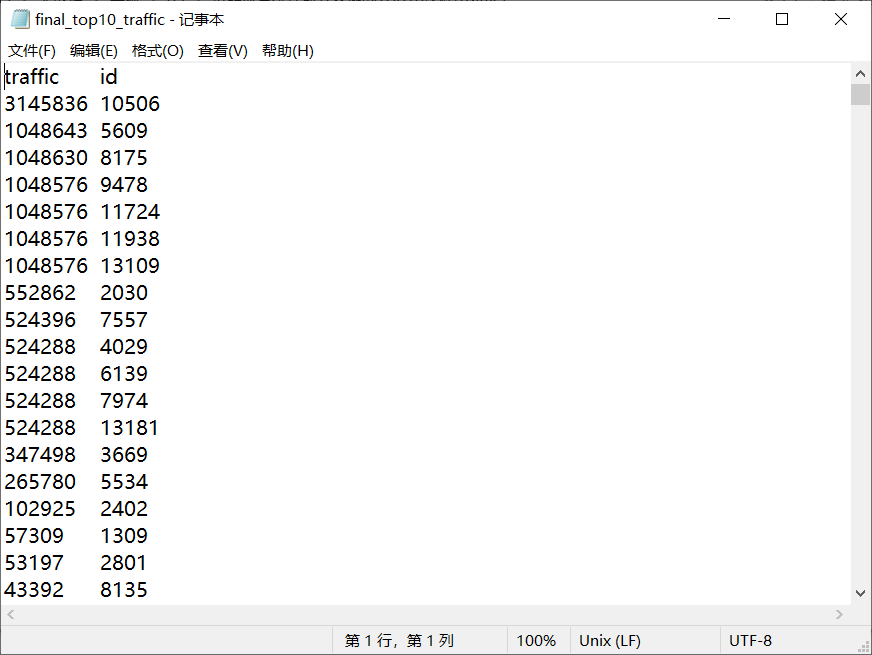

2.3 Top 10 most popular courses by traffic (traffic)

Read the cleaned .txt file and process it with MapReduce
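
As with the other statistics, the job itself is not shown, so here is a hedged plain-Java sketch of one way to rank by traffic: sum the traffic per id, then keep the largest totals with a bounded min-heap. The class `TopTrafficSketch` and the `{id, traffic}` row layout are assumptions, not the author's code.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.PriorityQueue;

// Hypothetical sketch: total traffic per id, then the n largest totals.
class TopTrafficSketch {
    // Each row is {id, traffic}; traffic may carry stray whitespace such as "54 ".
    static List<Map.Entry<String, Long>> topByTraffic(List<String[]> rows, int n) {
        Map<String, Long> totals = new HashMap<>();
        for (String[] row : rows) {
            totals.merge(row[0].trim(), Long.parseLong(row[1].trim()), Long::sum);
        }
        // Min-heap of size at most n: the smallest kept total sits on top
        // and is evicted whenever a larger one arrives.
        PriorityQueue<Map.Entry<String, Long>> heap =
                new PriorityQueue<>(Map.Entry.<String, Long>comparingByValue());
        for (Map.Entry<String, Long> e : totals.entrySet()) {
            heap.offer(e);
            if (heap.size() > n) {
                heap.poll();
            }
        }
        List<Map.Entry<String, Long>> result = new ArrayList<>(heap);
        result.sort(Map.Entry.<String, Long>comparingByValue().reversed());
        return result;
    }

    public static void main(String[] args) {
        List<String[]> rows = Arrays.asList(
                new String[] {"8701", "54 "},
                new String[] {"11325", "62"},
                new String[] {"8701", "10"});
        for (Map.Entry<String, Long> e : topByTraffic(rows, 10)) {
            System.out.println(e.getKey() + "\t" + e.getValue());
        }
    }
}
```

The heap keeps memory proportional to n rather than to the number of distinct ids during selection; when several ids tie at the cutoff, which of them is kept is arbitrary in this sketch.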

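3. Data visualization: import the statistics into a MySQL database and present them graphically.

Results of 2.2: the graphical display has not been written yet

Results of 2.1 and 2.3: import the .txt files containing the statistics into the MySQL database and visualize them with ECharts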
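
The import step is not shown in the post. A minimal sketch using JDBC, assuming the result files contain one `key<TAB>value` pair per line (the default layout written by `TextOutputFormat`) and assuming a hypothetical MySQL table `top10(name, total)`, could look like this. The class, table, and column names are all illustrative.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.util.List;

// Hypothetical sketch: load key<TAB>value result lines into a MySQL table.
class MysqlImportSketch {
    // Splits one result line into {key, value}.
    static String[] parseLine(String line) {
        String[] kv = line.split("\t", 2);
        if (kv.length != 2) {
            throw new IllegalArgumentException("expected key<TAB>value: " + line);
        }
        return new String[] {kv[0].trim(), kv[1].trim()};
    }

    // Inserts all lines in one batch; the table top10(name, total) is assumed to exist.
    static void insertAll(Connection conn, List<String> lines) throws SQLException {
        String sql = "INSERT INTO top10 (name, total) VALUES (?, ?)";
        try (PreparedStatement ps = conn.prepareStatement(sql)) {
            for (String line : lines) {
                String[] kv = parseLine(line);
                ps.setString(1, kv[0]);
                ps.setLong(2, Long.parseLong(kv[1]));
                ps.addBatch();
            }
            ps.executeBatch();
        }
    }

    public static void main(String[] args) {
        // Parsing only; a real run needs a Connection from DriverManager
        // and the MySQL JDBC driver on the classpath.
        String[] kv = parseLine("video/8701\t42");
        System.out.println(kv[0] + " -> " + kv[1]);
    }
}
```

A chart page can then query this table and feed the rows to ECharts as the series data.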
