Kafka 是现在大数据中流行的消息中间件,其中 kafka 中由 topic 组成,而 topic 下又可以由多个 partition 构成。有时候我们在消费 kafka 中的数据想要保证消费 kafka 中的所有的分区下数据是全局有序的,这种情况下就需要将 topic 下的 partition 的数量设置为一个这样才会保证全局有序,但是这种情况消费数据并没有多并发,也就影响效率。

在 Flink 中则可以即保证消费 kafka 中的数据全局有序,又可以构成多并发,这就是 flink 中的时间特性带来的效果。Flink 在创建 kafka 的数据源时可以将其中的所有数据都存有时间并设置对应的 watermark,这样利用 event time 对 kafka 中的数据已经形成了时间概念上的全局有序性,当 flink 在消费其中的数据时则根据时间处理即可保证 kafka 中数据的全局有序性。

下面一部分内容来至于 flink 的官网,链接:

https://ci.apache.org/projects/flink/flink-docs-release-1.8/dev/event_timestamps_watermarks.html

Timestamps per Kafka Partition

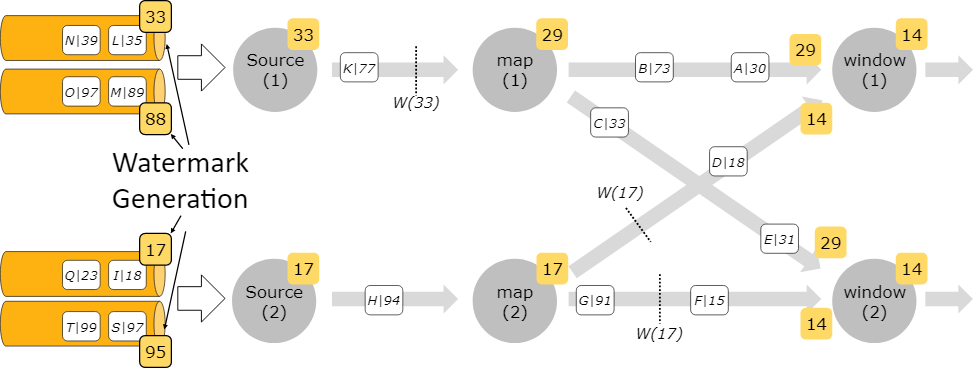

When using Apache Kafka as a data source, each Kafka partition may have a simple event time pattern (ascending timestamps or bounded out-of-orderness). However, when consuming streams from Kafka, multiple partitions often get consumed in parallel, interleaving the events from the partitions and destroying the per-partition patterns (this is inherent in how Kafka’s consumer clients work).

In that case, you can use Flink’s Kafka-partition-aware watermark generation. Using that feature, watermarks are generated inside the Kafka consumer, per Kafka partition, and the per-partition watermarks are merged in the same way as watermarks are merged on stream shuffles.

For example, if event timestamps are strictly ascending per Kafka partition, generating per-partition watermarks with the ascending timestamps watermark generator will result in perfect overall watermarks.

The illustrations below show how to use the per-Kafka-partition watermark generation, and how watermarks propagate through the streaming dataflow in that case.

FlinkKafkaConsumer09<MyType> kafkaSource = new FlinkKafkaConsumer09<>("myTopic", schema, props);

kafkaSource.assignTimestampsAndWatermarks(new AscendingTimestampExtractor<MyType>() {

@Override

public long extractAscendingTimestamp(MyType element) {

return element.eventTimestamp();

}

});

DataStream<MyType> stream = env.addSource(kafkaSource);