Previous articles in this series:

Playing with Kubernetes on Windows, Part 1: Installing CentOS in VirtualBox

Playing with Kubernetes on Windows, Part 2: Installing Docker on CentOS

Install Kubernetes

yum install -y kubeadm

The related packages are pulled in as dependencies, so kubelet, kubeadm, kubectl, and kubernetes-cni all get installed:

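- kubeadm: a one-step deployment tool for Kubernetes clusters. It simplifies installation by deploying the core Kubernetes components and add-ons as pods.

- kubelet: the node agent that runs on every node. The cluster actually manages the containers on each node through kubelet. Because it needs direct access to the host's resources, it does not run in a pod but is installed on the system as a service.

- kubectl: the Kubernetes command-line tool. It talks to the API server to perform all kinds of operations on the cluster.

- kubernetes-cni: the CNI network plugins used by Kubernetes. They create a virtual cni0 bridge on the host through which pods communicate with each other, much like docker0.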
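A quick way to confirm what the yum step actually installed is sketched below. It assumes the usual RPM layout (binaries on PATH, CNI plugin binaries under /opt/cni/bin). The function name `check_k8s_components` is made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: report whether the packages pulled in by `yum install -y kubeadm`
# are present. Assumes the usual RPM layout: binaries on PATH and the CNI
# plugin binaries under /opt/cni/bin.

check_k8s_components() {
  local bin
  for bin in kubeadm kubelet kubectl; do
    if command -v "$bin" >/dev/null 2>&1; then
      echo "$bin: found at $(command -v "$bin")"
    else
      echo "$bin: missing"
    fi
  done
  # kubernetes-cni installs plugin binaries, not a command on PATH
  if [ -x /opt/cni/bin/bridge ]; then
    echo "kubernetes-cni: found (/opt/cni/bin/bridge)"
  else
    echo "kubernetes-cni: missing"
  fi
}

check_k8s_components
```

Each line is either "found" or "missing", so a missing dependency shows up before you clone the VM.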

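Shut down the virtual machine

Clone the worker nodes

Clone the VM to create the worker nodes "centos7-k8s-node1" and "centos7-k8s-node2".

Pay attention to the MAC address setting: each clone needs its own MAC addresses, so generate new ones instead of keeping the original VM's.

The network settings are as follows: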

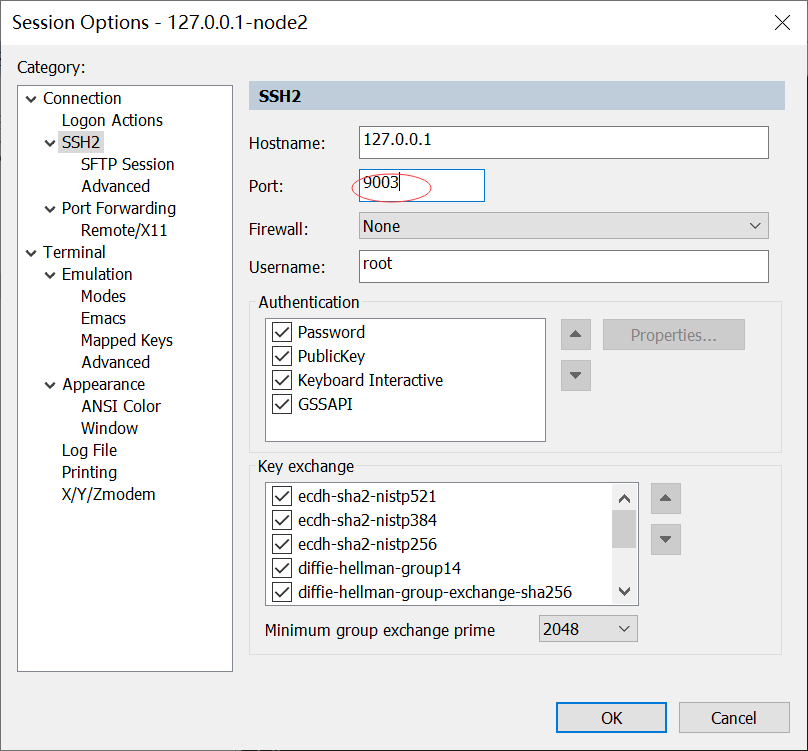

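Clone the other node the same way and set its port forwarding to 9003->22.

The final list of nodes:

Add network adapter 2

Each of the three nodes has only one adapter (NAT). It lets a node communicate with the host but not with the other VMs. Add adapter 2 with the following settings so the VMs can also communicate with each other.

Add two new sessions in WinSCP, one for each worker node

Configure the virtual network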

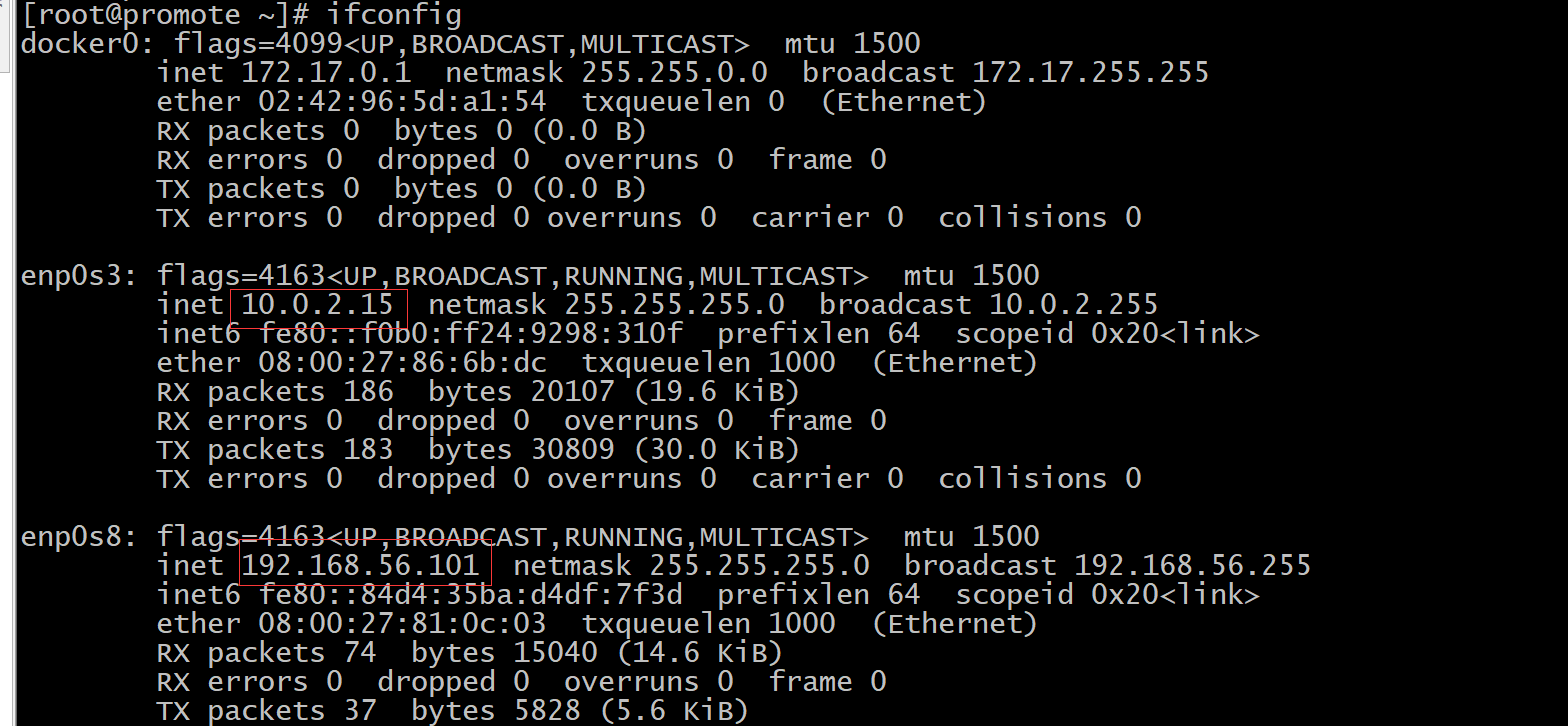
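The cloning and adapter steps above can also be done from VirtualBox's command-line tool. The sketch below assumes you call VBoxManage (VBoxManage.exe on Windows, for example from Git Bash). It only prints the commands so you can review them first.

Assumptions, all hypothetical: the source VM is named `centos7-k8s-master`, the host-only adapter is called "VirtualBox Host-Only Ethernet Adapter", and node1 forwards port 9002 (only 9003 for node2 comes from the text). A clone also inherits the master's NAT rule. If that rule's host port clashes, delete it with `VBoxManage modifyvm <vm> --natpf1 delete <rulename>`.

```shell
#!/usr/bin/env bash
# Dry run: print the VBoxManage commands for cloning a worker VM and wiring
# up its two adapters. SRC_VM, HOSTONLY_IF and the ssh-<vm> rule names are
# hypothetical; 9002 for node1 is assumed, 9003 for node2 is from the text.

SRC_VM="centos7-k8s-master"                           # hypothetical source VM name
HOSTONLY_IF="VirtualBox Host-Only Ethernet Adapter"   # assumed host-only adapter name

plan_worker() {
  local name=$1 ssh_port=$2
  # clonevm generates fresh MAC addresses unless asked to keep them
  echo "VBoxManage clonevm \"$SRC_VM\" --name \"$name\" --register"
  # adapter 1 (NAT): forward host port -> guest port 22 for SSH/WinSCP
  echo "VBoxManage modifyvm \"$name\" --natpf1 \"ssh-$name,tcp,,$ssh_port,,22\""
  # adapter 2 (host-only): lets the VMs reach each other on 192.168.56.0/24
  echo "VBoxManage modifyvm \"$name\" --nic2 hostonly --hostonlyadapter2 \"$HOSTONLY_IF\""
}

plan_worker centos7-k8s-node1 9002
plan_worker centos7-k8s-node2 9003
# The master needs adapter 2 as well:
echo "VBoxManage modifyvm \"$SRC_VM\" --nic2 hostonly --hostonlyadapter2 \"$HOSTONLY_IF\""
```

Run these only while the VMs are powered off, because modifyvm cannot change a running VM.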

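On the master node, run:

ifconfig

Find the IP addresses of adapter 1 and adapter 2.

enp0s3 corresponds to "adapter 1", and enp0s8 is the "adapter 2" we just added. The IP addresses of my three nodes are:

- master: adapter 1 IP: 10.0.2.15, adapter 2 IP: 192.168.56.101

- node1: adapter 1 IP: 10.0.2.15, adapter 2 IP: 192.168.56.102

- node2: adapter 1 IP: 10.0.2.15, adapter 2 IP: 192.168.56.103

Adapter 1 has the same address, 10.0.2.15, on every node. The nodes therefore have to reach each other through their enp0s8 addresses.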
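ifconfig comes from the net-tools package, which a minimal CentOS 7 install may not include. `ip -o -4 addr show` from iproute2 prints one line per address and is easy to parse. The helper name `list_ipv4` is hypothetical. The sample line below is illustrative, not captured from the author's machine.

```shell
#!/usr/bin/env bash
# Sketch: print "interface address" pairs from `ip -o -4 addr show` output,
# a scriptable alternative to reading ifconfig by eye.

list_ipv4() {
  # one line per address; field 2 is the interface, field 4 is addr/prefix
  awk '$3 == "inet" { split($4, a, "/"); print $2, a[1] }'
}

# On a real node:  ip -o -4 addr show | list_ipv4
# Illustrative sample line for the host-only adapter:
sample='3: enp0s8    inet 192.168.56.101/24 brd 192.168.56.255 scope global noprefixroute dynamic enp0s8\       valid_lft 1148sec preferred_lft 1148sec'
printf '%s\n' "$sample" | list_ipv4
```

On the master this should list enp0s3 with 10.0.2.15 and enp0s8 with the 192.168.56.x address you need later.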

Configure the master node as follows:

vi /etc/hostname

Change the hostname to master-node.

vi /etc/hosts

Add the following entries:

192.168.56.101 master-node

192.168.56.102 work-node1

192.168.56.103 work-node2

Configure work-node1 and work-node2 the same way, with hostnames work-node1 and work-node2 respectively. The hosts file content is identical on all three nodes.
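The per-node setup above can be scripted so that rerunning it does not duplicate lines in the hosts file. This is a sketch. The function name and the `HOSTS_FILE` override are hypothetical. `hostnamectl set-hostname` is the systemd alternative to editing /etc/hostname by hand, and it takes effect without a reboot.

```shell
#!/usr/bin/env bash
# Sketch: add the cluster's host entries to a hosts file only if missing.
# HOSTS_FILE defaults to /etc/hosts; point it at a scratch file to try it out.

HOSTS_FILE=${HOSTS_FILE:-/etc/hosts}

add_cluster_hosts() {
  local entry
  for entry in "192.168.56.101 master-node" \
               "192.168.56.102 work-node1" \
               "192.168.56.103 work-node2"; do
    # -x: whole-line match, -F: fixed string, so reruns don't duplicate lines
    grep -qxF "$entry" "$HOSTS_FILE" || echo "$entry" >> "$HOSTS_FILE"
  done
}

# On each node, as root (use the matching hostname on each worker):
#   hostnamectl set-hostname master-node
#   add_cluster_hosts
```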

Initialize Kubernetes on the master node

Run the following command to start initializing the master node:

kubeadm init --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.56.101

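Note that --pod-network-cidr=10.244.0.0/16 is a setting required by the network plugin. It is used to allocate a subnet to each node. The plugin used here is flannel, which expects exactly this value; other plugins have their own settings. The --apiserver-advertise-address option sets the IP address the API server advertises. It must be the IP of the enp0s8 adapter mentioned above; otherwise kubeadm defaults to the enp0s3 adapter.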
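The same two settings can also be put in a kubeadm configuration file and passed with `kubeadm init --config` instead of the two flags. The sketch below assumes the kubeadm.k8s.io/v1beta2 config API, which kubeadm 1.17 accepts. The file name, the helper function, and the default address 192.168.56.101 (the master's enp0s8 IP from the list above) are illustrative choices.

```shell
#!/usr/bin/env bash
# Sketch: the same init settings as a kubeadm config file (API v1beta2,
# which kubeadm 1.17 accepts). The output path is an assumption.

write_kubeadm_config() {
  local file=${1:-kubeadm-config.yaml} addr=${2:-192.168.56.101}
  cat > "$file" <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: $addr        # enp0s8 (host-only) address of the master
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.17.2
networking:
  podSubnet: 10.244.0.0/16       # must match flannel's Network setting
EOF
}

# Usage on the master:
#   write_kubeadm_config kubeadm-config.yaml 192.168.56.101
#   kubeadm init --config kubeadm-config.yaml
```

A file is easier to keep under version control than a long command line, and it is also where further settings (for example a custom image repository) would go.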

If you get the following error:

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1

run:

echo "1" >/proc/sys/net/bridge/bridge-nf-call-iptables
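Writing to /proc only lasts until the next reboot, and only on the node where you run it. kubeadm join performs the same preflight check, so the workers need the setting too. A sketch for making it persistent is below. The file name /etc/sysctl.d/k8s.conf and the helper name are assumptions.

```shell
#!/usr/bin/env bash
# Sketch: make the bridge netfilter setting survive reboots.
# SYSCTL_FILE is an assumed file name; override it to try this out safely.

SYSCTL_FILE=${SYSCTL_FILE:-/etc/sysctl.d/k8s.conf}

write_k8s_sysctl() {
  cat > "$SYSCTL_FILE" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF
}

# As root on every node:
#   modprobe br_netfilter   # /proc/sys/net/bridge exists only with this module loaded
#   write_k8s_sysctl
#   sysctl --system         # reload all sysctl configuration files
```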

Run the init command again. This time it fails with the following errors:

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-apiserver:v1.17.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-controller-manager:v1.17.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-scheduler:v1.17.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-proxy:v1.17.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/pause:3.1: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/etcd:3.4.3-0: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/coredns:1.6.5: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

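These images are hosted on k8s.gcr.io, which cannot be reached from mainland China without a proxy. Without one, the only option is to download them from a domestic mirror.

First pull each image and retag it, for example: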

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.2 k8s.gcr.io/kube-apiserver:v1.17.2

Run the following commands. Once they finish, run them on work-node1 and work-node2 as well:

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.17.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.17.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.17.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.5

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.2 k8s.gcr.io/kube-apiserver:v1.17.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.17.2 k8s.gcr.io/kube-controller-manager:v1.17.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.17.2 k8s.gcr.io/kube-scheduler:v1.17.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.17.2 k8s.gcr.io/kube-proxy:v1.17.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1 k8s.gcr.io/pause:3.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0 k8s.gcr.io/etcd:3.4.3-0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.5 k8s.gcr.io/coredns:1.6.5
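The pull-and-retag list above is the same two commands repeated per image, so a loop is less error-prone. The sketch below prints the commands by default (`DRY_RUN=1`) and runs them with `DRY_RUN=0`. The helper names are hypothetical.

Two related kubeadm features exist. `kubeadm config images list --kubernetes-version v1.17.2` prints exactly which images a version needs. Separately, `kubeadm init` accepts `--image-repository registry.cn-hangzhou.aliyuncs.com/google_containers`, which pulls from the mirror without retagging. That is an alternative, not the approach used in this article.

```shell
#!/usr/bin/env bash
# Sketch: the pull-and-retag steps above as one loop. With DRY_RUN=1 (the
# default here) it only prints the docker commands; set DRY_RUN=0 to run them.

MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers
IMAGES="kube-apiserver:v1.17.2 kube-controller-manager:v1.17.2
kube-scheduler:v1.17.2 kube-proxy:v1.17.2 pause:3.1 etcd:3.4.3-0 coredns:1.6.5"

run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

pull_and_retag() {
  local img
  for img in $IMAGES; do      # word splitting on whitespace is intended here
    run docker pull "$MIRROR/$img"
    run docker tag "$MIRROR/$img" "k8s.gcr.io/$img"
  done
}

pull_and_retag
```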

Run the init command again:

kubeadm init --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.56.101

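After a successful run you can see that kubeadm has done a lot of work for you, including kubelet configuration, certificates, kubeconfig files, and add-on installation. Note the last line: kubeadm tells you that any other node only needs to run this command to join the cluster. This is the join command from my run (the address, token, and hash will differ in yours):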

kubeadm join 192.168.56.104:6443 --token qi6rn1.yd8oidvo10hefayk \
    --discovery-token-ca-cert-hash sha256:29f4541851f0ff1f318e4e103aa67139dd4af85903850451862089379620e8c1

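kubeadm also reminds you that to complete the installation you still need to install a pod network add-on with kubectl apply -f [podnetwork].yaml. Before using kubectl, copy the admin kubeconfig as kubeadm's output instructs: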

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Start kubelet

After kubelet, kubeadm, and kubectl are installed, kubelet needs to be enabled and started:

systemctl enable kubelet && systemctl start kubelet

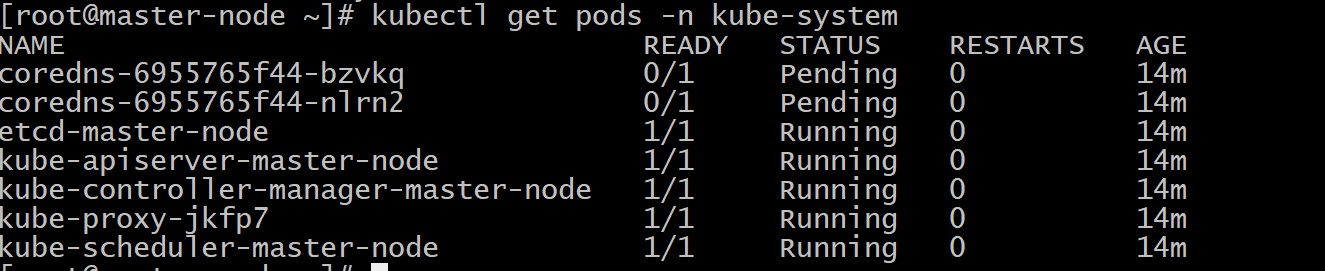

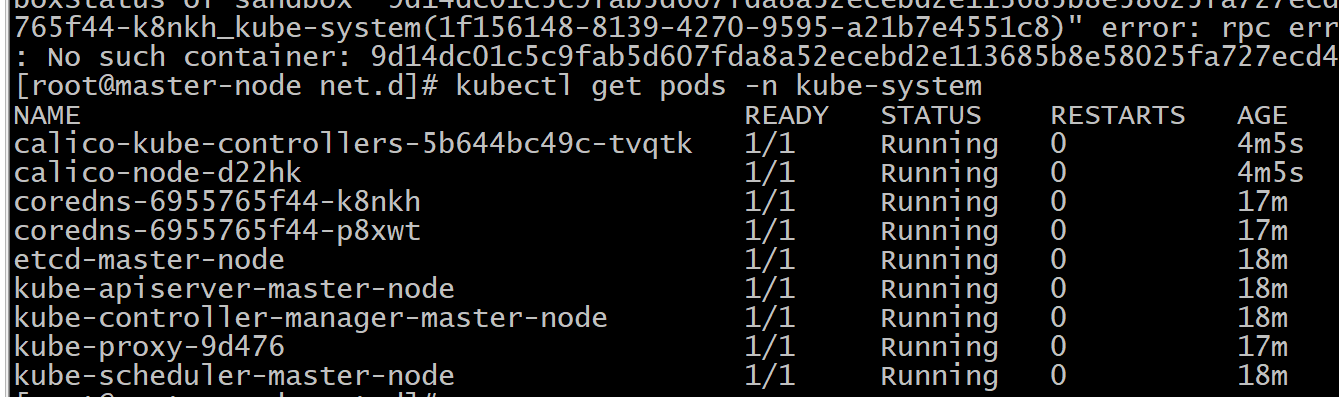

Only the master node shows up, because we haven't added the worker nodes yet. Check the pods:

kubectl get pods -n kube-system

The two coredns pods are Pending because no network plugin has been installed yet. Here we use flannel:

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl create -f kube-flannel.yml

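If the flannel image cannot be pulled from quay.io, pull it from the Qiniu mirror and retag it. Do this on every node, since flannel runs on each of them:

docker pull quay-mirror.qiniu.com/coreos/flannel:v0.11.0-amd64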

docker tag quay-mirror.qiniu.com/coreos/flannel:v0.11.0-amd64 quay.io/coreos/flannel:v0.11.0-amd64
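In this VirtualBox setup enp0s3 has the same NAT address (10.0.2.15) on every node. flannel defaults to the interface of the default route, which here is likely enp0s3, and that can break pod traffic between nodes. flannel's `--iface` flag selects the interface explicitly.

The sketch below adds that flag to the manifest. It assumes GNU sed and a manifest that passes `- --kube-subnet-mgr` as a container argument, as the downloaded kube-flannel.yml is expected to. The helper name is hypothetical. If you already created the manifest, patch it and re-apply it.

```shell
#!/usr/bin/env bash
# Sketch (GNU sed assumed): add flannel's --iface flag to kube-flannel.yml so
# it uses the host-only adapter. Assumes the manifest passes
# "- --kube-subnet-mgr" as a container arg.

patch_flannel_iface() {
  local file=$1 iface=${2:-enp0s8}
  # already patched: do nothing, so reruns don't add the flag twice
  grep -q -- '--iface=' "$file" && return 0
  # repeat the arg line's indentation and append "- --iface=<iface>" after it
  sed -i "s/^\( *\)- --kube-subnet-mgr\$/&\n\1- --iface=$iface/" "$file"
}

# Usage on the master:
#   patch_flannel_iface kube-flannel.yml enp0s8
#   kubectl apply -f kube-flannel.yml
```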

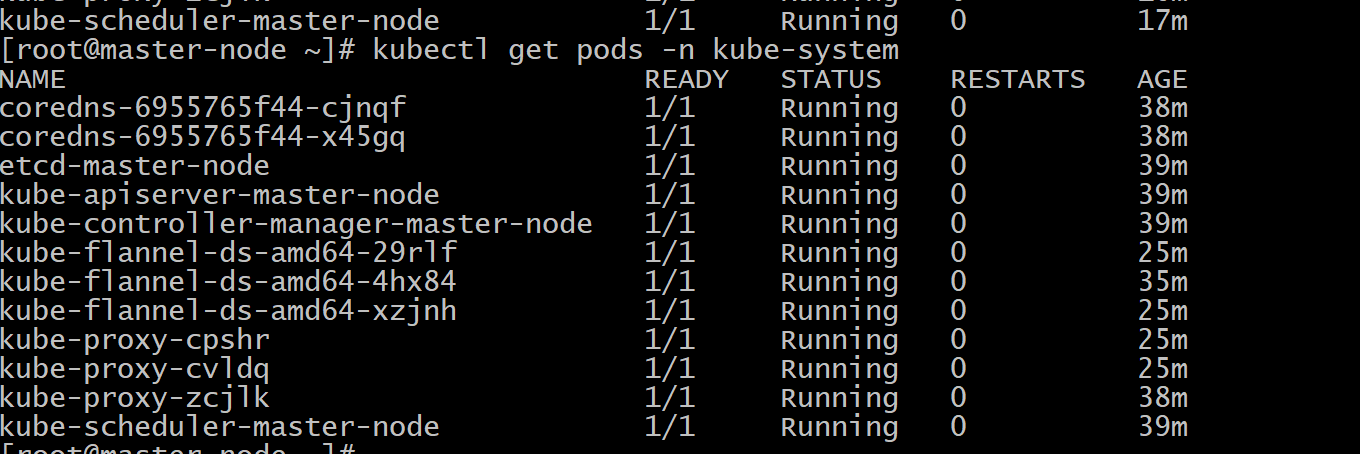

Check the pod status again; all pods are now Running:

kubectl get pods -n kube-system
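Instead of rerunning `kubectl get pods` by hand until everything is up, you can poll. The sketch below assumes the default table output, where STATUS is the third column of `kubectl get pods --no-headers`. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: wait until every kube-system pod is Running instead of re-running
# `kubectl get pods` by hand. Assumes the default table output, where
# STATUS is the third column of `kubectl get pods --no-headers`.

count_not_running() {
  # reads pod lines on stdin, prints how many are not in the Running state
  awk '$3 != "Running" { n++ } END { print n + 0 }'
}

wait_for_kube_system() {
  local tries=${1:-60} out left
  while [ "$tries" -gt 0 ]; do
    if out=$(kubectl get pods -n kube-system --no-headers 2>/dev/null) && [ -n "$out" ]; then
      left=$(printf '%s\n' "$out" | count_not_running)
      if [ "$left" -eq 0 ]; then
        echo "all kube-system pods are Running"
        return 0
      fi
      echo "$left kube-system pod(s) not Running yet"
    fi
    sleep 5
    tries=$((tries - 1))
  done
  return 1   # gave up after roughly tries * 5 seconds
}

# Usage on the master:  wait_for_kube_system 60
```

An empty or failed `kubectl` call is treated as "not ready yet" rather than success. That way a briefly unavailable API server does not end the wait early.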

If you use Calico instead, run:

curl https://docs.projectcalico.org/v3.11/manifests/calico.yaml -O

kubectl apply -f calico.yaml
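Calico's manifest sets its own pod IP pool. If it is 192.168.0.0/16, it overlaps the 192.168.56.0/24 host-only network used here and differs from the 10.244.0.0/16 passed to kubeadm init. The sketch below changes the pool between the `curl` and the `kubectl apply`. It assumes calico.yaml sets `CALICO_IPV4POOL_CIDR` to "192.168.0.0/16", so check the file if the substitution changes nothing. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: make Calico's pod IP pool match --pod-network-cidr before applying.
# Assumes calico.yaml sets CALICO_IPV4POOL_CIDR to "192.168.0.0/16".

set_calico_pool() {
  local file=$1 cidr=${2:-10.244.0.0/16}
  # '#' as the sed delimiter, because the CIDR itself contains '/'
  sed -i "s#\"192\.168\.0\.0/16\"#\"$cidr\"#" "$file"
}

# Usage:
#   curl https://docs.projectcalico.org/v3.11/manifests/calico.yaml -O
#   set_calico_pool calico.yaml 10.244.0.0/16
#   kubectl apply -f calico.yaml
```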

Check the kubelet status

systemctl status kubelet -l

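Use the master as a worker node

By default, the cluster does not schedule pods onto the master, which leaves the master's resources unused. On the master (master-node), run the following command so it also acts as a worker node: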

kubectl taint nodes --all node-role.kubernetes.io/master-

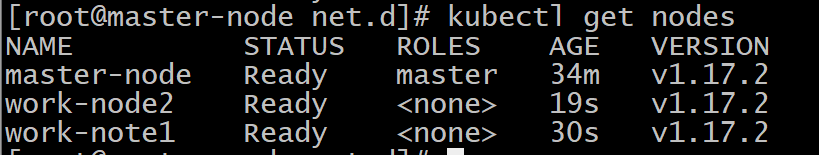

Join the other nodes to the cluster

On the other two nodes, work-node1 and work-node2, run the kubeadm join command generated on the master to join the cluster:

kubeadm join 192.168.56.101:6443 --token vo1jir.ok9bd13hy940kqsf \
    --discovery-token-ca-cert-hash sha256:8b2f5e3e2776610b33ce3020c2a7e8a31fc1be891887949e7df6f65a3c3088b0
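The token printed by kubeadm init expires after 24 hours by default. On the master, `kubeadm token create --print-join-command` prints a fresh join command. If you only need the CA hash, the kubeadm documentation gives an openssl pipeline for it, wrapped below in a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: recompute the --discovery-token-ca-cert-hash value from the
# cluster CA certificate (the openssl pipeline given in the kubeadm docs).

ca_cert_hash() {
  local cert=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -noout -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# On the master, when the original token has expired:
#   kubeadm token create --print-join-command
# or assemble the flag by hand:
#   echo "--discovery-token-ca-cert-hash sha256:$(ca_cert_hash)"
```

The hash is the SHA-256 of the CA's DER-encoded public key. A joining node uses it to verify that it is talking to the right control plane.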

After a moment, you will see the following result:


This article was published via OpenWrite, a multi-platform blog publishing tool.