fenbushi.py

```python
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import Rule
from scrapy_redis.spiders import RedisCrawlSpider

from FenbushiProject.items import FenbushiprojectItem


class FenbushiSpider(RedisCrawlSpider):
    # Must match the name passed to `scrapy crawl fenbushi`
    name = 'fenbushi'

    # No start_urls: the start URL is read from the Redis key below instead
    # start_urls = ['https://www.1905.com/vod/list/n_1_t_1/o3.html?fr=vodhome_js_lx']
    redis_key = 'jianliQuene'  # name of the Redis list the spider polls for start URLs

    # Follow the numbered list pages (o3p<N>.html); \d+ and \. need their backslashes
    link = LinkExtractor(allow=r'https://www\.1905\.com/vod/list/n_1_t_1/o3p\d+\.html')

    rules = (
        Rule(link, callback='parse_item', follow=True),
    )

    def parse_item(self, response):
        divs = response.xpath('//*[@id="content"]/section[4]/div')
        for div in divs:
            # href = div.xpath('./a/@href').get()
            title = div.xpath('./a/@title').get()
            if title is None:
                continue  # skip blocks without a titled link instead of raising IndexError
            item = FenbushiprojectItem()
            # item["href"] = href
            item["title"] = title
            print(title)  # LOG_LEVEL is 'ERROR', so print gives visible progress
            yield item
```
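The `allow` pattern is supposed to match the numbered list pages. Read literally, `o3pd+` would only match URLs such as `o3pd.html`. That is why it looks like `o3p\d+` with the backslash lost. The quick check below tests candidate URLs the way LinkExtractor's `allow` filter does, using a regex search. The page-URL format `o3p<N>.html` is an assumption inferred from the pattern.

```python
import re

# The spider's `allow` pattern with backslashes in place: `\d+` for the page
# number and `\.` so the dots only match a literal "." (assumed URL format).
PAGE_PATTERN = re.compile(r'https://www\.1905\.com/vod/list/n_1_t_1/o3p\d+\.html')


def is_page_link(url: str) -> bool:
    """Test a URL the way LinkExtractor's `allow` filter does: a regex search."""
    return PAGE_PATTERN.search(url) is not None


for url in [
    "https://www.1905.com/vod/list/n_1_t_1/o3p2.html",
    "https://www.1905.com/vod/list/n_1_t_1/o3.html?fr=vodhome_js_lx",
    "https://www.1905.com/vod/list/n_1_t_1/o3pd.html",
]:
    print(is_page_link(url), url)  # True, then False, then False
```

The start URL itself does not match. It is handled by the spider's default callback, and only the links found on it are checked against the rule.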

items.py

```python
import scrapy


class FenbushiprojectItem(scrapy.Item):
    title = scrapy.Field()  # movie title taken from the list page
```

settings.py

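```python
USER_AGENT = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36'

ROBOTSTXT_OBEY = False

LOG_LEVEL = 'ERROR'

# Use scrapy-redis's dupefilter: request fingerprints are stored in Redis and shared by all workers
DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"

# Use scrapy-redis's own scheduler: the request queue lives in Redis instead of in memory
SCHEDULER = "scrapy_redis.scheduler.Scheduler"

# Keep the queue and fingerprints in Redis when the spider stops, so the crawl can be paused and resumed
SCHEDULER_PERSIST = True

# Item pipeline: store scraped items in Redis
ITEM_PIPELINES = {
    'scrapy_redis.pipelines.RedisPipeline': 400,
}

# Redis server shared by all workers; on other machines, set this to the Redis host's IP
REDIS_HOST = '127.0.0.1'
REDIS_PORT = 6379
```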
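The two scrapy-redis settings above are what make the crawl distributed. Every worker pushes requests into one shared queue. Each worker also checks one shared fingerprint set, so a page scheduled by one machine is not fetched again by another. The sketch below models that idea in memory. `FakeSharedRedis`, `Worker`, and the simplified `fingerprint` are hypothetical illustrations, not the scrapy-redis classes. By default scrapy-redis also uses a priority queue rather than a plain FIFO.

```python
import hashlib
from collections import deque


class FakeSharedRedis:
    """Hypothetical in-memory stand-in for the single Redis server all workers share."""

    def __init__(self):
        self.requests = deque()  # role of the shared request queue (SCHEDULER)
        self.seen = set()        # role of the shared fingerprint set (DUPEFILTER_CLASS)


def fingerprint(method: str, url: str) -> str:
    """Simplified fingerprint; Scrapy's real one also covers the body and a canonicalized URL."""
    return hashlib.sha1(f"{method} {url}".encode("utf-8")).hexdigest()


class Worker:
    """One crawler process; several of these point at the same FakeSharedRedis."""

    def __init__(self, name: str, server: FakeSharedRedis):
        self.name = name
        self.server = server

    def schedule(self, url: str, method: str = "GET") -> bool:
        fp = fingerprint(method, url)
        # Real Redis can check-and-add atomically with SADD; this set is single-process only.
        if fp in self.server.seen:
            return False  # some worker already scheduled this request
        self.server.seen.add(fp)
        self.server.requests.append(url)
        return True

    def next_request(self):
        return self.server.requests.popleft() if self.server.requests else None


server = FakeSharedRedis()
a, b = Worker("A", server), Worker("B", server)
print(a.schedule("https://www.1905.com/vod/list/n_1_t_1/o3p2.html"))  # True
print(b.schedule("https://www.1905.com/vod/list/n_1_t_1/o3p2.html"))  # False: duplicate
print(b.next_request())  # B picks up the request that A scheduled
```

With `SCHEDULER_PERSIST = True`, the real queue and fingerprint set stay in Redis after the spider exits. Restarting the spider therefore continues where it stopped instead of starting over.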

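Running the project

Configure Redis

Open redis.windows.conf and change two lines:

Line 56: `#bind 127.0.0.1` (comment out the bind line so Redis listens on all interfaces, not only localhost)

Line 75: `protected-mode no` (let clients on other machines connect)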

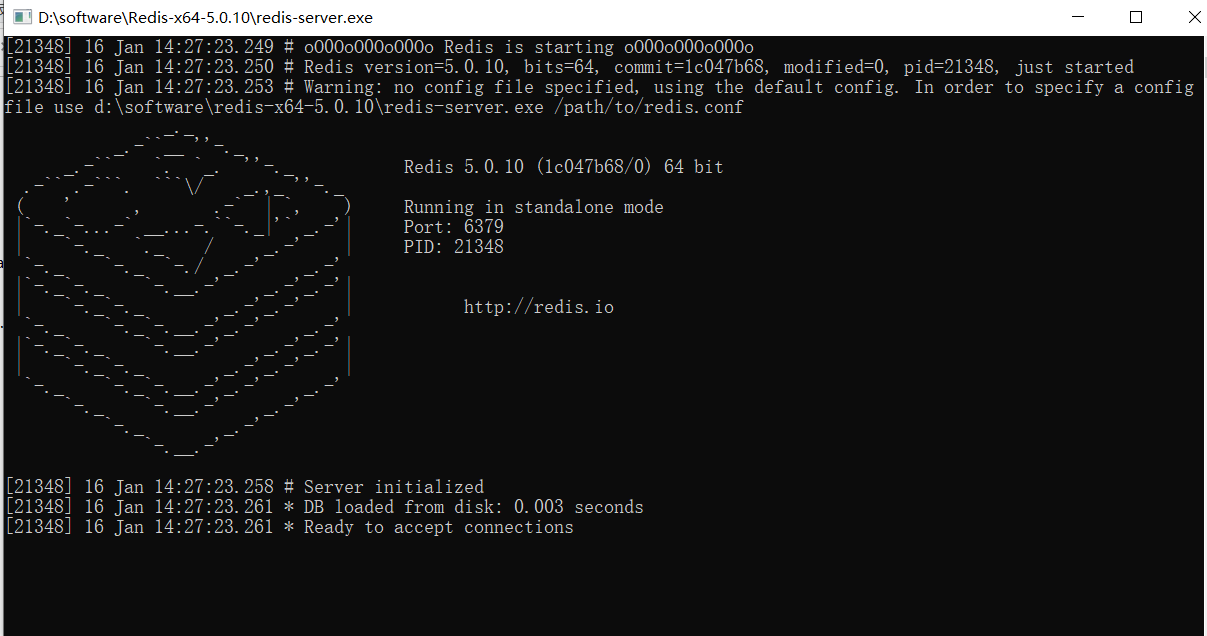
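To confirm the edited file really has the intended effect, you can list its active (non-commented) directives. The helper `active_directives` is a hypothetical convenience and is simplified: a repeated directive keeps only its last value. To check your own copy, pass it the contents of redis.windows.conf from the Redis directory.

```python
def active_directives(conf_text: str) -> dict:
    """Map each non-commented directive in a redis.conf-style file to its value.

    Simplification: a directive that appears twice keeps its last value.
    """
    directives = {}
    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments, including "#bind 127.0.0.1"
        parts = line.split(None, 1)
        directives[parts[0].lower()] = parts[1].strip() if len(parts) > 1 else ""
    return directives


sample = """
#bind 127.0.0.1
protected-mode no
port 6379
"""
d = active_directives(sample)
print("bind" not in d, d.get("protected-mode"))  # True no
```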

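Start Redis (from the Redis directory, `redis-server.exe redis.windows.conf`, so that the edited file is actually loaded)

Screenshot of a successful start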

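Run the project

In a terminal, from the project directory, run: `scrapy crawl fenbushi`. The spider starts and then waits for a start URL to appear in the `jianliQuene` key.

In cmd, change to the Redis directory.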

Then push the start URL onto the queue: `redis-cli.exe lpush jianliQuene https://www.1905.com/vod/list/n_1_t_1/o3.html?fr=vodhome_js_lx`
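`lpush` inserts the URL at the head of the Redis list `jianliQuene`. A waiting spider then removes it and starts crawling. The model below assumes scrapy-redis takes list entries from the head (LPOP), which is its default for list keys. Under that assumption, when several URLs are pushed with `lpush`, the most recently pushed one is consumed first. `FakeRedisList` is a hypothetical in-memory model, not a Redis client.

```python
from collections import deque


class FakeRedisList:
    """Hypothetical in-memory model of a Redis list key such as jianliQuene."""

    def __init__(self):
        self._items = deque()

    def lpush(self, *values):
        # Like LPUSH: values are inserted at the head one by one, left to right,
        # and the new length of the list is returned.
        for value in values:
            self._items.appendleft(value)
        return len(self._items)

    def lpop(self):
        # Like LPOP: remove and return the head element, or None if the key is empty.
        return self._items.popleft() if self._items else None


key = FakeRedisList()
key.lpush("https://www.1905.com/vod/list/n_1_t_1/o3.html?fr=vodhome_js_lx")
print(key.lpop())  # the URL the waiting spider would pick up
print(key.lpop())  # None: the key is empty again, so the spider keeps waiting
```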

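Finally, you can install RedisDesktopManager to inspect the stored data. RedisPipeline puts the items in a list under the key `<spider name>:items`.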
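You can also read the items back without a GUI. RedisPipeline stores each item as one JSON string in that list. The raw values can be fetched with a Redis client, for example redis-py's `lrange("<spider name>:items", 0, -1)`, which returns bytes. The sketch below only decodes such values. `titles_from_items` and the sample data are hypothetical, and no Redis connection is made here.

```python
import json
from typing import Iterable, List, Union


def titles_from_items(raw_items: Iterable[Union[bytes, str]]) -> List[str]:
    """Decode JSON-encoded items, e.g. the values returned by LRANGE <spider name>:items 0 -1."""
    titles = []
    for raw in raw_items:
        text = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        item = json.loads(text)
        if item.get("title"):
            titles.append(item["title"])
    return titles


# Hypothetical sample values in the shape RedisPipeline stores (one JSON object per item).
sample = [b'{"title": "Movie A"}', b'{"title": "Movie B"}']
print(titles_from_items(sample))  # ['Movie A', 'Movie B']
```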