(base) [root@pyspark sbin]# ls

slaves.sh start-all.sh start-mesos-shuffle-service.sh start-thriftserver.sh stop-mesos-dispatcher.sh stop-slaves.sh

spark-config.sh start-history-server.sh start-shuffle-service.sh stop-all.sh stop-mesos-shuffle-service.sh stop-thriftserver.

spark-daemon.sh start-master.sh start-slave.sh stop-history-server.sh stop-shuffle-service.sh

spark-daemons.sh start-mesos-dispatcher.sh start-slaves.sh stop-master.sh stop-slave.sh

(base) [root@pyspark sbin]# ./start-all.sh

starting org.apache.spark.deploy.master.Master, logging to /root/spark-2.4.4/logs/spark-root-org.apache.spark.deploy.master.Master-1-pyspark.out

192.168.80.13: starting org.apache.spark.deploy.worker.Worker, logging to /root/spark-2.4.4/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-py

(base) [root@pyspark sbin]# jps

4388 Worker

3928 Main

2782 SparkSubmit

4302 Master

4462 Jps

(base) [root@pyspark bin]# ls

beeline find-spark-home.cmd pyspark2.cmd spark-class sparkR2.cmd spark-shell.cmd spark-submit Untitled1.ipynb

beeline.cmd load-spark-env.cmd pyspark.cmd spark-class2.cmd sparkR.cmd spark-sql spark-submit2.cmd Untitled.ipynb

docker-image-tool.sh load-spark-env.sh run-example spark-class.cmd spark-shell spark-sql2.cmd spark-submit.cmd

find-spark-home pyspark run-example.cmd sparkR spark-shell2.cmd spark-sql.cmd startspark.sh

(base) [root@pyspark bin]# ./spark-shell --master spark://192.168.80.13:7077 --executor-memory 500m

20/04/16 11:24:08 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

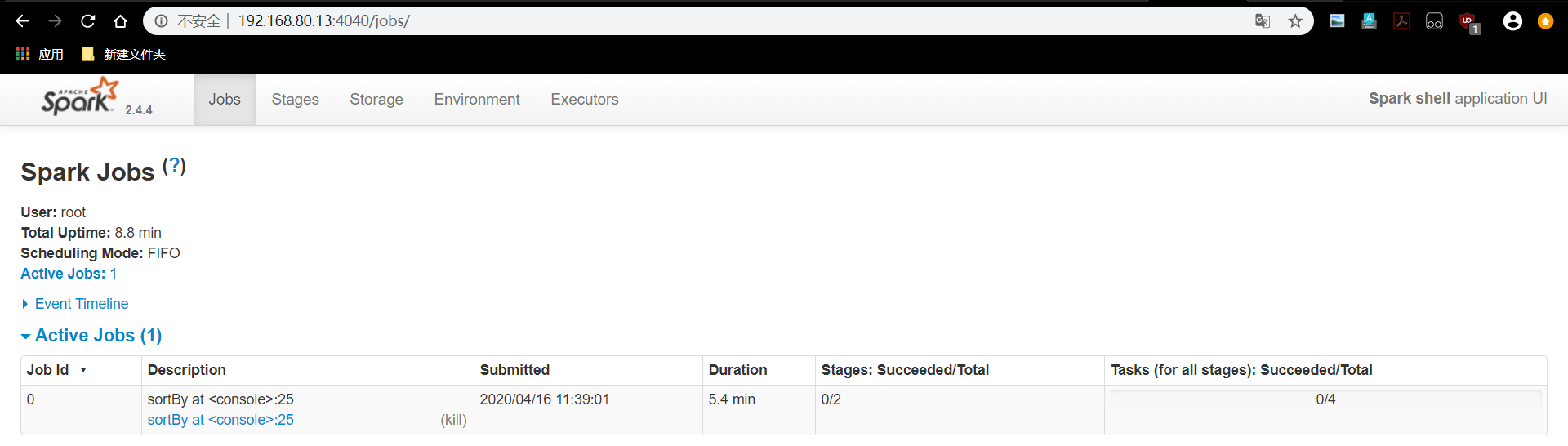

Spark context Web UI available at http://pyspark:4040

Spark context available as 'sc' (master = spark://192.168.80.13:7077, app id = app-20200416112425-0001).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_ / _ / _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_ version 2.4.4

/_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_144)

Type in expressions to have them evaluated.

Type :help for more information.

scala> sc.textFile("/root/spark-2.4.4/README.md").flatMap(_.split(" ")).map((_,1)).reduceByKey(_+_).collect

res0: Array[(String, Int)] = Array((package,1), (this,1), (integration,1), (Python,2), (page](http://spark.apache.org/documentation.html).,1), (cluster.,1), (its,1), ([run,1), (There,1), (general,3), (have,1), (pre-built,1), (Because,1), (YARN,,1), (locally,2), (changed,1), (locally.,1), (sc.parallelize(1,1), (only,1), (several,1), (This,2), (basic,1), (Configuration,1), (learning,,1), (documentation,3), (first,1), (graph,1), (Hive,2), (info,1), (["Specifying,1), ("yarn",1), ([params]`.,1), ([project,1), (prefer,1), (SparkPi,2), (<http://spark.apache.org/>,1), (engine,1), (version,1), (file,1), (documentation,,1), (MASTER,1), (example,3), (["Parallel,1), (are,1), (params,1), (scala>,1), (DataFrames,,1), (provides,1), (refer,2), (configure,1), (Interactive,2), (R,,1), (can,7), (build,4),...