前言

本文介绍在k8s集群中使用node-exporter、prometheus、grafana对集群进行监控。

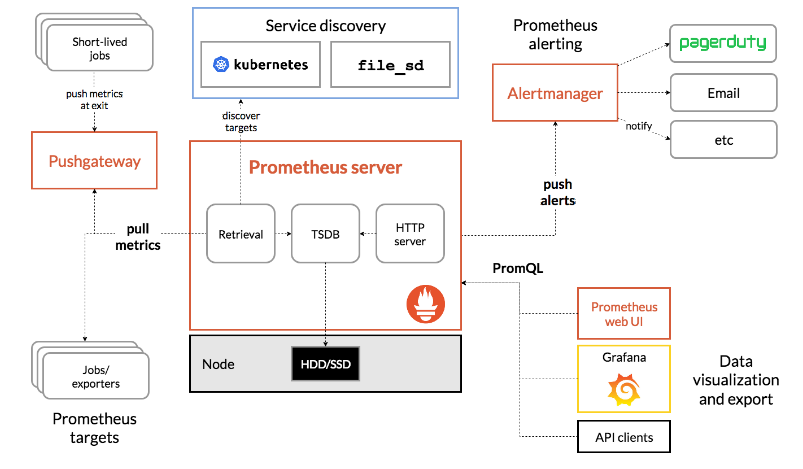

其实现原理有点类似ELK、EFK组合。node-exporter组件负责收集节点上的metrics监控数据,并将数据推送给prometheus, prometheus负责存储这些数据,grafana将这些数据通过网页以图形的形式展现给用户。

在开始之前有必要了解下Prometheus是什么?

Prometheus (中文名:普罗米修斯)是由 SoundCloud 开发的开源监控报警系统和时序列数据库(TSDB).自2012年起,许多公司及组织已经采用 Prometheus,并且该项目有着非常活跃的开发者和用户社区.现在已经成为一个独立的开源项目。Prometheus 在2016加入 CNCF ( Cloud Native Computing Foundation ), 作为在 kubernetes 之后的第二个由基金会主持的项目。 Prometheus 的实现参考了Google内部的监控实现,与源自Google的Kubernetes结合起来非常合适。另外相比influxdb的方案,性能更加突出,而且还内置了报警功能。它针对大规模的集群环境设计了拉取式的数据采集方式,只需要在应用里面实现一个metrics接口,然后把这个接口告诉Prometheus就可以完成数据采集了,下图为prometheus的架构图。

Prometheus的特点:

1、多维数据模型(时序列数据由metric名和一组key/value组成)

2、在多维度上灵活的查询语言(PromQl)

3、不依赖分布式存储,单主节点工作.

4、通过基于HTTP的pull方式采集时序数据

5、可以通过中间网关进行时序列数据推送(pushing)

6、目标服务器可以通过发现服务或者静态配置实现

7、多种可视化和仪表盘支持

prometheus 相关组件,Prometheus生态系统由多个组件组成,其中许多是可选的:

1、Prometheus 主服务,用来抓取和存储时序数据

2、client library 用来构造应用或 exporter 代码 (go,java,python,ruby)

3、push 网关可用来支持短连接任务

4、可视化的dashboard (两种选择,promdash 和 grafana.目前主流选择是 grafana.)

4、一些特殊需求的数据出口(用于HAProxy, StatsD, Graphite等服务)

5、实验性的报警管理端(alartmanager,单独进行报警汇总,分发,屏蔽等 )

promethues 的各个组件基本都是用 golang 编写,对编译和部署十分友好.并且没有特殊依赖.基本都是独立工作。

部署

现在我们正式开始部署工作。这里假设你已经为你的K8S集群部署过kube-dns或者coredns了。

一、环境介绍

操作系统环境:centos linux 7.5 64bit

K8S软件版本: 1.12.3

Master节点IP: 10.40.0.151/24

Node01节点IP: 10.40.0.152/24

Node02节点IP: 10.40.0.153/24

二、采用daemonset方式部署node-exporter组件

cat node-exporter.yaml apiVersion: extensions/v1beta1 kind: DaemonSet metadata: name: node-exporter namespace: kube-system labels: k8s-app: node-exporter spec: template: metadata: labels: k8s-app: node-exporter spec: containers: - image: prom/node-exporter name: node-exporter ports: - containerPort: 9100 protocol: TCP name: http --- apiVersion: v1 kind: Service metadata: labels: k8s-app: node-exporter name: node-exporter namespace: kube-system spec: ports: - name: http port: 9100 nodePort: 31672 protocol: TCP type: NodePort selector: k8s-app: node-exporter

三、部署prometheus组件

1、rbac文件

cat rbac-setup.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: name: prometheus rules: - apiGroups: [""] resources: - nodes - nodes/proxy - services - endpoints - pods verbs: ["get", "list", "watch"] - apiGroups: - extensions resources: - ingresses verbs: ["get", "list", "watch"] - nonResourceURLs: ["/metrics"] verbs: ["get"] --- apiVersion: v1 kind: ServiceAccount metadata: name: prometheus namespace: kube-system --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: prometheus roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: prometheus subjects: - kind: ServiceAccount name: prometheus namespace: kube-system

2、以configmap的形式管理prometheus组件的配置文件

cat configmap.yaml apiVersion: v1 kind: ConfigMap metadata: name: prometheus-config namespace: kube-system data: prometheus.yml: | global: scrape_interval: 15s evaluation_interval: 15s scrape_configs: - job_name: 'kubernetes-apiservers' kubernetes_sd_configs: - role: endpoints scheme: https tls_config: ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token relabel_configs: - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name] action: keep regex: default;kubernetes;https - job_name: 'kubernetes-nodes' kubernetes_sd_configs: - role: node scheme: https tls_config: ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token relabel_configs: - action: labelmap regex: __meta_kubernetes_node_label_(.+) - target_label: __address__ replacement: kubernetes.default.svc:443 - source_labels: [__meta_kubernetes_node_name] regex: (.+) target_label: __metrics_path__ replacement: /api/v1/nodes/${1}/proxy/metrics - job_name: 'kubernetes-cadvisor' kubernetes_sd_configs: - role: node scheme: https tls_config: ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token relabel_configs: - action: labelmap regex: __meta_kubernetes_node_label_(.+) - target_label: __address__ replacement: kubernetes.default.svc:443 - source_labels: [__meta_kubernetes_node_name] regex: (.+) target_label: __metrics_path__ replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor - job_name: 'kubernetes-service-endpoints' kubernetes_sd_configs: - role: endpoints relabel_configs: - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape] action: keep regex: true - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme] action: replace target_label: __scheme__ regex: (https?) - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path] action: replace target_label: __metrics_path__ regex: (.+) - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port] action: replace target_label: __address__ regex: ([^:]+)(?::d+)?;(d+) replacement: $1:$2 - action: labelmap regex: __meta_kubernetes_service_label_(.+) - source_labels: [__meta_kubernetes_namespace] action: replace target_label: kubernetes_namespace - source_labels: [__meta_kubernetes_service_name] action: replace target_label: kubernetes_name - job_name: 'kubernetes-services' kubernetes_sd_configs: - role: service metrics_path: /probe params: module: [http_2xx] relabel_configs: - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe] action: keep regex: true - source_labels: [__address__] target_label: __param_target - target_label: __address__ replacement: blackbox-exporter.example.com:9115 - source_labels: [__param_target] target_label: instance - action: labelmap regex: __meta_kubernetes_service_label_(.+) - source_labels: [__meta_kubernetes_namespace] target_label: kubernetes_namespace - source_labels: [__meta_kubernetes_service_name] target_label: kubernetes_name - job_name: 'kubernetes-ingresses' kubernetes_sd_configs: - role: ingress relabel_configs: - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe] action: keep regex: true - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path] regex: (.+);(.+);(.+) replacement: ${1}://${2}${3} target_label: __param_target - target_label: __address__ replacement: blackbox-exporter.example.com:9115 - source_labels: [__param_target] target_label: instance - action: labelmap regex: __meta_kubernetes_ingress_label_(.+) - source_labels: [__meta_kubernetes_namespace] target_label: kubernetes_namespace - source_labels: [__meta_kubernetes_ingress_name] target_label: kubernetes_name - job_name: 'kubernetes-pods' kubernetes_sd_configs: - role: pod relabel_configs: - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape] action: keep regex: true - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path] action: replace target_label: __metrics_path__ regex: (.+) - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port] action: replace regex: ([^:]+)(?::d+)?;(d+) replacement: $1:$2 target_label: __address__ - action: labelmap regex: __meta_kubernetes_pod_label_(.+) - source_labels: [__meta_kubernetes_namespace] action: replace target_label: kubernetes_namespace - source_labels: [__meta_kubernetes_pod_name] action: replace target_label: kubernetes_pod_name

3、Prometheus deployment 文件

cat prometheus.yaml apiVersion: apps/v1beta2 kind: Deployment metadata: labels: name: prometheus-deployment name: prometheus namespace: kube-system spec: replicas: 1 selector: matchLabels: app: prometheus template: metadata: labels: app: prometheus spec: containers: - image: prom/prometheus:v2.0.0 name: prometheus command: - "/bin/prometheus" args: - "--config.file=/etc/prometheus/prometheus.yml" - "--storage.tsdb.path=/prometheus" - "--storage.tsdb.retention=24h" ports: - containerPort: 9090 protocol: TCP volumeMounts: - mountPath: "/prometheus" name: data - mountPath: "/etc/prometheus" name: config-volume resources: requests: cpu: 100m memory: 100Mi limits: cpu: 500m memory: 2500Mi serviceAccountName: prometheus volumes: - name: data emptyDir: {} - name: config-volume configMap: name: prometheus-config --- kind: Service apiVersion: v1 metadata: labels: app: prometheus name: prometheus namespace: kube-system spec: type: NodePort ports: - port: 9090 targetPort: 9090 nodePort: 30003 selector: app: prometheus

4、通过上述yaml文件创建相应的对象

kubectl create -f node-exporter.yaml kubectl create -f rbac-setup.yaml kubectl create -f configmap.yaml kubectl create -f promethues.yaml

5、查看相关pod和service

# kubectl get pods -n kube-system NAME READY STATUS RESTARTS AGE coredns-779dfc4d59-rtpmk 1/1 Running 0 48s kubernetes-dashboard-b54f75c69-tnn4h 1/1 Running 0 90m node-exporter-sflqg 1/1 Running 0 9m44s node-exporter-xfsf8 1/1 Running 0 9m44s prometheus-58dc44f44c-z86rv 1/1 Running 0 8m44s

# kubectl get svc -n kube-system NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE kube-dns ClusterIP 10.250.0.2 <none> 53/UDP,53/TCP 117s kubernetes-dashboard NodePort 10.250.1.89 <none> 443:38443/TCP 102m node-exporter NodePort 10.250.0.165 <none> 9100:31672/TCP 10m prometheus NodePort 10.250.0.53 <none> 9090:30003/TCP 9m53s

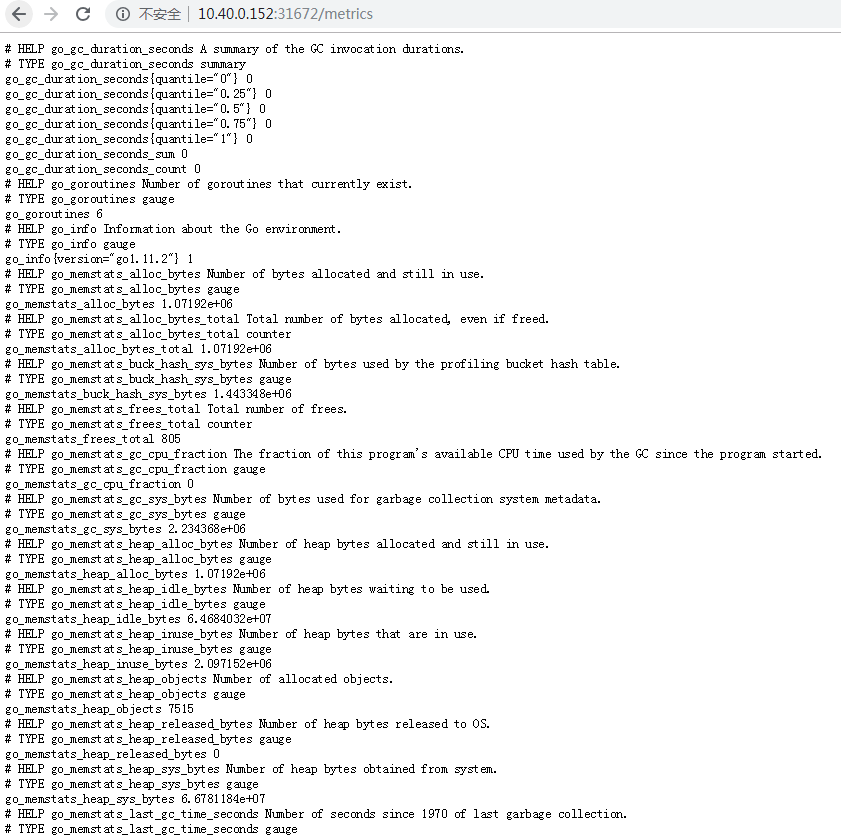

6、Node-exporter对应的nodeport端口为31672,通过访问http://10.40.0.152:31672/metrics 可以看到对应的metrics

7、prometheus对应的nodeport端口为30003,通过访问http://10.40.0.152:30003/targets 可以看到prometheus已经成功连接上了k8s的apiserver

8、在prometheus的WEB界面上提供了基本的查询K8S集群中每个POD的CPU使用情况,可以使用如下查询条件查询:

sum by (pod_name)( rate(container_cpu_usage_seconds_total{image!="", pod_name!=""}[1m] ) )

上述的查询有出现数据,说明node-exporter往prometheus中写入数据正常,接下来我们就可以部署grafana组件,实现更友好的webui展示数据了。

五、部署grafana组件

1、grafana deployment配置文件

cat grafana.yaml

apiVersion: extensions/v1beta1 kind: Deployment metadata: name: grafana-core namespace: kube-system labels: app: grafana component: core spec: replicas: 1 template: metadata: labels: app: grafana component: core spec: containers: - image: grafana/grafana:5.0.0 name: grafana-core imagePullPolicy: IfNotPresent resources: limits: cpu: 100m memory: 100Mi requests: cpu: 100m memory: 100Mi env: - name: GF_AUTH_BASIC_ENABLED value: "true" - name: GF_AUTH_ANONYMOUS_ENABLED value: "false" readinessProbe: httpGet: path: /login port: 3000 volumeMounts: - name: grafana-persistent-storage mountPath: /var volumes: - name: grafana-persistent-storage emptyDir: {} --- apiVersion: v1 kind: Service metadata: name: grafana namespace: kube-system labels: app: grafana component: core spec: type: NodePort ports: - port: 3000 nodePort: 31000 selector: app: grafana

部署grafana

kubectl create -f grafana.yaml

查看grafana pod和service

# kubectl get pod -n kube-system NAME READY STATUS RESTARTS AGE coredns-779dfc4d59-rtpmk 1/1 Running 0 101m grafana-core-6759c8945-5f4sv 1/1 Running 0 91m kubernetes-dashboard-b54f75c69-tnn4h 1/1 Running 0 3h11m node-exporter-sflqg 1/1 Running 0 110m node-exporter-xfsf8 1/1 Running 0 110m prometheus-58dc44f44c-z86rv 1/1 Running 0 109m

# kubectl get svc -n kube-system NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE grafana NodePort 10.250.1.230 <none> 3000:31000/TCP 93m kube-dns ClusterIP 10.250.0.2 <none> 53/UDP,53/TCP 103m kubernetes-dashboard NodePort 10.250.1.89 <none> 443:38443/TCP 3h23m node-exporter NodePort 10.250.0.165 <none> 9100:31672/TCP 112m prometheus NodePort 10.250.0.53 <none> 9090:30003/TCP 111m

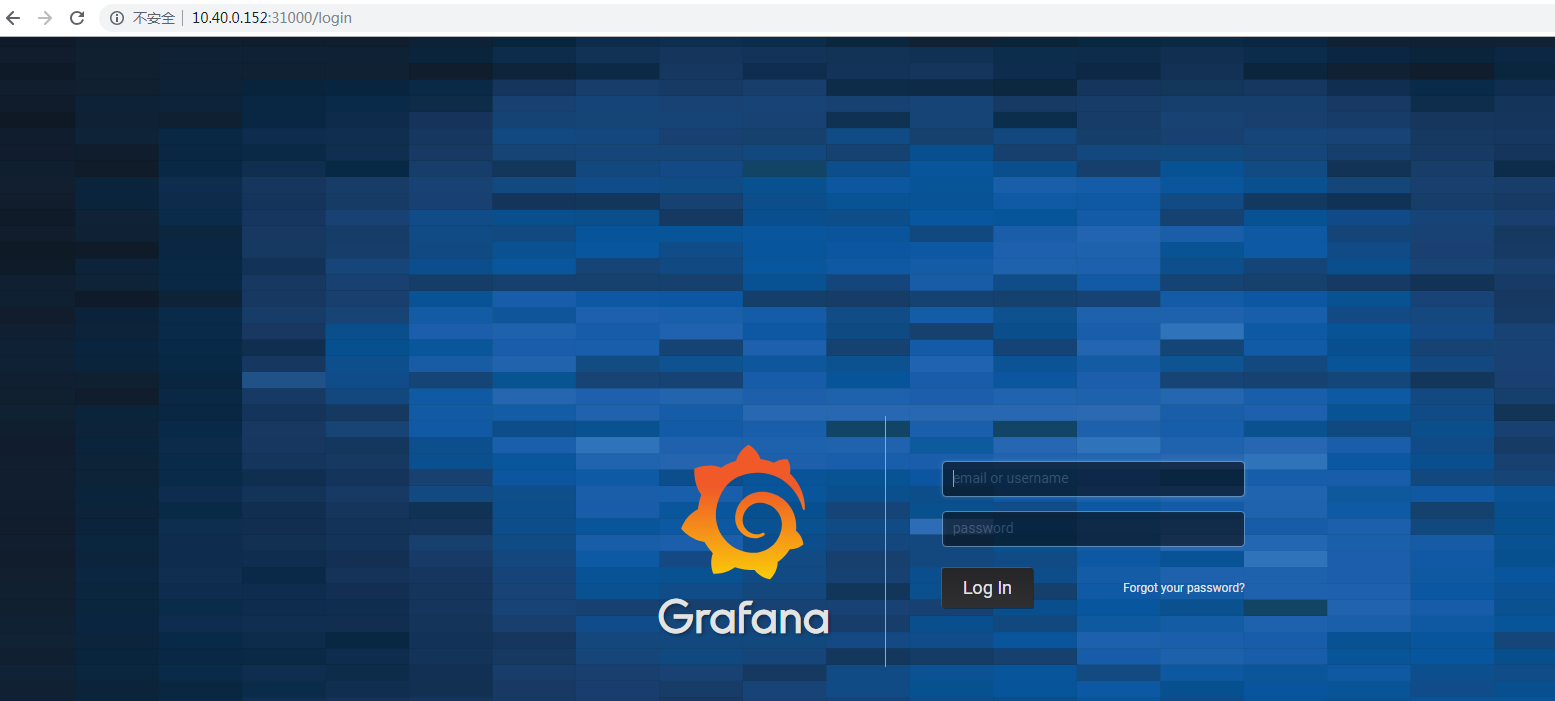

可以看到grafana nodeport端口为31000,可使用nodeip+nodeport的方式访问grafana http://10.40.0.152:31000

默认用户名和密码都是admin

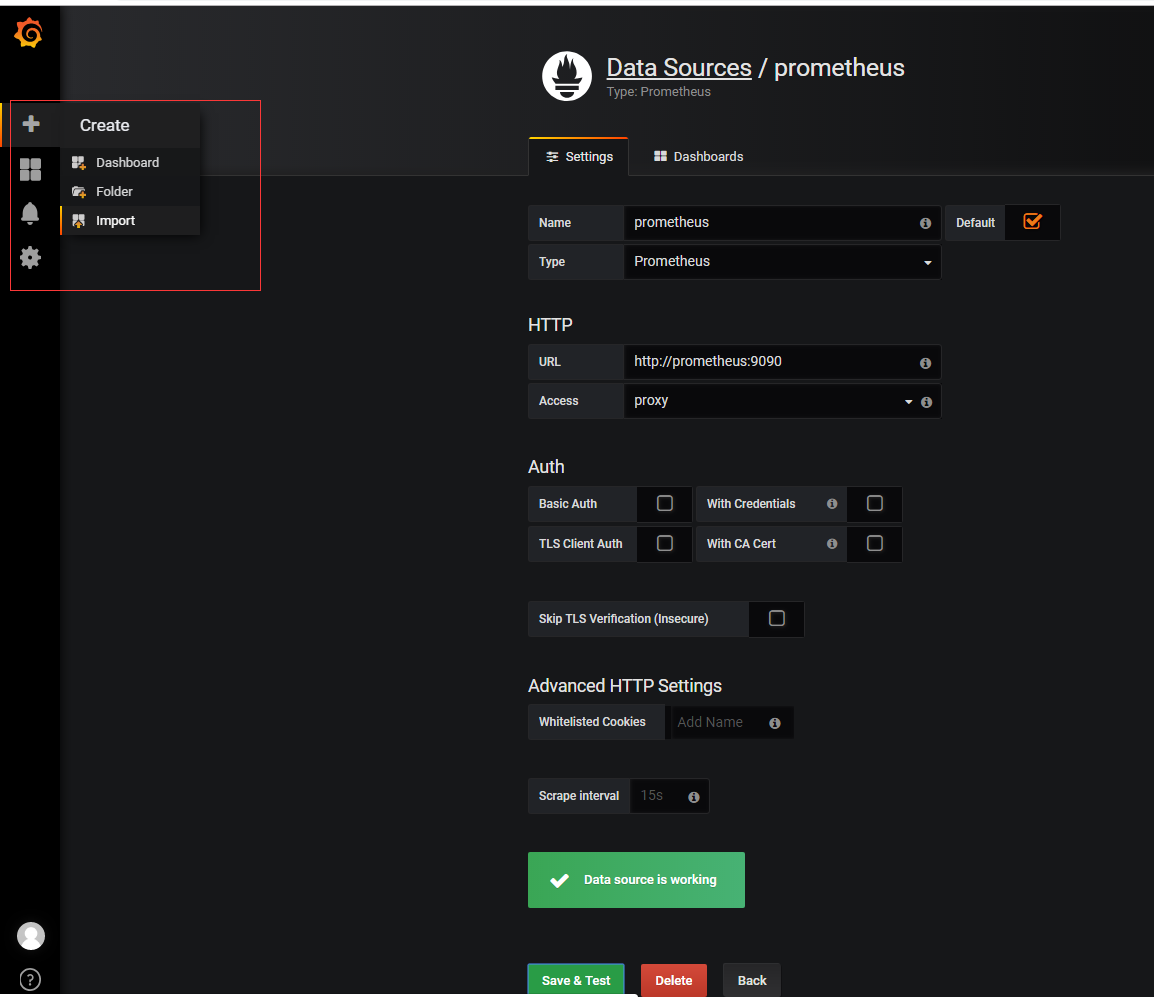

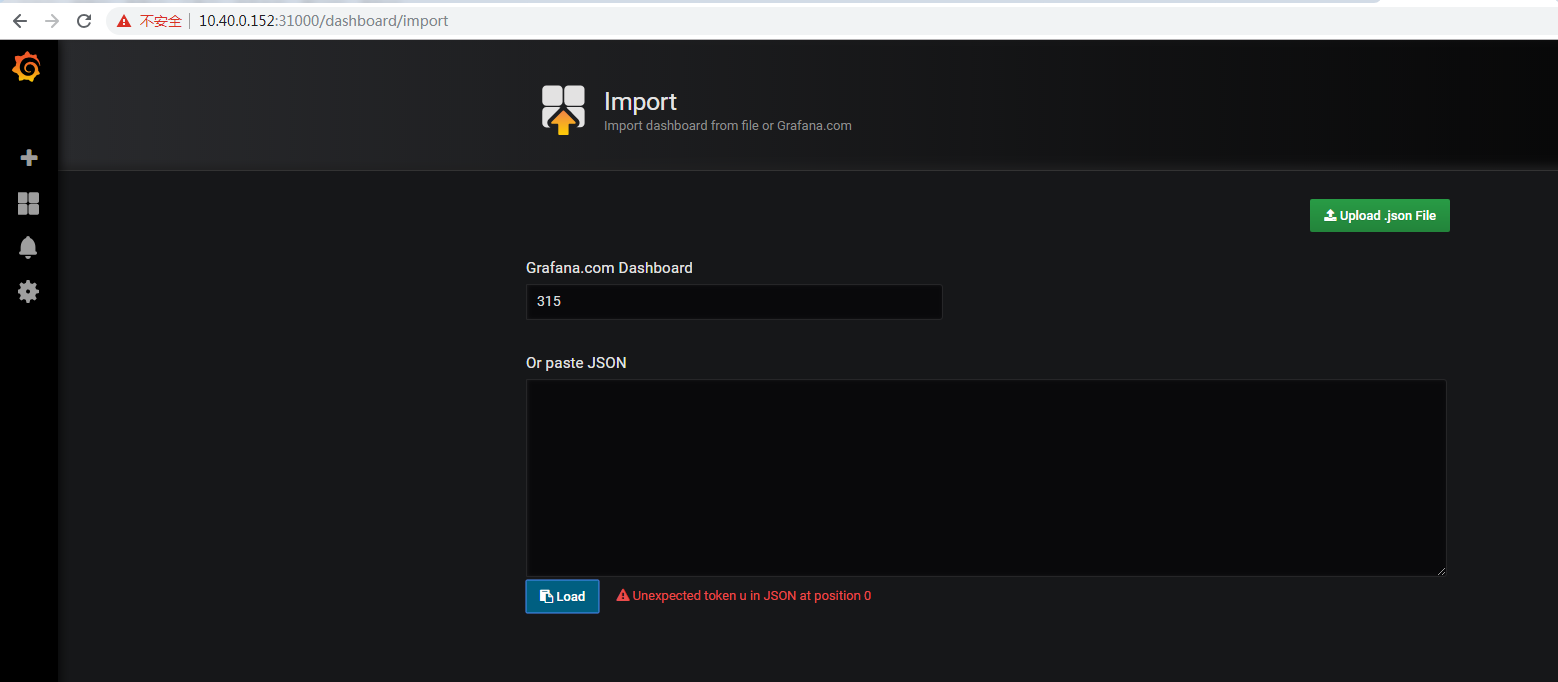

配置数据库源为prometheus,导入面板

可以直接输入模板编号315在线导入,或者下载好对应的json模板文件本地导入,面板模板下载地址https://grafana.com/dashboards/315

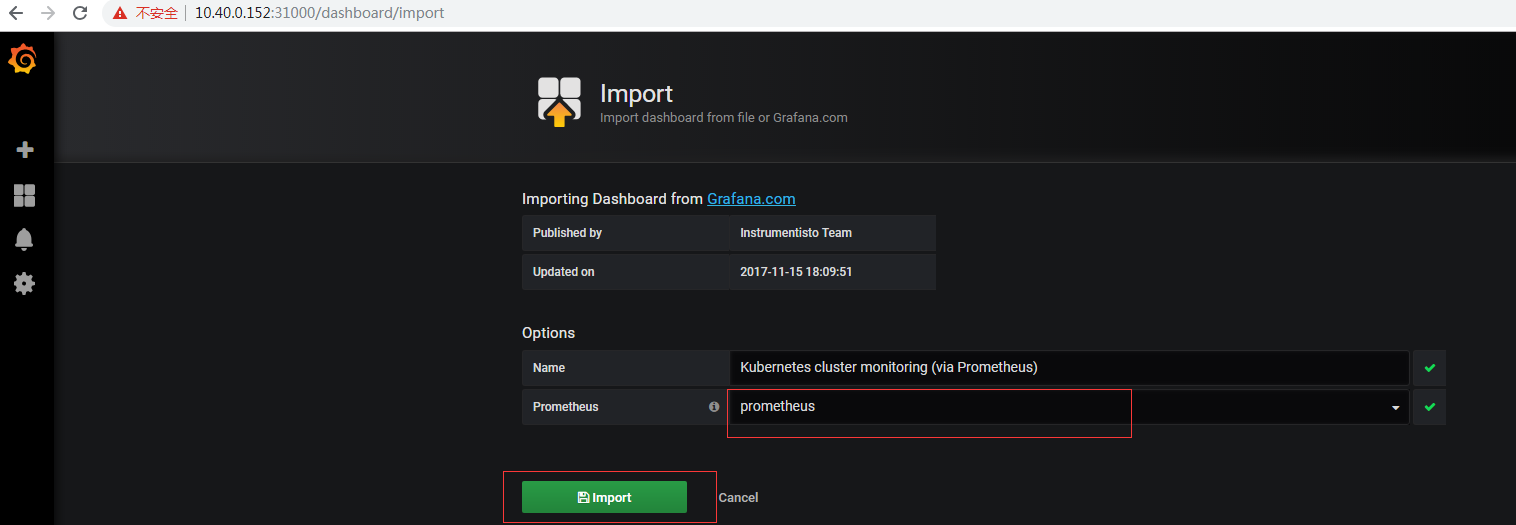

在线加载模板OK,选择prometheus数据库实例

大功告成,可以看到炫酷的监控页面了。