I. Introduction to Controllers

A controller manages pods. In production, pods are almost always managed through controllers rather than created directly.

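1.1 Types of controllers

- ReplicaSet: a replica set; scales pods out and in
- Deployment: controls pod upgrades and rollbacks; a Deployment manages ReplicaSets
- StatefulSet: deploys stateful applications
- DaemonSet: runs a copy of a pod on every node in the cluster (including masters, provided their taints are tolerated), e.g. Filebeat, or node_exporter (the Prometheus exporter that collects host metrics)
- Job: one-off tasks
- CronJob: periodic tasks

Note: ReplicationController (RC) is the predecessor of ReplicaSet and is no longer recommended. Use a Deployment instead, which creates and manages ReplicaSets for you.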

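1.2 Stateful vs. stateless applications

A stateless application does not keep client data from one session for use in the next. Every session is handled as if it were the first, without relying on earlier data.

A simple example is a stateless server such as a plain web server: each HTTP request is unrelated to previous ones, and the server just returns the requested URI resource. Once the content has been delivered, the connection closes without leaving any trace.

A stateful application saves client data during a session and uses that data in the client's subsequent requests.

A simple example is a web server that requires a username and password and uses a cookie to track the login state. When responding, the server sends the client a cookie that records some server-side information; the client sends this cookie back with later requests, so the user does not have to enter their credentials every time.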

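II. Deployment

2.1 Overview

What a ReplicaSet does:

Controls the number of pod replicas in order to scale pods out and in.

What a Deployment does:

- Combines rollout, rolling upgrades, replica management, and rollback in one controller
- Contains and uses a ReplicaSet
- Is used for stateless applications, such as web microservices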

2.2 Creating a Deployment

1. View the help

```shell
kubectl create -h

# View the Deployment field documentation
kubectl explain deployment
```

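2. Creating a Deployment from the command line

1. Create a Deployment named nginx

```shell
# Simulate creating a Deployment without actually creating it
kubectl create deployment nginx --image=nginx:1.15-alpine --port=80 --replicas=2 --dry-run=client

# The previous command reported no errors, so create it for real
kubectl create deployment nginx --image=nginx:1.15-alpine --port=80 --replicas=2
```

Explanation:

- `--port=80`: the port the container exposes, similar to exposing a port in Docker
- `--replicas=2`: the number of replicas (default 1)
- `--dry-run=client`: test mode; the request is only simulated on the client and nothing is actually created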

2.验证

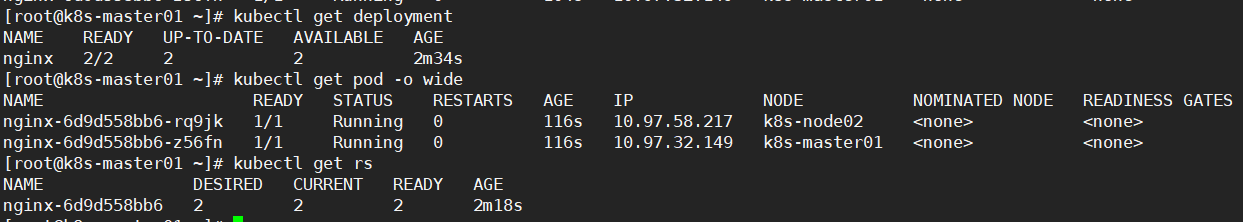

kubectl get deployment

kubectl get pod -o wide

此时nginx是deployment控制器名,replicas副本数为2,也就是说有两个pod,pod名是nginx-6d9d558bb6-xxxxx,每个pod中只有一个容器,

3.查看deployment和pod详情

kubectl describe deployment nginx

kubectl describe pod nginx-6d9d558bb6-xxxxx

4.删除deployment

kubectl delete deployment nginx

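3. Creating a Deployment from a YAML file

A controller created earlier can be exported to a YAML file (do this before deleting it):

```shell
kubectl get deployment nginx -o yaml > deployment.yml
```

1. Prepare the YAML file

A Deployment contains a ReplicaSet, whose settings (replica count, selector, pod template) are defined under `spec`.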

```shell
[root@k8s-master01 ~]# vim nginx-deployment.yml
```

```yaml
apiVersion: apps/v1          # check the version with: kubectl explain deployment
kind: Deployment
metadata:
  name: nginx                # Deployment name
  labels:
    app: nginx-dep           # label on the Deployment itself
# The Deployment uses a ReplicaSet, configured through spec
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-dep         # note: selects the pods labeled app=nginx-dep
  template:                  # the pod template
    metadata:
      name: nginx            # pod name; arbitrary
      labels:
        app: nginx-dep       # note: the pod label; must match matchLabels above
    spec:
      containers:
      - name: nginx          # container name; arbitrary, but unique within the pod
        image: nginx:1.15-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
```

Note that the `matchLabels` under `spec.selector` must match the pod labels defined in `template`; this is how the ReplicaSet and its pods are linked.
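Besides `matchLabels`, a selector can also use `matchExpressions` for set-based matching; when both are given, a pod must satisfy both. A minimal sketch of the relevant part of a Deployment spec, assuming the same `app: nginx-dep` pods; the `tier` label and its values are hypothetical and added only for illustration:

```yaml
# Fragment of a Deployment spec (illustrative only)
spec:
  selector:
    matchLabels:
      app: nginx-dep
    matchExpressions:            # ANDed with matchLabels
    - key: tier                  # hypothetical label
      operator: In               # other operators: NotIn, Exists, DoesNotExist
      values: ["frontend", "web"]
  template:
    metadata:
      labels:
        app: nginx-dep
        tier: frontend           # satisfies both matchLabels and matchExpressions
```

Keep in mind that in `apps/v1` a Deployment's selector cannot be changed after creation, so it is worth settling the labels before the first `kubectl apply`.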

2, 应用YAML文件创建deployment

kubectl apply -f nginx-deployment.yml

3, 查看验证

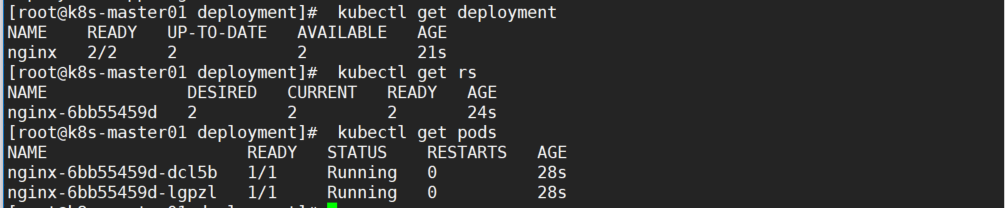

kubectl get deployment

kubectl get rs

kubectl get pods

Supplement: creating a ReplicaSet on its own with YAML

Note that `replicas` must be defined together with `template` (both under `spec`).

1. Write the YAML file

```shell
[root@k8s-master01 ~]# vim replicaset.yml
```

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: nginx-rs             # ReplicaSet name
  namespace: default
spec:
  replicas: 2                # number of replicas
  selector:                  # label selector; must match the pod labels below
    matchLabels:
      app: nginx             # label to match
  template:
    metadata:
      name: nginx-pod
      labels:
        app: nginx           # must match the selector above
    spec:
      containers:
      - name: nginx
        image: nginx:1.15-alpine
        ports:
        - name: http
          containerPort: 80
```

Note that the labels specified in the `selector` must match the labels in `template`; this is how the two are linked.

2, 应用YAML文件

kubectl apply -f replicaset.yml

3, 验证

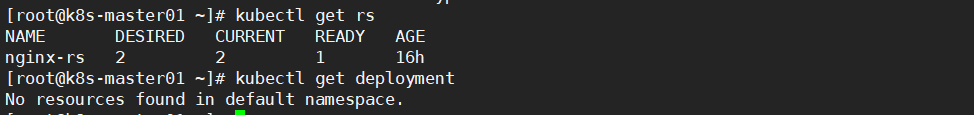

kubectl get rs

kubectl get pods

kubectl get deployment

找不到deployment,说明单独创建的rs并没有创建deployment

2.3 Deleting a Deployment

1. Delete the Deployment

Deleting a Deployment with either of the following commands also deletes its ReplicaSet and pods automatically:

```shell
kubectl delete deployment nginx
kubectl delete -f nginx-deployment.yml
```

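2. Delete a pod managed by the Deployment

Before deleting, recall what the ReplicaSet controller does.

1. Delete an nginx pod

```shell
kubectl get pod
kubectl delete pod nginx-7d9b8757cf-xxxx
```

2. Check again: a new pod has been started, and its IP address has changed

```shell
kubectl get pods -o wide
```

- The Deployment declares a replica count; whenever the number of pods does not match it, pods are started or removed until it does.
- Pod IPs are not fixed: when a pod is recreated (for example after a failure), it gets a new IP.
- To reach the pods through a fixed IP, you need a Service (covered later), which acts like a VIP.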
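A minimal sketch of such a Service for the nginx Deployment from nginx-deployment.yml. The Service name `nginx-dep` and port 8000 are assumptions, chosen to match the backend referenced by the Ingress examples in section VI; the selector matches the pod label `app: nginx-dep`:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-dep          # assumed name; used later as an Ingress backend
spec:
  type: ClusterIP          # a stable virtual IP reachable inside the cluster
  selector:
    app: nginx-dep         # traffic goes to pods carrying this label
  ports:
  - port: 8000             # port on the Service's cluster IP (assumed value)
    targetPort: 80         # containerPort of the nginx pods
    protocol: TCP
```

Roughly the same object can be created imperatively with `kubectl expose deployment nginx --name=nginx-dep --port=8000 --target-port=80`. Because the Service selects pods by label rather than by IP, it keeps working when pods are recreated with new IPs.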

2.4 Upgrading the pod version

View the help:

```shell
kubectl set image -h
```

1. Check the nginx version before upgrading

```shell
kubectl get pod
kubectl describe pod nginx-6bb55459d-j7bbg | grep Image:
kubectl exec nginx-6bb55459d-j7bbg -- nginx -v
```

2. Upgrade to 1.16

```shell
# First look up the container name in the pod
kubectl get pods nginx-6bb55459d-j7bbg -o jsonpath='{.spec.containers[*].name}'

# Upgrade the image version
kubectl set image deployment nginx nginx=nginx:1.16-alpine --record
```

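Explanation:

- `deployment nginx`: the Deployment named nginx
- `nginx=nginx:1.16-alpine`: the `nginx` before `=` is the container name
- `--record`: records the command in the revision history; without it, the rollback history shows no change cause (explained below)

Other ways to find the container name:

- `kubectl describe pod <pod name>`
- `kubectl edit deployment <deployment name>`
- `kubectl get deployment <deployment name> -o yaml`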

3. Verify

```shell
kubectl get pod    # the pod names have changed
kubectl describe pod nginx-684c89cf5c-2q9gq | grep Image:
kubectl exec nginx-684c89cf5c-2q9gq -- nginx -v

# Check the rollout status
kubectl rollout status deployment nginx
```
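`kubectl set image` triggers a rolling update, whose pace is governed by the Deployment's `strategy` field. A sketch of the relevant part of nginx-deployment.yml; the values are illustrative (both `maxSurge` and `maxUnavailable` default to 25%):

```yaml
# Fragment of the Deployment spec (illustrative values)
spec:
  replicas: 2
  revisionHistoryLimit: 10     # old ReplicaSets kept for rollback (default 10)
  strategy:
    type: RollingUpdate        # the default; the alternative is Recreate
    rollingUpdate:
      maxSurge: 1              # at most 1 pod above the desired count during the update
      maxUnavailable: 0        # never fall below the desired count of ready pods
```

With `maxUnavailable: 0`, an old pod is removed only after a new one is ready, so the pod names change gradually rather than all at once. `revisionHistoryLimit` also bounds how far back `kubectl rollout undo` can go.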

2.5 pod版本回退

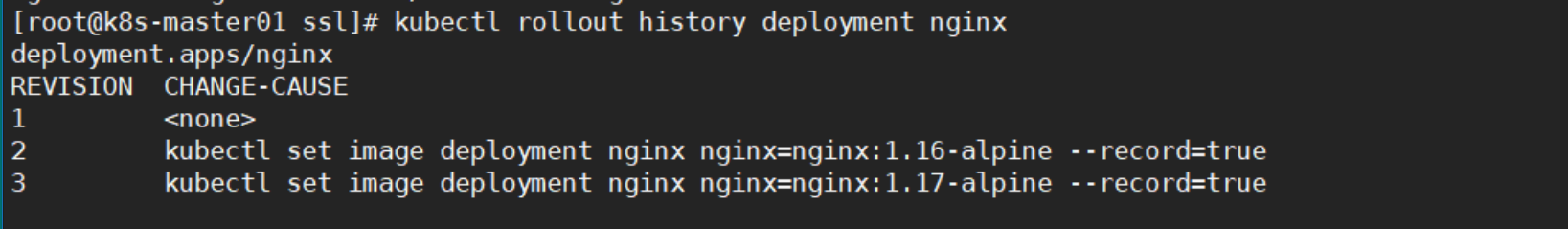

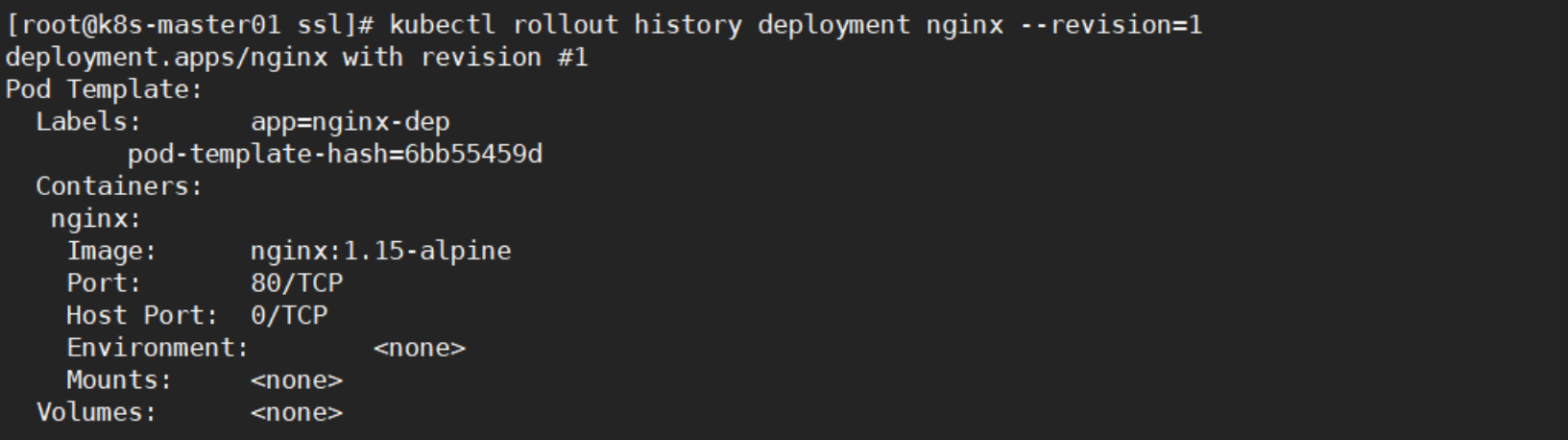

1, 查看版本历史信息

还记得版本升级用的--record选项么,对,这个就是记录你升级的版本信息的

kubectl rollout history deployment nginx

如果没有加--record,就会显示为--record

2, 定义要回退的版本

#--revision=1,这里的1指的前面的版本序号,会显示这个版本的信息

kubectl rollout history deployment nginx --revision=1

3, 执行回退(执行后才是真的回退)

kubectl rollout undo deployment nginx --to-revision=1

4, 验证

kubectl rollout history deployment nginx

kubectl get pods

kubectl describe pod nginx-6bb55459d-6nfgr |grep Image:
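Because `--record` is marked deprecated in newer kubectl releases, the CHANGE-CAUSE column can instead be filled by setting the `kubernetes.io/change-cause` annotation on the Deployment, either in the manifest or with `kubectl annotate deployment nginx kubernetes.io/change-cause="..."`. A sketch of the metadata part of nginx-deployment.yml; the message text is only an example:

```yaml
# metadata fragment of nginx-deployment.yml; the rest of the manifest is unchanged
metadata:
  name: nginx
  labels:
    app: nginx-dep
  annotations:
    # shown as CHANGE-CAUSE for the revision created by this change
    kubernetes.io/change-cause: "upgrade nginx to 1.16-alpine"
```

Update the annotation together with each image change; otherwise the next revision repeats the old message.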

2.6 Scaling out

View the help:

```shell
kubectl scale -h
```

1. Scale to 2 replicas

```shell
kubectl scale deployment nginx --replicas=2
```

2. Check

```shell
kubectl get pods -o wide
```

3. Scale out further

```shell
kubectl scale deployment nginx --replicas=4
kubectl get pods -o wide
```

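2.7 Scaling in

1. Scale in by setting the replica count to 1

```shell
kubectl scale deployment nginx --replicas=1
```

2. Verify

```shell
kubectl get pods -o wide
kubectl get pods --no-headers | wc -l   # count the pods (without the header line)
```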

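III. DaemonSet

3.1 Overview

- A DaemonSet ensures that all (or selected) nodes run a copy of the same pod. When a new node joins the cluster, the DaemonSet automatically schedules the pod onto it; when a node is removed from the cluster, the pod the DaemonSet placed there is removed too.
- Deleting a DaemonSet deletes all pods associated with it.
- If a DaemonSet pod is killed, stopped, or crashes, the DaemonSet creates a new replica on that node.
- DaemonSets are typically used for log collection, monitoring agents, distributed storage daemons, and similar workloads.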

3.2 Creating a DaemonSet

1. Write the YAML file

```shell
[root@k8s-master01 ~]# vim nginx-daemonset.yml
```

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx-daemonset
spec:
  selector:
    matchLabels:             # DaemonSet label selector; must match the pod labels below
      name: nginx-test
  template:
    metadata:
      labels:
        name: nginx-test     # pod label; must match the selector
    spec:
      tolerations:           # taints this pod tolerates
      # taint key to tolerate; must match the taint actually set on the master
      # (check with: kubectl describe node k8s-master01 | grep Taints)
      - key: node-role.kubernetes.io/k8s-master01
        effect: NoSchedule   # taint effect to tolerate; see kubectl explain pod.spec.tolerations
      containers:
      - name: nginx
        image: nginx:1.15-alpine
        imagePullPolicy: IfNotPresent
        resources:           # resource limits
          limits:
            memory: 100Mi
          requests:
            memory: 100Mi
```

2, apply应用YAML文件

kubectl apply -f nginx-daemonset.yml

3, 验证

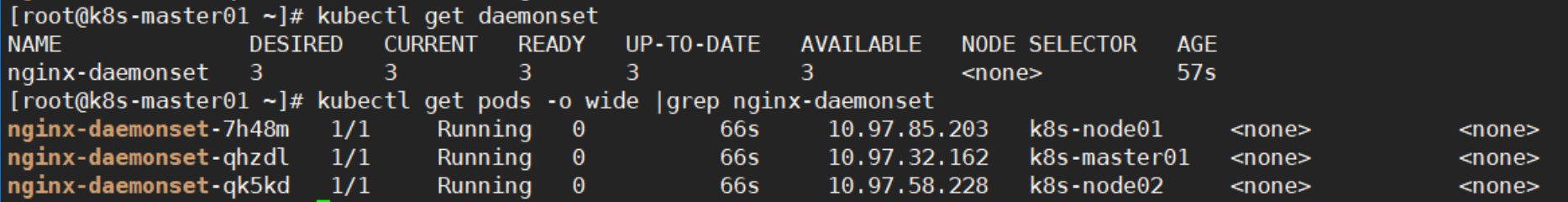

kubectl get daemonset

kubectl get pods |grep nginx-daemonset

可以看到daemonset在每个节点都创建了pod
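The toleration key must match the taint actually present on the master. Clusters of this era installed with kubeadm typically taint masters with `node-role.kubernetes.io/master`, newer releases use `node-role.kubernetes.io/control-plane`, and a binary installation like the one here may have no taint or a custom one. A sketch of a tolerations block that covers both standard keys; which key your cluster uses is an assumption to verify with `kubectl describe node`:

```yaml
# Pod template fragment (spec.template.spec) for the DaemonSet above
tolerations:
- key: node-role.kubernetes.io/master          # kubeadm taint on older releases
  operator: Exists                             # match regardless of the taint's value
  effect: NoSchedule
- key: node-role.kubernetes.io/control-plane   # taint key used by newer releases
  operator: Exists
  effect: NoSchedule
```

A toleration with only `operator: Exists` (no key and no effect) would tolerate every taint; the keyed form above is deliberately narrower.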

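IV. Job

4.1 Overview

- A ReplicaSet expects its pods to keep the desired count and run indefinitely; unless the user explicitly deletes them, these objects stay. It targets long-running workloads such as web services.
- For tasks that are not long-running, such as compressing files, the pod should terminate once the task completes and need not remain in the system. This is what a Job is for.
- A Job handles short-lived one-off tasks, i.e. tasks that run only once, and ensures that one or more pods of the batch task terminate successfully.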

4.2 Example 1: computing pi to 2000 digits

1. Write the YAML file

```shell
[root@k8s-master01 ~]# vim job.yml
```

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: pi                   # Job name
spec:
  template:
    metadata:
      name: pi               # pod name
    spec:
      containers:
      - name: pi             # container name
        # This image is over 800 MB: pre-load it on all nodes, or load it on
        # one node and schedule the pod onto that node
        image: perl
        imagePullPolicy: IfNotPresent
        command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]
      restartPolicy: Never   # do not restart after completion
```

2, 应用YAML文件创建job

kubectl apply -f job.yml

3, 验证

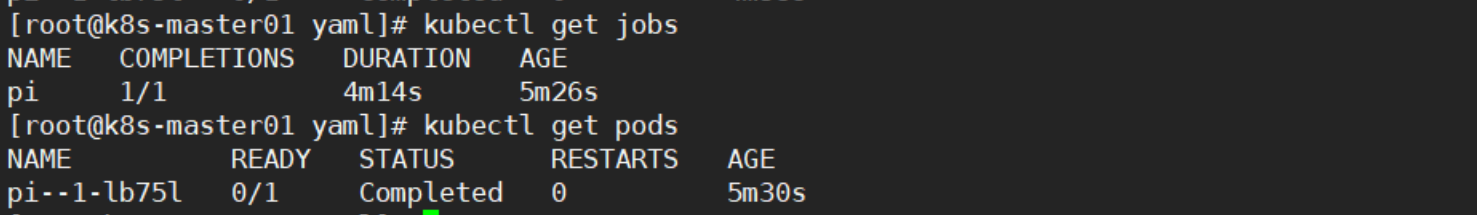

kubectl get jobs

kubectl get pods

kubectl logs pi--1-lb75l #查看计算结果,计算结果在日志中

kubectl logs pi--1-lb75l | wc -c #统计一共多少位

kubectl logs pi--1-lb75l | wc -L

STATUS显示为Completed表示计算能完成,此时才能查看计算结果
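A Job's retries and lifetime can be bounded with a few `spec` fields. A sketch based on the pi Job above; the numbers are illustrative, and `ttlSecondsAfterFinished` assumes the TTL-after-finished controller is enabled (on by default from Kubernetes 1.21, GA in 1.23):

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: pi
spec:
  backoffLimit: 4                # give up after 4 retries (default 6)
  activeDeadlineSeconds: 300     # fail the Job if it runs longer than 5 minutes
  ttlSecondsAfterFinished: 600   # delete the finished Job and its pods after 10 minutes
  template:
    spec:
      containers:
      - name: pi
        image: perl
        imagePullPolicy: IfNotPresent
        command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]
      restartPolicy: Never
```

Once the TTL expires, the pod and its log are gone, so read the result with `kubectl logs` before then.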

4.3 Example 2: a Job that runs a fixed number of times

1. Write the YAML file

```shell
[root@k8s-master01 ~]# vim job2.yml
```

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: busybox-job
spec:
  completions: 10            # number of times the Job must complete successfully
  parallelism: 1             # pods run concurrently: one at a time, ten in total
  template:
    metadata:
      name: busybox-job-pod
    spec:
      containers:
      - name: busybox
        image: busybox
        imagePullPolicy: IfNotPresent
        command: ["echo", "hello"]
      restartPolicy: Never
```

2. Apply the YAML file to create the Job

```shell
kubectl apply -f job2.yml
```

3. Verify

```shell
kubectl get job
kubectl get pods | grep busybox
kubectl logs busybox-job--1-4w6mz
```

The output shows that 10 pods were started, each printing hello.

V. CronJob

A CronJob is similar to crontab on Linux: it runs a task periodically on a given schedule, like a scheduled task.

1. Write the YAML file

```shell
[root@k8s-master01 ~]# vim cronjob.yml
```

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: cronjob1
spec:
  schedule: "* * * * *"      # minute hour day-of-month month day-of-week; all asterisks = every minute
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busybox
            args:
            - /bin/sh
            - -c
            - date; echo hello kubernetes
            imagePullPolicy: IfNotPresent
          restartPolicy: OnFailure   # restart only on failure
```

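2. Apply the YAML file to create the CronJob

```shell
kubectl apply -f cronjob.yml
```

3. Verify

```shell
kubectl get cronjob
kubectl get pods | grep cron   # the job runs every minute, so a new Completed pod appears each minute

# View the output
kubectl logs cronjob1-27288555--1-2bzzg
```

By default, only the three most recent successful Jobs (and their pods) are kept.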
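The number of finished Jobs a CronJob keeps is configurable: `successfulJobsHistoryLimit` defaults to 3 and `failedJobsHistoryLimit` to 1, which is why only three pods remain above. A sketch of these and related fields; the values and the every-5-minutes schedule are illustrative:

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: cronjob1
spec:
  schedule: "*/5 * * * *"          # every 5 minutes
  concurrencyPolicy: Forbid        # skip a run while the previous one is still running
  successfulJobsHistoryLimit: 3    # successful Jobs (and their pods) to keep
  failedJobsHistoryLimit: 1        # failed Jobs to keep
  startingDeadlineSeconds: 60      # skip a run that cannot start within 60 s of its scheduled time
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busybox
            imagePullPolicy: IfNotPresent
            args: ["/bin/sh", "-c", "date; echo hello kubernetes"]
          restartPolicy: OnFailure
```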

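VI. Ingress

6.1 Overview

The Service mentioned earlier provides layer-4 load balancing based on IP and port, whereas Ingress provides layer-7 load balancing and can route traffic by domain name.

Skipping the general introduction, let's go straight to installation. Two methods are covered here: deploying from a YAML file and using the Helm package manager.

Important: my cluster is a binary installation of Kubernetes v1.22.2, so use as recent an ingress-nginx version as possible, otherwise you will get errors (Kubernetes 1.22 removed the `networking.k8s.io/v1beta1` Ingress API that controllers older than v1.0.0 depend on). See the supported-versions table in the ingress-nginx README.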

6.2 Deploying Ingress with YAML

1. Create the ingress-control.yaml file


```shell
[root@k8s-master01 ~]# cat ingress-control.yaml
```

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
---
# Source: ingress-nginx/templates/controller-serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
automountServiceAccountToken: true
---
# Source: ingress-nginx/templates/controller-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
data:
---
# Source: ingress-nginx/templates/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
---
# Source: ingress-nginx/templates/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - configmaps
      - pods
      - secrets
      - endpoints
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - configmaps
    resourceNames:
      - ingress-controller-leader
    verbs:
      - get
      - update
  - apiGroups:
      - ''
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
---
# Source: ingress-nginx/templates/controller-rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-service-webhook.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  type: ClusterIP
  ports:
    - name: https-webhook
      port: 443
      targetPort: webhook
      appProtocol: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      hostNetwork: true
      # With hostNetwork: true, plain ClusterFirst falls back to the node's DNS;
      # ClusterFirstWithHostNet keeps cluster DNS (same setting as in the Helm section)
      dnsPolicy: ClusterFirstWithHostNet
      containers:
        - name: controller
          image: registry.cn-beijing.aliyuncs.com/kole_chang/controller:v1.0.0
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --controller-class=k8s.io/ingress-nginx
            - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
            - --watch-ingress-without-class=true
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: LD_PRELOAD
              value: /usr/local/lib/libmimalloc.so
          livenessProbe:
            failureThreshold: 5
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission
---
# Source: ingress-nginx/templates/controller-ingressclass.yaml
# We don't support namespaced ingressClass yet
# So a ClusterRole and a ClusterRoleBinding is required
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: nginx
  namespace: ingress-nginx
spec:
  controller: k8s.io/ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml
# before changing this value, check the required kubernetes version
# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  name: ingress-nginx-admission
webhooks:
  - name: validate.nginx.ingress.kubernetes.io
    matchPolicy: Equivalent
    rules:
      - apiGroups:
          - networking.k8s.io
        apiVersions:
          - v1
        operations:
          - CREATE
          - UPDATE
        resources:
          - ingresses
    failurePolicy: Fail
    sideEffects: None
    admissionReviewVersions:
      - v1
    clientConfig:
      service:
        namespace: ingress-nginx
        name: ingress-nginx-controller-admission
        path: /networking/v1/ingresses
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - admissionregistration.k8s.io
    resources:
      - validatingwebhookconfigurations
    verbs:
      - get
      - update
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - ''
    resources:
      - secrets
    verbs:
      - get
      - create
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-create
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
spec:
  template:
    metadata:
      name: ingress-nginx-admission-create
      labels:
        helm.sh/chart: ingress-nginx-4.0.1
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 1.0.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: create
          image: registry.cn-beijing.aliyuncs.com/kole_chang/kube-webhook-certgen:v1.0
          imagePullPolicy: IfNotPresent
          args:
            - create
            - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
            - --namespace=$(POD_NAMESPACE)
            - --secret-name=ingress-nginx-admission
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      nodeSelector:
        kubernetes.io/os: linux
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-patch
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
spec:
  template:
    metadata:
      name: ingress-nginx-admission-patch
      labels:
        helm.sh/chart: ingress-nginx-4.0.1
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 1.0.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: patch
          image: registry.cn-beijing.aliyuncs.com/kole_chang/kube-webhook-certgen:v1.0
          imagePullPolicy: IfNotPresent
          args:
            - patch
            - --webhook-name=ingress-nginx-admission
            - --namespace=$(POD_NAMESPACE)
            - --patch-mutating=false
            - --secret-name=ingress-nginx-admission
            - --patch-failure-policy=Fail
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      nodeSelector:
        kubernetes.io/os: linux
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000
```

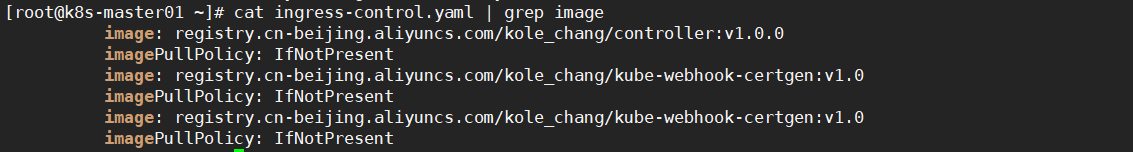

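Two images are used here. Because my cluster runs Kubernetes v1.22.2, use image versions v1.0 or later, otherwise you will get errors. Both images have been pushed to Alibaba Cloud and can be pulled from mainland China.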

2.应用yaml文件

kubectl apply -f ingress-control.yaml

3.查看

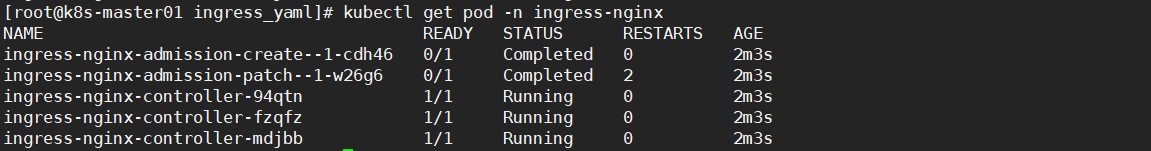

kubectl get pod -n ingress-nginx

状态为Completed的两个pod是job控制器部署的,用于检查配置环境的,如图所示是部署成功的状态

参考文章:yaml部署Ingress

6.3 Deploying Ingress with Helm

Helm is the package manager for Kubernetes. Let's use it to install Ingress.

1. Unpack Helm

```shell
tar -zxvf helm-v3.7.1-linux-amd64.tar.gz
mv linux-amd64/helm /usr/local/bin/helm
```

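2. Download the Ingress chart

```shell
# Add the ingress-nginx repository
helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

# List the added repositories
helm repo list

# Search for ingress-nginx versions; we simply install the latest
helm search hub ingress-nginx
helm search repo ingress-nginx

# Format: <repository name>/<chart name>
helm pull ingress-nginx/ingress-nginx

# Unpack the downloaded chart archive
tar xf ingress-nginx-4.0.10.tgz
cd ingress-nginx

# Several settings need to be changed here
vim values.yaml
```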

①修改镜像地址

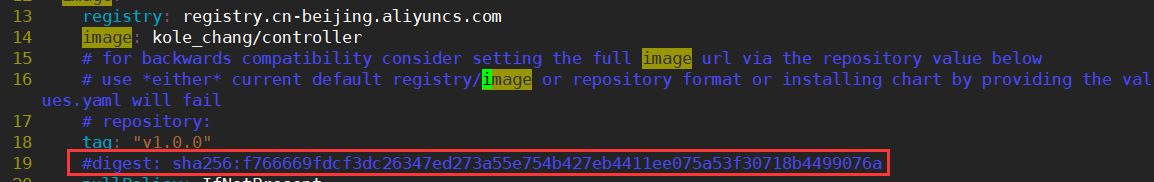

该values.yam会用到3个镜像,如果k8s是v1.22.2版本的,那么镜像版本一定要用v1.0及其以上的,修改这3个镜像地址为国内能访问的地址

在13、14行,18行,注释掉19行digest校验

registry: registry.cn-beijing.aliyuncs.com

image: kole_chang/controller

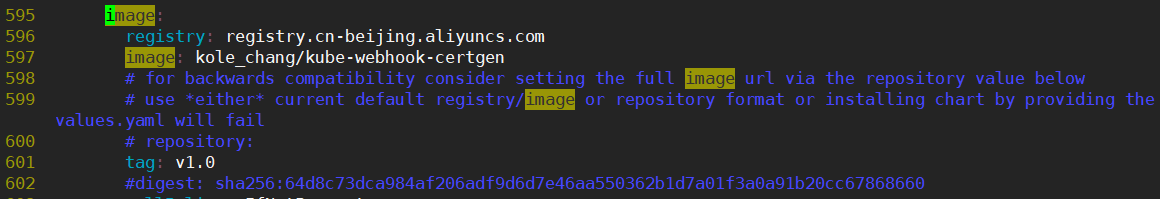

在596、597、601行,注释掉602行digest校验

registry: registry.cn-beijing.aliyuncs.com

image: kole_chang/kube-webhook-certgen

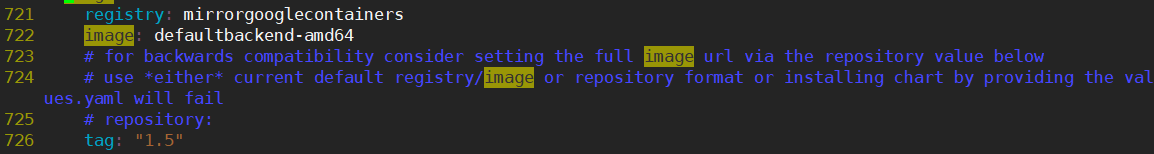

在721、722、726行,修改为如下图所示

registry: mirrorgooglecontainers

image: defaultbackend-amd64

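② Change parameters

Line 58: set the DNS policy to ClusterFirstWithHostNet

```yaml
dnsPolicy: ClusterFirstWithHostNet
```

Line 81: use host network mode

```yaml
hostNetwork: true
```

Line 183: run the controller as a DaemonSet

```yaml
kind: DaemonSet
```

Add a new line 283 under `nodeSelector` so that Ingress is installed only on nodes whose `ingress` label is `true`; indent it to line up with the previous line:

```yaml
ingress: "true"
```

Line 483: change the Service type to ClusterIP

```yaml
type: ClusterIP
```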
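Instead of editing values.yaml by line number (the numbers shift between chart versions), the same changes could be kept in a small override file passed to Helm with `-f`. A sketch assuming the value layout of the 4.0.x chart; `custom-values.yaml` is a hypothetical file name, the image tags are left at the chart defaults (the mirror must actually host those tags), and `digest: ""` is assumed to disable the digest check just as commenting it out does:

```yaml
# custom-values.yaml (hypothetical file name)
controller:
  image:
    registry: registry.cn-beijing.aliyuncs.com
    image: kole_chang/controller
    digest: ""                       # pull by tag only
  dnsPolicy: ClusterFirstWithHostNet
  hostNetwork: true
  kind: DaemonSet
  nodeSelector:
    kubernetes.io/os: linux
    ingress: "true"                  # only nodes labeled ingress=true
  service:
    type: ClusterIP
  admissionWebhooks:
    patch:
      image:
        registry: registry.cn-beijing.aliyuncs.com
        image: kole_chang/kube-webhook-certgen
        digest: ""
defaultBackend:
  image:
    registry: mirrorgooglecontainers
    image: defaultbackend-amd64
```

It would then be installed with `helm install ingress-nginx ingress-nginx/ingress-nginx -n ingress-nginx -f custom-values.yaml`, leaving the chart's own values.yaml untouched. Compare against `helm show values ingress-nginx/ingress-nginx` for your chart version before relying on these keys.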

3.安装Ingress

#创建命名空间

kubectl create ns ingress-nginx

#给master01添加标签,让他安装ingress

kubectl label node k8s-master01 ingress=true

kubectl get nodes -L ingress

#安装

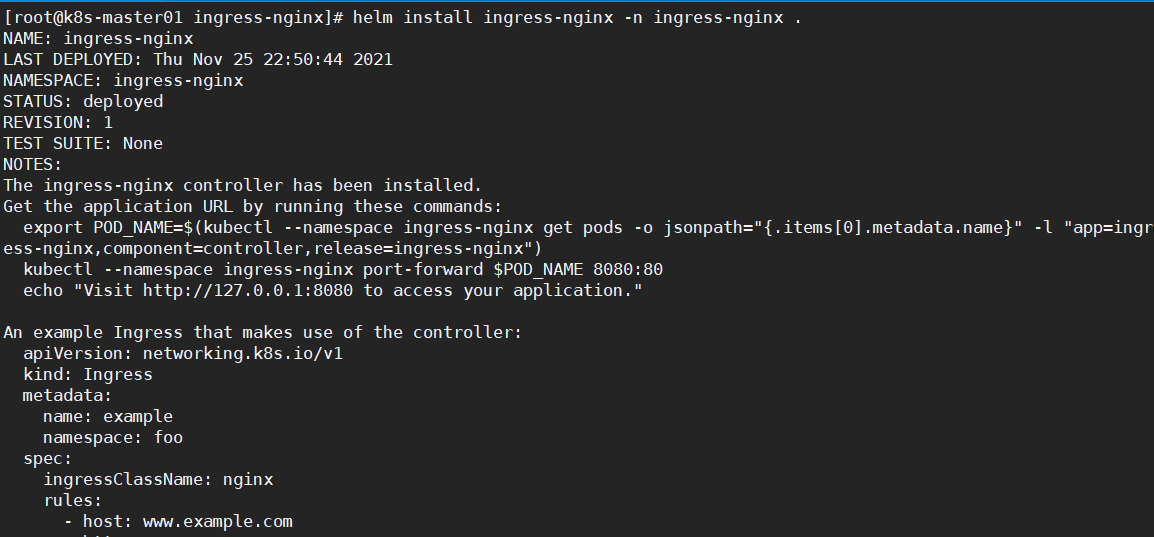

helm install ingress-nginx -n ingress-nginx .

#查看

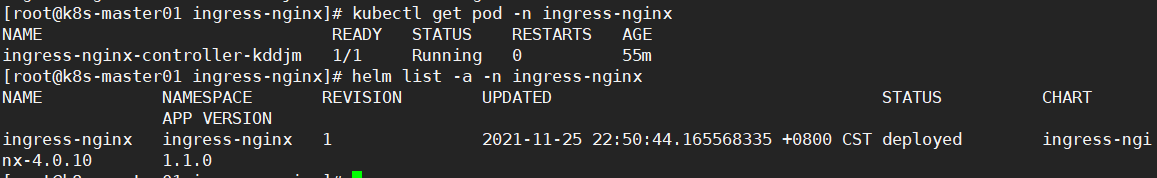

kubectl get pod -n ingress-nginx

helm list -a -ningress-nginx

#报错查看

kubectl describe pod -n ingress-nginx ingress-nginx-admission-patch--1-6zvvt

kubectl logs -n ingress-nginx ingress-nginx-admission-patch--1-6zvvt

#删除helm包

helm delete ingress-nginx -n ingress-nginx

因为我只在master01上打了标签,所以ingress-nginx只在master01上安装了

安装成功如下图所示


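6.4 Scaling Ingress out and in

As configured above, Ingress is installed on the nodes whose `ingress` label is `true`, so scaling out only requires labeling another node:

```shell
kubectl label node k8s-node01 ingress=true
kubectl get pod -n ingress-nginx -o wide
```

To scale in, remove the label:

```shell
kubectl label node k8s-master01 ingress-
```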

6.5 部署单域名Ingress

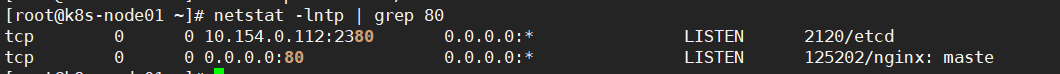

Ingress-nginx原理上还是使用的Nginx来实现负载均衡的,所以会在其部署的节点上开启80端口用于Nginx服务

#因为配置使用的是宿主机模式,所以会使用宿主机80端口用于nginx服务

netstat -lntp | grep 80

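1. Deploy an Ingress

The Ingress manifest format in newer versions (`networking.k8s.io/v1`) differs from older ones, so check which version your ingress-nginx supports.

An Ingress must be in the same namespace as the Service it references.

```shell
[root@k8s-master01 ~]# vim ingress-tz.yaml
```

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-tz
  annotations:
    # use the shared ingress-nginx
    # A cluster may run more than one ingress controller; this selects the nginx one
    # (corresponds to line 98 of values.yaml)
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: tzlinux.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-dep  # name of the Service to route to
            port:
              number: 8000   # port exposed by the Service
```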

If pasted text loses its indentation in vim, run `:set paste` in vim before pasting.
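In `networking.k8s.io/v1`, the `kubernetes.io/ingress.class` annotation is deprecated in favour of the `spec.ingressClassName` field, which names an IngressClass object, such as the `nginx` IngressClass created by the manifests in 6.2 (the Helm chart creates one by default as well). A sketch of the same Ingress using the field instead of the annotation:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-tz
spec:
  ingressClassName: nginx      # an IngressClass name (see: kubectl get ingressclass)
  rules:
  - host: tzlinux.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-dep
            port:
              number: 8000
```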

2.应用yaml文件

kubectl apply -f ingress-tz.yaml

kubectl get ingress

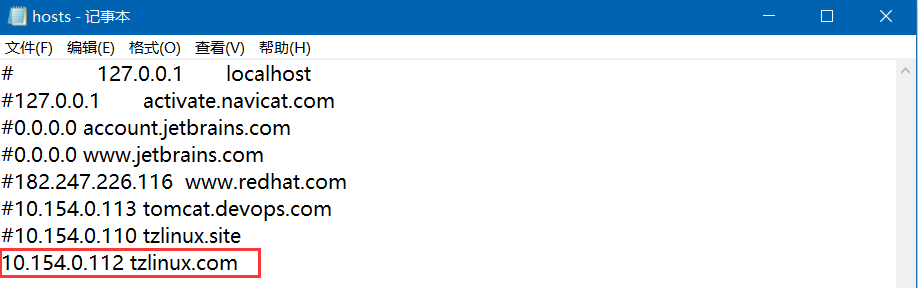

3.修改win主机hosts文件

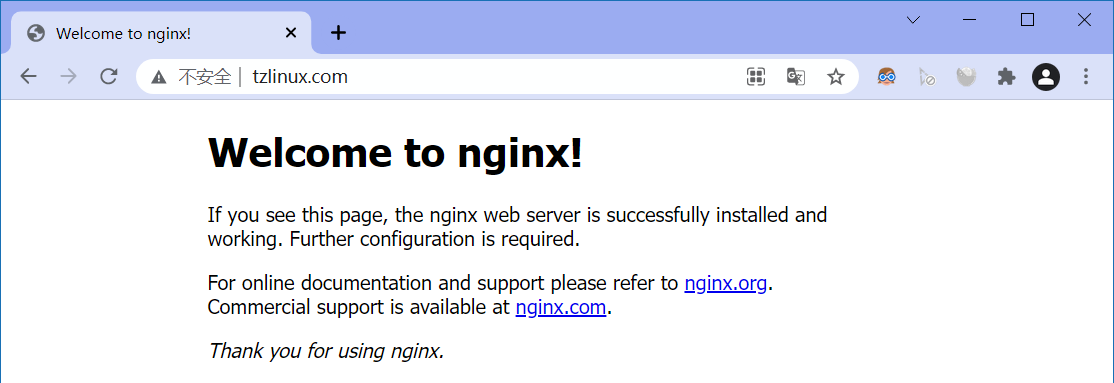

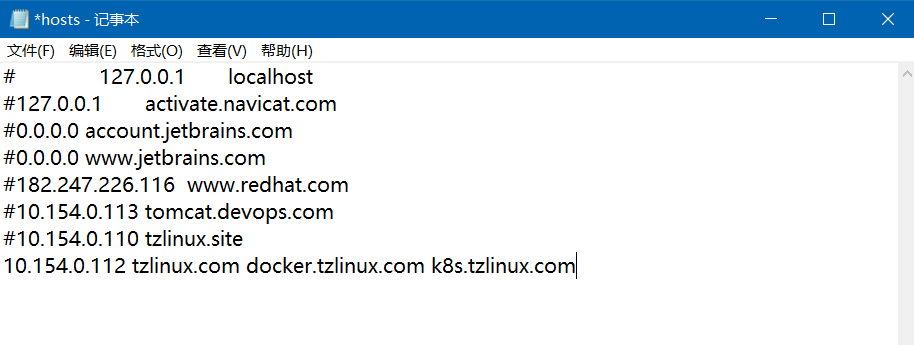

10.154.0.112是我安装了Ingress-nginx的那台k8s-node01节点,这里要注意,只有安装了Ingress-nginx的节点才可以使用负载均衡

4.访问tzlinux.com

进入ingress的pod查看会发现我们写的ingress-tz.yaml文件已经解析为nginx.conf的配置了

kubectl exec -it ingress-nginx-controller-rmcfn -n ingress-nginx -- sh

grep "tzlinux.com" nginx.conf -A 20 #查看后20行

6.6 Deploying a multi-domain Ingress

1. Write the YAML file

```shell
[root@k8s-master01 ~]# vim ingress-mulDomain.yaml
```

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-tz
  annotations:
    # use the shared ingress-nginx
    # A cluster may run more than one ingress controller; this selects the nginx one
    # (corresponds to line 98 of values.yaml)
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: docker.tzlinux.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-dep  # name of the Service to route to
            port:
              number: 8000   # port exposed by the Service
  - host: k8s.tzlinux.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-svc  # name of the Service to route to
            port:
              number: 8000   # port exposed by the Service
```

Because this manifest reuses the name `ingress-tz`, applying it replaces the single-domain Ingress from 6.5.

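2. Apply the YAML file

```shell
kubectl apply -f ingress-mulDomain.yaml

# After editing the YAML file, the object can also be updated with
kubectl replace -f ingress-mulDomain.yaml
```

3. Edit the hosts file

4. Access the sites from a browser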
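Besides routing by host name, one Ingress can also route different URL paths of the same host to different Services (fan-out). A sketch reusing the two Services from the multi-domain example; the Ingress name `ingress-fanout`, the host `app.tzlinux.com`, and the paths `/docker` and `/k8s` are hypothetical:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-fanout           # hypothetical name
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: app.tzlinux.com        # hypothetical host
    http:
      paths:
      - path: /docker            # app.tzlinux.com/docker... -> nginx-dep
        pathType: Prefix
        backend:
          service:
            name: nginx-dep
            port:
              number: 8000
      - path: /k8s               # app.tzlinux.com/k8s... -> nginx-svc
        pathType: Prefix
        backend:
          service:
            name: nginx-svc
            port:
              number: 8000
```

Without a rewrite rule, the request path reaches the backend unchanged (nginx receives `/docker/...`), so either the backend serves that path or a rewrite annotation such as `nginx.ingress.kubernetes.io/rewrite-target` is needed.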

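A minor error I ran into

```shell
[root@k8s-master01 ingress-nginx]# helm install ingress-nginx -n ingress-nginx .
Error: INSTALLATION FAILED: rendered manifests contain a resource that already exists. Unable to continue with install: ClusterRole "ingress-nginx" in namespace "" exists and cannot be imported into the current release: invalid ownership metadata; annotation validation error: missing key "meta.helm.sh/release-name": must be set to "ingress-nginx"; annotation validation error: missing key "meta.helm.sh/release-namespace": must be set to "ingress-nginx"
```

Solution

The conflicting ClusterRole is most likely left over from the YAML-based installation in 6.2. Find and delete it (ClusterRoles are cluster-scoped, so no namespace flag is needed). If that installation is still present, `kubectl delete -f ingress-control.yaml` removes all of its leftovers at once.

```shell
kubectl get clusterroles | grep ingress
kubectl delete clusterrole xxxx   # the name reported in the error, here ingress-nginx
```

Reference: https://help.aliyun.com/document_detail/279475.html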
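Deleting the leftover object is one fix. Since Helm 3.2, an existing resource can instead be adopted into a release if it carries the ownership metadata named in the error message. A sketch of that metadata for the conflicting ClusterRole, with the release name and namespace taken from the error; the remaining fields stay as they are:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/managed-by: Helm              # required for adoption (already present in 6.2's manifest)
  annotations:
    meta.helm.sh/release-name: ingress-nginx        # must equal the release name
    meta.helm.sh/release-namespace: ingress-nginx   # must equal the release namespace
# rules: unchanged
```

The same annotations can be added in place with `kubectl annotate clusterrole ingress-nginx meta.helm.sh/release-name=ingress-nginx meta.helm.sh/release-namespace=ingress-nginx`. Every other conflicting resource needs the same treatment, so for a full leftover installation, deleting it is usually simpler.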

VII. References

Heima (itheima) Linux k8s course, day 3 videos