If you have found your way to this post, you presumably already have some Spark background and hands-on experience.

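First, a quick recap of Spark's run modes and its two common deployments, standalone and YARN. I won't repeat the details here; see the following posts if you want to read more.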

2. Installing Spark in standalone mode (spark-1.6.1-bin-hadoop2.6.tgz) (master, slave1 and slave2)

3. Spark standalone: introduction and running wordcount (master, slave1 and slave2)

4. Installing Spark on YARN (spark-1.6.1-bin-hadoop2.6.tgz + hadoop-2.6.0.tar.gz) (master, slave1 and slave2) (recommended)

5. Spark on YARN: introduction and running wordcount (master, slave1 and slave2) (recommended)

Now for the main topic.

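My point is this: you do not need to modify Spark's own configuration file, spark-env.sh, at all. (I mean the common, fixed parameters, of course.) You can pass those parameters to spark-submit from a few small wrapper scripts that live alongside the application instead, as follows.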

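#!/bin/sh
# bin/common.sh: shared paths and settings, sourced by the other scripts
# Project root = parent of the directory holding the calling script
home=$(cd "$(dirname "$0")/.."; pwd)
bin_home=$home/bin
conf_home=$home/conf
logs_home=$home/logs
data_home=$home/data
lib_home=$home/lib

# Server configuration file, passed to the application as its argument
configFile=${conf_home}/my1.properties

spark_submit=/home/hadoop/spark/bin/spark-submit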
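Every path in common.sh is derived from `$0`. Because the other scripts load it with `.` (source), `$0` still names the calling script in bin/, so `cd ..` lands in the project root no matter which directory you launch from. The sketch below reproduces that resolution as a function so you can check it in isolation. It assumes the bin/, conf/, lib/, logs/ layout used above, and the name `resolve_home` is hypothetical.

```shell
# Hypothetical helper mirroring common.sh: given the path of a script that
# lives in <project>/bin, print the absolute path of <project>.
resolve_home() {
  # The inner subshell keeps the caller's working directory unchanged.
  (cd "$(dirname "$1")/.." && pwd)
}

# Usage sketch, equivalent to the first lines of common.sh:
#   home=$(resolve_home "$0")
#   conf_home=$home/conf
```

It works with both absolute and relative script paths, since `dirname` keeps whatever prefix was used to invoke the script.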

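#!/bin/sh
# Start script: launches the streaming job on YARN
# Usage: <script> [delck]   ("delck" also deletes the checkpoint directory)
deleteCheckpoint=$1

home=$(cd "$(dirname "$0")/.."; pwd)
. "${home}/bin/common.sh"

# Remove the stop marker left over from the previous shutdown
hdfs dfs -rm -r /spark-streaming/behavior/stop

# Optionally start from scratch by discarding the streaming checkpoint
# ("=" rather than "==": /bin/sh implementations such as dash reject "==")
if [ "$deleteCheckpoint" = "delck" ]; then
  hdfs dfs -rm -r /spark-streaming/checkpoint/behavior
fi

# Submit in YARN cluster mode; the trailing backslashes make this one command
${spark_submit} \
  --master yarn \
  --deploy-mode cluster \
  --name behavior \
  --driver-memory 512M \
  --executor-memory 512M \
  --class com.djt.spark.streaming.UserBehaviorStreaming \
  "${lib_home}/behavior-stream-jar-with-dependencies.jar" "${configFile}" \
  >> "${logs_home}/behavior.log" 2>&1 &

# Record the PID of the background spark-submit client
echo $! > "${logs_home}/behavior_stream.pid"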
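The start script saves the PID of the backgrounded spark-submit client in logs/behavior_stream.pid. In YARN cluster mode, this client by default stays alive until the application finishes (`spark.yarn.submit.waitAppCompletion` defaults to true), so that PID is a reasonable liveness signal. The sketch below shows a status check built on it. The function name `is_running` is hypothetical and is not part of the original scripts.

```shell
# Hypothetical status check for the PID file written by the start script.
# Prints "running <pid>" and returns 0 if the recorded process is alive,
# otherwise prints "stopped" and returns 1.
is_running() {
  pid_file=$1
  # No PID file: the job was never started from this directory.
  [ -f "$pid_file" ] || { echo "stopped"; return 1; }
  pid=$(cat "$pid_file")
  # kill -0 sends no signal; it only tests that the process exists
  # and that we are allowed to signal it.
  if [ -n "$pid" ] && kill -0 "$pid" 2>/dev/null; then
    echo "running $pid"
    return 0
  fi
  echo "stopped"
  return 1
}

# Usage sketch (after sourcing common.sh):
#   is_running "${logs_home}/behavior_stream.pid"
```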

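#!/bin/sh
# Stop script: creates the HDFS stop marker that the start script removes
# on launch (presumably polled by the streaming job to shut down gracefully)
home=$(cd "$(dirname "$0")/.."; pwd)
. "${home}/bin/common.sh"

hdfs dfs -mkdir -p /spark-streaming/behavior/stop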
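The stop script only creates the marker directory and returns at once. The streaming application (its code is not shown in this post) presumably notices the marker and shuts itself down. If you want the stop script to block until that has happened, you can poll the PID saved by the start script, as in the sketch below. The function `wait_for_exit` and the 120-second timeout in the usage line are hypothetical choices, not part of the original setup.

```shell
# Hypothetical helper: wait up to $2 seconds for the process whose PID is
# stored in file $1 to exit. Returns 0 once it is gone, 1 on timeout.
wait_for_exit() {
  pid=$(cat "$1" 2>/dev/null)
  # Nothing to wait for if the PID file is missing or empty.
  [ -n "$pid" ] || return 0
  waited=0
  # kill -0 only tests whether the process still exists.
  while kill -0 "$pid" 2>/dev/null; do
    if [ "$waited" -ge "$2" ]; then
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 0
}

# Usage sketch, after "hdfs dfs -mkdir -p /spark-streaming/behavior/stop":
#   wait_for_exit "${logs_home}/behavior_stream.pid" 120 || echo "job still running"
```

Polling the local client rather than YARN keeps the sketch free of extra tooling. The trade-off is that it only works on the machine where the start script ran.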

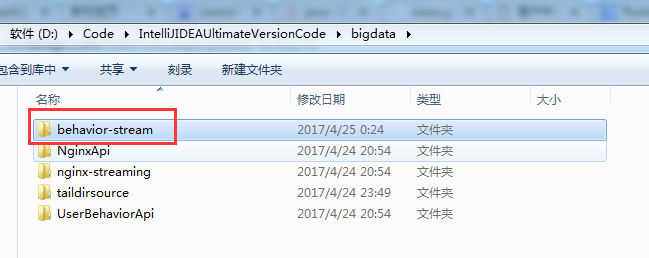

Then upload this locally prepared directory to the cluster as is.
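One way to move the directory is to archive it, copy the archive, and unpack it on the cluster node. Packing it with tar normally keeps the execute bits on the bin/ scripts. The sketch below covers the packaging step. The function name `package_project`, the directory name `behavior`, and the `hadoop@master:/home/hadoop/` target in the commented example are placeholders, not details from the original post.

```shell
# Hypothetical packaging step: archive the project directory (bin/, conf/,
# lib/, ... as laid out by common.sh) into <dir>.tar.gz next to it and
# print the archive path.
package_project() {
  dir=$(cd "$1" && pwd) || return 1
  name=$(basename "$dir")
  # -C stores paths relative to the parent, e.g. behavior/conf/my1.properties
  tar -czf "$dir.tar.gz" -C "$(dirname "$dir")" "$name"
  echo "$dir.tar.gz"
}

# Example transfer (host, user and paths are placeholders):
#   scp "$(package_project ./behavior)" hadoop@master:/home/hadoop/
#   ssh hadoop@master 'tar -xzf /home/hadoop/behavior.tar.gz -C /home/hadoop'
```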

The rest is straightforward, so I won't belabor it.
