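Hive official documentation: Home-UserDocumentation

Hive DDL official documentation: LanguageManual DDL

Reference article: Hive 用户指南 (Hive User Guide)

Note: pay attention to the Hive version in which each statement was introduced. Some statements were added after hive-1.2.1 and cannot be used in hive-1.2.1.

Note: this article covers only the commonly used features; see the official documentation for more details.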

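Common commands

```
select current_database();        # which database is currently in use
show databases;                   # list all databases
show tables;                      # list the tables in the current database
describe extended table_name;     # extended table info; similar to describe formatted table_name;
describe formatted table_name;    # recommended ★★★, e.g. describe formatted t_sz01;
                                  # shows the table info, including whether it is a MANAGED_TABLE or an EXTERNAL_TABLE
describe database test_db;        # show database info
show partitions t_sz03_part;      # show the partitions of a partitioned table
```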

Notes

The Hive commands in this article were run either in the Hive CLI or in beeline, so don't be surprised to see both command-prompt styles.

1. DDL - Database Operations

1.1. Create Database

```
CREATE (DATABASE|SCHEMA) [IF NOT EXISTS] database_name
  [COMMENT database_comment]
  [LOCATION hdfs_path]
  [WITH DBPROPERTIES (property_name=property_value, ...)];
```

Create a database

```
# Create a database
create database if not exists test_db
comment 'my frist db';

0: jdbc:hive2://mini01:10000> describe database test_db;   # database info
+----------+--------------+----------------------------------------------------+-------------+-------------+-------------+--+
| db_name  |   comment    |                      location                      | owner_name  | owner_type  | parameters  |
+----------+--------------+----------------------------------------------------+-------------+-------------+-------------+--+
| test_db  | my frist db  | hdfs://mini01:9000/user/hive/warehouse/test_db.db  | yun         | USER        |             |
+----------+--------------+----------------------------------------------------+-------------+-------------+-------------+--+
```

1.2. Drop Database

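```
DROP (DATABASE|SCHEMA) [IF EXISTS] database_name [RESTRICT|CASCADE];
# RESTRICT is the default: the database cannot be dropped while it still contains tables.
# With CASCADE, the database is dropped together with all of its tables.
```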

1.3. Use Database

```
USE database_name;   # switch to a database
USE DEFAULT;         # switch back to the default database
```

For example:

```
hive (default)> show databases;                # list the databases
OK
default
test001
test_db
zhang
Time taken: 0.016 seconds, Fetched: 4 row(s)
hive (default)> use test_db;                   # switch database
OK
Time taken: 0.027 seconds
hive (test_db)> select current_database();     # which database is in use
OK
test_db
Time taken: 1.232 seconds, Fetched: 1 row(s)
```

2. DDL - Table

2.1. Create Table

```
CREATE [TEMPORARY] [EXTERNAL] TABLE [IF NOT EXISTS] [db_name.]table_name
  [(col_name data_type [COMMENT col_comment], ... [constraint_specification])]
  [COMMENT table_comment]
  [PARTITIONED BY (col_name data_type [COMMENT col_comment], ...)]      -- PARTITIONED: partitioning
  [CLUSTERED BY (col_name, col_name, ...) [SORTED BY (col_name [ASC|DESC], ...)] INTO num_buckets BUCKETS]   -- CLUSTERED: clustering; BUCKETS: buckets
  [SKEWED BY (col_name, col_name, ...)
     ON ((col_value, col_value, ...), (col_value, col_value, ...), ...)
     [STORED AS DIRECTORIES]]
  [
   [ROW FORMAT row_format]
   [STORED AS file_format]
     | STORED BY 'storage.handler.class.name' [WITH SERDEPROPERTIES (...)]
  ]
  [LOCATION hdfs_path]
  [TBLPROPERTIES (property_name=property_value, ...)]                   -- TBLPROPERTIES: table properties
  [AS select_statement];   -- (Note: Available in Hive 0.5.0 and later; not supported for external tables)

CREATE [TEMPORARY] [EXTERNAL] TABLE [IF NOT EXISTS] [db_name.]table_name
  LIKE existing_table_or_view_name
  [LOCATION hdfs_path];

data_type
  : primitive_type
  | array_type
  | map_type
  | struct_type
  | union_type  -- (Note: Available in Hive 0.7.0 and later)

primitive_type
  : TINYINT
  | SMALLINT
  | INT
  | BIGINT
  | BOOLEAN
  | FLOAT
  | DOUBLE
  | DOUBLE PRECISION -- (Note: Available in Hive 2.2.0 and later)
  | STRING
  | BINARY      -- (Note: Available in Hive 0.8.0 and later)
  | TIMESTAMP   -- (Note: Available in Hive 0.8.0 and later)
  | DECIMAL     -- (Note: Available in Hive 0.11.0 and later)
  | DECIMAL(precision, scale)  -- (Note: Available in Hive 0.13.0 and later)
  | DATE        -- (Note: Available in Hive 0.12.0 and later)
  | VARCHAR     -- (Note: Available in Hive 0.12.0 and later)
  | CHAR        -- (Note: Available in Hive 0.13.0 and later)

array_type
  : ARRAY < data_type >

map_type
  : MAP < primitive_type, data_type >

struct_type
  : STRUCT < col_name : data_type [COMMENT col_comment], ...>

union_type
   : UNIONTYPE < data_type, data_type, ... >  -- (Note: Available in Hive 0.7.0 and later)

row_format
  : DELIMITED [FIELDS TERMINATED BY char [ESCAPED BY char]] [COLLECTION ITEMS TERMINATED BY char]
        [MAP KEYS TERMINATED BY char] [LINES TERMINATED BY char]
        [NULL DEFINED AS char]   -- (Note: Available in Hive 0.13 and later)
  | SERDE serde_name [WITH SERDEPROPERTIES (property_name=property_value, property_name=property_value, ...)]

file_format
  : SEQUENCEFILE
  | TEXTFILE    -- (Default, depending on hive.default.fileformat configuration)
  | RCFILE      -- (Note: Available in Hive 0.6.0 and later)
  | ORC         -- (Note: Available in Hive 0.11.0 and later)
  | PARQUET     -- (Note: Available in Hive 0.13.0 and later)
  | AVRO        -- (Note: Available in Hive 0.14.0 and later)
  | JSONFILE    -- (Note: Available in Hive 4.0.0 and later)
  | INPUTFORMAT input_format_classname OUTPUTFORMAT output_format_classname

constraint_specification
  : [, PRIMARY KEY (col_name, ...) DISABLE NOVALIDATE ]
    [, CONSTRAINT constraint_name FOREIGN KEY (col_name, ...) REFERENCES table_name(col_name, ...) DISABLE NOVALIDATE ]
```

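Note: CREATE TABLE fails with an error if a table or view with the same name already exists. Use IF NOT EXISTS to skip that error.

- Table names and column names are case-insensitive. SerDe names and property names are case-sensitive. SerDe is short for Serializer/Deserializer; Hive uses a SerDe to serialize and deserialize row objects.

- Table and column comments are string literals (single-quoted).

- A table created without the EXTERNAL clause is called a managed table, because Hive manages its data. To find out whether a table is managed or external, run DESCRIBE EXTENDED table_name and check tableType. You can also run describe formatted table_name; and check Table Type. [See Example 1]

- The TBLPROPERTIES clause lets you tag the table definition with your own metadata key/value pairs. Some predefined table properties also exist, such as last_modified_user and last_modified_time, which Hive adds and manages automatically. [See Example 1]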

```
# Example 1
0: jdbc:hive2://mini01:10000> create table t_sz05 (id int, name string) tblproperties ('key001'='value1', 'key200'='value200');   # create the table
No rows affected (0.224 seconds)
0: jdbc:hive2://mini01:10000> describe formatted t_sz05;   # show the table info
+-------------------------------+-------------------------------------------------------------+-----------------------+--+
|           col_name            |                          data_type                          |        comment        |
+-------------------------------+-------------------------------------------------------------+-----------------------+--+
| # col_name                    | data_type                                                   | comment               |
|                               | NULL                                                        | NULL                  |
| id                            | int                                                         |                       |
| name                          | string                                                      |                       |
|                               | NULL                                                        | NULL                  |
| # Detailed Table Information  | NULL                                                        | NULL                  |
| Database:                     | zhang                                                       | NULL                  |
| Owner:                        | yun                                                         | NULL                  |
| CreateTime:                   | Sat Jul 07 20:13:53 CST 2018                                | NULL                  |
| LastAccessTime:               | UNKNOWN                                                     | NULL                  |
| Protect Mode:                 | None                                                        | NULL                  |
| Retention:                    | 0                                                           | NULL                  |
| Location:                     | hdfs://mini01:9000/user/hive/warehouse/zhang.db/t_sz05      | NULL                  |
| Table Type:                   | MANAGED_TABLE  [# EXTERNAL_TABLE for an external table]     | NULL                  |
| Table Parameters:             | NULL                                                        | NULL                  |
|                               | key001  [# user-defined]                                    | value1                |
|                               | key200  [# user-defined]                                    | value200              |
|                               | transient_lastDdlTime                                       | 1530965633            |
|                               | NULL                                                        | NULL                  |
| # Storage Information         | NULL                                                        | NULL                  |
| SerDe Library:                | org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe          | NULL                  |
| InputFormat:                  | org.apache.hadoop.mapred.TextInputFormat                    | NULL                  |
| OutputFormat:                 | org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat  | NULL                  |
| Compressed:                   | No                                                          | NULL                  |
| Num Buckets:                  | -1                                                          | NULL                  |
| Bucket Columns:               | []                                                          | NULL                  |
| Sort Columns:                 | []                                                          | NULL                  |
| Storage Desc Params:          | NULL                                                        | NULL                  |
|                               | serialization.format                                        | 1                     |
+-------------------------------+-------------------------------------------------------------+-----------------------+--+
29 rows selected (0.153 seconds)
```

2.1.1. Managed Table

Create the table

```
# Use describe formatted table_name; to view the table info
hive (test_db)> create table t_sz01 (id int, name string comment 'person name')
comment 'a table of name'
row format delimited fields terminated by ',';
OK
Time taken: 0.311 seconds
hive (test_db)> show tables;
OK
t_sz01
Time taken: 0.031 seconds, Fetched: 1 row(s)
```

The output of desc formatted t_sz01; shows that the table's Location is hdfs://mini01:9000/user/hive/warehouse/test_db.db/t_sz01.
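The default location follows a simple convention. It starts from the warehouse root, which is the hive.metastore.warehouse.dir setting (here hdfs://mini01:9000/user/hive/warehouse). Then comes <database>.db, then the table name. The Python sketch below reproduces that convention with a hypothetical helper, default_table_location. It assumes the default warehouse layout and ignores any LOCATION clause on the database or table. It is not Hive's actual implementation.

```python
# Hypothetical helper modelling Hive's default table location convention.
# Assumes no LOCATION clause on the database or the table.

def default_table_location(warehouse_dir: str, db: str, table: str) -> str:
    """Return the default directory of a managed table."""
    root = warehouse_dir.rstrip("/")
    db, table = db.lower(), table.lower()  # Hive stores identifiers in lower case
    if db == "default":
        # Tables of the default database sit directly under the warehouse root
        return f"{root}/{table}"
    # Every other database gets its own "<db>.db" subdirectory
    return f"{root}/{db}.db/{table}"


if __name__ == "__main__":
    wh = "hdfs://mini01:9000/user/hive/warehouse"
    print(default_table_location(wh, "test_db", "t_sz01"))
    # hdfs://mini01:9000/user/hive/warehouse/test_db.db/t_sz01
```

The same rule explains the database location test_db.db shown by describe database test_db; and the zhang.db/t_sz05 location in Example 1.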

Add table data

```
[yun@mini01 hive]$ pwd
/app/software/hive
[yun@mini01 hive]$ cat t_sz01.dat
1,zhnagsan
3,李四
5,wangwu
7,赵六
2,sunqi
4,周八
6,kkkkk
8,zzzzz
[yun@mini01 hive]$ cp -a t_sz01.dat t_sz01.dat2   # make a copy
[yun@mini01 hive]$ hadoop fs -put t_sz01.dat /user/hive/warehouse/test_db.db/t_sz01    # upload the data
[yun@mini01 hive]$ hadoop fs -put t_sz01.dat2 /user/hive/warehouse/test_db.db/t_sz01   # upload the data
[yun@mini01 hive]$ hadoop fs -ls /user/hive/warehouse/test_db.db/t_sz01   # a table directory can hold several data files
Found 2 items
-rw-r--r--   2 yun supergroup         71 2018-07-12 21:58 /user/hive/warehouse/test_db.db/t_sz01/t_sz01.dat
-rw-r--r--   2 yun supergroup         71 2018-07-12 22:30 /user/hive/warehouse/test_db.db/t_sz01/t_sz01.dat2
```

Query the data in Hive

```
0: jdbc:hive2://mini01:10000> select * from t_sz01;
+------------+--------------+--+
| t_sz01.id  | t_sz01.name  |
+------------+--------------+--+
| 1          | zhnagsan     |
| 3          | 李四         |
| 5          | wangwu       |
| 7          | 赵六         |
| 2          | sunqi        |
| 4          | 周八         |
| 6          | kkkkk        |
| 8          | zzzzz        |
| 1          | zhnagsan     |
| 3          | 李四         |
| 5          | wangwu       |
| 7          | 赵六         |
| 2          | sunqi        |
| 4          | 周八         |
| 6          | kkkkk        |
| 8          | zzzzz        |
+------------+--------------+--+
16 rows selected (0.159 seconds)
```

2.1.2. External Tables

The EXTERNAL keyword creates an external table. It is usually combined with a LOCATION that you provide, so Hive does not use the default table location. When an external table is dropped, the data in it is not deleted.

Create the table

```
# The hdfs://mini01:9000 prefix of LOCATION can be omitted, i.e. location '/user02/hive/database/ext_table'
# If the LOCATION directory does not exist, Hive creates it
hive (test_db)> create external table t_sz02_ext (id int, name string)
comment 'a ext table'
row format delimited fields terminated by ' '
location 'hdfs://mini01:9000/user02/hive/database/ext_table';
OK
Time taken: 0.065 seconds
hive (test_db)> show tables;
OK
t_sz01
t_sz02_ext
Time taken: 0.03 seconds, Fetched: 2 row(s)
0: jdbc:hive2://mini01:10000> select * from t_sz02_ext;   # no data yet
+----------------+------------------+--+
| t_sz02_ext.id  | t_sz02_ext.name  |
+----------------+------------------+--+
+----------------+------------------+--+
No rows selected (0.094 seconds)

# desc formatted t_sz02_ext; shows the table's Location:
# hdfs://mini01:9000/user02/hive/database/ext_table
```

Add table data

```
[yun@mini01 hive]$ pwd
/app/software/hive
[yun@mini01 hive]$ ll
total 12
-rw-rw-r-- 1 yun yun 56 Jul  3 21:26 sz.dat
-rw-rw-r-- 1 yun yun 71 Jul 12 21:53 t_sz01.dat
-rw-rw-r-- 1 yun yun 79 Jul 12 22:15 t_sz02_ext.dat
[yun@mini01 hive]$ cat t_sz02_ext.dat   # the last line is an empty line
1 刘晨
2 王敏
3 张立
4 刘刚
5 孙庆
6 易思玲
7 李娜
8 梦圆圆

[yun@mini01 hive]$ hadoop fs -put t_sz02_ext.dat /user02/hive/database/ext_table   # upload the data
[yun@mini01 hive]$ hadoop fs -ls /user02/hive/database/ext_table
Found 1 items
-rw-r--r--   2 yun supergroup         79 2018-07-12 22:16 /user02/hive/database/ext_table/t_sz02_ext.dat
```

Query the data in Hive

```
0: jdbc:hive2://mini01:10000> select * from t_sz02_ext;
+----------------+------------------+--+
| t_sz02_ext.id  | t_sz02_ext.name  |
+----------------+------------------+--+
| 1              | 刘晨             |
| 2              | 王敏             |
| 3              | 张立             |
| 4              | 刘刚             |
| 5              | 孙庆             |
| 6              | 易思玲           |
| 7              | 李娜             |
| 8              | 梦圆圆           |
| NULL           | NULL             |   # because the last line of the data file is empty
+----------------+------------------+--+
9 rows selected (0.14 seconds)
```
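The NULL row comes from how a delimited text table is read. Every line in a data file is one row, and any field that is missing, or cannot be converted to the column type, comes back as NULL. The Python sketch below is a simplified model of that behaviour for this table's schema (id int, name string, space-delimited). The helper names are hypothetical. Hive's real LazySimpleSerDe handles many more cases, such as escaping, other types and extra columns.

```python
from typing import List, Optional, Tuple

# One row of a table declared as (id int, name string)
Row = Tuple[Optional[int], Optional[str]]

NULL_MARKER = "\\N"  # the text SerDe's default representation of NULL


def parse_line(line: str, delim: str = " ") -> Row:
    """Split one line into (id, name); missing or unconvertible fields become None."""
    fields = line.split(delim)
    try:
        id_val: Optional[int] = int(fields[0])
    except ValueError:
        id_val = None  # "" (an empty line) or "\N" cannot be read as an INT
    raw_name = fields[1] if len(fields) > 1 else None  # no second field -> NULL
    name_val = None if raw_name == NULL_MARKER else raw_name
    return id_val, name_val


def read_table(text: str, delim: str = " ") -> List[Row]:
    """Parse a whole data file: every line, including an empty one, is a row."""
    lines = text.split("\n")
    if lines[-1] == "":
        lines.pop()  # the newline ending the last record does not start a new row
    return [parse_line(line, delim) for line in lines]


if __name__ == "__main__":
    sample = "1 刘晨\n2 王敏\n\n"  # ends with an empty line, like t_sz02_ext.dat
    print(read_table(sample))  # [(1, '刘晨'), (2, '王敏'), (None, None)]
```

The file size fits this picture too. The eight records plus the single empty line add up to the 79 bytes reported by hadoop fs -ls.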

2.1.3. Partitioned Tables

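Partitioned tables are created with the PARTITIONED BY clause. A table can have one or more partition columns. Hive creates a separate data directory for each distinct combination of values in the partition columns.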
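To make the directory-per-partition layout concrete, the sketch below builds the path of one partition. It also lists the distinct partitions present in a set of rows, in the same col=value/col=value form used on HDFS and by SHOW PARTITIONS. Both helpers are hypothetical. Real Hive also escapes special characters in partition values, which is omitted here.

```python
from typing import Dict, List, Sequence, Tuple


def partition_path(table_dir: str, spec: Sequence[Tuple[str, str]]) -> str:
    """Directory of one partition, e.g. <table_dir>/dt=20180711/country=CN."""
    parts = [f"{col}={val}" for col, val in spec]  # one directory level per partition column
    return "/".join([table_dir.rstrip("/")] + parts)


def list_partitions(rows: List[Dict[str, str]], partition_cols: Sequence[str]) -> List[str]:
    """Distinct partition names in SHOW PARTITIONS style, sorted for readability."""
    names = {"/".join(f"{c}={row[c]}" for c in partition_cols) for row in rows}
    return sorted(names)


if __name__ == "__main__":
    print(partition_path("/user/hive/warehouse/test_db.db/t_sz03_part",
                         [("dt", "20180711"), ("country", "CN")]))
    # /user/hive/warehouse/test_db.db/t_sz03_part/dt=20180711/country=CN
```

Each distinct (dt, country) pair maps to exactly one directory. This is why loading four files with four different partition specs produces the four partitions listed by show partitions.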

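You may get this error when creating a partitioned table: "FAILED: Error in semantic analysis: Column repeated in partitioning columns". It means you are trying to include a partition column in the data of the table itself. You may really have such a column in your data. However, the partitioning creates a pseudo-column that can be queried, so you must rename the table column to something else that users should not query on.

For example, suppose the original unpartitioned table has three columns: id, date and name.

```
id     int,
date   date,
name   varchar
```

Now you want to partition by date. Your Hive definition can use "dtDontQuery" as a column name, so that "date" can be used for partitioning (and querying).

```
# date is a keyword (see below), so it is quoted with backticks here
create table table_name (
  id                int,
  dtDontQuery       string,
  name              string
)
partitioned by (`date` string)
```

Your users still query with "where date = '...'", while the second column, dtDontQuery, holds the original values.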

Create the table

```
# date cannot be used as a column name because it is a keyword
hive (test_db)> create table t_sz03_part (id int, name string)
comment 'This is a partitioned table'
partitioned by (dt string, country string)
row format delimited fields terminated by ',';
```

Add data

```
[yun@mini01 hive]$ pwd
/app/software/hive
[yun@mini01 hive]$ cat t_sz03_20180711.dat1
1,张三_20180711
2,lisi_20180711
3,Wangwu_20180711
[yun@mini01 hive]$ cat t_sz03_20180711.dat2
11,Tom_20180711
12,Dvid_20180711
13,cherry_20180711
[yun@mini01 hive]$ cat t_sz03_20180712.dat1
1,张三_20180712
2,lisi_20180712
3,Wangwu_20180712
[yun@mini01 hive]$ cat t_sz03_20180712.dat2
11,Tom_20180712
12,Dvid_20180712
13,cherry_20180712
#### Load the data in Hive
hive (test_db)> load data local inpath '/app/software/hive/t_sz03_20180711.dat1' into table t_sz03_part partition (dt='20180711', country='CN');
Loading data to table test_db.t_sz03_part partition (dt=20180711, country=CN)
Partition test_db.t_sz03_part{dt=20180711, country=CN} stats: [numFiles=1, numRows=0, totalSize=52, rawDataSize=0]
OK
Time taken: 0.406 seconds
hive (test_db)> load data local inpath '/app/software/hive/t_sz03_20180711.dat2' into table t_sz03_part partition (dt='20180711', country='US');
Loading data to table test_db.t_sz03_part partition (dt=20180711, country=US)
Partition test_db.t_sz03_part{dt=20180711, country=US} stats: [numFiles=1, numRows=0, totalSize=52, rawDataSize=0]
OK
Time taken: 0.453 seconds
hive (test_db)> load data local inpath '/app/software/hive/t_sz03_20180712.dat1' into table t_sz03_part partition (dt='20180712', country='CN');
Loading data to table test_db.t_sz03_part partition (dt=20180712, country=CN)
Partition test_db.t_sz03_part{dt=20180712, country=CN} stats: [numFiles=1, numRows=0, totalSize=52, rawDataSize=0]
OK
Time taken: 0.381 seconds
hive (test_db)> load data local inpath '/app/software/hive/t_sz03_20180712.dat2' into table t_sz03_part partition (dt='20180712', country='US');
Loading data to table test_db.t_sz03_part partition (dt=20180712, country=US)
Partition test_db.t_sz03_part{dt=20180712, country=US} stats: [numFiles=1, numRows=0, totalSize=52, rawDataSize=0]
OK
Time taken: 0.506 seconds
```

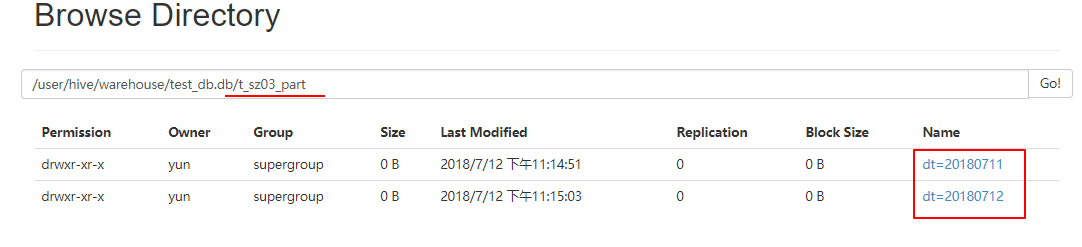

Viewed in the browser

Query the data in Hive

```
0: jdbc:hive2://mini01:10000> select * from t_sz03_part;
+-----------------+-------------------+-----------------+----------------------+--+
| t_sz03_part.id  | t_sz03_part.name  | t_sz03_part.dt  | t_sz03_part.country  |
+-----------------+-------------------+-----------------+----------------------+--+
| 1               | 张三_20180711     | 20180711        | CN                   |
| 2               | lisi_20180711     | 20180711        | CN                   |
| 3               | Wangwu_20180711   | 20180711        | CN                   |
| 11              | Tom_20180711      | 20180711        | US                   |
| 12              | Dvid_20180711     | 20180711        | US                   |
| 13              | cherry_20180711   | 20180711        | US                   |
| 1               | 张三_20180712     | 20180712        | CN                   |
| 2               | lisi_20180712     | 20180712        | CN                   |
| 3               | Wangwu_20180712   | 20180712        | CN                   |
| 11              | Tom_20180712      | 20180712        | US                   |
| 12              | Dvid_20180712     | 20180712        | US                   |
| 13              | cherry_20180712   | 20180712        | US                   |
+-----------------+-------------------+-----------------+----------------------+--+
12 rows selected (0.191 seconds)
0: jdbc:hive2://mini01:10000> show partitions t_sz03_part;   # show the partitions of the partitioned table
+-------------------------+--+
|        partition        |
+-------------------------+--+
| dt=20180711/country=CN  |
| dt=20180711/country=US  |
| dt=20180712/country=CN  |
| dt=20180712/country=US  |
+-------------------------+--+
4 rows selected (0.164 seconds)
```

2.1.4. Create Table As Select (CTAS)

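A table can also be created and populated by the results of a query in a single create-table-as-select (CTAS) statement. A table created by CTAS is atomic: other users cannot see it until all the query results have been populated. So other users either see the table with its complete results, or do not see the table at all.

CTAS restrictions (the target table is the table being created):

- The target table cannot be a partitioned table.
- The target table cannot be an external table.
- The target table cannot be a list bucketing table, i.e. a skewed table created with SKEWED BY ... STORED AS DIRECTORIES.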

```
# Example:
CREATE TABLE new_key_value_store
   ROW FORMAT SERDE "org.apache.hadoop.hive.serde2.columnar.ColumnarSerDe"
   STORED AS RCFile
   AS
SELECT (key % 1024) new_key, concat(key, value) key_value_pair
FROM key_value_store
SORT BY new_key, key_value_pair;
```

Example

```
hive (test_db)> create table t_sz02_ext_new
row format delimited fields terminated by '#'
AS
SELECT id , concat(name, '_', id) name2
FROM t_sz02_ext
SORT BY name2;

0: jdbc:hive2://mini01:10000> show tables;
+-----------------+--+
|    tab_name     |
+-----------------+--+
| t_sz01          |
| t_sz02_ext      |
| t_sz02_ext_new  |
| t_sz03_part     |
| t_sz100_ext     |
| t_sz101_ext     |
+-----------------+--+
6 rows selected (0.069 seconds)
0: jdbc:hive2://mini01:10000> select * from t_sz02_ext_new;
+--------------------+-----------------------+--+
| t_sz02_ext_new.id  | t_sz02_ext_new.name2  |
+--------------------+-----------------------+--+
| NULL               | NULL                  |
| 4                  | 刘刚_4                |
| 1                  | 刘晨_1                |
| 5                  | 孙庆_5                |
| 3                  | 张立_3                |
| 6                  | 易思玲_6              |
| 7                  | 李娜_7                |
| 8                  | 梦圆圆_8              |
| 2                  | 王敏_2                |
+--------------------+-----------------------+--+
9 rows selected (0.094 seconds)

# The location can be found with desc formatted t_sz02_ext_new;
hive (test_db)> dfs -ls /user/hive/warehouse/test_db.db/t_sz02_ext_new/;
Found 1 items
-rwxr-xr-x   2 yun supergroup        100 2018-07-12 23:50 /user/hive/warehouse/test_db.db/t_sz02_ext_new/000000_0
hive (test_db)> dfs -cat /user/hive/warehouse/test_db.db/t_sz02_ext_new/000000_0;
\N#\N
4#刘刚_4
1#刘晨_1
5#孙庆_5
3#张立_3
6#易思玲_6
7#李娜_7
8#梦圆圆_8
2#王敏_2
```

Viewing the file with the hadoop command

```
# The location can be found with desc formatted t_sz02_ext_new;
[yun@mini01 hive]$ hadoop fs -ls /user/hive/warehouse/test_db.db/t_sz02_ext_new;
Found 1 items
-rwxr-xr-x   2 yun supergroup        100 2018-07-12 23:44 /user/hive/warehouse/test_db.db/t_sz02_ext_new/000000_0
[yun@mini01 hive]$ hadoop fs -cat /user/hive/warehouse/test_db.db/t_sz02_ext_new/000000_0
\N#\N
4#刘刚_4
1#刘晨_1
5#孙庆_5
3#张立_3
6#易思玲_6
7#李娜_7
8#梦圆圆_8
2#王敏_2
```
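The first line of that file holds the NULL row. Hive's default text SerDe writes NULL as the two-character marker \N, which is the default value of the serialization.null.format property. It joins the columns with the table's field delimiter, '#' here. Below is a minimal Python sketch of that output format, using a hypothetical serialize_row helper.

```python
from typing import Optional, Sequence

NULL_MARKER = "\\N"  # default value of serialization.null.format


def serialize_row(values: Sequence[Optional[object]], delim: str = "#") -> str:
    """Render one row as a delimited text line; None (SQL NULL) becomes \\N."""
    return delim.join(NULL_MARKER if v is None else str(v) for v in values)


if __name__ == "__main__":
    print(serialize_row([None, None]))   # \N#\N
    print(serialize_row([4, "刘刚_4"]))  # 4#刘刚_4
```

With \N as the marker, the nine lines of 000000_0 add up to exactly the 100 bytes reported by the file listing.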

2.1.5. Create Table Like

Copies the definition of an existing table into a new table (without its data).

```
CREATE TABLE empty_key_value_store
LIKE key_value_store [TBLPROPERTIES (property_name=property_value, ...)];
```

Example

```
hive (test_db)> create table t_sz03_part_new
like t_sz03_part tblproperties ('proper1'='value1', 'proper2'='value2');
OK
Time taken: 0.083 seconds
0: jdbc:hive2://mini01:10000> select * from t_sz03_part_new;   # only the table structure is copied, not the data
+---------------------+-----------------------+---------------------+--------------------------+--+
| t_sz03_part_new.id  | t_sz03_part_new.name  | t_sz03_part_new.dt  | t_sz03_part_new.country  |
+---------------------+-----------------------+---------------------+--------------------------+--+
+---------------------+-----------------------+---------------------+--------------------------+--+
No rows selected (0.087 seconds)
hive (test_db)> create table t_sz04_like
like t_sz02_ext tblproperties ('proper1'='value1', 'proper2'='value2');
No rows affected (0.153 seconds)

0: jdbc:hive2://mini01:10000> select * from t_sz04_like;
+-----------------+-------------------+--+
| t_sz04_like.id  | t_sz04_like.name  |
+-----------------+-------------------+--+
+-----------------+-------------------+--+
```

desc formatted tab_name; shows that t_sz03_part and t_sz03_part_new have the same table structure; only their tblproperties differ.

Even when the source table is an external table, a table created with plain CREATE TABLE ... LIKE, as above, is a MANAGED_TABLE.

2.1.6. Bucketed Sorted Tables

```
CREATE TABLE page_view(viewTime INT, userid BIGINT,
     page_url STRING, referrer_url STRING,
     ip STRING COMMENT 'IP Address of the User')
 COMMENT 'This is the page view table'
 PARTITIONED BY(dt STRING, country STRING)
 CLUSTERED BY(userid) SORTED BY(viewTime) INTO 32 BUCKETS
 ROW FORMAT DELIMITED
   FIELDS TERMINATED BY '\001'
   COLLECTION ITEMS TERMINATED BY '\002'
   MAP KEYS TERMINATED BY '\003'
 STORED AS SEQUENCEFILE;
```

CLUSTERED BY

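Hive can further organize each table or partition into buckets, which divide the data at a finer granularity. Buckets are organized on a column. Hive hashes the column value and takes the remainder after dividing by the number of buckets; that remainder decides which bucket a record is stored in.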
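The hash-and-remainder rule fits in a few lines of Python. This sketch assumes the scheme Hive 1.x uses for an INT bucketing column: the hash of an INT is the value itself, and the bucket is (hash & Integer.MAX_VALUE) % num_buckets. Newer Hive releases may use a different hash function, and other column types hash differently. The helper names are hypothetical. With 4 buckets and ids 1 to 24, it reproduces the contents of the bucket files 000000_0 to 000003_0 listed later in this section.

```python
from typing import Dict, Iterable, List

INT_MAX = 0x7FFFFFFF  # Java Integer.MAX_VALUE


def bucket_for_int(value: int, num_buckets: int) -> int:
    """Bucket index of an INT value (assumed: hash(value) == value)."""
    hash_code = value
    return (hash_code & INT_MAX) % num_buckets  # clear the sign bit, then take the remainder


def bucket_files(ids: Iterable[int], num_buckets: int) -> Dict[str, List[int]]:
    """Group ids by bucket, keyed by file names like 000000_0 (sorted inside each file)."""
    files: Dict[str, List[int]] = {f"{b:06d}_0": [] for b in range(num_buckets)}
    for i in sorted(ids):
        files[f"{bucket_for_int(i, num_buckets):06d}_0"].append(i)
    return files


if __name__ == "__main__":
    for name, ids in bucket_files(range(1, 25), 4).items():
        print(name, ids)
    # 000000_0 [4, 8, 12, 16, 20, 24]
    # 000001_0 [1, 5, 9, 13, 17, 21]
    # 000002_0 [2, 6, 10, 14, 18, 22]
    # 000003_0 [3, 7, 11, 15, 19, 23]
```

Masking with INT_MAX keeps negative hash values from producing a negative bucket index, as Java's % operator would otherwise do.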

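There are two reasons to organize a table (or partition) into buckets:

(1) More efficient query processing. Buckets add extra structure to a table, and Hive can take advantage of it for some queries. In particular, a join of two tables that are bucketed on the same columns (including the join columns) can be implemented efficiently as a map-side join. Suppose two tables share a join column and both are bucketed on it. Then only the buckets that hold the same column values need to be joined with each other, which greatly reduces the amount of data the JOIN has to process.

(2) More efficient sampling. When working with large datasets, it is very convenient to try out a query on a small fraction of the data while developing and refining it.

Notes

1. ORDER BY sorts the input globally, so it uses a single reducer. With large inputs this can take a long time.

2. SORT BY is not a global sort: rows are sorted before they enter each reducer. If you sort with SORT BY and set mapred.reduce.tasks > 1 (mapreduce.job.reduces in newer Hadoop versions), each reducer's output is sorted, but the overall output is not.

3. DISTRIBUTE BY (column) sends rows to different reducers according to the given column, using a hash of its value.

4. CLUSTER BY (column) does everything DISTRIBUTE BY does and also sorts by that column.

Therefore, when the distribution column and the sort column are the same, CLUSTER BY = DISTRIBUTE BY + SORT BY.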
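The four notes above can be modelled with a toy Python simulation. DISTRIBUTE BY routes rows to reducers by hashing the key. SORT BY sorts each reducer's rows independently. ORDER BY funnels everything through a single reducer. This is not Hive code. It assumes hash(k) = k for INT keys and routes rows with (hash & 0x7FFFFFFF) % num_reducers, and the function names are hypothetical. Run on ids 24 down to 1 with 4 reducers, it gives the order 4, 8, ..., 24, 1, 5, ... that appears in the cluster by output later in this section.

```python
from typing import Callable, List, TypeVar

R = TypeVar("R")


def distribute_by(rows: List[R], key: Callable[[R], int], num_reducers: int) -> List[List[R]]:
    """DISTRIBUTE BY: route each row to reducer (hash(key) & 0x7FFFFFFF) % num_reducers."""
    reducers: List[List[R]] = [[] for _ in range(num_reducers)]
    for row in rows:
        reducers[(key(row) & 0x7FFFFFFF) % num_reducers].append(row)  # assumes hash(k) == k
    return reducers


def sort_by(reducers: List[List[R]], key: Callable[[R], int]) -> List[List[R]]:
    """SORT BY: sort inside each reducer only; nothing orders rows across reducers."""
    return [sorted(part, key=key) for part in reducers]


def cluster_by(rows: List[R], key: Callable[[R], int], num_reducers: int) -> List[List[R]]:
    """CLUSTER BY k is DISTRIBUTE BY k followed by SORT BY k."""
    return sort_by(distribute_by(rows, key, num_reducers), key)


def order_by(rows: List[R], key: Callable[[R], int]) -> List[List[R]]:
    """ORDER BY: a single reducer receives every row, giving a total order."""
    return [sorted(rows, key=key)]


def read_outputs(reducers: List[List[R]]) -> List[R]:
    """Concatenate reducer outputs in file order (000000_0, 000001_0, ...)."""
    return [row for part in reducers for row in part]


if __name__ == "__main__":
    ids = list(range(24, 0, -1))
    print(read_outputs(cluster_by(ids, lambda x: x, 4))[:8])  # [4, 8, 12, 16, 20, 24, 1, 5]
    print(read_outputs(order_by(ids, lambda x: x))[:8])       # [1, 2, 3, 4, 5, 6, 7, 8]
```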

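Note: data is loaded into a bucketed table by inserting the results of a query over another table.

The main purpose of bucketed tables is to make joins more efficient.

(Think about this:

select a.id,a.name,b.addr from a join b on a.id = b.id;

If tables a and b are both bucketed on the id column,

does this join still need to compare every row of one table with every row of the other, as in a full Cartesian product?)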
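A small Python sketch shows why bucketing helps with the question above. Suppose both tables are bucketed on id with the same number of buckets. Equal ids always land in buckets with the same index, so bucket i of a only needs to be joined with bucket i of b. The sketch uses hypothetical helpers, INT keys and an assumed hash(k) = k. It checks that joining bucket pairs gives exactly the same result as comparing the whole tables.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Pair = Tuple[int, str]  # (join key, payload)


def bucketize(rows: List[Pair], num_buckets: int) -> Dict[int, List[Pair]]:
    """Split rows into buckets by (key & 0x7FFFFFFF) % num_buckets (assumes hash(k) == k)."""
    buckets: Dict[int, List[Pair]] = defaultdict(list)
    for key, val in rows:
        buckets[(key & 0x7FFFFFFF) % num_buckets].append((key, val))
    return buckets


def naive_join(a: List[Pair], b: List[Pair]) -> List[Tuple[int, str, str]]:
    """Reference result: compare every row of a with every row of b."""
    return sorted((ka, va, vb) for ka, va in a for kb, vb in b if ka == kb)


def bucket_join(a: List[Pair], b: List[Pair], num_buckets: int) -> List[Tuple[int, str, str]]:
    """Join bucket i of a only with bucket i of b; equal keys always share a bucket index."""
    ba, bb = bucketize(a, num_buckets), bucketize(b, num_buckets)
    out: List[Tuple[int, str, str]] = []
    for i in range(num_buckets):
        index: Dict[int, List[str]] = defaultdict(list)
        for kb, vb in bb.get(i, []):
            index[kb].append(vb)  # small per-bucket lookup table, as in a map-side join
        for ka, va in ba.get(i, []):
            for vb in index.get(ka, []):
                out.append((ka, va, vb))
    return sorted(out)


if __name__ == "__main__":
    a = [(i, f"name{i}") for i in range(1, 25)]
    b = [(i, f"addr{i}") for i in range(1, 25, 2)]
    print(bucket_join(a, b, 4) == naive_join(a, b))  # True
```

This only holds when both tables use the same bucketing column and a compatible number of buckets. Otherwise equal keys could end up at different bucket indexes.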

Example

```
# Create a bucketed table
hive (test_db)> create table t_sz05_buck (id int, name string)
clustered by(id) sorted by(id) into 4 buckets
row format delimited fields terminated by ',';
OK
Time taken: 0.267 seconds
0: jdbc:hive2://mini01:10000> select * from t_sz05_buck;
+-----------------+-------------------+--+
| t_sz05_buck.id  | t_sz05_buck.name  |
+-----------------+-------------------+--+
+-----------------+-------------------+--+
No rows selected (0.095 seconds)
```

Prepare the data

```
[yun@mini01 hive]$ pwd
/app/software/hive
[yun@mini01 hive]$ cat t_sz05_buck.dat
1,mechanics
2,mechanical engineering
3,Instrument Science and technology
4,Material science and Engineering
5,Dynamic engineering and Engineering Thermophysics
6,Electrical engineering
7,Control science and Engineering
8,Computer science and technology
9,Architecture
10,irrigation works
11,Nuclear science and technology
12,Environmental Science and Engineering
13,Urban and rural planning
14,Landscape architecture
15,Electronic Science and technology
16,Information and Communication Engineering
17,Civil Engineering
18,Chemical engineering and technology
19,software engineering
20,biomedical engineering
21,Safety science and Engineering
22,Aeronautical and Astronautical Science and Technology
23,Transportation Engineering
24,Optical engineering
############## Load the data into an ordinary (non-bucketed) Hive table
hive (test_db)> create table t_sz05 (id int, name string)
row format delimited fields terminated by ',';
OK
Time taken: 0.091 seconds
hive (test_db)> load data local inpath '/app/software/hive/t_sz05_buck.dat' into table t_sz05;   # load the data
Loading data to table test_db.t_sz05
Table test_db.t_sz05 stats: [numFiles=1, totalSize=753]
OK
Time taken: 0.276 seconds
hive (test_db)> select * from t_sz05;   # the query returns the data as expected
...
```

Set the bucketing parameters

```
# Original values (same in the Hive CLI and at the 0: jdbc:hive2 beeline prompt)
hive (test_db)> set hive.enforce.bucketing;
hive.enforce.bucketing=false
hive (test_db)> set mapreduce.job.reduces;
mapreduce.job.reduces=-1
# Set the parameters
hive (test_db)> set hive.enforce.bucketing = true;
hive (test_db)> set mapreduce.job.reduces = 4;
hive (test_db)> set hive.enforce.bucketing;
hive.enforce.bucketing=true
hive (test_db)> set mapreduce.job.reduces;
mapreduce.job.reduces=4
######### Now query t_sz05 ###############################
0: jdbc:hive2://mini01:10000> select id, name from t_sz05 cluster by (id);
INFO : Number of reduce tasks not specified. Defaulting to jobconf value of: 4
INFO : In order to change the average load for a reducer (in bytes):
INFO :   set hive.exec.reducers.bytes.per.reducer=<number>
INFO : In order to limit the maximum number of reducers:
INFO :   set hive.exec.reducers.max=<number>
INFO : In order to set a constant number of reducers:
INFO :   set mapreduce.job.reduces=<number>
INFO : number of splits:1
INFO : Submitting tokens for job: job_1531531561184_0009
INFO : The url to track the job: http://mini02:8088/proxy/application_1531531561184_0009/
INFO : Starting Job = job_1531531561184_0009, Tracking URL = http://mini02:8088/proxy/application_1531531561184_0009/
INFO : Kill Command = /app/hadoop/bin/hadoop job -kill job_1531531561184_0009
INFO : Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 4
INFO : 2018-07-14 10:56:10,711 Stage-1 map = 0%, reduce = 0%
INFO : 2018-07-14 10:56:15,963 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.42 sec
INFO : 2018-07-14 10:56:30,998 Stage-1 map = 100%, reduce = 25%, Cumulative CPU 4.94 sec
INFO : 2018-07-14 10:56:32,196 Stage-1 map = 100%, reduce = 50%, Cumulative CPU 11.03 sec
INFO : 2018-07-14 10:56:35,630 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 22.46 sec
INFO : MapReduce Total cumulative CPU time: 22 seconds 460 msec
INFO : Ended Job = job_1531531561184_0009
+-----+--------------------------------------------------------+--+
| id  |                          name                          |
+-----+--------------------------------------------------------+--+
| 4   | Material science and Engineering                       |
| 8   | Computer science and technology                        |
| 12  | Environmental Science and Engineering                  |
| 16  | Information and Communication Engineering              |
| 20  | biomedical engineering                                 |
| 24  | Optical engineering                                    |
| 1   | mechanics                                              |
| 5   | Dynamic engineering and Engineering Thermophysics      |
| 9   | Architecture                                           |
| 13  | Urban and rural planning                               |
| 17  | Civil Engineering                                      |
| 21  | Safety science and Engineering                         |
| 2   | mechanical engineering                                 |
| 6   | Electrical engineering                                 |
| 10  | irrigation works                                       |
| 14  | Landscape architecture                                 |
| 18  | Chemical engineering and technology                    |
| 22  | Aeronautical and Astronautical Science and Technology  |
| 3   | Instrument Science and technology                      |
| 7   | Control science and Engineering                        |
| 11  | Nuclear science and technology                         |
| 15  | Electronic Science and technology                      |
| 19  | software engineering                                   |
| 23  | Transportation Engineering                             |
+-----+--------------------------------------------------------+--+
24 rows selected (33.214 seconds)
```

Load data into the bucketed table ★★★★★

```
# select id, name from t_sz05 cluster by (id);
# is equivalent to select id, name from t_sz05 distribute by (id) sort by (id);
# So when the bucketing column and the sort column are the same, cluster by = distribute by + sort by
# To bucket by one column and sort by another, use the distribute by ... sort by form instead
0: jdbc:hive2://mini01:10000> insert into table t_sz05_buck
select id, name from t_sz05 cluster by (id);
INFO : Number of reduce tasks determined at compile time: 4
INFO : In order to change the average load for a reducer (in bytes):
INFO :   set hive.exec.reducers.bytes.per.reducer=<number>
INFO : In order to limit the maximum number of reducers:
INFO :   set hive.exec.reducers.max=<number>
INFO : In order to set a constant number of reducers:
INFO :   set mapreduce.job.reduces=<number>
INFO : number of splits:1
INFO : Submitting tokens for job: job_1531531561184_0010
INFO : The url to track the job: http://mini02:8088/proxy/application_1531531561184_0010/
INFO : Starting Job = job_1531531561184_0010, Tracking URL = http://mini02:8088/proxy/application_1531531561184_0010/
INFO : Kill Command = /app/hadoop/bin/hadoop job -kill job_1531531561184_0010
INFO : Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 4
INFO : 2018-07-14 11:01:40,785 Stage-1 map = 0%, reduce = 0%
INFO : 2018-07-14 11:01:46,089 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.49 sec
INFO : 2018-07-14 11:02:03,691 Stage-1 map = 100%, reduce = 25%, Cumulative CPU 10.35 sec
INFO : 2018-07-14 11:02:04,962 Stage-1 map = 100%, reduce = 75%, Cumulative CPU 25.76 sec
INFO : 2018-07-14 11:02:05,987 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 32.36 sec
INFO : MapReduce Total cumulative CPU time: 32 seconds 360 msec
INFO : Ended Job = job_1531531561184_0010
INFO : Loading data to table test_db.t_sz05_buck from hdfs://mini01:9000/user/hive/warehouse/test_db.db/t_sz05_buck/.hive-staging_hive_2018-07-14_11-01-34_616_4744571023046037741-1/-ext-10000
INFO : Table test_db.t_sz05_buck stats: [numFiles=4, numRows=24, totalSize=753, rawDataSize=729]
No rows affected (33.762 seconds)
0: jdbc:hive2://mini01:10000> select * from t_sz05_buck;
+-----------------+--------------------------------------------------------+--+
| t_sz05_buck.id  |                    t_sz05_buck.name                    |
+-----------------+--------------------------------------------------------+--+
| 4               | Material science and Engineering                       |
| 8               | Computer science and technology                        |
| 12              | Environmental Science and Engineering                  |
| 16              | Information and Communication Engineering              |
| 20              | biomedical engineering                                 |
| 24              | Optical engineering                                    |
| 1               | mechanics                                              |
| 5               | Dynamic engineering and Engineering Thermophysics      |
| 9               | Architecture                                           |
| 13              | Urban and rural planning                               |
| 17              | Civil Engineering                                      |
| 21              | Safety science and Engineering                         |
| 2               | mechanical engineering                                 |
| 6               | Electrical engineering                                 |
| 10              | irrigation works                                       |
| 14              | Landscape architecture                                 |
| 18              | Chemical engineering and technology                    |
| 22              | Aeronautical and Astronautical Science and Technology  |
| 3               | Instrument Science and technology                      |
| 7               | Control science and Engineering                        |
| 11              | Nuclear science and technology                         |
| 15              | Electronic Science and technology                      |
| 19              | software engineering                                   |
| 23              | Transportation Engineering                             |
+-----------------+--------------------------------------------------------+--+
24 rows selected (0.097 seconds)
```

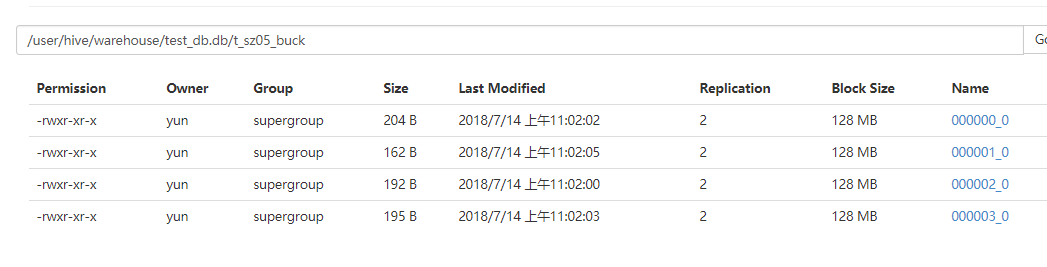

Viewed in the browser

```
0: jdbc:hive2://mini01:10000> dfs -cat /user/hive/warehouse/test_db.db/t_sz05_buck/000000_0;
+-----------------------------------------------+--+
|                  DFS Output                   |
+-----------------------------------------------+--+
| 4,Material science and Engineering            |
| 8,Computer science and technology             |
| 12,Environmental Science and Engineering      |
| 16,Information and Communication Engineering  |
| 20,biomedical engineering                     |
| 24,Optical engineering                        |
+-----------------------------------------------+--+
6 rows selected (0.016 seconds)
0: jdbc:hive2://mini01:10000> dfs -cat /user/hive/warehouse/test_db.db/t_sz05_buck/000001_0;
+------------------------------------------------------+--+
|                      DFS Output                      |
+------------------------------------------------------+--+
| 1,mechanics                                          |
| 5,Dynamic engineering and Engineering Thermophysics  |
| 9,Architecture                                       |
| 13,Urban and rural planning                          |
| 17,Civil Engineering                                 |
| 21,Safety science and Engineering                    |
+------------------------------------------------------+--+
6 rows selected (0.015 seconds)
0: jdbc:hive2://mini01:10000> dfs -cat /user/hive/warehouse/test_db.db/t_sz05_buck/000002_0;
+-----------------------------------------------------------+--+
|                        DFS Output                         |
+-----------------------------------------------------------+--+
| 2,mechanical engineering                                  |
| 6,Electrical engineering                                  |
| 10,irrigation works                                       |
| 14,Landscape architecture                                 |
| 18,Chemical engineering and technology                    |
| 22,Aeronautical and Astronautical Science and Technology  |
+-----------------------------------------------------------+--+
6 rows selected (0.014 seconds)
0: jdbc:hive2://mini01:10000> dfs -cat /user/hive/warehouse/test_db.db/t_sz05_buck/000003_0;
+---------------------------------------+--+
|              DFS Output               |
+---------------------------------------+--+
| 3,Instrument Science and technology   |
| 7,Control science and Engineering     |
| 11,Nuclear science and technology     |
| 15,Electronic Science and technology  |
| 19,software engineering               |
| 23,Transportation Engineering         |
+---------------------------------------+--+
6 rows selected (0.016 seconds)
```

2.1.7. Temporary Tables

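A table created as a temporary table is visible only to the current session. Its data is stored in the user's scratch directory and deleted when the session ends.

A temporary table may be created with the same database/table name as a permanent table that already exists. In that case, any reference to that name within the session resolves to the temporary table rather than the permanent one. The user cannot access the original table in that session unless the temporary table is dropped or renamed to a non-conflicting name.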
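The shadowing rule can be pictured as a lookup order: the session's temporary tables are consulted first, then the shared metastore. The Python class below is a hypothetical model of that lookup order only. It is not how HiveServer2 is implemented, and the locations are plain strings for illustration.

```python
from typing import Dict, Optional


class Session:
    """Hypothetical model of per-session table-name resolution."""

    def __init__(self, metastore: Dict[str, str]):
        self.metastore = metastore              # shared permanent tables: name -> location
        self.temp_tables: Dict[str, str] = {}   # visible to this session only

    def create_temporary_table(self, name: str, location: str) -> None:
        self.temp_tables[name.lower()] = location

    def drop_temporary_table(self, name: str) -> None:
        self.temp_tables.pop(name.lower(), None)

    def resolve(self, name: str) -> Optional[str]:
        key = name.lower()  # table names are case-insensitive
        if key in self.temp_tables:
            return self.temp_tables[key]  # a temporary table shadows the permanent one
        return self.metastore.get(key)


if __name__ == "__main__":
    ms = {"test_db.t1": "/user/hive/warehouse/test_db.db/t1"}
    s = Session(ms)
    s.create_temporary_table("test_db.t1", "/tmp/hive/yun/session-id/_tmp_space.db/t1")
    print(s.resolve("test_db.t1"))  # /tmp/hive/yun/session-id/_tmp_space.db/t1
```

Because temp_tables lives on the session object, a second session over the same metastore still resolves the name to the permanent table.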

Temporary tables have the following limitations:

- Partition columns are not supported.
- Creating indexes is not supported.

Example

```
0: jdbc:hive2://mini01:10000> create temporary table t_sz06_temp (id int, name string)
0: jdbc:hive2://mini01:10000> row format delimited fields terminated by ',';
No rows affected (0.062 seconds)
0: jdbc:hive2://mini01:10000> select * from t_sz06_temp ;
+-----------------+-------------------+--+
| t_sz06_temp.id  | t_sz06_temp.name  |
+-----------------+-------------------+--+
+-----------------+-------------------+--+
No rows selected (0.042 seconds)
0: jdbc:hive2://mini01:10000> load data local inpath '/app/software/hive/t_sz01.dat' into table t_sz06_temp ;   # load the data
INFO : Loading data to table test_db.t_sz06_temp from file:/app/software/hive/t_sz01.dat
INFO : Table test_db.t_sz06_temp stats: [numFiles=1, totalSize=71]
No rows affected (0.09 seconds)
0: jdbc:hive2://mini01:10000> select * from t_sz06_temp ;
+-----------------+-------------------+--+
| t_sz06_temp.id  | t_sz06_temp.name  |
+-----------------+-------------------+--+
| 1               | zhnagsan          |
| 3               | 李四              |
| 5               | wangwu            |
| 7               | 赵六              |
| 2               | sunqi             |
| 4               | 周八              |
| 6               | kkkkk             |
| 8               | zzzzz             |
+-----------------+-------------------+--+
8 rows selected (0.062 seconds)
# Its Location is hdfs://mini01:9000/tmp/hive/yun/4bf7c650-56ed-4f93-8a73-23420c7292a1/_tmp_space.db/158ad837-ee43-4870-9e34-6ad5cd4b292a
0: jdbc:hive2://mini01:10000> desc formatted t_sz06_temp;
+-------------------------------+------------------------------------------------------------+-----------------------+--+
|           col_name            |                         data_type                          |        comment        |
+-------------------------------+------------------------------------------------------------+-----------------------+--+
| # col_name                    | data_type                                                  | comment               |
|                               | NULL                                                       | NULL                  |
| id                            | int                                                        |                       |
| name                          | string                                                     |                       |
|                               | NULL                                                       | NULL                  |
| # Detailed Table Information  | NULL                                                       | NULL                  |
| Database:                     | test_db                                                    | NULL                  |
| Owner:                        | yun                                                        | NULL                  |
| CreateTime:                   | Sat Jul 14 11:47:14 CST 2018                               | NULL                  |
| LastAccessTime:               | UNKNOWN                                                    | NULL                  |
| Protect Mode:                 | None                                                       | NULL                  |
| Retention:                    | 0                                                          | NULL                  |
| Location:                     | hdfs://mini01:9000/tmp/hive/yun/UUID/_tmp_space.db/UUID    | NULL                  |
| Table Type:                   | MANAGED_TABLE                                              | NULL                  |
| Table Parameters:             | NULL                                                       | NULL                  |
|                               | COLUMN_STATS_ACCURATE                                      | true                  |
|                               | numFiles                                                   | 1                     |
|                               | totalSize                                                  | 71                    |
|                               | NULL                                                       | NULL                  |
| # Storage Information         | NULL                                                       | NULL                  |
| SerDe Library:                | org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe         | NULL                  |
| InputFormat:                  | org.apache.hadoop.mapred.TextInputFormat                   | NULL                  |
| OutputFormat:                 | org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat | NULL                  |
| Compressed:                   | No                                                         | NULL                  |
| Num Buckets:                  | -1                                                         | NULL                  |
| Bucket Columns:               | []                                                         | NULL                  |
| Sort Columns:                 | []                                                         | NULL                  |
| Storage Desc Params:          | NULL                                                       | NULL                  |
|                               | field.delim                                                | ,                     |
|                               | serialization.format                                       | ,                     |
+-------------------------------+------------------------------------------------------------+-----------------------+--+
30 rows selected (0.024 seconds)
```

2.1.8. Constraints

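Hive includes support for non-validated primary key and foreign key constraints (available since Hive 2.1.0, so not in hive-1.2.1). Some SQL tools generate more efficient queries when constraints are present. Because these constraints are not validated, the upstream system must ensure data integrity before the data is loaded into Hive.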

-- Example:
create table pk(id1 integer, id2 integer,
  primary key(id1, id2) disable novalidate);

create table fk(id1 integer, id2 integer,
  constraint c1 foreign key(id1, id2) references pk(id2, id1) disable novalidate);
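A quick way to see what "not validated" means in practice: with DISABLE NOVALIDATE, Hive does not reject rows that break the primary key. The sketch below assumes Hive 2.1.0 or later and the `pk` table created above; the duplicate row is deliberate.

```sql
-- Assumes Hive 2.1.0+ and the pk table from the example above.
-- The constraint is DISABLE NOVALIDATE, so Hive does not check it:
-- inserting the same primary key twice is expected to succeed.
insert into table pk values (1, 2), (1, 2);

-- Expected to return both (1, 2) rows; keeping keys unique is the loader's job.
select * from pk;
```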

2.2. Drop Table

DROP TABLE [IF EXISTS] table_name [PURGE];

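DROP TABLE removes both the metadata and the data of the table. If Trash is configured (and PURGE is not specified), the data is actually moved to the .Trash/Current directory. The metadata is lost completely.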

When an EXTERNAL table is dropped, its data is NOT deleted from the file system.
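To contrast the two cases, the sketch below drops one managed and one external table. The table names and the HDFS directory `/data/t_ext_demo` are hypothetical; the data file is the one loaded in the temporary-table example, and `dfs` is run from the Hive CLI (or Beeline, if your HiveServer2 permits it).

```sql
-- Hypothetical managed table: DROP removes the metadata AND the warehouse data
-- (the data goes to .Trash/Current if Trash is enabled).
create table t_managed_demo (id int, name string)
row format delimited fields terminated by ',';
drop table t_managed_demo;

-- Hypothetical external table at a hypothetical HDFS location.
create external table t_ext_demo (id int, name string)
row format delimited fields terminated by ','
location '/data/t_ext_demo';
load data local inpath '/app/software/hive/t_sz01.dat' into table t_ext_demo;

-- Only the metastore entry is removed ...
drop table t_ext_demo;

-- ... so t_sz01.dat is expected to still be listed here.
dfs -ls /data/t_ext_demo;
```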

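When a table referenced by views is dropped, no warning is given (the views are left dangling as invalid and must be dropped or recreated by the user).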

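The table information is removed from the metastore and the raw data is removed as if by "hadoop dfs -rm". In many cases this moves the table data into the .Trash folder in the user's home directory, so a user who drops a table by mistake may be able to recover the lost data: recreate the table with the same schema, recreate any necessary partitions, and then move the data back into place manually with Hadoop. Because this recovery depends on the underlying implementation, it may change over time or across installations; users are strongly advised not to drop tables casually.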
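The recovery steps above can be sketched as follows. This assumes a hypothetical non-partitioned table `test_db.t_drop_demo` under the warehouse path seen earlier (`/user/hive/warehouse/test_db.db`), HDFS Trash enabled (`fs.trash.interval` > 0), and that the drop ran as user `yun`, so Hadoop placed the directory under `/user/yun/.Trash/Current/` followed by its original absolute path. Which user's Trash is used depends on your HiveServer2 impersonation settings, and a name collision in Trash gets a timestamp suffix, so check with `dfs -ls` first.

```sql
-- The accidental drop (hypothetical table).
drop table test_db.t_drop_demo;

-- 1. Recreate the table with exactly the same schema and row format.
create table test_db.t_drop_demo (id int, name string)
row format delimited fields terminated by ',';

-- 2. Find the trashed files (path layout assumed as described above).
dfs -ls /user/yun/.Trash/Current/user/hive/warehouse/test_db.db/t_drop_demo;

-- 3. Move them back into the new table directory.
dfs -mv /user/yun/.Trash/Current/user/hive/warehouse/test_db.db/t_drop_demo/* /user/hive/warehouse/test_db.db/t_drop_demo/;

select count(*) from test_db.t_drop_demo;
```

For a partitioned table, the partitions also need to be recreated after the move, for example with `msck repair table`.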

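If PURGE is specified, the table data does not go to the .Trash/Current directory, so it cannot be recovered after a mistaken DROP. The purge option can also be specified with the table property auto.purge.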
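A minimal sketch of both ways to skip Trash, using hypothetical table names. `PURGE` on DROP TABLE exists since Hive 0.14.0 and the `auto.purge` table property since Hive 1.2.0, so both should work on hive-1.2.1; `auto.purge` only affects managed tables.

```sql
-- Per statement: the data bypasses .Trash/Current and cannot be recovered.
drop table if exists t_purge_demo purge;

-- Per table: make purge the default when the table's data is removed.
create table t_purge_demo2 (id int, name string)
tblproperties ('auto.purge'='true');

-- The property can also be set on an existing table.
alter table t_purge_demo2 set tblproperties ('auto.purge'='true');
```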

2.3. Truncate Table

TRUNCATE TABLE table_name [PARTITION partition_spec];

partition_spec:
  : (partition_column = partition_col_value, partition_column = partition_col_value, ...)

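Removes all rows from a table or from one or more partitions. If the file system Trash is enabled, the rows go to Trash; otherwise they are deleted. Currently the target table must be a native/managed table, otherwise an exception is thrown. A partial partition_spec truncates several partitions at once, and omitting partition_spec truncates all partitions of the table.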
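The effect of full, partial and omitted partition specs is easiest to see on a table partitioned by two columns. The table `t_trunc_demo` and its partition values are hypothetical; the partitions are added first because truncating a partition that does not exist raises an error.

```sql
-- Hypothetical managed table with two partition columns.
create table t_trunc_demo (id int, name string)
partitioned by (dt string, country string);

alter table t_trunc_demo add
  partition (dt='2018-07-14', country='CN')
  partition (dt='2018-07-14', country='US');

-- Full spec: empties exactly one partition.
truncate table t_trunc_demo partition (dt='2018-07-14', country='CN');

-- Partial spec: empties every partition with dt='2018-07-14', whatever the country.
truncate table t_trunc_demo partition (dt='2018-07-14');

-- No spec: empties all partitions; the partition definitions remain in the metastore.
truncate table t_trunc_demo;
```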

3. Alter Table/Partition/Column

Alter Table
  Rename Table
  Alter Table Properties
    Alter Table Comment
  Add SerDe Properties
  Alter Table Storage Properties
  Alter Table Skewed or Stored as Directories
    Alter Table Skewed
    Alter Table Not Skewed
    Alter Table Not Stored as Directories
    Alter Table Set Skewed Location
  Alter Table Constraints
  Additional Alter Table Statements
Alter Partition
  Add Partitions
    Dynamic Partitions
  Rename Partition
  Exchange Partition
  Recover Partitions (MSCK REPAIR TABLE)
  Drop Partitions
  (Un)Archive Partition
Alter Either Table or Partition
  Alter Table/Partition File Format
  Alter Table/Partition Location
  Alter Table/Partition Touch
  Alter Table/Partition Protections
  Alter Table/Partition Compact
  Alter Table/Partition Concatenate
  Alter Table/Partition Update columns
Alter Column
  Rules for Column Names
  Change Column Name/Type/Position/Comment
  Add/Replace Columns
  Partial Partition Specification

See: Alter Table/Partition/Column
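A few of the most frequently used statements from the list above, applied to a hypothetical partitioned table `t_alter_demo`. These are standard HiveQL forms that should work on hive-1.2.1; see the official page for the full options (CASCADE, SerDe properties, and so on).

```sql
-- Hypothetical table used only to illustrate the syntax.
create table t_alter_demo (id int, name string)
partitioned by (dt string)
row format delimited fields terminated by ',';

alter table t_alter_demo rename to t_alter_demo2;                                -- Rename Table
alter table t_alter_demo2 set tblproperties ('comment' = 'renamed demo table');  -- Alter Table Comment
alter table t_alter_demo2 add columns (age int comment 'user age');              -- Add Columns
alter table t_alter_demo2 change column name user_name string after id;          -- Change Column Name/Position
alter table t_alter_demo2 add if not exists partition (dt='2018-07-14');         -- Add Partitions
alter table t_alter_demo2 drop if exists partition (dt='2018-07-14');            -- Drop Partitions

-- Recover Partitions: registers dt=... directories that exist in HDFS
-- but are missing from the metastore.
msck repair table t_alter_demo2;
```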

4. Show

Show Databases
Show Tables/Views/Partitions/Indexes
  Show Tables
  Show Views
  Show Partitions
  Show Table/Partition Extended
  Show Table Properties
  Show Create Table
  Show Indexes
Show Columns
Show Functions
Show Granted Roles and Privileges
Show Locks
Show Conf
Show Transactions
Show Compactions

See: Show
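Some everyday SHOW statements, run against the tables used earlier in this article (`t_sz01`, `t_sz03_part` in `test_db`). The pattern arguments use wildcards for databases and tables but a regular expression for functions; `SHOW CONF` requires Hive 0.14.0 or later.

```sql
show databases like 'test*';        -- Show Databases matching a wildcard pattern
show tables in test_db 't_sz*';     -- Show Tables matching a wildcard pattern
show partitions t_sz03_part;        -- Show Partitions
show create table t_sz01;           -- Show Create Table: prints the full DDL
show tblproperties t_sz01;          -- Show Table Properties
show columns in t_sz01;             -- Show Columns
show functions "concat.*";          -- Show Functions (regular expression)
show conf 'hive.exec.scratchdir';   -- Show Conf: default value, type and description
```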

5. Describe

Describe Database
Describe Table/View/Column
  Display Column Statistics
Describe Partition

See: Describe
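The DESCRIBE variants side by side. `test_db` and `t_sz01` come from earlier in this article; the partition column `dt` and its value in the last statement are hypothetical, so substitute the real partition column of `t_sz03_part` (see `show partitions t_sz03_part`).

```sql
describe database extended test_db;   -- Describe Database, including DBPROPERTIES
describe t_sz01;                       -- column names and types only
describe formatted t_sz01;             -- full details: location, table type, SerDe, ...

-- Describe Partition (dt is a hypothetical partition column)
describe formatted t_sz03_part partition (dt='2018-07-14');
```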