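This course is taught in English, so the assignment is written in English; students who need a translation should translate it themselves.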

1 Introduction (5%)

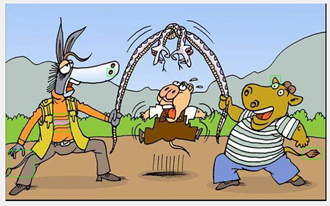

The task of this project is to find the regions that differ between two images and to mark each of them with a bounding box. Differences caused by the butterfly must be excluded, that is, the butterfly itself must not be framed. Four pairs of pictures are given, for example:

Fig.1 Four pairs of images

In each of the four pairs above, the pictures contain two butterflies. These must be located in the original pictures and excluded when the differences are selected. A template of the butterfly is given:

Fig.2 Butterfly example

To solve this problem, the problem itself is analyzed first. Inspecting the two images in a drawing program shows that their widths and heights differ, so the images cannot be subtracted directly. The two images are therefore registered first and then subtracted to obtain the difference. Registration has two stages: first, the SURF algorithm (Speeded-Up Robust Features, a faster algorithm derived from SIFT) extracts feature points from each image; second, the features of the two images are matched and the second image is warped onto the first, so that both images have the same width and height in pixels. Next, the butterfly is extracted by its gray-value range, and the butterfly region is then isolated by the area of its connected components. Finally, the pixels at the butterfly's position are set to zero in the difference image so that the butterfly is not selected, and the remaining differing regions of the two images are framed.

2 Proposed approach (50%)

The proposed method consists of the following sub-algorithms:

1) Register the images with SURF, a faster algorithm derived from SIFT;

2) Extract the butterfly portion by its gray value;

3) Compute the image difference to extract the difference between the two images;

4) Extract the butterfly part from the original image;

5) Apply morphological processing and remove the butterfly part from the differences;

6) Frame the differences with rectangles.

2.1 Image registration: register the two images through feature points

First, both images are converted to grayscale, and the second image is then registered to the first. The following picture shows the second image after grayscale conversion and registration:

Fig.3 Registered image
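
The effect of registration on image size can be illustrated with a minimal sketch. It is not the SURF-based code used in this report: it assumes four hand-picked, hypothetical control-point pairs (the image corners) and synthetic images, and only shows that warping onto the pixel grid of the fixed image yields two images of equal size.

```matlab
% Hypothetical data: a 120x160 "fixed" image and a larger 150x200 "moving" image.
fixed  = zeros(120, 160, 'uint8');
moving = zeros(150, 200, 'uint8');
moving(50:100, 60:140) = 255;            % a bright rectangle as test content

% Assumed control points (x, y): the four corners of each image.
movingPts = [1 1; 200 1; 200 150; 1 150];
fixedPts  = [1 1; 160 1; 160 120; 1 120];

% Estimate a projective transform that maps the moving points onto the fixed points.
tform = fitgeotrans(movingPts, fixedPts, 'projective');

% Resample the moving image on the pixel grid of the fixed image.
registered = imwarp(moving, tform, 'OutputView', imref2d(size(fixed)));

disp(size(registered));                  % same size as the fixed image
```

Once both images share one pixel grid, they can be compared pixel by pixel.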

2.2 Next, the absolute difference between the registered second image and the first image is computed to obtain the difference between the two images. The result is as follows:

Fig.4 Difference image
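
A small sketch with made-up pixel values may help show why an absolute difference (`imabsdiff`, as used in main_1) is preferable to plain subtraction for `uint8` images: unsigned subtraction saturates at 0, so any change that makes a pixel brighter in the second operand would be lost.

```matlab
% Made-up gray values for three pixels of two images.
a = uint8([10 200 30]);
b = uint8([50 100 30]);

plainDiff = a - b;             % uint8 arithmetic saturates at 0
absDiff   = imabsdiff(a, b);   % absolute difference keeps both directions

disp(plainDiff);
disp(absDiff);
```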

2.3 Then the butterfly is extracted by gray value. A software tool is first used to read off the gray-value range of the region where the butterfly lies, and the corresponding thresholds are set from that range;
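
The gray-range selection can be sketched in vectorized form. The bounds 8 and 60 are the ones that appear in main_1; the sample matrix and the variable names `lowGray`, `highGray` and `butterfly` are made up for illustration.

```matlab
lowGray  = 8;     % lower bound of the butterfly's gray range (from main_1)
highGray = 60;    % upper bound of the butterfly's gray range (from main_1)

% Made-up difference image.
D = uint8([0 5 8; 30 60 61; 100 200 59]);

% Zero every pixel outside the range; pixels inside keep their value.
butterfly = D;
butterfly(D < lowGray | D > highGray) = 0;

disp(butterfly);
```

Because the bounds are set by hand, they may need to be adjusted for other image pairs.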

2.4 Extract the butterfly part, in preparation for removing it later, by applying threshold segmentation to the image above. The result is as follows:

Fig.5 Threshold segmentation

2.5 Fill the holes in the image. The result is as follows:

Fig.6 Hole filling
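
As a minimal illustration of hole filling, the sketch below applies `imfill` with the `'holes'` option (the same call as in main_1) to a made-up 3x3 ring whose center pixel is background.

```matlab
% Made-up binary image: a 3x3 ring with a one-pixel hole in the middle.
ring = false(5);
ring(2:4, 2:4) = true;
ring(3, 3) = false;

% Fill background pixels that cannot be reached from the image border.
filled = imfill(ring, 'holes');

disp(filled);
```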

2.6 Delete the small regions of the image according to connected-component area:

Fig.7 Small noise regions removed

2.7 Remove the large regions of the image according to connected-component area. The result is as follows:

Fig.8 Large connected components removed
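
Steps 2.6 and 2.7 together keep only the components whose area lies in a band (1500 to 4000 pixels in main_1). As a hedged alternative to the custom `bwremovelargearea` function, the same band filter can be sketched with the toolbox function `bwareafilt`; the tiny image and the band [2 10] below are made up so that the result is easy to check.

```matlab
% Made-up binary image with three components of area 1, 4 and 24.
BW = false(8, 12);
BW(2, 2)      = true;    % area 1  (too small)
BW(4:5, 4:5)  = true;    % area 4  (kept)
BW(2:7, 8:11) = true;    % area 24 (too large)

% Keep components whose area is between 2 and 10 pixels, inclusive.
kept = bwareafilt(BW, [2 10]);

% Same band built from two area openings: area >= 2 and not area >= 11.
viaOpen = bwareaopen(BW, 2) & ~bwareaopen(BW, 11);

disp(nnz(kept));
```

With the values from main_1, the corresponding call would be `bwareafilt(Image_three, [1500 4000])`, assuming 8-connectivity as in the original code.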

2.8 Dilate the image to obtain the butterfly mask:

Fig.9 Image dilation

2.9 Apply threshold segmentation to the difference image from step 2.2 to obtain a binary difference map:

Fig.10 Threshold segmentation
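
In main_1 this threshold comes from `graythresh` (Otsu's method) and is applied with `imbinarize`. The sketch below repeats those two calls on a made-up two-level image; note that `graythresh` returns a normalized level between 0 and 1, not a gray value.

```matlab
% Made-up image with a dark level (10) and a bright level (200).
I = uint8([10 10 200; 10 200 200; 10 10 200]);

T  = graythresh(I);       % normalized Otsu level in [0, 1]
BW = imbinarize(I, T);    % pixels above the level become 1

fprintf('Otsu level: %.3f (about gray value %.0f)\n', T, T * 255);
disp(BW);
```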

2.10 Clear the isolated bright pixels in the image above:

Fig.11 Isolated bright pixels cleared

2.11 Apply a majority filter: a pixel is set to 1 if five or more pixels in its 3-by-3 neighborhood are 1; otherwise it is set to 0;

Fig.12 Eliminate noise
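
The majority rule of step 2.11 is applied in main_1 through `bwmorph(...,'majority')`. To make the rule itself concrete, here is a sketch that counts the 1-pixels in every 3-by-3 window with `conv2`; treating pixels outside the image as 0 is an assumption of this sketch, and the test image is made up.

```matlab
% Made-up binary image: a 3x3 ring with a hole in the middle.
BW = false(5);
BW(2:4, 2:4) = true;
BW(3, 3) = false;

% Number of 1-pixels in each 3x3 window (center included, zeros outside the image).
counts = conv2(double(BW), ones(3), 'same');

% Majority rule: a pixel becomes 1 only if at least 5 of the 9 pixels are 1.
out = counts >= 5;

disp(out);
```

On this input the hole is filled and the four corners of the ring are removed, which shows how the filter smooths ragged boundaries.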

2.12 Remove the small regions from the image

Fig.13 Eliminate small areas in the image

2.13 Dilate the image

Fig.14 Image dilation

2.14 Fill the holes in the image:

Fig.15 Hole filling

2.15 Remove the butterfly part of the picture:

Fig.16 Removing butterflies
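
Removing the butterfly amounts to masking the binary difference map with the complement of the butterfly mask. A minimal sketch with made-up masks (main_1 achieves the same with `imcomplement` and `immultiply`):

```matlab
% Made-up masks: one difference blob overlaps the butterfly, one does not.
diffMask      = logical([1 1 0 0; 1 1 0 0; 0 0 1 1]);
butterflyMask = logical([1 1 0 0; 1 1 0 0; 0 0 0 0]);

% Keep only the differences that lie outside the butterfly.
result = diffMask & ~butterflyMask;

disp(result);
```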

2.16 Frame the corresponding positions in the original image

Fig.17 Corresponding positions framed
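
The rectangles come from the `BoundingBox` property of `regionprops`. The sketch below, on a made-up image, shows the format `[x y width height]`; the half-pixel offsets arise because the box runs along pixel edges rather than pixel centers.

```matlab
% Made-up binary image with one object in rows 2-3, columns 3-5.
BW = false(6, 8);
BW(2:3, 3:5) = true;

stats = regionprops(BW, 'BoundingBox', 'Area');
box = stats(1).BoundingBox;    % [x y width height]

disp(box);
```

Each such row can be passed directly as the `'position'` argument of `rectangle`, as main_1 does.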

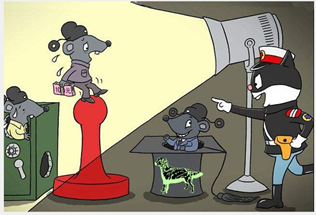

3 Experimental results and analysis (40%)

To verify the generality of the algorithm above, it was run on the test images and the results were analyzed. The results are as follows:

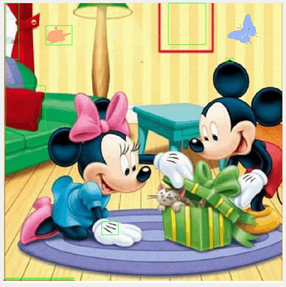

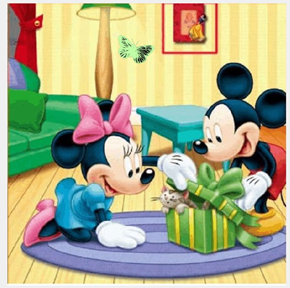

Fig.18 Test results for test2-1 and test2-2

Fig.19 Test results for timg1.1 and timg2.2

Fig.20 Test results for timg2-1 and timg2-2

Fig.21 Test results for timgKT-1 and timgKT-2

As the experimental results above show, the algorithm detects the differences in the test images well and frames them. No false detections occur in these experiments, and the differing parts of the two images are identified accurately.

The code is attached in three parts:

demo_1:

function bw2 = bwremovelargearea(varargin)

%BWREMOVELARGEAREA Remove large objects from binary image.

% BW2 = BWREMOVELARGEAREA(BW,P) removes from a binary image all connected

% components (objects) that have more than P pixels, producing another

% binary image BW2. Adapted from BWAREAOPEN with the area test reversed. The

% default connectivity is 8 for two dimensions, 26 for three dimensions,

% and CONNDEF(NDIMS(BW),'maximal') for higher dimensions.

%

% BW2 = BWREMOVELARGEAREA(BW,P,CONN) specifies the desired connectivity. CONN

% may have the following scalar values:

%

% 4 two-dimensional four-connected neighborhood

% 8 two-dimensional eight-connected neighborhood

% 6 three-dimensional six-connected neighborhood

% 18 three-dimensional 18-connected neighborhood

% 26 three-dimensional 26-connected neighborhood

%

% Connectivity may be defined in a more general way for any dimension by

% using for CONN a 3-by-3-by- ... -by-3 matrix of 0s and 1s. The

% 1-valued elements define neighborhood locations relative to the center

% element of CONN. CONN must be symmetric about its center element.

%

% Class Support

% -------------

% BW can be a logical or numeric array of any dimension, and it must be

% nonsparse.

%

% BW2 is logical.

%

% Example

% -------

% Remove all objects in the image text.png containing more than 50

% pixels.

%

% BW = imread('text.png');

% BW2 = bwremovelargearea(BW,50);

% imshow(BW);

% figure, imshow(BW2)

%

% See also BWCONNCOMP, CONNDEF, REGIONPROPS.

% Copyright 1993-2011 The MathWorks, Inc.

% Input/output specs

% ------------------

% BW: N-D real full matrix

% any numeric class

% sparse not allowed

% anything that's not logical is converted first using

% bw = BW ~= 0

% Empty ok

% Inf's ok, treated as 1

% NaN's ok, treated as 1

%

% P: double scalar

% nonnegative integer

%

% CONN: connectivity

%

% BW2: logical, same size as BW

% contains only 0s and 1s.

[bw,p,conn] = parse_inputs(varargin{:});

CC = bwconncomp(bw,conn);

area = cellfun(@numel, CC.PixelIdxList);

idxToKeep = CC.PixelIdxList(area <= p);

idxToKeep = vertcat(idxToKeep{:});

bw2 = false(size(bw));

bw2(idxToKeep) = true;

%%%

%%% parse_inputs

%%%

function [bw,p,conn] = parse_inputs(varargin)

narginchk(2,3)

bw = varargin{1};

validateattributes(bw,{'numeric' 'logical'},{'nonsparse'},mfilename,'BW',1);

if ~islogical(bw)

bw = bw ~= 0;

end

p = varargin{2};

validateattributes(p,{'double'},{'scalar' 'integer' 'nonnegative'},...

mfilename,'P',2);

if (nargin >= 3)

conn = varargin{3};

else

conn = conndef(ndims(bw),'maximal');

end

iptcheckconn(conn,mfilename,'CONN',3)

demo_2

function on2 = registerImages1(MOVING,FIXED)

% Register the grayscale image MOVING onto the grayscale image FIXED

% using SURF feature matching, and return the registered image ON2.

%registerImages Register grayscale images using auto-generated code from Registration Estimator app.

% [MOVINGREG] = registerImages(MOVING,FIXED) Register grayscale images

% MOVING and FIXED using auto-generated code from the Registration

% Estimator App. The values for all registration parameters were set

% interactively in the App and result in the registered image stored in the

%-----------------------------------------------------------

% Feature-based techniques require license to Computer Vision System Toolbox

checkLicense()

% Default spatial referencing objects

fixedRefObj = imref2d(size(FIXED));

movingRefObj = imref2d(size(MOVING));

% Detect SURF features

fixedPoints = detectSURFFeatures(FIXED,'MetricThreshold',750.000000,'NumOctaves',3,'NumScaleLevels',5);

movingPoints = detectSURFFeatures(MOVING,'MetricThreshold',750.000000,'NumOctaves',3,'NumScaleLevels',5);

% Extract features

[fixedFeatures,fixedValidPoints] = extractFeatures(FIXED,fixedPoints,'Upright',false);

[movingFeatures,movingValidPoints] = extractFeatures(MOVING,movingPoints,'Upright',false);

% Match features

indexPairs = matchFeatures(fixedFeatures,movingFeatures,'MatchThreshold',50.000000,'MaxRatio',0.500000);

fixedMatchedPoints = fixedValidPoints(indexPairs(:,1));

movingMatchedPoints = movingValidPoints(indexPairs(:,2));

MOVINGREG.FixedMatchedFeatures = fixedMatchedPoints;

MOVINGREG.MovingMatchedFeatures = movingMatchedPoints;

% Apply transformation - Results may not be identical between runs because of the randomized nature of the algorithm

tform = estimateGeometricTransform(movingMatchedPoints,fixedMatchedPoints,'projective');

MOVINGREG.Transformation = tform;

MOVINGREG.RegisteredImage = imwarp(MOVING, movingRefObj, tform, 'OutputView', fixedRefObj, 'SmoothEdges', true);

on2=MOVINGREG.RegisteredImage;

end

function checkLicense()

% Check for license to Computer Vision System Toolbox

CVSTStatus = license('test','video_and_image_blockset');

if ~CVSTStatus

error(message('images:imageRegistration:CVSTRequired'));

end

end

main_1

clear all;

clc;

% Read the images

I1=imread('timgKT-1.jpg');

figure(101);imshow(I1);

I2=imread('timgKT-2.jpg');

figure(102);imshow(I2);

% Convert to grayscale (reduce to a single channel)

Image1_gary=rgb2gray(I1);

Image2_gary=rgb2gray(I2);

% Register the two images so that they can be subtracted to find the differences

Image2_reg=registerImages1(Image2_gary,Image1_gary);

figure(1);imshow(Image2_reg);

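%% Image differencing: subtract the two images to find their differences

% Compute the absolute difference to find where the two images differ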

Image_cha1=imabsdiff(Image2_reg,Image1_gary);

Image_cha3=Image_cha1;

figure(2);imshow(Image_cha3);

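% Filter by gray value

[height,width,c]=size(Image_cha1);

A=height*width; % total number of pixels

B=nnz(Image_cha1(:,:,1)<30); % number of pixels with gray value below 30

% Keep only the butterfly's gray range (8 to 60) and zero everything else

band=Image_cha3(:,:,1);

band(band>60|band<8)=0;

Image_cha3(:,:,1)=band;

C=B/A; % fraction of pixels with gray value below 30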

Image_cha2=Image_cha1;

figure(3),imshow(Image_cha2);

if C>0.92 % mostly dark difference image: brighten it to prepare for thresholding

Image_cha2=immultiply(Image_cha1,3);

end

%% Extract the butterfly in the original image

% Image multiplication by a scale factor

Image_cha3=immultiply(Image_cha3,3);

figure(4),imshow(Image_cha3);

% Threshold segmentation

Image_three=imbinarize(Image_cha3,0);

figure(5),imshow(Image_three);

% Fill holes

Image_three=imfill(Image_three,'holes');

figure(6),imshow(Image_three);

% Remove objects smaller than a given area

Image_three= bwareaopen(Image_three,1500,8);

figure(7),imshow(Image_three);

% Remove objects larger than a given area

Image_three=bwremovelargearea(Image_three,4000,8);

figure(8),imshow(Image_three);

% Dilation

market_d=strel('disk',3);

Image_three=imdilate(Image_three,market_d);

figure(9),imshow(Image_three);

Image_three1=imcomplement(Image_three); % complement: the butterfly region becomes 0

figure(10),imshow(Image_three1);

%

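%% Process the difference image obtained by differencing

% Threshold segmentation; the threshold is found with Otsu's method (maximum between-class variance)

T=graythresh(Image_cha2);

disp(T); % T is a scalar threshold in [0,1], so it is printed rather than shown as an image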

Image_two=imbinarize(Image_cha2,T);

figure(12),imshow(Image_two);

% Morphological operations

Image_two=bwmorph(Image_two,'clean',100);

figure(13),imshow(Image_two);

Image_two=bwmorph(Image_two,'majority');

figure(14),imshow(Image_two);

Image_two = bwareaopen(Image_two,10,8);

figure(15),imshow(Image_two);

market_d=strel('disk',4);

Image_RGary_d=imdilate(Image_two,market_d);

figure(16),imshow(Image_RGary_d);

Image_two=imfill(Image_RGary_d,'holes');

figure(17),imshow(Image_two);

Image_two = bwareaopen(Image_two,100,8);

figure(18),imshow(Image_two);

% Black out the image borders

% Get the height and width of the image

[m,n]=size(Image_two);

Image_two(m-10:m,1:n)=0;

figure(19),imshow(Image_two);

Image_two(1:m,1:7)=0;

figure(20),imshow(Image_two);

Image_two(1:m,n-6:n)=0;

figure(21),imshow(Image_two);

% Remove the small specks left after dilation

Image_two = bwareaopen(Image_two,100,8);

figure(22),imshow(Image_two);

%% Remove the butterfly from the difference map

Image_two=immultiply(Image_two,Image_three1);

figure(23),imshow(Image_two);

Image_two = bwareaopen(Image_two,100,8);

figure(24),imshow(Image_two);

%% Plot the results

[L,num]=bwlabel(Image_two);

Image_T=regionprops(L,'area','boundingbox');

T_area=[Image_T.Area];

rects=cat(1,Image_T.BoundingBox);

figure(103),imshow(I1);

figure(50),

subplot(1,2,1),imshow(I1);

for Tti=1:size(rects,1)

rectangle('position',rects(Tti,:),'EdgeColor','g')

end

subplot(1,2,2),imshow(I2);

figure(104),imshow(I2);

4 Conclusion (2%)

This experiment brought together what was learned earlier in the course. In the process, the SURF algorithm was studied and applied, and morphological image processing was understood more deeply, in particular how various functions handle noise points and small isolated regions, and how connected components are processed. However, the gray-value extraction of the butterfly is not an intelligent algorithm: the gray range is set by hand, which is a weakness of the method. The butterfly's position could instead be found automatically by matching against the given butterfly template. Deep-learning-based training could be explored later as needed.