1. Introduction

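Compared with Java, there are far fewer open-source crawlers written in C#. Although I have worked in the industry for many years, I had never touched a crawler before. Out of curiosity, today I'd like to introduce Abot, a web crawler for C#, which you can get from NuGet. Abot supports multithreaded crawling out of the box and uses CsQuery internally to parse the downloaded HTML documents. Anyone familiar with jQuery will pick up CsQuery quickly: it is essentially jQuery for C#.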

As an example, let's crawl today's news from cnblogs (博客园) and see how Abot is used.

2. The cnblogs news pages

http://news.cnblogs.com/ is the cnblogs news home page. It uses a typical paginated layout; for example, http://news.cnblogs.com/n/page/2/ is the second page of news.

The actual news detail pages look like http://news.cnblogs.com/n/544956/. Both URL types are easy to match with regular expressions.

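We could, of course, use a for loop to crawl each page of news in turn and then pick out the items published today. Instead, I want to use only http://news.cnblogs.com/ as the seed page and crawl today's news starting from there.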

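Since the cnblogs news pagination does not use Ajax, the links to the other pages are present in the HTML itself, which makes the site very crawler-friendly.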
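Because the pagination links are ordinary `<a href>` elements in the downloaded HTML, a crawler can discover page 2, page 3 and so on just by parsing links out of the seed page. Abot does this for us; the sketch below only illustrates the idea with a deliberately simple regular expression over a made-up HTML fragment. `LinkExtractor` is a hypothetical name, and a regex is not a robust HTML parser.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Hypothetical illustration: pull href values out of static HTML and
// resolve them against the page URL. Real crawlers (Abot included) use
// a proper HTML parser instead of a regex.
public static class LinkExtractor
{
    // Matches <a ... href="..."> and captures the href value
    private static readonly Regex HrefRegex =
        new Regex("<a\\s[^>]*href\\s*=\\s*\"([^\"]+)\"", RegexOptions.IgnoreCase | RegexOptions.Compiled);

    public static List<Uri> ExtractLinks(Uri pageUri, string html)
    {
        var links = new List<Uri>();
        foreach (Match m in HrefRegex.Matches(html))
        {
            Uri absolute;
            // Relative hrefs such as "/n/page/2/" are resolved against the page URL
            if (Uri.TryCreate(pageUri, m.Groups[1].Value, out absolute))
                links.Add(absolute);
        }
        return links;
    }
}
```

With Ajax pagination the page-2 link would not appear in this HTML at all, so a plain crawler like this would never find it.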

We therefore define:

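```csharp
// Namespaces used by the snippets in this article
using System;
using System.IO;
using System.Text.RegularExpressions;
using Abot.Crawler;
using Abot.Poco;
using CsQuery;

// These fields and the methods below are members of the same static class.

/// <summary>
/// Seed URL
/// </summary>
public static readonly Uri FeedUrl = new Uri(@"http://news.cnblogs.com/");

// Verbatim strings (@"...") pass \d to the regex engine unchanged
// (in a normal string literal "\d" is a compile error); dots are escaped to match literally.

/// <summary>
/// Regex matching news detail pages, e.g. http://news.cnblogs.com/n/544956/
/// </summary>
public static readonly Regex NewsUrlRegex = new Regex(@"^http://news\.cnblogs\.com/n/\d+/$", RegexOptions.Compiled);

/// <summary>
/// Regex matching pagination pages, e.g. http://news.cnblogs.com/n/page/2/
/// </summary>
public static readonly Regex NewsPageRegex = new Regex(@"^http://news\.cnblogs\.com/n/page/\d+/$", RegexOptions.Compiled);
```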
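To check the two patterns before wiring them into Abot, a small classifier can be run against sample URLs. The sketch below is a standalone helper; the `PageKind` enum and `UrlClassifier` class are hypothetical names for illustration, and the patterns mirror the ones defined above with the dots escaped.

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical helper for illustration: classifies a cnblogs news URL
// with the same patterns as NewsUrlRegex and NewsPageRegex.
public enum PageKind { Feed, Pagination, Detail, Other }

public static class UrlClassifier
{
    private static readonly Uri FeedUrl = new Uri("http://news.cnblogs.com/");

    // Escaped dots, so "news.cnblogs.com" only matches literal dots
    private static readonly Regex NewsUrlRegex =
        new Regex(@"^http://news\.cnblogs\.com/n/\d+/$", RegexOptions.Compiled);
    private static readonly Regex NewsPageRegex =
        new Regex(@"^http://news\.cnblogs\.com/n/page/\d+/$", RegexOptions.Compiled);

    public static PageKind Classify(Uri uri)
    {
        if (uri == FeedUrl) return PageKind.Feed;
        string url = uri.AbsoluteUri;
        if (NewsPageRegex.IsMatch(url)) return PageKind.Pagination;
        if (NewsUrlRegex.IsMatch(url)) return PageKind.Detail;
        return PageKind.Other;
    }
}
```

For example, `UrlClassifier.Classify(new Uri("http://news.cnblogs.com/n/topiclist/"))` returns `PageKind.Other`, which is exactly the kind of link the crawl rules later filter out.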

3. Implementation

Abot already encapsulates the crawler internals very neatly; users only need to set a few configuration parameters and hook up a few page-crawl events.

```csharp
public static IWebCrawler GetManuallyConfiguredWebCrawler()
{
    CrawlConfiguration config = new CrawlConfiguration();
    config.CrawlTimeoutSeconds = 0;                       // 0 = no overall crawl timeout
    config.DownloadableContentTypes = "text/html, text/plain";
    config.IsExternalPageCrawlingEnabled = false;         // stay on news.cnblogs.com
    config.IsExternalPageLinksCrawlingEnabled = false;
    config.IsRespectRobotsDotTextEnabled = false;
    config.IsUriRecrawlingEnabled = false;                // crawl each URI only once
    config.MaxConcurrentThreads = System.Environment.ProcessorCount;
    config.MaxPagesToCrawl = 1000;
    config.MaxPagesToCrawlPerDomain = 0;                  // 0 = no per-domain limit
    config.MinCrawlDelayPerDomainMilliSeconds = 1000;     // at least 1 s between requests to the same domain

    // Passing null for the other dependencies lets PoliteWebCrawler use its default implementations
    var crawler = new PoliteWebCrawler(config, null, null, null, null, null, null, null, null);

    // Custom crawl rules (defined later in this article)
    crawler.ShouldCrawlPage(ShouldCrawlPage);
    crawler.ShouldDownloadPageContent(ShouldDownloadPageContent);
    crawler.ShouldCrawlPageLinks(ShouldCrawlPageLinks);

    // crawler_ProcessPageCrawlStarting, crawler_PageCrawlDisallowed and
    // crawler_PageLinksCrawlDisallowed are not shown in this article
    crawler.PageCrawlStartingAsync += crawler_ProcessPageCrawlStarting;
    // Callback invoked after a page has been crawled
    crawler.PageCrawlCompletedAsync += crawler_ProcessPageCrawlCompletedAsync;
    crawler.PageCrawlDisallowedAsync += crawler_PageCrawlDisallowed;
    crawler.PageLinksCrawlDisallowedAsync += crawler_PageLinksCrawlDisallowed;

    return crawler;
}
```
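`MinCrawlDelayPerDomainMilliSeconds = 1000` asks PoliteWebCrawler to leave at least one second between requests to the same domain, so even with several threads the site sees roughly one request per second at most. The sketch below models that idea with plain timestamps; `DomainDelayScheduler` is a hypothetical, simplified class and not Abot's actual rate limiter.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, simplified model of a per-domain crawl delay:
// given the time a request wants to start, return the earliest time it may
// start so that requests to the same host are spaced at least minDelay apart.
// Not thread-safe; a real crawler would need locking around Reserve.
public class DomainDelayScheduler
{
    private readonly TimeSpan _minDelay;
    private readonly Dictionary<string, DateTime> _nextAllowed = new Dictionary<string, DateTime>();

    public DomainDelayScheduler(TimeSpan minDelay)
    {
        _minDelay = minDelay;
    }

    public DateTime Reserve(Uri uri, DateTime requestedAt)
    {
        string host = uri.Host;
        DateTime start = requestedAt;
        DateTime next;
        // Delay the request if the host's next free slot is still in the future
        if (_nextAllowed.TryGetValue(host, out next) && next > requestedAt)
            start = next;

        _nextAllowed[host] = start + _minDelay;
        return start;
    }
}
```

With a one-second delay, three requests to news.cnblogs.com issued at the same instant t are scheduled at t, t + 1 s and t + 2 s, while a request to a different host is not delayed.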

Calling it is very simple:

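```csharp
public static void Main(string[] args)
{
    var crawler = GetManuallyConfiguredWebCrawler();
    // Crawl synchronously, starting from the seed URL
    var result = crawler.Crawl(FeedUrl);

    if (result.ErrorOccurred)
        System.Console.WriteLine("Crawl completed with error: " + result.ErrorException.Message);
    else
        System.Console.WriteLine("Crawl completed without error.");
}
```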

The most important event is PageCrawlCompletedAsync: its handler is where you extract the data you need from each crawled page.

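```csharp
// PageCrawlCompletedAsync may fire on several threads at once (MaxConcurrentThreads > 1),
// so writes to the output file are serialized with a lock
private static readonly object OutputFileLock = new object();

public static void crawler_ProcessPageCrawlCompletedAsync(object sender, PageCrawlCompletedArgs e)
{
    // Only handle news detail pages
    if (NewsUrlRegex.IsMatch(e.CrawledPage.Uri.AbsoluteUri))
    {
        // Get the news title and the publish time
        var csTitle = e.CrawledPage.CsQueryDocument.Select("#news_title");
        var linkDom = csTitle.FirstElement()?.FirstChild;
        var newsInfo = e.CrawledPage.CsQueryDocument.Select("#news_info");
        var dateString = newsInfo.Find(".time");   // .time element inside #news_info

        // Keep the item only if it was published today
        if (linkDom != null && IsPublishToday(dateString.Text()))
        {
            var str = e.CrawledPage.Uri.AbsoluteUri + " " + HtmlData.HtmlDecode(linkDom.InnerText) + System.Environment.NewLine;
            lock (OutputFileLock)
            {
                System.IO.File.AppendAllText("fake.txt", str);
            }
        }
    }
}
```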

```csharp
/// <summary>
/// "发布于 2016-05-09 11:25" ("published at 2016-05-09 11:25") => true when today is 2016-05-09
/// </summary>
public static bool IsPublishToday(string str)
{
    if (string.IsNullOrEmpty(str))
    {
        return false;
    }

    // Strip the "发布于" prefix wherever it appears (Text() may include leading whitespace)
    const string prefix = "发布于";
    int index = str.IndexOf(prefix, StringComparison.Ordinal);
    if (index >= 0)
    {
        str = str.Substring(index + prefix.Length);
    }

    DateTime date;
    return DateTime.TryParse(str.Trim(), out date) && date.Date == DateTime.Today;
}
```
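`IsPublishToday` depends on `DateTime.Today` and on the current culture's date parsing, which makes it awkward to test. A variant that takes the reference date as a parameter and parses the exact `yyyy-MM-dd HH:mm` layout shown in the comment is easier to verify. `PublishDate.IsPublishedOn` is a hypothetical name, and the format string assumes the page keeps using that layout.

```csharp
using System;
using System.Globalization;

public static class PublishDate
{
    // Hypothetical, testable variant of IsPublishToday: the caller passes the
    // reference date, and the timestamp is parsed with an exact, culture-independent format.
    public static bool IsPublishedOn(string text, DateTime day)
    {
        if (string.IsNullOrEmpty(text))
            return false;

        const string prefix = "发布于"; // "published at"
        int index = text.IndexOf(prefix, StringComparison.Ordinal);
        if (index >= 0)
            text = text.Substring(index + prefix.Length);

        DateTime date;
        return DateTime.TryParseExact(text.Trim(), "yyyy-MM-dd HH:mm",
                   CultureInfo.InvariantCulture, DateTimeStyles.None, out date)
               && date.Date == day.Date;
    }
}
```

`IsPublishToday(str)` then becomes `PublishDate.IsPublishedOn(str, DateTime.Today)`.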

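To make the crawl more focused, we can define crawl rules. For example, on the home page the crawler picks up links such as http://news.cnblogs.com/n/topiclist/, which we clearly don't need, so the rules below only allow the feed page, pagination pages and news detail pages: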

```csharp
/// <summary>
/// Only crawl the feed page, pagination pages and news detail pages
/// </summary>
private static CrawlDecision ShouldCrawlPage(PageToCrawl pageToCrawl, CrawlContext context)
{
    if (pageToCrawl.IsRoot || pageToCrawl.IsRetry || FeedUrl == pageToCrawl.Uri
        || NewsPageRegex.IsMatch(pageToCrawl.Uri.AbsoluteUri)
        || NewsUrlRegex.IsMatch(pageToCrawl.Uri.AbsoluteUri))
    {
        return new CrawlDecision { Allow = true };
    }

    return new CrawlDecision { Allow = false, Reason = "Uri does not match" };
}

/// <summary>
/// Only download the content of the feed page, pagination pages and news detail pages
/// </summary>
private static CrawlDecision ShouldDownloadPageContent(PageToCrawl pageToCrawl, CrawlContext crawlContext)
{
    if (pageToCrawl.IsRoot || pageToCrawl.IsRetry || FeedUrl == pageToCrawl.Uri
        || NewsPageRegex.IsMatch(pageToCrawl.Uri.AbsoluteUri)
        || NewsUrlRegex.IsMatch(pageToCrawl.Uri.AbsoluteUri))
    {
        return new CrawlDecision { Allow = true };
    }

    return new CrawlDecision { Allow = false, Reason = "Uri does not match" };
}

/// <summary>
/// Only follow links found on the feed page and pagination pages;
/// links inside news detail pages are not followed
/// </summary>
private static CrawlDecision ShouldCrawlPageLinks(CrawledPage crawledPage, CrawlContext crawlContext)
{
    if (!crawledPage.IsInternal)
        return new CrawlDecision { Allow = false, Reason = "We don't crawl links of external pages" };

    if (crawledPage.IsRoot || crawledPage.IsRetry || crawledPage.Uri == FeedUrl
        || NewsPageRegex.IsMatch(crawledPage.Uri.AbsoluteUri))
    {
        return new CrawlDecision { Allow = true };
    }

    return new CrawlDecision { Allow = false, Reason = "We only crawl links of pagination pages" };
}
```
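Taken together, the three rules mean: download the seed, pagination and detail pages, but follow links only from the seed and pagination pages. The sketch below simulates that policy as a breadth-first traversal over a small, made-up link graph (no HTTP), to show that detail pages are collected while links such as /n/topiclist/ and links inside detail pages are never followed. `CrawlPolicyDemo` and the sample graph are hypothetical; Abot's real scheduler is more involved.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Hypothetical simulation of the crawl rules over an in-memory link graph.
public static class CrawlPolicyDemo
{
    private const string Feed = "http://news.cnblogs.com/";
    private static readonly Regex Detail = new Regex(@"^http://news\.cnblogs\.com/n/\d+/$");
    private static readonly Regex Page = new Regex(@"^http://news\.cnblogs\.com/n/page/\d+/$");

    // Mirrors ShouldCrawlPage / ShouldDownloadPageContent
    static bool ShouldCrawl(string url) => url == Feed || Page.IsMatch(url) || Detail.IsMatch(url);

    // Mirrors ShouldCrawlPageLinks: only the seed and pagination pages have their links followed
    static bool ShouldCrawlLinks(string url) => url == Feed || Page.IsMatch(url);

    // Breadth-first traversal; returns the detail pages that were reached
    public static List<string> Run(Dictionary<string, string[]> links)
    {
        var visited = new HashSet<string> { Feed };   // plays the role of IsUriRecrawlingEnabled = false
        var queue = new Queue<string>();
        queue.Enqueue(Feed);
        var details = new List<string>();

        while (queue.Count > 0)
        {
            string url = queue.Dequeue();
            if (Detail.IsMatch(url)) details.Add(url);
            if (!ShouldCrawlLinks(url)) continue;

            string[] outgoing;
            if (!links.TryGetValue(url, out outgoing)) continue;
            foreach (string next in outgoing)
            {
                // Enqueue only allowed URLs that have not been seen before
                if (ShouldCrawl(next) && visited.Add(next))
                    queue.Enqueue(next);
            }
        }
        return details;
    }
}
```

In a graph where the home page links to a detail page, page 2 and /n/topiclist/, and the detail page links to yet another article, only the detail pages reachable from the home page or pagination pages end up in the result.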

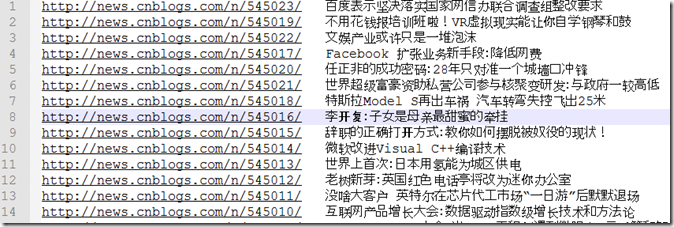

最终抓到的数据:

4. Summary

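Abot is a very convenient crawler. Before using it in a real production environment, the first thing to sort out is the configuration, for example MaxPagesToCrawl (the maximum number of pages to crawl); you can also set memory limits for the crawler, among other options.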