This post uses Scrapy to crawl all the image data on a website and starts the crawl with `CrawlerProcess`.

```python
# -*- coding: utf-8 -*-
import scrapy
import requests

from bs4 import BeautifulSoup

from meinr.items import MeinrItem


class Meinr1Spider(scrapy.Spider):
    name = 'meinr1'
    # allowed_domains = ['www.baidu.com']
    # start_urls = ['http://m.tupianzj.com/meinv/xiezhen/']
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.181 Safari/537.36',
    }

    def num(self, url, headers):
        """Get the page count and the URL format of one category; (0, '') on failure."""
        # requests is synchronous, so Scrapy waits while this runs
        html = requests.get(url=url, headers=headers, timeout=10)
        if html.status_code != 200:
            return 0, ''  # 0 pages: start_requests() simply skips this category
        soup = BeautifulSoup(html.text, 'html.parser')
        # The href in the 4th <li> of #pageNum looks like '<prefix>_<num>.html'
        href = soup.select('#pageNum li')[3].select('a')[0].attrs.get('href')
        parts = href[:-5].split('_')  # drop '.html', then split on '_'
        return int(parts[-1]), '_'.join(parts[:-1]) + '_'

    def start_requests(self):
        # All categories of the site
        urls = [
            'http://m.tupianzj.com/meinv/xiezhen/',
            'http://m.tupianzj.com/meinv/xinggan/',
            'http://m.tupianzj.com/meinv/guzhuang/',
            'http://m.tupianzj.com/meinv/siwa/',
            'http://m.tupianzj.com/meinv/chemo/',
            'http://m.tupianzj.com/meinv/qipao/',
            'http://m.tupianzj.com/meinv/mm/',
        ]
        for url in urls:
            num, papa = self.num(url, self.headers)
            for i in range(1, num + 1):  # + 1 so the last page is included
                if i != 1:
                    urlzz = url + papa + str(i) + '.html'  # assemble the URL of page i
                else:
                    urlzz = url  # page 1 is the category URL itself
                yield scrapy.Request(url=urlzz, headers=self.headers, callback=self.parse)

    def parse(self, response):
        # Each <li> holds the URL and title of one gallery on this listing page
        htmllist = response.xpath('//div[@class="IndexList"]/ul[@class="IndexListult"]/li')
        for html in htmllist:
            url = html.xpath('./a/@href').get()
            title = html.xpath('./a/span[1]/text()').get()
            if url and title:
                url = response.urljoin(url)
                yield scrapy.Request(url=url,
                                     meta={'url': url, 'title': title},
                                     headers=self.headers,
                                     callback=self.page)

    def page(self, response):
        is_it = response.xpath('//div[@class="m-article"]/h1/text()').get()
        if is_it:
            is_it = is_it.strip()
            # Page count is read from the 4th-to-last character of the heading (one digit only)
            num = int(is_it[-4])
            for i in range(1, num):
                url = response.url[:-5] + '_' + str(i) + '.html'  # assemble the URL of page i inside the gallery
                # dont_filter turns off de-duplication because we need to enter
                # the first page to get the total page count
                yield scrapy.Request(url=url, headers=self.headers, callback=self.download,
                                     meta={'url': response.meta.get('url'),
                                           'title': response.meta.get('title'),
                                           'num': i},
                                     dont_filter=True)

    def download(self, response):
        img = response.xpath("//img[@id='bigImg']/@src").get()  # the image on this page
        if img:
            item = MeinrItem()
            item['img'] = img
            item['title'] = response.meta.get('title')
            item['num'] = response.meta.get('num')
            yield item
```

The above is the spider file (`meinr/spiders/meinr1.py`).
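`num()` and `start_requests()` build the listing URLs purely by slicing the pagination link. The minimal sketch below pulls that logic out into two standalone functions so it can be checked without touching the site. `parse_last_page` and `category_page_urls` are hypothetical names. `list_179_23.html` is an assumed example of the `<prefix>_<page count>.html` href format that the slicing expects.

```python
def parse_last_page(href):
    """Split an href like 'list_179_23.html' into (23, 'list_179_').

    Mirrors the slicing in Meinr1Spider.num(): drop '.html', take the part
    after the last '_' as the page count, keep the rest plus '_' as the prefix.
    """
    stem = href[:-len('.html')]
    parts = stem.split('_')
    return int(parts[-1]), '_'.join(parts[:-1]) + '_'


def category_page_urls(base_url, prefix, last_page):
    """Return every listing URL of one category, first page included."""
    urls = [base_url]  # page 1 is the category URL itself
    for i in range(2, last_page + 1):
        urls.append(base_url + prefix + str(i) + '.html')
    return urls
```

A bare `range(1, num)` stops before the last page. The loop bound here is `last_page + 1`, so the final listing page is included.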

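```python
import scrapy


class MeinrItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    title = scrapy.Field()  # gallery title, also used as the folder name
    img = scrapy.Field()    # URL of the image
    num = scrapy.Field()    # which page of the gallery the image is on
```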

The above is the item file (`meinr/items.py`).

```python
import os
import requests


class MeinrPipeline(object):
    def open_spider(self, spider):
        # Runs when the spider opens: images are saved under <project root>/download
        self.path = os.path.join(os.path.dirname(os.path.dirname(os.path.abspath(__file__))), 'download')

    def process_item(self, item, spider):
        title = item['title']
        img = item['img']
        num = item['num']
        path = os.path.join(self.path, title)  # one folder per gallery, named after its title
        os.makedirs(path, exist_ok=True)  # create the folder if it does not exist yet
        # requests.get blocks Scrapy while the image downloads
        html = requests.get(url=img, headers=spider.headers, timeout=10).content
        path = os.path.join(path, str(num) + '.jpg')  # file name = page number within the gallery
        with open(path, 'wb') as f:
            f.write(html)
        return item
```

The above is the pipeline file.
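The pipeline stores each image as `download/<gallery title>/<page number>.jpg`. Gallery titles come straight from the page, so a title containing `/`, or any character that Windows forbids in file names (`\ / : * ? " < > |`), would either break `os.makedirs` or silently create nested folders. Below is a minimal sketch of a safer path builder. `safe_dirname` and `image_path` are hypothetical helpers, and replacing illegal characters with `_` is only one possible policy.

```python
import os
import re

# Characters not allowed in Windows file names; '/' also splits paths on POSIX.
_ILLEGAL = re.compile(r'[\\/:*?"<>|]')


def safe_dirname(title):
    """Replace characters that are illegal in folder names and trim whitespace."""
    cleaned = _ILLEGAL.sub('_', title).strip()
    return cleaned or 'untitled'  # fall back if nothing usable is left


def image_path(root, title, num):
    """Return <root>/<sanitized title>/<num>.jpg, creating the folder if needed."""
    folder = os.path.join(root, safe_dirname(title))
    os.makedirs(folder, exist_ok=True)
    return os.path.join(folder, '{}.jpg'.format(num))
```

In `process_item`, `path = image_path(self.path, title, num)` would then replace the manual `os.sep` concatenation and the existence check.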

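```python
import datetime
import os

time = datetime.datetime.now().strftime('%Y_%m_%d_%H_%M_%S')  # %d so the day is part of the name
os.makedirs('logs', exist_ok=True)  # the log folder must exist before Scrapy opens the file
LOG_FILE = os.path.join('logs', time + '_meinr.log')
LOG_LEVEL = 'INFO'
```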

This goes in `settings.py`. It configures logging: where the log file is saved and which message level is recorded.
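The log file name is built from a timestamp. The format string `'%Y_%m_%H_%M_%S'` has no day field (`%d`), so two runs on different days of the same month, at the same time of day, would get the same name. Scrapy opens `LOG_FILE` with Python's `logging.FileHandler`, and that handler does not create missing directories, so the `logs` folder has to exist first. Below is a minimal sketch that handles both points. `make_log_file` is a hypothetical helper, not part of Scrapy.

```python
import datetime
import os


def make_log_file(log_dir='logs', name='meinr.log', now=None):
    """Return a timestamped log path such as logs/2018_05_07_14_03_09_meinr.log.

    The folder is created first because logging.FileHandler does not create
    missing parent directories.
    """
    if now is None:
        now = datetime.datetime.now()
    os.makedirs(log_dir, exist_ok=True)
    stamp = now.strftime('%Y_%m_%d_%H_%M_%S')  # includes the day (%d)
    return os.path.join(log_dir, '{}_{}'.format(stamp, name))


# In settings.py this could be used as:
# LOG_FILE = make_log_file()
# LOG_LEVEL = 'INFO'
```

`MeinrPipeline` also only runs if it is registered in `ITEM_PIPELINES` in `settings.py`. An example is `ITEM_PIPELINES = {'meinr.pipelines.MeinrPipeline': 300}`, assuming the pipeline lives in the default `pipelines.py` created by `scrapy startproject`.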

```python
# -*- coding: utf-8 -*-
from scrapy.crawler import CrawlerProcess
from scrapy.utils.project import get_project_settings

from meinr.spiders.meinr1 import Meinr1Spider

process = CrawlerProcess(get_project_settings())  # load the project's settings.py
process.crawl(Meinr1Spider)  # schedule the Meinr1Spider crawler
process.start()  # start crawling; blocks until all crawls have finished
```

This is the script that launches the spider.

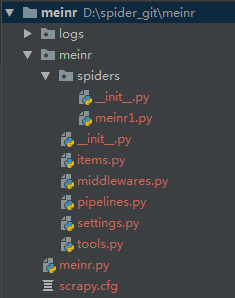

This is the project's file layout.

Git repository: https://github.com/18370652038/meinr.git