Apache Spark 2.0: Faster, Easier, and Smarter

http://blog.madhukaraphatak.com/categories/spark-two/

https://amplab.cs.berkeley.edu/technical-preview-of-apache-spark-2-0-easier-faster-and-smarter/

Dataset - New Abstraction of Spark

For a long time, the RDD was Spark's standard abstraction.

From Spark 2.0 onward, Dataset becomes the new abstraction layer for Spark. The RDD API remains available, but it becomes a low-level API used mostly for runtime and library development. All user-land code will be written against the Dataset abstraction and its subset, the DataFrame API.

The key change in 2.0 is that a high-level Dataset abstraction layer is added on top of the low-level RDD abstraction, which makes development more convenient for users.

From the definition: “A Dataset is a strongly typed collection of domain-specific objects that can be transformed in parallel using functional or relational operations. Each dataset also has an untyped view called a DataFrame, which is a Dataset of Row.”

This sounds similar to the RDD definition:

“RDD represents an immutable, partitioned collection of elements that can be operated on in parallel.”

Dataset is a superset of the DataFrame API, which was released in Spark 1.3.

A DataFrame is a specialized Dataset: DataFrame = Dataset[Row]

SparkSession - New entry point of Spark

In earlier versions of Spark, SparkContext was the entry point. Because the RDD was the main API, it was created and manipulated through the context's APIs. Every other API needed its own context: StreamingContext for streaming, SQLContext for SQL, and HiveContext for Hive. Now that the Dataset and DataFrame APIs are becoming the new standard, Spark needs an entry point built for them. So Spark 2.0 introduces a new entry point for the Dataset and DataFrame APIs, called SparkSession.

Because there is a new abstraction layer, a new entry point is needed: SparkSession,

just as SparkContext is the entry point for RDDs.

Stronger SQL Support

On the SQL side, we have significantly expanded the SQL capabilities of Spark, with the introduction of a new ANSI SQL parser and support for subqueries.

Spark 2.0 can run all 99 TPC-DS queries, which require many of the SQL:2003 features.
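To make "support for subqueries" concrete, here is a small sketch of two common subquery shapes: a scalar subquery and an IN predicate subquery. It runs on Python's built-in sqlite3 module purely as a stand-in SQL engine, not on Spark. The `sales` table and its rows are made up for illustration. The SQL text is ordinary ANSI-style SQL, but running it through Spark is not shown here.

```python
import sqlite3

# In-memory SQLite database, used only as a stand-in SQL engine (not Spark).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (store TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("a", 10), ("a", 30), ("b", 5), ("b", 15), ("c", 50)],
)

# Scalar subquery: keep rows whose amount is above the overall average.
above_avg = conn.execute(
    "SELECT store, amount FROM sales "
    "WHERE amount > (SELECT AVG(amount) FROM sales) "
    "ORDER BY amount"
).fetchall()

# IN predicate subquery: stores that have at least one sale of 30 or more.
big_stores = conn.execute(
    "SELECT DISTINCT store FROM sales "
    "WHERE store IN (SELECT store FROM sales WHERE amount >= 30) "
    "ORDER BY store"
).fetchall()

print(above_avg)
print(big_stores)
```

In both queries the inner SELECT is evaluated as part of the outer one, which is the kind of nesting a SQL parser has to support before it can run a benchmark like TPC-DS.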

Tungsten 2.0

Spark 2.0 ships with the second generation Tungsten engine.

This engine builds upon ideas from modern compilers and MPP databases and applies them to data processing.

The main idea is to emit optimized bytecode at runtime that collapses the entire query into a single function, eliminating virtual function calls and leveraging CPU registers for intermediate data. We call this technique “whole-stage code generation.”

Faster: improvements in memory management and in CPU-oriented performance optimization.
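The idea behind whole-stage code generation can be sketched in plain Python as an analogy. Spark itself emits JVM bytecode, and none of the names below (`Scan`, `Filter`, `Project`, `compile_fused`) are Spark APIs; they are hypothetical helpers for illustration. The first half evaluates a query the classic way, as a chain of operator objects that pull rows from each other. The second half generates the source of a single fused loop at runtime and compiles it.

```python
from typing import Callable, Iterable, Iterator


# Iterator-style execution: one operator object per step, each pulling
# rows from its child. Every row crosses several call boundaries.
class Scan:
    def __init__(self, rows: Iterable[int]) -> None:
        self.rows = rows

    def __iter__(self) -> Iterator[int]:
        return iter(self.rows)


class Filter:
    def __init__(self, child: Iterable[int], predicate: Callable[[int], bool]) -> None:
        self.child = child
        self.predicate = predicate

    def __iter__(self) -> Iterator[int]:
        for row in self.child:
            if self.predicate(row):
                yield row


class Project:
    def __init__(self, child: Iterable[int], fn: Callable[[int], int]) -> None:
        self.child = child
        self.fn = fn

    def __iter__(self) -> Iterator[int]:
        for row in self.child:
            yield self.fn(row)


def run_volcano(rows: Iterable[int]) -> int:
    # Sum of (x * 3) over the even values of x.
    plan = Project(Filter(Scan(rows), lambda x: x % 2 == 0), lambda x: x * 3)
    return sum(plan)


# Fused execution: build the source of one function for the whole
# query at runtime, compile it, and run a single tight loop.
def compile_fused(predicate_src: str, projection_src: str) -> Callable[[Iterable[int]], int]:
    source = (
        "def fused(rows):\n"
        "    total = 0\n"
        "    for x in rows:\n"
        f"        if {predicate_src}:\n"
        f"            total += {projection_src}\n"
        "    return total\n"
    )
    namespace: dict = {}
    exec(compile(source, "<generated>", "exec"), namespace)
    return namespace["fused"]


fused = compile_fused("x % 2 == 0", "x * 3")
print(run_volcano(range(10)), fused(range(10)))
```

Both versions compute the same answer. The fused version keeps the running total in a local variable and has no per-row calls between operators, which is the effect the quoted passage describes for the generated bytecode.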

Structured Streaming

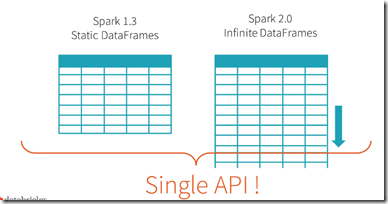

Spark 2.0’s Structured Streaming API is a novel way to approach streaming.

It stems from the realization that the simplest way to compute answers on streams of data is to not have to reason about the fact that it is a stream.

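In other words, you should not have to be aware of the stream at all: a stream is just an unbounded DataFrame.

This is the typical Spark way of thinking: batch is the foundation of everything, which is the exact opposite of Flink.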
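The "stream as an unbounded table" idea can be illustrated with a toy model in plain Python. This is an analogy only, not the Structured Streaming API; `batch_count` and `IncrementalCount` are hypothetical names. The same counting query is written once as a batch query over a finished table, and once as an incremental query that is updated as each micro-batch of rows is appended.

```python
from collections import Counter
from typing import Iterable, List


def batch_count(table: Iterable[str]) -> Counter:
    """Batch query: count occurrences over a complete, bounded table."""
    return Counter(table)


class IncrementalCount:
    """The same query, maintained incrementally as rows are appended."""

    def __init__(self) -> None:
        self.result: Counter = Counter()

    def append(self, new_rows: Iterable[str]) -> Counter:
        # Only the newly arrived rows are processed; earlier rows are
        # already folded into the running result.
        self.result.update(new_rows)
        return Counter(self.result)  # snapshot of the result table so far


micro_batches: List[List[str]] = [["a", "b"], ["a"], ["c", "a"]]
query = IncrementalCount()
seen: List[str] = []          # every row that has arrived so far
snapshots: List[Counter] = []  # query result after each micro-batch

for batch in micro_batches:
    seen.extend(batch)
    snapshots.append(query.append(batch))

print(snapshots[-1] == batch_count(seen))
```

After every micro-batch, the incremental result matches what the batch query would return over all rows seen so far. The user reasons about a table that keeps growing, and the engine takes care of updating the answer.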