1. Download kubespray

    # git clone https://github.com/kubernetes-sigs/kubespray.git
    # cd kubespray
    # pip install -r requirements.txt

2. Generate the inventory file

    # cp -r inventory/sample inventory/testcluster

If Python 3 is installed, run the following to generate the inventory file automatically:

    # declare -a IPS=(10.32.3.80 10.32.3.81 10.32.3.82)
    # CONFIG_FILE=inventory/testcluster/hosts.ini python3 contrib/inventory_builder/inventory.py ${IPS[@]}

Otherwise, edit inventory/testcluster/hosts.ini by hand:

    [all]
    node1 ansible_ssh_host=10.32.3.80 ansible_user=root etcd_member_name=etcd1
    node2 ansible_ssh_host=10.32.3.81 ansible_user=root etcd_member_name=etcd2
    node3 ansible_ssh_host=10.32.3.82 ansible_user=root etcd_member_name=etcd3

    [kube-master]
    node1
    node2
    node3

    [etcd]
    node1
    node2
    node3

    [kube-node]
    node1
    node2
    node3

    [k8s-cluster:children]
    kube-master
    kube-node
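The hand-written layout above can also be produced from a list of IPs. This is a minimal sh/bash sketch (it uses `local`), not how inventory.py works internally; it assumes, like the example, root SSH access, names node1..nodeN / etcd1..etcdN, and that every host gets all three roles. `gen_inventory` is a hypothetical helper name. Since etcd should have an odd number of members and this layout puts every host into the etcd group, it only suits small clusters like this three-node one.

```shell
# Hypothetical helper: print a kubespray hosts.ini in the same layout as the
# hand-written example. Assumption: every IP is master, etcd member and node.
gen_inventory() {
  local i ip group

  echo "[all]"
  i=0
  for ip in "$@"; do
    i=$((i + 1))
    echo "node$i ansible_ssh_host=$ip ansible_user=root etcd_member_name=etcd$i"
  done

  # Each role group lists the same node names node1..nodeN.
  for group in kube-master etcd kube-node; do
    echo
    echo "[$group]"
    i=1
    while [ "$i" -le "$#" ]; do
      echo "node$i"
      i=$((i + 1))
    done
  done

  echo
  echo "[k8s-cluster:children]"
  echo "kube-master"
  echo "kube-node"
}
```

Usage: `gen_inventory 10.32.3.80 10.32.3.81 10.32.3.82 > inventory/testcluster/hosts.ini`.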

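3. Download the images and push them to the local registry

My approach was to run the download tag first:

    # ansible-playbook -i inventory/testcluster/hosts.ini cluster.yml -t download -b -v -k

The run stops with an error at each image it cannot download. I then pulled the official image by hand on a machine with a Docker proxy configured, re-tagged it, and pushed it to the local registry.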

The following Docker images were pushed to the local registry:

    gcr.io/google_containers/cluster-proportional-autoscaler-amd64:1.3.0
    gcr.io/google_containers/pause-amd64:3.1
    lachlanevenson/k8s-helm:v2.11.0
    quay.io/coreos/etcd:v3.2.24
    quay.io/calico/ctl:v3.1.3
    quay.io/calico/node:v3.1.3
    quay.io/calico/cni:v3.1.3
    quay.io/calico/kube-controllers:v3.1.3
    quay.io/calico/routereflector:v0.6.1
    gcr.io/kubernetes-helm/tiller:v2.11.0
    nginx:1.13
    gcr.io/google_containers/kubernetes-dashboard-amd64:v1.10.0
    quay.io/jetstack/cert-manager-controller:v0.5.2
    quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.21.0
    quay.io/external_storage/local-volume-provisioner:v2.1.0
    quay.io/external_storage/cephfs-provisioner:v2.1.0-k8s1.11
    k8s.gcr.io/addon-resizer:1.8.3
    k8s.gcr.io/metrics-server-amd64:v0.3.1
    coredns/coredns:1.2.6
    gcr.io/google-containers/kube-apiserver:v1.13.0
    gcr.io/google-containers/kube-controller-manager:v1.13.0
    gcr.io/google-containers/kube-scheduler:v1.13.0
    gcr.io/google-containers/kube-proxy:v1.13.0
    gcr.io/google-containers/pause:3.1
    gcr.io/google-containers/coredns:1.2.6
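The pull / tag / push round trip above can be scripted. This is a sketch, not the procedure I actually ran: it assumes the machine can reach the upstream registries (e.g. through the Docker proxy), is already logged in to the local registry, and that target names follow the mapping used in step 4 (gcr.io/google_containers, gcr.io/google-containers and k8s.gcr.io go under google_containers/, other gcr.io and quay.io images keep their path, Docker Hub user images keep their path, and official images such as nginx go under common/). `LOCAL_REGISTRY`, `DRY_RUN` and all function names are hypothetical.

```shell
# Hypothetical helpers for mirroring upstream images into a local registry.
LOCAL_REGISTRY=${LOCAL_REGISTRY:-registry.example.com}

# Print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Map an upstream image reference to its name in the local registry.
mirror_name() {
  local src="$1"
  case "$src" in
    gcr.io/google_containers/*|gcr.io/google-containers/*)
      echo "$LOCAL_REGISTRY/google_containers/${src#gcr.io/*/}" ;;
    k8s.gcr.io/*)
      echo "$LOCAL_REGISTRY/google_containers/${src#k8s.gcr.io/}" ;;
    gcr.io/*|quay.io/*)
      echo "$LOCAL_REGISTRY/${src#*/}" ;;    # drop only the registry host
    */*)
      echo "$LOCAL_REGISTRY/$src" ;;         # Docker Hub user/repo image
    *)
      echo "$LOCAL_REGISTRY/common/$src" ;;  # Docker Hub official image
  esac
}

# Pull, re-tag and push a single image; stop at the first failing step.
mirror_image() {
  local src="$1" dst
  dst=$(mirror_name "$src")
  run docker pull "$src" &&
    run docker tag "$src" "$dst" &&
    run docker push "$dst"
}

# Mirror every image reference read from stdin (one per line).
mirror_all() {
  local img
  while read -r img; do
    [ -z "$img" ] && continue
    mirror_image "$img" || echo "failed: $img" >&2
  done
}
```

With the list above saved one image per line in a file (say images.txt, a hypothetical name), `DRY_RUN=1 mirror_all < images.txt` previews the docker commands, and `mirror_all < images.txt` runs them.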

The following files were uploaded to the local HTTP server:

    https://storage.googleapis.com/kubernetes-release/release/v1.13.0/bin/linux/amd64/kubeadm
    https://storage.googleapis.com/kubernetes-release/release/v1.13.0/bin/linux/amd64/hyperkube
    https://github.com/coreos/etcd/releases/download/v3.2.24/etcd-v3.2.24-linux-amd64.tar.gz
    https://github.com/containernetworking/plugins/releases/download/v0.6.0/cni-plugins-amd64-v0.6.0.tgz
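The local URLs used in step 4 keep the upstream path and only swap the scheme and host for http://10.204.250.110, so each file has to sit under the same relative path in the web server's document root. A minimal sketch under that assumption; `DOCROOT` (defaulting to /var/www/html here), `DRY_RUN` and the function names are hypothetical, and it assumes `curl` can reach the upstream hosts.

```shell
DOCROOT=${DOCROOT:-/var/www/html}

# Print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Strip "scheme://host/" so the upstream path can be reused locally.
local_path() {
  echo "${1#*://*/}"
}

# Download one URL to $DOCROOT/<upstream path>.
fetch_to_docroot() {
  local url="$1" path
  path=$(local_path "$url")
  run mkdir -p "$DOCROOT/$(dirname "$path")" &&
    run curl -fL -o "$DOCROOT/$path" "$url"
}
```

Calling `fetch_to_docroot` once per URL in the list above places, for example, kubeadm at `$DOCROOT/kubernetes-release/release/v1.13.0/bin/linux/amd64/kubeadm`.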

4. Replace the download addresses

The image and file download addresses are all configured in roles/download/defaults/main.yml. I changed them as follows:

| Original | Replacement |
| --- | --- |
| `kube_image_repo: "gcr.io/google-containers"` | `kube_image_repo: "registry.example.com/google_containers"` |
| `gcr.io/google_containers/cluster-proportional-autoscaler-{{ image_arch }}` | `registry.example.com/google_containers/cluster-proportional-autoscaler-{{ image_arch }}` |
| `gcr.io/google_containers/pause-{{ image_arch }}` | `registry.example.com/google_containers/pause-{{ image_arch }}` |
| `lachlanevenson/k8s-helm` | `registry.example.com/lachlanevenson/k8s-helm` |
| `quay.io/coreos/etcd` | `registry.example.com/coreos/etcd` |
| `quay.io/calico/ctl` | `registry.example.com/calico/ctl` |
| `quay.io/calico/node` | `registry.example.com/calico/node` |
| `quay.io/calico/cni` | `registry.example.com/calico/cni` |
| `quay.io/calico/kube-controllers` | `registry.example.com/calico/kube-controllers` |
| `quay.io/calico/routereflector` | `registry.example.com/calico/routereflector` |
| `gcr.io/kubernetes-helm/tiller` | `registry.example.com/kubernetes-helm/tiller` |
| `nginx_image_repo: nginx` | `nginx_image_repo: registry.example.com/common/nginx` |
| `gcr.io/google_containers/kubernetes-dashboard-{{ image_arch }}` | `registry.example.com/google_containers/kubernetes-dashboard-{{ image_arch }}` |
| `quay.io/jetstack/cert-manager-controller` | `registry.example.com/jetstack/cert-manager-controller` |
| `quay.io/kubernetes-ingress-controller/nginx-ingress-controller` | `registry.example.com/kubernetes-ingress-controller/nginx-ingress-controller` |
| `quay.io/external_storage/local-volume-provisioner` | `registry.example.com/external_storage/local-volume-provisioner` |
| `quay.io/external_storage/cephfs-provisioner` | `registry.example.com/external_storage/cephfs-provisioner` |
| `k8s.gcr.io/addon-resizer` | `registry.example.com/google_containers/addon-resizer` |
| `k8s.gcr.io/metrics-server-amd64` | `registry.example.com/google_containers/metrics-server-amd64` |
| `coredns/coredns` | `registry.example.com/coredns/coredns` |
| `https://storage.googleapis.com/kubernetes-release/release/{{ kubeadm_version }}/bin/linux/{{ image_arch }}/kubeadm` | `http://10.204.250.110/kubernetes-release/release/{{ kubeadm_version }}/bin/linux/{{ image_arch }}/kubeadm` |
| `https://storage.googleapis.com/kubernetes-release/release/{{ kube_version }}/bin/linux/amd64/hyperkube` | `http://10.204.250.110/kubernetes-release/release/{{ kube_version }}/bin/linux/amd64/hyperkube` |
| `https://github.com/coreos/etcd/releases/download/{{ etcd_version }}/etcd-{{ etcd_version }}-linux-amd64.tar.gz` | `http://10.204.250.110/coreos/etcd/releases/download/{{ etcd_version }}/etcd-{{ etcd_version }}-linux-amd64.tar.gz` |
| `https://github.com/containernetworking/plugins/releases/download/{{ cni_version }}/cni-plugins-{{ image_arch }}-{{ cni_version }}.tgz` | `http://10.204.250.110/containernetworking/plugins/releases/download/{{ cni_version }}/cni-plugins-{{ image_arch }}-{{ cni_version }}.tgz` |
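Most of these edits follow a few prefix rules, so they can be applied with sed instead of by hand. A sketch under stated assumptions: the rules only cover the gcr.io, k8s.gcr.io, quay.io, storage.googleapis.com and github.com entries; the Docker Hub images (nginx, lachlanevenson/k8s-helm, coredns/coredns) still have to be edited manually; and every github.com and storage.googleapis.com URL in the file is redirected, so anything not mirrored to the local server will break. `rewrite_defaults`, `LOCAL_REGISTRY` and `LOCAL_HTTP` are hypothetical names.

```shell
LOCAL_REGISTRY=${LOCAL_REGISTRY:-registry.example.com}
LOCAL_HTTP=${LOCAL_HTTP:-http://10.204.250.110}

# Rewrite upstream registries and download hosts. sed applies the rules in
# order, so k8s.gcr.io is handled before the generic gcr.io rule. Writing via
# a temp file avoids the GNU/BSD difference in `sed -i`.
rewrite_defaults() {
  local file="$1"
  sed \
    -e "s#k8s\.gcr\.io/#$LOCAL_REGISTRY/google_containers/#g" \
    -e "s#gcr\.io/google[-_]containers#$LOCAL_REGISTRY/google_containers#g" \
    -e "s#gcr\.io/#$LOCAL_REGISTRY/#g" \
    -e "s#quay\.io/#$LOCAL_REGISTRY/#g" \
    -e "s#https://storage\.googleapis\.com/#$LOCAL_HTTP/#g" \
    -e "s#https://github\.com/#$LOCAL_HTTP/#g" \
    "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}
```

Running `rewrite_defaults roles/download/defaults/main.yml` followed by `git diff roles/download/defaults/main.yml` shows exactly what changed before the Docker Hub entries are fixed by hand.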

5. Configure the deployment parameters

Set kube_image_repo, kube_service_addresses and kube_pods_subnet in inventory/testcluster/group_vars/k8s-cluster/k8s-cluster.yml to match your environment, for example:

    kube_service_addresses: 10.200.0.0/16
    kube_pods_subnet: 172.27.0.0/16
    kube_image_repo: "registry.example.com/google_containers"
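Both ranges must be unused elsewhere in the network; in particular they must not overlap each other or the node network (assumed here to be 10.32.3.0/24 for the hosts above). A small IPv4-only sketch of that check, relying on the shell's 64-bit integer arithmetic; the function names are hypothetical.

```shell
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
  local old_ifs
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print the first and last address (as integers) of an IPv4 CIDR block.
cidr_range() {
  local base size first
  base=$(ip_to_int "${1%/*}")
  size=$(( 1 << (32 - ${1#*/}) ))
  first=$(( base - base % size ))
  echo "$first $(( first + size - 1 ))"
}

# Succeed (exit status 0) if the two CIDR blocks share any address.
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}
```

For the values above, `cidrs_overlap 10.200.0.0/16 172.27.0.0/16`, `cidrs_overlap 10.200.0.0/16 10.32.3.0/24` and `cidrs_overlap 172.27.0.0/16 10.32.3.0/24` should all fail (no overlap).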

Change the Helm stable repo in roles/kubernetes-apps/helm/defaults/main.yml:

    helm_stable_repo_url: "https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts"

6. Enable addons

Edit inventory/testcluster/group_vars/k8s-cluster/addons.yml, set the addons you need to true and configure their parameters. Here I enabled dashboard, helm, metrics_server, local_volume_provisioner, cephfs_provisioner, ingress_nginx and cert_manager:

    dashboard_enabled: true
    helm_enabled: true
    registry_enabled: false
    metrics_server_enabled: true
    local_volume_provisioner_enabled: true
    cephfs_provisioner_enabled: true
    cephfs_provisioner_namespace: "cephfs-provisioner"
    cephfs_provisioner_cluster: ceph
    cephfs_provisioner_monitors: "10.32.3.70:6789,10.32.3.71:6789,10.32.3.72:6789"
    cephfs_provisioner_admin_id: k8s
    cephfs_provisioner_secret: AQBCk+tbHeLjORAAHiUMFIeu8f76JWBWlCWfbg==
    cephfs_provisioner_storage_class: cephfs
    cephfs_provisioner_reclaim_policy: Delete
    cephfs_provisioner_claim_root: /k8s_volumes
    cephfs_provisioner_deterministic_names: true
    ingress_nginx_enabled: true
    cert_manager_enabled: true
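cephfs_provisioner_secret is presumably the Ceph key of the user named in cephfs_provisioner_admin_id (client.k8s here). Assuming that user already exists with suitable caps and the `ceph` CLI on the machine can reach the cluster, its key is printed by `ceph auth get-key`; the hypothetical wrapper below only turns it into the two addons.yml lines. Quoting the value is my own precaution, not something the config above does.

```shell
# Print the addons.yml lines for a Ceph user's id and key.
# Assumption: client.<id> exists and `ceph` is configured on this machine.
cephfs_secret_lines() {
  local id="$1" key
  key=$(ceph auth get-key "client.$id") || return 1
  printf 'cephfs_provisioner_admin_id: %s\n' "$id"
  printf 'cephfs_provisioner_secret: "%s"\n' "$key"
}
```

Usage: `cephfs_secret_lines k8s`, then paste the output into addons.yml.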

7. Deploy the cluster

With the preparation above done, deploy the cluster:

    # ansible all -i inventory/testcluster/hosts.ini -m command -a 'swapoff -a' -u root -k   # disable swap on all nodes
    # ansible-playbook -i inventory/testcluster/hosts.ini cluster.yml -b -v -k
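`swapoff -a` only lasts until the next reboot, and by default kubelet refuses to start while swap is on, so the swap entry in /etc/fstab should be disabled as well. A minimal sketch that comments out every active fstab line whose filesystem type (field 3) is swap and keeps a backup as fstab.bak; `disable_swap_in_fstab` is a hypothetical name.

```shell
# Comment out every non-comment fstab entry whose type (3rd field) is "swap".
# The original is kept as <fstab>.bak; writing back with ">" keeps the
# original file's ownership and permissions.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  cp -p "$fstab" "$fstab.bak" &&
    awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
      "$fstab.bak" > "$fstab"
}
```

Run it as root on each node; after a reboot, `cat /proc/swaps` should list no swap devices.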

8. Add nodes

To add new nodes later, run:

    # ansible-playbook -i inventory/testcluster/hosts.ini scale.yml -b -v -u root -k
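scale.yml works from the inventory, so a new worker has to be listed there first, at least under [all] and [kube-node] (workers do not need an etcd_member_name). A sketch of that edit with awk, assuming the section headers are spelled exactly as in the hosts.ini above; `add_worker`, the node name node4 and the IP 10.32.3.83 are hypothetical.

```shell
# Insert a worker right after the [all] and [kube-node] headers of hosts.ini.
# The original file is kept as <file>.bak.
add_worker() {
  local file="$1" name="$2" ip="$3"
  cp -p "$file" "$file.bak" &&
    awk -v name="$name" -v ip="$ip" '
      { print }
      $0 == "[all]"       { print name " ansible_ssh_host=" ip " ansible_user=root" }
      $0 == "[kube-node]" { print name }
    ' "$file.bak" > "$file"
}
```

Usage: `add_worker inventory/testcluster/hosts.ini node4 10.32.3.83`, then run scale.yml as above.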

9. Remove nodes

If no node is specified, the whole cluster is removed:

    # ansible-playbook -i inventory/testcluster/hosts.ini remove-node.yml -b -v

10. Verify the cluster

Check the node status:

    # kubectl get nodes
    NAME    STATUS   ROLES         AGE     VERSION
    node1   Ready    master,node   8m41s   v1.13.0
    node2   Ready    master,node   7m57s   v1.13.0
    node3   Ready    master,node   7m58s   v1.13.0
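For a quick scripted check, the STATUS column can be filtered. This sketch parses the human-readable table (fine for an eyeball-level check, but not a stable interface); `not_ready_nodes` is a hypothetical name.

```shell
# Read `kubectl get nodes` output on stdin and print every node whose STATUS
# is anything other than plain "Ready" (e.g. NotReady, Ready,SchedulingDisabled).
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

`kubectl get nodes | not_ready_nodes` prints nothing when every node is Ready.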

Change the type of the kubernetes-dashboard service to NodePort; the dashboard can then be reached through any node:

    # kubectl edit service -n kube-system kubernetes-dashboard
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
        kubernetes.io/cluster-service: "true"
      name: kubernetes-dashboard
      namespace: kube-system
    spec:
      clusterIP: 10.200.108.205
      externalTrafficPolicy: Cluster
      ports:
      - nodePort: 30443
        port: 443
        protocol: TCP
        targetPort: 8443
      selector:
        k8s-app: kubernetes-dashboard
      sessionAffinity: None
      type: NodePort
    status:
      loadBalancer: {}

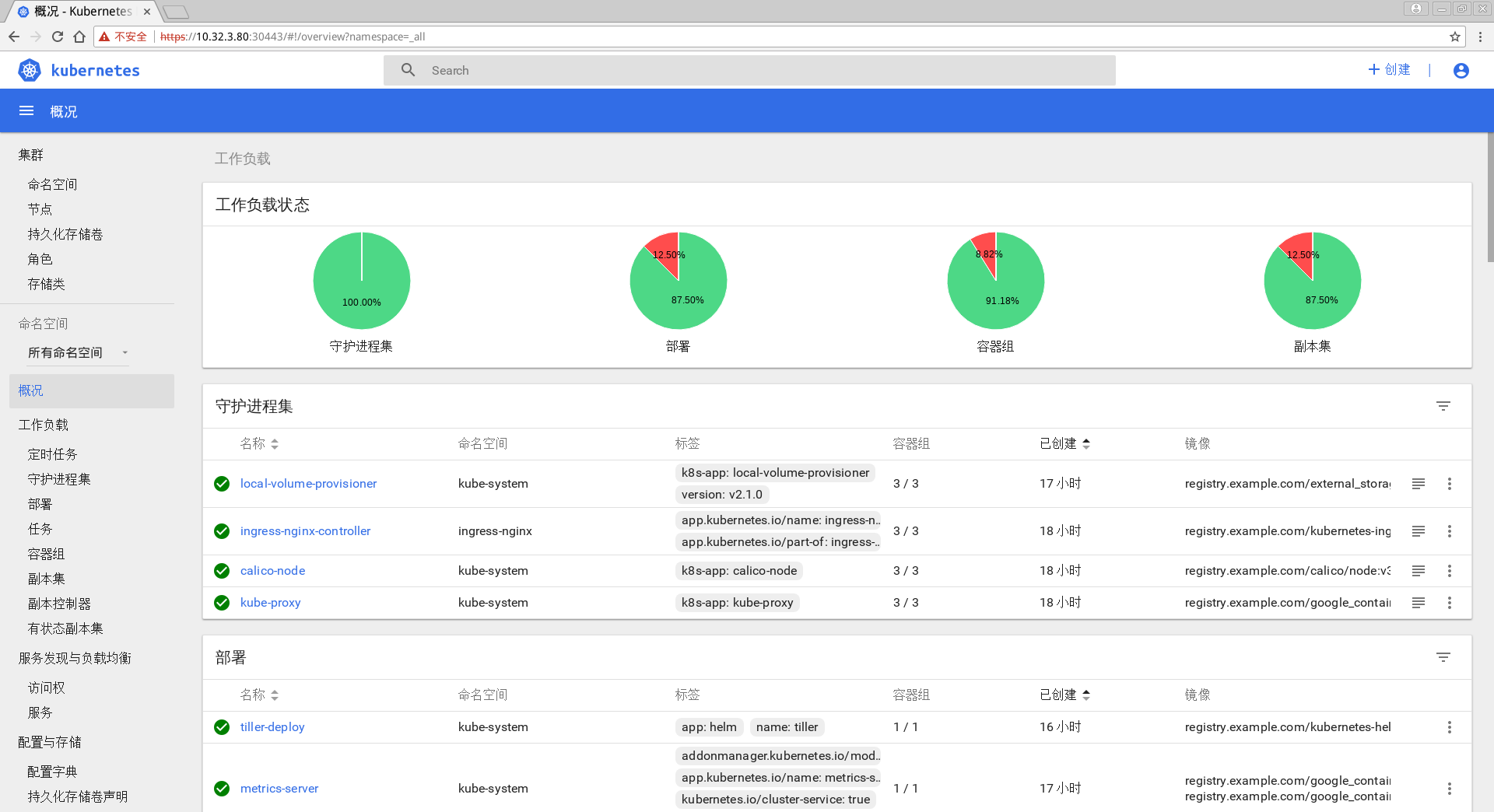

The dashboard is then available at `https://<any node IP>:30443`.
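`kubectl edit` is interactive; the same type change can be made with `kubectl patch`. Unless a nodePort is set explicitly, Kubernetes picks one from the NodePort range (30000-32767 by default), so it may differ from the 30443 shown above. A sketch with hypothetical function names that applies the patch and prints the URL for a given node.

```shell
# Switch the dashboard Service to NodePort without opening an editor.
expose_dashboard() {
  kubectl -n kube-system patch service kubernetes-dashboard \
    -p '{"spec":{"type":"NodePort"}}'
}

# Print the dashboard URL for a given node IP, using the assigned nodePort.
dashboard_url() {
  local port
  port=$(kubectl -n kube-system get service kubernetes-dashboard \
    -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  echo "https://$1:$port/"
}
```

Usage: `expose_dashboard && dashboard_url 10.32.3.80`.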

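11. Follow-up notes

Version 1.13.0 no longer starts the master components through hyperkube; kube-apiserver, kube-scheduler and kube-controller-manager are each packaged as a separate image. (I am not sure this started in 1.13.0; the previous version I used, 1.10.4, still ran them through hyperkube.)

After deployment I found that the kube-controller-manager image does not include the Ceph packages, so Ceph RBD StorageClasses do not work. (The CephFS StorageClass works, because it is handled by the separate cephfs-provisioner.) I therefore had to rebuild kube-controller-manager with the rbd packages installed.

I will add other pitfalls here as I run into them.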

References