urllib库作为基本库,requests库也是在urllib库基础上发展的

但是urllib在使用上不如requests便利,比如上篇文章在写urllib库的时候,比如代理设置,处理cookie时,没有写,因为感觉比较繁琐,另外在发送post请求的时候,也是比较繁琐。

一言而代之,requests库是python实现的简单易用的HTTP库

在以后做爬虫的时候,建议用requests,不用urllib

用法讲解:

#!/usr/bin/env python

# -*- coding:utf-8 -*-

import requests

response = requests.get('http://www.baidu.com')

print(type(response))

print(response.status_code)

print(type(response.text))

print(response.text)

print(response.cookies)

输出结果为:

<class 'requests.models.Response'>

200

<class 'str'>

<!DOCTYPE html>

<!--STATUS OK--><html>省略了 </html>

<RequestsCookieJar[<Cookie BDORZ=27315 for .baidu.com/>]>

由上面的小程序中可以看出,response.text直接就拿到str类型的数据,而在urllib中的urllib.request.read()得到的是bytes类型的数据,还需要decode,比较繁琐,同样的response.cookies直接就将cookies拿到了,而不像在urllib中那样繁琐

各种请求方式

import requests

requests.post('http://www.baidu.com')

requests.put('http://www.baidu.com')

requests.delete('http://www.baidu.com')

requests.head('http://www.baidu.com')

requests.options('http://www.baidu.com')

基本get请求:

利用http://httpbin.org/get进行get请求测试:

import requests

response = requests.get('http://httpbin.org/get')

print(response.text)

输出结果:

{"args":{},"headers":{"Accept":"*/*","Accept-Encoding":"gzip, deflate","Connection":"close","Host":"httpbin.org","User-Agent":"python-requests/2.18.4"},"origin":"113.71.243.133","url":"http://httpbin.org/get"}

带参数的get请求:

import requests

data = {'name':'geme','age':'22'}

response = requests.get('http://httpbin.org/get',params=data)

print(response.text)

输出结果为:

{"args":{"age":"22","name":"geme"},"headers":{"Accept":"*/*","Accept-Encoding":"gzip, deflate","Connection":"close","Host":"httpbin.org","User-Agent":"python-requests/2.18.4"},"origin":"113.71.243.133","url":"http://httpbin.org/get?age=22&name=geme"}

解析json

response.text返回的其实是json形式的字符串,可以通过response.json()直接进行解析,解析结果与json模块loads方法得到的结果是完全一样的

import requests

import json

response = requests.get('http://httpbin.org/get')

print(type(response.text))

print(response.json())

print(json.loads(response.text))

print(type(response.json()))

输出结果为:

<class 'str'>

{'headers': {'Connection': 'close', 'User-Agent': 'python-requests/2.18.4', 'Accept': '*/*', 'Accept-Encoding': 'gzip, deflate', 'Host': 'httpbin.org'}, 'url': 'http://httpbin.org/get', 'args': {}, 'origin': '113.71.243.133'}

{'headers': {'Connection': 'close', 'User-Agent': 'python-requests/2.18.4', 'Accept': '*/*', 'Accept-Encoding': 'gzip, deflate', 'Host': 'httpbin.org'}, 'url': 'http://httpbin.org/get', 'args': {}, 'origin': '113.71.243.133'}

<class 'dict'>

该方法在分析ajax请求的时候比较常用

获取二进制数据

二进制数据是在下载图片或者视频的时候常用的一个方法

import requests

response = requests.get('https://github.com/favicon.ico')

print(type(response.text),type(response.content))

print(response.text)

print(response.content)

#用response.content可以获取二进制内容

文件的保存在爬虫原理一文中讲到,就不再赘述

添加headers

import requests

response = requests.get('https://www.zhihu.com/explore')

print(response.text)

输出结果为:

<html>

<head><title>400 Bad Request</title></head>

<body bgcolor="white">

<center><h1>400 Bad Request</h1></center>

<hr><center>openresty</center>

</body>

</html>

在请求这个url的时候,没有加headers,报了一个400的状态码,下面加上headers试一下:

import requests

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.181 Safari/537.36'}

response = requests.get('https://www.zhihu.com/explore',headers = headers)

print(response.text)

加上这个headers之后,可以正常运行请求了

基本post请求

import requests

data = {'name':'haha','age':'12'}

response = requests.post('http://httpbin.org/post',data = data)

print(response.text)

输出结果为:

{"args":{},"data":"","files":{},"form":{"age":"12","name":"haha"},"headers":{"Accept":"*/*","Accept-Encoding":"gzip, deflate","Connection":"close","Content-Length":"16","Content-Type":"application/x-www-form-urlencoded","Host":"httpbin.org","User-Agent":"python-requests/2.18.4"},"json":null,"origin":"113.71.243.133","url":"http://httpbin.org/post"}

不像在urllib中那样,需要转码等操作,方便了很多,还能在post中继续添加headers

响应

response一些常用的属性:

response.status_code

response.headers

response.cookies

response.url

response.history

状态码的判断:

不同的状态码对应

import requests

response = requests.get('http://httpbin.org/get.html')

exit() if not response.status_code ==requests.codes.not_found else print('404 not found')

#或者这句替换为

exit() if not response.status_code ==200 else print('404 not found')

#因为不同的状态对应着不同的数字

输出结果为:

404 not found

requests的一些高级操作

文件上传;

import requests

url = 'http://httpbin.org/post'

file = {'file':open('tt.jpeg','rb')}

response = requests.post(url,files = file)

print(response.text)

获取cookie

import requests

response = requests.get('https://www.baidu.com')

print(response.cookies)

print(type(response.cookies))

Cookie 的返回对象为 RequestsCookieJar,它的行为和字典类似,将其key,value打印出来

import requests

response = requests.get('https://www.baidu.com')

print(response.cookies)

# print(type(response.cookies))

for key,value in response.cookies.items():

print(key + '=' + value)

输出结果为:

<RequestsCookieJar[<Cookie BDORZ=27315 for .baidu.com/>]>

BDORZ=27315

会话维持:

import requests

requests.get('http://httpbin.org/cookies/set/number/12345678')#行1

response = requests.get('http://httpbin.org/cookies')#行2

print(response.text)

输出结果:{"cookies":{}}

在http://httpbin.org/cookies中有一个set可以设置cookie

通过get拿到这个cookie

但是输出结果为空,原因是行1 和 行2 的两次get在两个浏览器中进行

import requests

s = requests.Session()

s.get('http://httpbin.org/cookies/set/number/12345678')

response = s.get('http://httpbin.org/cookies')

print(response.text)

输出结果为:{"cookies":{"number":"12345678"}}

证书验证:

import requests

response = requests.get('https://www.12306.cn')

print(response.status_code)

输出结果为:

......

requests.exceptions.SSLError: HTTPSConnectionPool(host='www.12306.cn', port=443): Max retries exceeded with url: / (Caused by SSLError(CertificateError("hostname 'www.12306.cn' doesn't match either of 'webssl.chinanetcenter.com', 'i.l.inmobicdn.net', '*.fn-mart.com', 'www.1zhe.com', 'appcdn.liwusj.com', 'static.liwusj.com', 'download.liwusj.com', 'ptlogin.liwusj.com', 'app.liwusj.com', '*.pinganfang.com', '*.anhouse.com', 'dl.jphbpk.gxpan.cn', 'dl.givingtales.gxpan.cn', 'dl.toyblast.gxpan.cn', 'dl.sds.gxpan.cn', 'yxhhd.5054399.com', 'download.ctrip.com', 'mh.tiancity.com', 'yxhhd2.5054399.com', 'app.4399.cn', 'i.4399.cn', 'm.4399.cn', 'a.4399.cn', 'newsimg.5054399.com', 'cdn.hxjyios.iwan4399.com', 'ios.hxjy.iwan4399.com', 'gjzx.gjzq.com.cn', 'f.3000test.com', 'tj.img4399.com', 'vedio.5054399.com', '*.zhe800.com', '*.qiyipic.com', '*.vxinyou.com', '*.gdjh.vxinyou.com', '*.3000.com', 'pay.game2.cn', 'static1.j.cn', 'static2.j.cn', 'static3.j.cn', 'static4.j.cn', 'video1.j.cn', 'video2.j.cn', 'video3.j.cn', 'online.j.cn', 'playback.live.j.cn', 'audio1.guang.j.cn', 'audio2.guang.j.cn', 'audio3.guang.j.cn', 'img1.guang.j.cn', 'img2.guang.j.cn', 'img3.guang.j.cn', 'img4.guang.j.cn', 'img5.guang.j.cn', 'img6.guang.j.cn', '*.4399youpai.com', 'v.3304399.net', 'w.tancdn.com', '*.3000api.com', 'static11.j.cn', '*.kuyinyun.com', '*.kuyin123.com', '*.diyring.cc', '3000test.com', '*.3000test.com', 'hdimg.5054399.com', 'www.3387.com', 'bbs.4399.cn', '*.cankaoxiaoxi.com', '*.service.kugou.com', 'test.macauslot.com', 'testm.macauslot.com', 'testtran.macauslot.com', 'xiuxiu.huodong.meitu.com', '*.meitu.com', '*.meitudata.com', '*.wheetalk.com', '*.shanliaoapp.com', 'xiuxiu.web.meitu.com', 'api.account.meitu.com', 'open.web.meitu.com', 'id.api.meitu.com', 'api.makeup.meitu.com', 'im.live.meipai.com', '*.meipai.com', 'm.macauslot.com', 'www.macauslot.com', 'web.macauslot.com', 'translation.macauslot.com', 'img1.homekoocdn.com', 'cdn.homekoocdn.com', 'cdn1.homekoocdn.com', 'cdn2.homekoocdn.com', 'cdn3.homekoocdn.com', 'cdn4.homekoocdn.com', 'img.homekoocdn.com', 'img2.homekoocdn.com', 'img3.homekoocdn.com', 'img4.homekoocdn.com', '*.macauslot.com', '*.samsungapps.com', 'auto.tancdn.com', '*.winbo.top', 'static.bst.meitu.com', 'api.xiuxiu.meitu.com', 'api.photo.meituyun.com', 'h5.selfiecity.meitu.com', 'api.selfiecity.meitu.com', 'h5.beautymaster.meiyan.com', 'api.beautymaster.meiyan.com', 'www.yawenb.com', 'm.yawenb.com', 'www.biqugg.com', 'www.dawenxue.net', 'cpg.meitubase.com', 'www.qushuba.com', 'www.ranwena.com', 'www.u8xsw.com', '*.4399sy.com', 'ms.qaqact.cn', 'ms.awqsaged.cn', 'fanxing2.kugou.com', 'fanxing.kugou.com', 'sso.56.com', 'upload.qf.56.com', 'sso.qianfan.tv', 'cdn.danmu.56.com', 'www-ppd.hermes.cn', 'www-uat.hermes.cn', 'www-ts2.hermes.cn', 'www-tst.hermes.cn', '*.syyx.com', 'img.wgeqr.cn', 'img.wgewa.cn', 'img.09mk.cn', 'img.85nh.cn', '*.zhuoquapp.com', 'img.dtmpekda8.cn', 'img.etmpekda6.cn'",),))

import requests

response = requests.get('https://www.12306.cn',verify = False)

print(response.status_code)

输出结果为:

D:python-3.5.4.amd64python.exe E:/PythonProject/Test1/爬虫/requests模块.py

D:python-3.5.4.amd64libsite-packagesurllib3connectionpool.py:858: InsecureRequestWarning: Unverified HTTPS request is being made. Adding certificate verification is strongly advised. See: https://urllib3.readthedocs.io/en/latest/advanced-usage.html#ssl-warnings

InsecureRequestWarning)

200

.。。。

代理设置

代理类型为http或者https时

import requests

proxies = {

'http':'http://127.0.0.1:9743',

'https':'https://127.0.0.1:9743'

}

#声明一个字典,指定代理,请求时把代理传过去就可以了,比urllib方便了很多

#如果代理有密码,在声明的时候,比如:

#proxies = {

# 'http':'http://user:password@127.0.0.1:9743',

#}

#按照如上修改就可以了

response = requests.get('https://www.taobao.com',proxies = proxies)

print(response.status_code)

代理类型为socks时:pip3 install request[socks]

proxies = {

'http':'socks5://127.0.0.1:9743',

'https':'socks5://127.0.0.1:9743'

}

response = requests.get('https://www.taobao.com',proxies = proxies)

print(response.status_code)

超时设置:

response = requests.get('http://www.baidu.com',timeout = 1)

认证设置

有的密码需要登陆认证,这时可以利用auth这个参数:

import requests

from requests.auth import HTTPBasicAuth

r = requests.get('http://120.27.34.24:900',auth = HTTPBasicAuth('user','password'))

#r = requests.get('http://120.27.34.24:900',auth=('user','password'))这样写也可以

print(r.status_code)

异常处理:

import requests

from requests.exceptions import ReadTimeout,HTTPError,RequestException

try:

response = requests.get('http://www.baidu.com',timeout = 1)

print(response.status_code)

except ReadTimeout:

print('timeout')

except HTTPError:

print('httrerror')

except RequestException:

print('error')

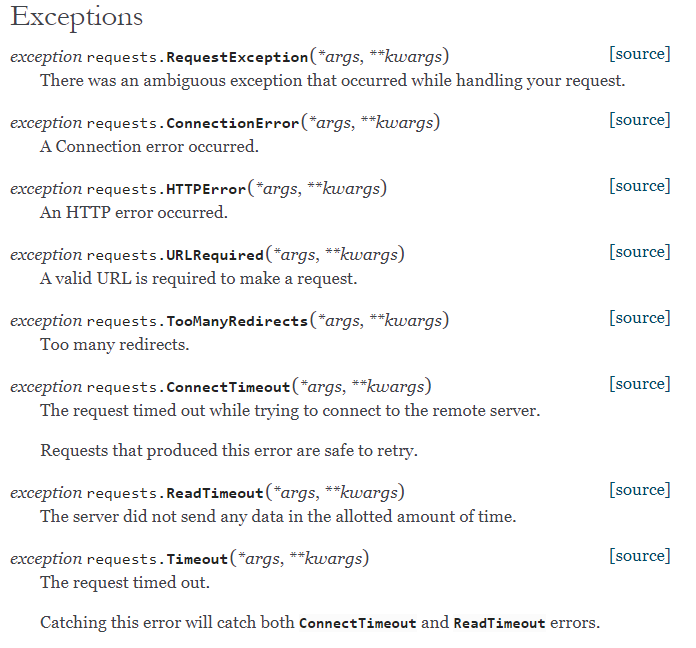

例程只引入了三个异常,官方文档里还有别的异常,见上图,也可以引入,跟例程中的三个异常操作相似

例程只引入了三个异常,官方文档里还有别的异常,见上图,也可以引入,跟例程中的三个异常操作相似